How a programmer wrote baselines for data scientists

Few people believe that a modern data science stack can be built in anything other than Python, but there are such precedents :). The Odnoklassniki stack took shape over many years, primarily through programmers who moved into data science but stayed close to the product, so it is based on open JVM technologies: Hadoop, Spark, Kafka, Cassandra, etc. This helps us reduce the time and cost of putting models into production, but sometimes creates difficulties. For example, when preparing baseline solutions for the participants of SNA Hackathon 2019, I had to gather my will into a fist and plunge into the world of dynamic typing. Details (and some light trolling) below :)

Installation

Some version of Python can be found on almost any developer machine. Mine had it too, in duplicate: 2.7 and 3.4. Rummaging through my memory, I recalled installing 3.4 three years ago, after participants of SNA Hackathon 2016 ran into epic problems trying to decompose a 1.5 GB graph in memory (this resulted in a short instructional video and a separate competition). But by now that setup was morally obsolete and needed updating.

In the Java world, every project declares at build time everything it wants to pull in, and then lives with that. In theory, everything is simple and beautiful, but in practice, when you need library A and library B, you will surely find that both of them need library C, in two different incompatible versions :). In vain attempts to break this vicious circle, some libraries pack all their dependencies inside themselves and hide them from everyone else, while the rest get by as best they can.

What about Python? There is no project as such, but there is an “environment”, and within each environment an independent ecosystem of packages of specific versions can be formed. There are also tools for the lazy, with which managing a local Python environment is no harder than managing a distributed heterogeneous Cloudera or Hortonworks cluster. But mutual package version conflicts do not go away. I immediately ran into the fact that the Pandas 0.24 release moved the private method _maybe_box_datetimelike into the public API, and it suddenly turned out that many people had used it in its previous form and now broke :) (and yes, the Java world is just the same). But in the end I managed to fix everything and, apart from the terrible warnings about new deprecations, got it working.
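For readers coming from the JVM side, a minimal sketch of creating such an isolated environment from Python itself with the standard-library `venv` module (the command-line equivalent is `python -m venv <dir>`). The directory name is arbitrary, and pip bootstrapping is switched off here only to keep the example fast and offline.

```python
import sys
from pathlib import Path
import venv


def make_env(target: Path) -> Path:
    """Create an isolated Python environment in `target` and return its interpreter path."""
    # with_pip=False skips bootstrapping pip, so no network access is needed;
    # clear=True wipes the directory first if it already exists.
    builder = venv.EnvBuilder(with_pip=False, clear=True)
    builder.create(str(target))
    # The interpreter lives in Scripts/ on Windows and bin/ elsewhere.
    if sys.platform == "win32":
        return target / "Scripts" / "python.exe"
    return target / "bin" / "python"
```

Inside such an environment, pinning exact versions in a requirements file is the usual way to keep a release like the Pandas one above from silently changing under existing code.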

Collaborative baseline

Tasks at SNA Hackathon 2019 are divided into three tracks: recommendations based on logs, on texts and on images. Let's start with the logs (spoiler: the Cmd+C / Cmd+V megapattern with StackOverflow works in Python too). The data was collected from live production: each user was shown a random, unweighted selection of items from their social circle's feed, after which all features at the moment of display and the final reaction were recorded in the log. The task is a piece of cake: take the features, shove them into logreg, profit!
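To show how little machinery "shove them into logreg" needs, here is a minimal sketch: a hand-rolled full-batch gradient-descent logistic regression in NumPy. It is not the implementation used in the actual baseline; it is written out only so the example depends on nothing beyond NumPy, and the synthetic four-feature data is a made-up stand-in for the real log features.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def fit_logreg(X: np.ndarray, y: np.ndarray, lr: float = 1.0, epochs: int = 300, l2: float = 1e-4):
    """Fit weights and bias by gradient descent on mean log-loss plus a small L2 penalty."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)  # predicted probabilities
        err = p - y             # gradient of the log-loss with respect to the logits
        w -= lr * (X.T @ err / n + l2 * w)
        b -= lr * err.mean()
    return w, b


def predict_proba(X: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    return sigmoid(X @ w + b)


# Synthetic stand-in for "four features and a couple hundred thousand examples".
rng = np.random.default_rng(0)
X = rng.normal(size=(200_000, 4))
true_w = np.array([1.5, -2.0, 0.5, 0.0])
y = (rng.random(200_000) < sigmoid(X @ true_w)).astype(float)

w, b = fit_logreg(X, y)
```

In practice a library implementation such as scikit-learn's `LogisticRegression` is the natural choice; the point of the sketch is only that the model itself is tiny compared with the data plumbing around it.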

But the plan stalled at the very first stage: reading the data. In theory, there is the wonderful Apache Arrow package, which is supposed to unify work with data across ecosystems and, in particular, to allow reading Parquet files from Python without Spark. In practice, it turned out that it has problems even with reading simple nested structures, and our beautiful hierarchy turned into flat squalor :(.

But there were some positive aspects too. Jupyter was generally pleasant: it is almost as convenient as Zeppelin, though not as cute. There is even a Scala kernel! And I was pleased with the speed of logistic regression on a small in-memory chunk of data: it does not reach the power of the distributed version, but it trains instantly on four features and a couple hundred thousand examples.

Then, however, my enthusiasm took a heavy blow to the stomach: if the required data transformation (group by key and collect into a list) is missing from the standard set and you have to fall back to apply or map, the speed drops by orders of magnitude. As a result, 80% of the baseline's running time goes not to reading the data, joins, model training or sorting, but to banal list building.
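To illustrate the gap, here is a sketch of the "sort by score and collect each user's items into a list" step done two ways. The column names `user_id`, `item_id` and `score` are hypothetical. The slow path calls a Python function once per group through `apply`; the faster path sorts once and cuts the item array at group boundaries with `np.split`, so the per-group Python work shrinks to converting an already-sliced array into a list. No specific speedup is claimed here; it depends on data shape and pandas version.

```python
import numpy as np
import pandas as pd


def ranked_lists_apply(df: pd.DataFrame) -> pd.Series:
    """Slow path: sort, then a Python callback (list) runs once per user group."""
    ordered = df.sort_values(["user_id", "score"], ascending=[True, False])
    return ordered.groupby("user_id")["item_id"].apply(list)


def ranked_lists_vectorized(df: pd.DataFrame) -> pd.Series:
    """Faster path: one sort, then split the item array where the user changes."""
    ordered = df.sort_values(["user_id", "score"], ascending=[True, False])
    users = ordered["user_id"].to_numpy()
    items = ordered["item_id"].to_numpy()
    # After sorting, each user's rows are contiguous; find where a new user starts.
    boundaries = np.flatnonzero(users[1:] != users[:-1]) + 1
    starts = np.concatenate(([0], boundaries))
    groups = np.split(items, boundaries)
    index = pd.Index(users[starts], name="user_id")
    return pd.Series([g.tolist() for g in groups], index=index, name="item_id")
```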

By the way, it is precisely because of this that pySpark users always surprise me: almost all standard functionality is available as Spark SQL, which is the same in Python and in Scala, yet as soon as the first custom Python computation appears, the cluster turns into a pumpkin...

But in the end, all obstacles were overcome, and nine notebook cells were enough for a score of 0.65!

Text baseline

Now for a harder task: while logreg can be found in hundreds of implementations for every platform, the choice of tools for working with texts is much richer in Python. Fortunately, the texts come not only raw but also already processed by our standard preprocessing system based on Spark and Lucene. So you can take the token lists directly and not bother with tokenization, lemmatization or stemming.

For a while I hesitated over what to take: the domestic BigARTM or the imported Gensim. In the end I settled on the latter and copied their doc2vec tutorial :). I hope my colleagues from the BigARTM team will not miss the chance to show the advantages of their library in the competition.

Again we have a simple plan: take all the texts from the test set, train a Doc2Vec model on them, then run inference on the training set and train logreg on the results (stacking is our everything!). But, as usual, the problems started right away. Despite the relatively modest volume of texts in the training set (only one and a half gigabytes), when I tried to load them into pandas, Python devoured 20 (20, Karl!) gigabytes of memory, went into swap and never came back. I had to eat the elephant in pieces.
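One way to see where such a blow-up comes from is `DataFrame.memory_usage(deep=True)`, which, unlike the default, also counts the Python objects referenced by object-dtype columns. A sketch on synthetic token lists; the data is made up, and no absolute numbers are quoted because they depend on the platform and pandas version. For columns holding lists, treat even the deep figure as a lower bound, since the strings inside each list may not be counted.

```python
import numpy as np
import pandas as pd


def memory_report(df: pd.DataFrame) -> pd.DataFrame:
    """Per-column memory in bytes: shallow (pointers only) vs deep (object-aware)."""
    return pd.DataFrame({
        "shallow": df.memory_usage(index=False, deep=False),
        "deep": df.memory_usage(index=False, deep=True),
    })


# Synthetic stand-in for tokenized documents: 10k docs of 50 tokens each.
rng = np.random.default_rng(0)
vocab = [f"token{i}" for i in range(1000)]
docs = pd.DataFrame({
    "doc_id": np.arange(10_000),
    "tokens": [list(rng.choice(vocab, size=50)) for _ in range(10_000)],
})
report = memory_report(docs)
```

The numeric `doc_id` column costs the same either way, while the object column holding token lists is where shallow accounting hides most of the real footprint.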

When reading, we always specify which columns to pull from Parquet, which lets us avoid loading the raw text into memory. This halves memory usage, and the test-set documents fit into memory for training without problems. For the training set this trick is not enough, so we load no more than one Parquet file at a time. Then, in each loaded file, we keep only the documents whose IDs we want to use for training, and compute embeddings only for them.

Logreg on top of this works just as fast, and in the end we get 14 notebook cells and a score of 0.54 :)

Picture baseline

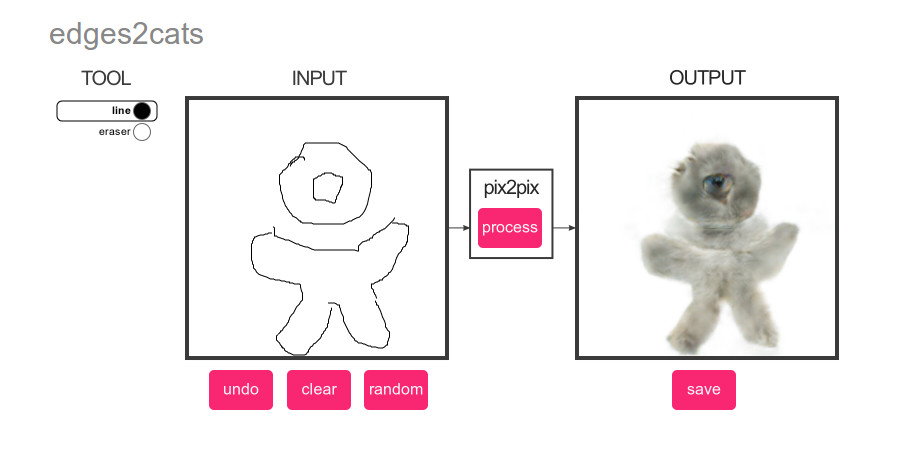

What could be more popular than deep learning? Only cats! So for the image baseline we came up with a brilliantly simple plan: run a cat detector over the pictures in the test set, and then rank the content by the resulting score :)
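The ranking step itself is small once per-image detections exist. A sketch, assuming detections arrive as NumPy arrays of class IDs and confidence scores for each image, with empty slots padded by `-1` (an assumption about the detector's output format). The cat class index should be looked up in the model's own class list rather than hard-coded; the image IDs and values in any usage are made up.

```python
from typing import Dict, List, Tuple

import numpy as np


def cat_score(class_ids: np.ndarray, scores: np.ndarray, cat_id: int) -> float:
    """Highest confidence among detections of class `cat_id`; 0.0 if there are none."""
    class_ids = np.asarray(class_ids).ravel()
    scores = np.asarray(scores).ravel()
    # Ignore padding entries, assumed here to carry a negative score.
    mask = (class_ids == cat_id) & (scores >= 0)
    return float(scores[mask].max()) if mask.any() else 0.0


def rank_by_cats(detections: Dict[str, Tuple[np.ndarray, np.ndarray]], cat_id: int) -> List[str]:
    """Return image IDs sorted from most to least cat-like."""
    scored = {img: cat_score(c, s, cat_id) for img, (c, s) in detections.items()}
    return sorted(scored, key=scored.get, reverse=True)
```

Taking the maximum over detections means one confident cat is enough to push an image up, which matches the "rank by the obtained score" idea without any extra modelling.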

And again there is plenty to choose from. Classification or detection? PyTorch or TensorFlow? The main criterion is ease of implementation by copy-paste. And the winner is... YOLOv3 on MXNet :). The conciseness of their demo won me over at first sight, but then, as usual, the problems started.

What does working with big data usually start with? With an estimate of performance and the required machine time! I wanted to make the baseline as self-contained as possible, so we taught it to work directly with the tar file, and quickly realized that at an extraction speed of 200 photos per second from tar to tmpfs, the 352,758 images would take about half an hour to process: fine. Add loading and preprocessing of the photos: 100 per second, about an hour for everything, fine. Add the neural network computation: 20 pictures per second, 5 hours, well, OK... Add result retrieval: 1 photo per second, more than four days, WTF?
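A sketch of the two ingredients of that estimate: streaming image members straight out of a tar archive, and turning a measured throughput into wall-clock time. The original pipeline extracted files to tmpfs; reading member bytes directly into memory, as below, is a simplification for illustration, and the suffix filter is an assumption about the archive's contents.

```python
import tarfile
from typing import Iterator, Tuple


def iter_tar_images(path: str, suffixes=(".jpg", ".jpeg", ".png")) -> Iterator[Tuple[str, bytes]]:
    """Stream (name, raw bytes) for image members without unpacking the archive to disk."""
    # "r|*" opens the archive as a forward-only stream with transparent decompression,
    # so each member's data must be read before moving on to the next member.
    with tarfile.open(path, mode="r|*") as archive:
        for member in archive:
            if member.isfile() and member.name.lower().endswith(suffixes):
                fileobj = archive.extractfile(member)
                yield member.name, fileobj.read()


def hours_needed(n_items: int, items_per_second: float) -> float:
    """Wall-clock hours to process n_items at a steady throughput."""
    return n_items / items_per_second / 3600
```

For the 352,758 images, `hours_needed(352_758, 20)` is about 4.9 hours, and `hours_needed(352_758, 1)` works out to about 98 hours.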

After a couple of hours of dancing with a tambourine, it dawned on me that the NDArray we were looking at is not NumPy at all but MXNet's internal structure, which performs all the computations lazily. Bingo! What to do? Any beginner deep learner knows that it is all about the batch size! If MXNet computes the scores lazily, then maybe, if we first request them for a couple of dozen photos and only then start extracting them, the photos will be processed as a batch? And indeed, after adding batching, at 10 photos per second we managed to find all the cats :).
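In MXNet, operations are queued to an asynchronous engine, and calls such as `asnumpy()` block until the result is ready, so fetching each image's result right after submitting it serializes the whole pipeline. The library-agnostic sketch below shows the batching side of the fix: images are grouped, stacked into one array, and the model is called once per batch. `score_batch` is a hypothetical stand-in for the detector's forward pass plus conversion to NumPy, and the default batch size of 32 is arbitrary ("a couple of dozen" in the text).

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List

import numpy as np


def batched(items: Iterable, size: int) -> Iterator[List]:
    """Group an iterable into lists of at most `size` elements."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


def score_in_batches(
    images: Iterable[np.ndarray],
    score_batch: Callable[[np.ndarray], np.ndarray],
    batch_size: int = 32,
) -> np.ndarray:
    """Stack images into (N, ...) arrays and call the model once per batch."""
    results = []
    for chunk in batched(images, batch_size):
        batch = np.stack(chunk)                          # one array per batch, not per image
        results.append(np.asarray(score_batch(batch)))   # hypothetical model call
    return np.concatenate(results) if results else np.empty(0)
```

With a real detector, `score_batch` is also the natural place to do the single blocking conversion per batch instead of one per image.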

Then we apply the already familiar machinery and, in 10 notebook cells, get a score of 0.504 :).

Conclusions

When a wise sensei was once asked, "Who will win, a master of aikido or a master of karate?", he replied, "The master will win." This experiment led us to roughly the same conclusion: there is not, and cannot be, an ideal language for every occasion. With Python you can quickly assemble a solution from ready-made blocks, but trying to move away from them on sufficiently large amounts of data brings a lot of pain. In Java and Scala you can also find many ready-made tools and easily implement your own ideas, but the languages themselves are more demanding of code quality.

And, of course, good luck to all participants of SNA Hackathon 2019!