Nuances of developing a plugin for Unity

I recently had to write a plugin for Unity. I had no prior experience with this, and I had been using the engine for only 2-3 months. Along the way I collected a lot of interesting details that are poorly covered on the Internet. I want to describe them for those just starting out, so that they don't step on the same rakes I stepped on many, many times.

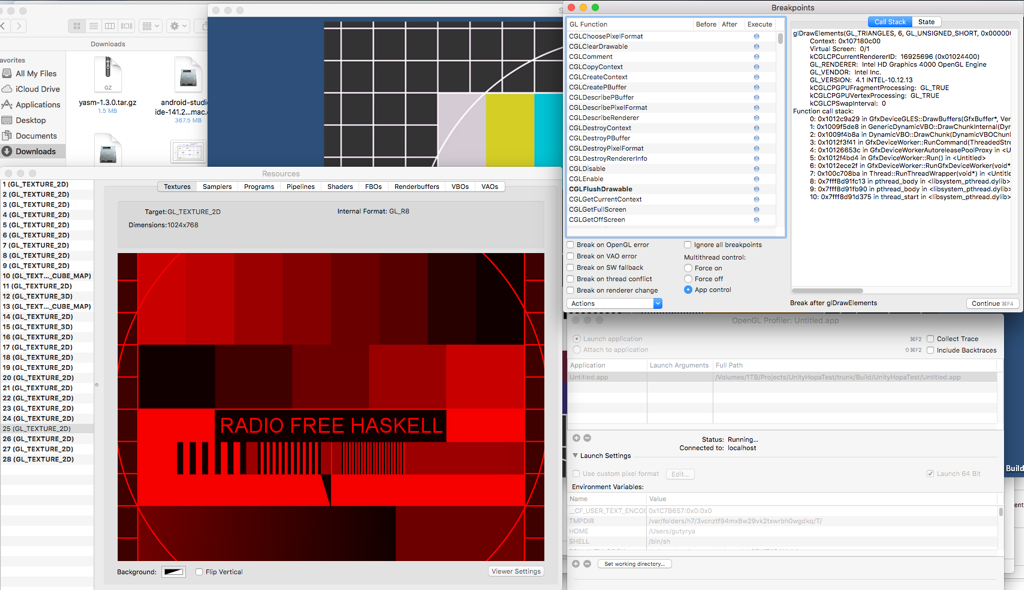

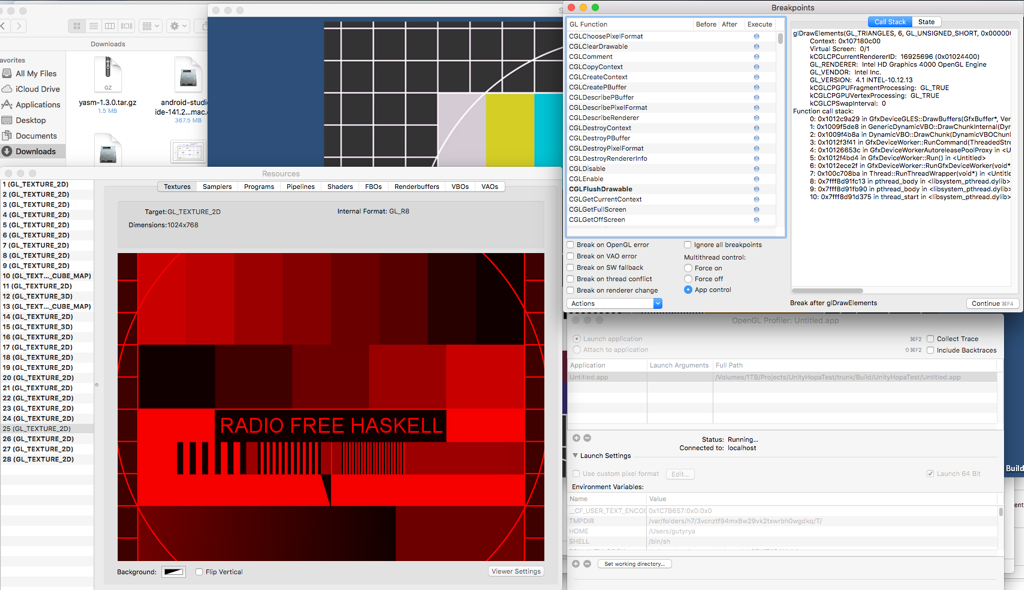


This article should also be useful for advanced users. It covers useful tools and the nuances of developing plugins for OSX, Windows, iOS and Android.

Video playback in Unity has been a sore spot for a long time. The built-in tools are very limited, and on mobile platforms they can only play video in full screen mode, which is not good enough for games! At first we used third-party plugins. However, they either lacked the functionality we needed or had bugs whose fixes took a long time to arrive (if they arrived at all). For this reason we decided to write our own video decoder for Unity, with blackjack and... well, with features.

I will not publish the plugin's code or describe how it was built (sorry, trade secret), but I will cover the general principles. For the video decoder I took the VP8 and VP9 codecs, which play the open, royalty-free WebM format. Decoding a video frame yields data in the YUV color model. Each component is then written to a separate texture. That is essentially where the plugin's job ends. From there, a shader in Unity converts YUV to the RGB color model, and the result is applied to the object.

Why a shader, you ask? Good question. At first I tried converting the color model in software, on the CPU. On desktops this is acceptable and performance barely drops, but on mobile platforms the picture is radically different. On an iPad 2 in a working scene, the software converter gave 8-12 FPS. With the color conversion done in the shader we got 25-30 FPS, which is a perfectly playable figure.
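To make the comparison concrete, here is a minimal sketch of the per-pixel conversion as a CPU routine in C++. A fragment shader would do the same arithmetic per texel. The sketch assumes full-range BT.601 coefficients, which the article does not specify; `Rgb`, `ToByte` and `YuvToRgb` are hypothetical names.

```cpp
#include <algorithm>
#include <cstdint>

struct Rgb { std::uint8_t r, g, b; };

// Clamp a value to [0, 255] and round it to the nearest byte.
static std::uint8_t ToByte(float v) {
    return static_cast<std::uint8_t>(std::min(255.0f, std::max(0.0f, v)) + 0.5f);
}

// Assumption: full-range BT.601 coefficients (not confirmed by the article).
Rgb YuvToRgb(std::uint8_t y, std::uint8_t u, std::uint8_t v) {
    const float fy = static_cast<float>(y);
    const float fu = static_cast<float>(u) - 128.0f;  // chroma is centered on 128
    const float fv = static_cast<float>(v) - 128.0f;
    return Rgb{
        ToByte(fy + 1.402f * fv),
        ToByte(fy - 0.344136f * fu - 0.714136f * fv),
        ToByte(fy + 1.772f * fu)
    };
}
```

Running this for every pixel of every frame is exactly the kind of work that is cheap on a GPU and expensive on a mobile CPU, which matches the FPS figures above.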

Let's move on to the nuances of plugin development.

Key Points

The documentation on writing plugins for Unity is rather sparse, and everything is described in general terms (for iOS I discovered many of the details by trial and error). Link to the doc.

The good news is that there are sample projects for current IDEs and platforms (except iOS: Apple probably didn't pay the developers extra). The samples are updated with every Unity release, but there is a fly in the ointment: APIs and interfaces change often, and defines and constants get renamed. For example, I took a fresh update and used a new header from it. I then spent a while figuring out why the plugin did not work on mobile platforms, until I noticed:

    SUPPORT_OPENGLES   // before
    SUPPORT_OPENGL_ES  // after

Probably the only point that matters on every platform and has to be taken into account right away is the render loop. Unity may render on a separate thread, which means you cannot work with textures from the main thread. To handle this, scripts have the GL.IssuePluginEvent function, which invokes a native callback at the right moment; all work with rendering resources should happen inside that callback. For texture work (creating, updating, deleting) I recommend a coroutine that triggers the callback at the end of each frame:

    private IEnumerator MyCoroutine() {
        while (true) {
            yield return new WaitForEndOfFrame();
            GL.IssuePluginEvent(MyPlugin.GetRenderEventFunc(), magicnumber);
        }
    }

Interestingly, if you do work with textures on the main thread, the game crashes only under the DX9 API, and even then not always.
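One way to keep texture work off the main thread on the native side is to let the decoder only hand frames over, and to touch the graphics API exclusively inside the render callback. Below is a minimal sketch under that assumption. `FrameMailbox`, `g_mailbox`, `g_uploads` and this `OnRenderEvent` are hypothetical names, and the real texture upload is replaced by a counter.

```cpp
#include <cstdint>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical hand-off slot between the decoding thread and the render thread.
class FrameMailbox {
public:
    // Called from the decoding/main thread: store the newest frame.
    void Submit(std::vector<std::uint8_t> pixels) {
        std::lock_guard<std::mutex> lock(mutex_);
        pending_ = std::move(pixels);
        hasFrame_ = true;
    }

    // Called from the render callback: take the frame if one is waiting.
    // Returns false when there is nothing new to upload.
    bool TryTake(std::vector<std::uint8_t>& out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!hasFrame_) return false;
        out = std::move(pending_);
        pending_.clear();
        hasFrame_ = false;
        return true;
    }

private:
    std::mutex mutex_;
    std::vector<std::uint8_t> pending_;
    bool hasFrame_ = false;
};

static FrameMailbox g_mailbox;  // hypothetical global shared by both threads
static int g_uploads = 0;       // counts simulated texture uploads

// Stand-in for the function returned by GetRenderEventFunc().
// A real plugin would update the texture here instead of counting.
void OnRenderEvent(int eventId) {
    (void)eventId;
    std::vector<std::uint8_t> frame;
    if (g_mailbox.TryTake(frame)) {
        ++g_uploads;
    }
}
```

With this layout the decoder never needs a graphics context, and a frame that arrives between two render events simply replaces the previous one.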

OSX

Probably the easiest and most hassle-free platform. The plugin builds quickly, and debugging is easy too. In Xcode, use Attach to Process -> Unity. You can set breakpoints, inspect the call stack after a crash, and so on.

There was only one interesting issue. Unity had recently been updated to version 5.3.2. In the editor, OpenGL 4 became the main graphics API; the older version used OpenGL 2.1, which is now deprecated. After the update, the editor simply did not play the video. A quick debugging session showed that glTexSubImage2D(GL_TEXTURE_2D, 0, 0, 0, width, height, GL_ALPHA, GL_UNSIGNED_BYTE, buffer) returns the GL_INVALID_ENUM error. According to the OpenGL documentation, the GL_ALPHA pixel format was replaced by GL_RED, which does not work with OpenGL 2.1... I had to prop things up with a workaround:

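    // The version string starts with the major version digit, e.g. "4.1 ..."
    const GLubyte* strVersion = glGetString(GL_VERSION);
    m_oglVersion = (int)(strVersion[0] - '0');

    // GL_ALPHA is rejected on OpenGL 3+, GL_RED is used there instead
    if (m_oglVersion >= 3)
        pixelFormat = GL_RED;
    else
        pixelFormat = GL_ALPHA;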

Strangest of all, in the final build running under OpenGL 4 everything works fine with the GL_ALPHA flag. I filed this detail under "magic", but did it the proper way anyway.
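Reading only the first character of the version string works for desktop OpenGL, where GL_VERSION begins with the version number, but OpenGL ES strings begin with the prefix "OpenGL ES". A slightly more tolerant parse might look like the sketch below. `ParseGlMajorVersion` is a hypothetical helper, not the code used in the plugin, and it takes the string as a parameter so it can be tried without a GL context.

```cpp
#include <cctype>
#include <cstddef>
#include <string>

// Returns the major version found in a GL_VERSION-style string, or 0 if none.
int ParseGlMajorVersion(const std::string& version) {
    std::size_t i = 0;
    // Skip any non-digit prefix such as "OpenGL ES ".
    while (i < version.size() &&
           !std::isdigit(static_cast<unsigned char>(version[i]))) {
        ++i;
    }
    // Accumulate the leading run of digits (handles multi-digit majors too).
    int major = 0;
    while (i < version.size() &&
           std::isdigit(static_cast<unsigned char>(version[i]))) {
        major = major * 10 + (version[i] - '0');
        ++i;
    }
    return major;
}
```

In a plugin this would be called as `ParseGlMajorVersion(reinterpret_cast<const char*>(glGetString(GL_VERSION)))`. On contexts that are already OpenGL 3.0 or newer, querying GL_MAJOR_VERSION with glGetIntegerv is another option.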

The Unity Editor can be run on the older version of OpenGL. To do this, run the following in the console:

    /Applications/Unity/Unity.app/Contents/MacOS/Unity -force-opengl

Among useful utilities I want to mention OpenGL Profiler, which is part of Graphics Tools. The tools can be downloaded from the Developer section of Apple's website. The profiler lets you fully monitor the OpenGL state of an application: you can catch errors, inspect textures (size, type, format), shaders and buffers in video memory, and set breakpoints on various events. A very useful tool for working with graphics. Screenshot:

That is how I found out that the Unity Editor uses 1326 textures.

Windows

On this platform the OpenGL version of the plugin also built without any problems. DirectX 9, however, deserves a closer look.

1. DirectX 9 has a "feature" known as a lost device. OpenGL and DirectX (starting with version 10) do not have this drawback. In essence, you lose control over graphics resources (textures, shaders, meshes in video memory, etc.). You have to handle this situation, and when it happens, reload or recreate all the textures. As far as I can tell, many plugins do exactly that. I managed to cheat a little: I create the textures from Unity scripts and then pass their pointers to the plugin. That leaves all resource management to Unity, which copes with device loss on its own.

    MyTexture = new Texture2D(w, h, TextureFormat.Alpha8, false);
    MyPlugin.SetTexture(myVideo, MyTexture.GetNativeTexturePtr());

2. When everything seemed to be ready, an unexpected problem turned up. Sometimes, and only on some videos, the image was displayed with an offset, as shown in the screenshot:

Judging by how the image looked, the error could be in the algorithm that copies data into the texture, in the texture coordinates, or in the texture wrap mode. The documentation suggested that DirectX may pad texture rows for optimization, adding extra bytes. This information is stored in the structure:

    struct D3DLOCKED_RECT {
        INT Pitch;
        void *pBits;
    };

Pitch is the number of bytes in one row of the texture, including alignment padding.

After tweaking the copy algorithm a little, I got the desired result (filling the extra pixels with zeros):

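    // Copy row by row: source rows are width bytes apart,
    // destination rows are locked_rect.Pitch bytes apart.
    for (int i = 0; i < height; ++i)
    {
        memcpy(pixelsDst, pixelsSrc, sizeof(unsigned char) * width);
        pixelsDst += locked_rect.Pitch;
        pixelsSrc += width;
    }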
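The same idea as a self-contained function, with the padding bytes at the end of each row explicitly zeroed. This is only a sketch: `CopyPlaneWithPitch` and its parameters are hypothetical names, and a plain byte buffer stands in for the locked Direct3D texture.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copy a tightly packed 8-bit plane into a destination whose rows are
// `pitch` bytes apart, zero-filling the padding at the end of each row.
void CopyPlaneWithPitch(std::uint8_t* dst, int pitch,
                        const std::uint8_t* src, int width, int height) {
    assert(pitch >= width);  // the destination row must fit a source row
    for (int row = 0; row < height; ++row) {
        std::memcpy(dst, src, static_cast<std::size_t>(width));
        std::memset(dst + width, 0, static_cast<std::size_t>(pitch - width));
        dst += pitch;   // next destination row, padding included
        src += width;   // next source row, no padding
    }
}
```

When the pitch equals the width the memset length is zero, so the function degrades to a plain row copy.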

For debugging OpenGL, the gDEBugger utility helps; it is similar in functionality to OpenGL Profiler for OSX.

Unfortunately, I did not find similar utilities for DX9. Such a tool would have helped in tracking down the bug with copying data into the texture.

iOS

There was no example project for this platform in the samples. The documentation has little useful information, and most of it is about how to access plugin functions.

I will go over the important aspects:

1. In Xcode, create a regular iOS project of the static library type. Link the OpenGL frameworks, and the plugin is ready to build.

2. The name of the final plugin file does not matter. In Unity, functions are imported from all plugins located in the iOS folder:

    [DllImport("__Internal")]

3. An important point: if a function with the same name exists in another plugin, the build will fail. Unity's linker will complain about a duplicate implementation. My advice: pick names that nobody else would think of.

4. UnityPluginLoad(IUnityInterfaces* unityInterfaces), which is supposed to be called when the plugin is loaded, is not called! To find out when the plugin has started and to get information about the current render device, you need to create your own controller that inherits from UnityAppController, and register in it the calls to the plugin's start function and to RenderEvent. The file should be placed in the folder with the iOS plugins. An example controller that registers the functions:

    #import
    #import "UnityAppController.h"

    extern "C" void MyPluginSetGraphicsDevice(void* device, int deviceType, int eventType);
    extern "C" void MyPluginRenderEvent(int marker);

    @interface MyPluginController : UnityAppController
    {
    }
    - (void)shouldAttachRenderDelegate;
    @end

    @implementation MyPluginController
    - (void)shouldAttachRenderDelegate
    {
        UnityRegisterRenderingPlugin(&MyPluginSetGraphicsDevice, &MyPluginRenderEvent);
    }
    @end

    IMPL_APP_CONTROLLER_SUBCLASS(MyPluginController)

5. If the plugin is built for several architectures, the builds can be combined into a single static library for convenience:

    lipo -arch armv7 build/libPlugin_armv7.a \
         -arch i386 build/libPlugin_i386.a \
         -create -output build/libPlugin.a

6. During testing I found that negative texture coordinates are not passed from the vertex shader to the pixel shader: zeros always arrive. By default, textures are created with the CLAMP_TO_EDGE addressing mode, and in that case OpenGL ES clamps everything to the range [0..1]. This is not observed on desktop platforms.

7. I noticed a serious bug. If you build an iOS project with Script Debugging turned on, then when the game crashes, Xcode crashes too. As a result: no logs, no call stack...

Debugging a plugin on iOS is a pleasure: Xcode always gives you a call stack after a crash. The console shows the logs of both the scripts and the plugin, and if you add the plugin's *.cpp files to the project, you can set breakpoints and use the full functionality of the lldb debugger! With scripts things are much worse, so logging is your friend.

Android

Building for Android requires the most tools:

- A decent IDE for editing C++ code. I used Xcode. Building for Android is somehow easier on a Mac anyway;

- The NDK, for building the C code into a static library;

- Android Studio with everything it needs, such as Java. The Studio is needed for conveniently viewing logs of what is happening in the application.

Let's go through the interesting points:

1. Debugging plugins on Android is a rather sad affair, so I recommend thinking about writing logs to a file right away. You can, of course, go to the trouble of setting up remote debugging, but I had no time for that and took the simpler route of viewing the logs in Android Studio. For this, android/log.h provides the __android_log_vprint function, which works like vprintf (printf with a va_list). For convenience I wrapped it in a cross-platform function:

    static void DebugLog(const char* fmt, ...)
    {
        va_list argList;
        va_start(argList, fmt);
    #if UNITY_ANDROID
        __android_log_vprint(ANDROID_LOG_INFO, "MyPluginDebugLog", fmt, argList);
    #else
        // printf cannot take a va_list; vprintf can
        vprintf(fmt, argList);
    #endif
        va_end(argList);
    }

I advise you not to neglect asserts. When one fires, Android Studio lets you view the full call stack.

2. This platform has a specific naming convention for plugins: libMyPluginName.so. In particular, the lib prefix is required (see the Unity documentation for details).

3. In an Android application, all resources are stored in a single bundle, which is a jar or zip file. We can't simply open a stream and start reading data, as on other platforms. Besides the path to the video, you need Application.dataPath, which holds the path to the Android APK; only then can we find and open the desired asset. From it we get the file's length and its offset from the start of the bundle:

    // Look up the asset inside the APK through the Android AssetManager.
    var unityPlayer = new AndroidJavaClass("com.unity3d.player.UnityPlayer");
    var activity = unityPlayer.GetStatic<AndroidJavaObject>("currentActivity");
    var assetMng = activity.Call<AndroidJavaObject>("getAssets");
    var assetDesc = assetMng.Call<AndroidJavaObject>("openFd", myVideoPath);
    long offset = assetDesc.Call<long>("getStartOffset");
    long length = assetDesc.Call<long>("getLength");

We open a file stream at the Application.dataPath path using standard means (fopen or whatever you prefer) and start reading from the offset: that is our video. The length is needed to know where the video file ends, so that we stop reading there.

4. I found a bug.

    s_DeviceType = s_Graphics->GetRenderer();

s_DeviceType always contains kUnityGfxRendererNull. Judging by the forums, this is a Unity bug. For Android I wrapped that part of the code in a define, where I set the default value myself:

    s_DeviceType = kUnityGfxRendererOpenGLES;
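For point 3 above, once the offset and length are known on the script side, the native side only needs to read that byte range from the APK file. Here is a minimal sketch, assuming the asset is stored uncompressed in the APK (openFd only works for uncompressed assets). `ReadFileRange` is a hypothetical helper, not part of the plugin described here.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <vector>

// Read `length` bytes starting at `offset` from the file at `path`.
// Returns an empty vector if the file cannot be opened or the range
// cannot be read in full.
std::vector<std::uint8_t> ReadFileRange(const char* path, long offset,
                                        std::size_t length) {
    std::vector<std::uint8_t> data;
    std::FILE* file = std::fopen(path, "rb");
    if (!file) return data;

    if (std::fseek(file, offset, SEEK_SET) == 0) {
        data.resize(length);
        const std::size_t read = std::fread(data.data(), 1, length, file);
        if (read != length) data.clear();  // short read: treat as failure
    }
    std::fclose(file);
    return data;
}
```

A real decoder would more likely keep the file open and stream from the offset in chunks rather than load the whole video into memory; the single read here just keeps the sketch short.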

When developing for Android, be prepared for constant digging in the console and regular rebuilds. If Android.mk and Application.mk are configured correctly from the start, the build itself should not cause problems.

Well, that's about it. I tried to cover all the important points that were not obvious at the start. With this knowledge you can design a sound plugin architecture up front and avoid rewriting the code several times.

In conclusion

By my preliminary estimate this work should have taken 2-3 weeks, but it took 2 months. Most of that time went into figuring out the points above. The most tedious and longest stage was Android. Rebuilding the static libraries and the project took about 15 minutes, and debugging meant adding new logs. So stock up on coffee and be patient. And don't forget about Unity's frequent crashes and freezes.

I hope this material proves useful and helps save valuable time. Criticism and questions are welcome!

Thanks for your attention.