Database versioning on the fly

Hello, my name is Eugene, and I am a web developer. Several years ago, the DBA (Database Administrator) function passed to me, I received several certificates on this subject and solved the corresponding problems. I have long wanted to describe the task of versioning a database, but it seemed to me that for this there should be some win-win options that skilled uncles know well, and I just misunderstood something. Yesterday's interview and subsequent search on thematic resources showed that this is not so, and the task is really difficult, relevant and can not be solved unambiguously. Let's analyze it point by point.

We use version control only for DDL (Data Definition Language) queries. The data itself does not interest us. Why? We consider two extreme cases.

If you work with a complex database, then the tables, oddly enough, are the least interesting in it (although they should also be versioned). It is much more difficult to deal with business logic, which is contained in triggers, views, packages and procedures, and similar objects. For example, in one of the databases I worked with, there were packets up to one and a half megabytes in size. Changes are constantly being made to these packages, and it is vital to know who made the changes, when, it is desirable to know why, and how would we roll it back to any desired state.

Imagine an ideal world in which we have a clear TK that does not change until the completion of the project. The release, after which we forget about what we did, and get a regular salary for beautiful eyes. The ideal code that we wrote right away, taking into account all the nuances, which works without errors and does not require maintenance. The lack of improvements, urgent bug fixes, integration mechanisms, the ability to work on a test base and test samples, the presence of ubiquitous unit tests that say that everything is perfect.

In this case, it is more than enough for us to use the version control system as the primary source of information about the state of the database, and roll out changes to the database from it. There is a single repository, there is joint work on the base code - everything is beautiful and transparent. There are several products that seem to implement this functionality quite well.

Now open your eyes and look around. In most cases, the project is implemented according to the scheme, which I would call UHV (made, rolled out, threw out). A huge percentage of completed projects cannot be sold and is being closed without any prospects for the future. The remaining lucky ones go through hundreds of iterations, after which the name remains at best from the original TK. In this reality, we are not primarily concerned about the speed of the product, its requirements and quality. We are concerned about the speed of development, since, besides the obvious reasons, the largest part of the project budget depends on it - the cost of work in development hours.

Yes, that’s wrong. The world is cruel, unfair, dynamic, and requires an instant reaction, even if quality suffers. All developers strive for the ideal code in their hearts, but most accept the terms of the deal with the devil and seek an acceptable compromise of quality and speed. We try to do our best, but learn not to blush if, instead of a half-year period and an ideal product, we made an unstable and sometimes ugly decision in two weeks. Moreover, at some point it comes to the understanding that the “last bug” will never be found, and all we can do is simply stop looking for it at some point and make a release. Bringing the solution to the ideal is the destiny of the simplest applications and console scripts - and even that often fails to take into account some non-trivial points. When we talk about large projects, then the example of Oracle, Microsoft and Apple show us that there is no perfect code. As an example, the DBA classic answer to the question that in the new release of Oracle Database - “removed 30% of the old bugs, added 40% of the new ones”.

What does it mean if we talk about the database? This is usually the case:

Further, if a developer comes to the DBA and asks to return the previous version of his object, the DBA can do this in three cases (using Oracle as an example):

The most realistic option is the third. But it is complicated by the fact that it is often not known at what date you need to restore, and restoring a base of, say, 10 terabytes is a rather long and resource-intensive operation. So usually the DBA just shrugs its hands, the developer frowns at coffee and goes to write his object from scratch.

What can we do to make life easier for developers? I see the only option - to version the database upon the fact of already completed changes. Naturally, this does not give any opportunity to prevent possible errors - but it will provide a way in a large percentage of cases to restore the desired object and the entire system to life.

The first simple “forehead” solution is simply to periodically unload the entire base. But unloading the database takes a long time, and then we will not know who, when and what changed. So obviously something more complicated is required. Namely - we need to unload only the changed DDL objects. To do this, you can use two approaches - use Audit, or create a system trigger. I used the second method. Then the sequence is as follows:

Moreover, for each action, we can get fairly detailed information, including the full text of the request, the scheme, the name and type of the object, the IP address of the user, the network name of his machine, username, type and date of change. As a rule, this is enough to find a developer and give a medal.

Next, we want to have a repository in which the structure of the database will be presented in an intuitive way in order to be able to compare different versions of the object. To do this, each time you change the database, you need to unload the changed objects and commit to the database. Nothing complicated! We create a Git repository, first we do a full upload there, then we create a service that monitors our change table, and if new records appear, it unloads the changed objects.

Side by side comparison

List of objects in the scheme

Change history of a specific object

The same on github

That is, we have a working tool with which we can find the source of any changes in the database and roll them back if necessary. In my case, the largest Git repository in Gitlab (its own instance on a separate machine) takes several hundred megabytes, it has about a hundred thousand commits, and it works quite fast. Before moving to Gitlab, the same repository lived fine on github, and then on bitbucket.

Data on what objects we then have:

It is also possible to modify the program for the task of updating an outdated database - we unload the old version, unload the new version on top of it, fix the difference in semi-automatic mode.

As a recommendation, I also add that it is better to periodically unload the current version on top of what is in the repository - in case of any changes that could not be covered by the logic of the upload algorithm.

A link to my algorithm for PHP and the installation guide is at the end of the article, but I sincerely recommend that you use it only for reference - it was written a long time ago and with a very crooked left hand while performing other tasks. The only plus is that, oddly enough, it works.

I sincerely wish you not to have to work with such a workflow. And I hope that this publication will help you if your world is still far from ideal.

Do you version your database? In the right way, or in fact? Maybe there are implementations for other DBMSs - MySQL, Postgres? Or is there some fundamentally different good approach that I overlooked?

What are we versioning

We use version control only for DDL (Data Definition Language) queries. The data itself does not interest us. Why? We consider two extreme cases.

- Little data (say, less than 50 megabytes). In this case, we can just periodically do a full dump of the database and safely put it into the repository.

- A lot of data (more than a gigabyte). In this case, versioning will not help us much, anyway, sorting it out will be quite problematic. It is advisable in this case to use the standard scheme with backups and the archive log, which allows us to get an integral version of the database at any time in time.

Why do we need to version DDL?

If you work with a complex database, then the tables, oddly enough, are the least interesting in it (although they should also be versioned). It is much more difficult to deal with business logic, which is contained in triggers, views, packages and procedures, and similar objects. For example, in one of the databases I worked with, there were packets up to one and a half megabytes in size. Changes are constantly being made to these packages, and it is vital to know who made the changes, when, it is desirable to know why, and how would we roll it back to any desired state.

Perfect world

Imagine an ideal world in which we have a clear TK that does not change until the completion of the project. The release, after which we forget about what we did, and get a regular salary for beautiful eyes. The ideal code that we wrote right away, taking into account all the nuances, which works without errors and does not require maintenance. The lack of improvements, urgent bug fixes, integration mechanisms, the ability to work on a test base and test samples, the presence of ubiquitous unit tests that say that everything is perfect.

In this case, it is more than enough for us to use the version control system as the primary source of information about the state of the database, and roll out changes to the database from it. There is a single repository, there is joint work on the base code - everything is beautiful and transparent. There are several products that seem to implement this functionality quite well.

Real world

Summary of this part

Now open your eyes and look around. In most cases, the project is implemented according to the scheme, which I would call UHV (made, rolled out, threw out). A huge percentage of completed projects cannot be sold and is being closed without any prospects for the future. The remaining lucky ones go through hundreds of iterations, after which the name remains at best from the original TK. In this reality, we are not primarily concerned about the speed of the product, its requirements and quality. We are concerned about the speed of development, since, besides the obvious reasons, the largest part of the project budget depends on it - the cost of work in development hours.

Yes, that’s wrong. The world is cruel, unfair, dynamic, and requires an instant reaction, even if quality suffers. All developers strive for the ideal code in their hearts, but most accept the terms of the deal with the devil and seek an acceptable compromise of quality and speed. We try to do our best, but learn not to blush if, instead of a half-year period and an ideal product, we made an unstable and sometimes ugly decision in two weeks. Moreover, at some point it comes to the understanding that the “last bug” will never be found, and all we can do is simply stop looking for it at some point and make a release. Bringing the solution to the ideal is the destiny of the simplest applications and console scripts - and even that often fails to take into account some non-trivial points. When we talk about large projects, then the example of Oracle, Microsoft and Apple show us that there is no perfect code. As an example, the DBA classic answer to the question that in the new release of Oracle Database - “removed 30% of the old bugs, added 40% of the new ones”.

Who is to blame and what to do?

What does it mean if we talk about the database? This is usually the case:

- A large number of developers have access to the database

- Often there is a need to roll back one or another object

- No one ever admits that it was he who broke the object

- Modifications are often incomprehensible

Further, if a developer comes to the DBA and asks to return the previous version of his object, the DBA can do this in three cases (using Oracle as an example):

- If the previous version is still saved in UNDO

- If the object was simply deleted and saved in the trash (RECYCLEBIN)

- If he can deploy a full backup of the database on the required date

The most realistic option is the third. But it is complicated by the fact that it is often not known at what date you need to restore, and restoring a base of, say, 10 terabytes is a rather long and resource-intensive operation. So usually the DBA just shrugs its hands, the developer frowns at coffee and goes to write his object from scratch.

What can we do to make life easier for developers? I see the only option - to version the database upon the fact of already completed changes. Naturally, this does not give any opportunity to prevent possible errors - but it will provide a way in a large percentage of cases to restore the desired object and the entire system to life.

Oracle implementation

The first simple “forehead” solution is simply to periodically unload the entire base. But unloading the database takes a long time, and then we will not know who, when and what changed. So obviously something more complicated is required. Namely - we need to unload only the changed DDL objects. To do this, you can use two approaches - use Audit, or create a system trigger. I used the second method. Then the sequence is as follows:

- We create a table in which data about DDL requests will be stored

- Create a system trigger that will write to this table

Moreover, for each action, we can get fairly detailed information, including the full text of the request, the scheme, the name and type of the object, the IP address of the user, the network name of his machine, username, type and date of change. As a rule, this is enough to find a developer and give a medal.

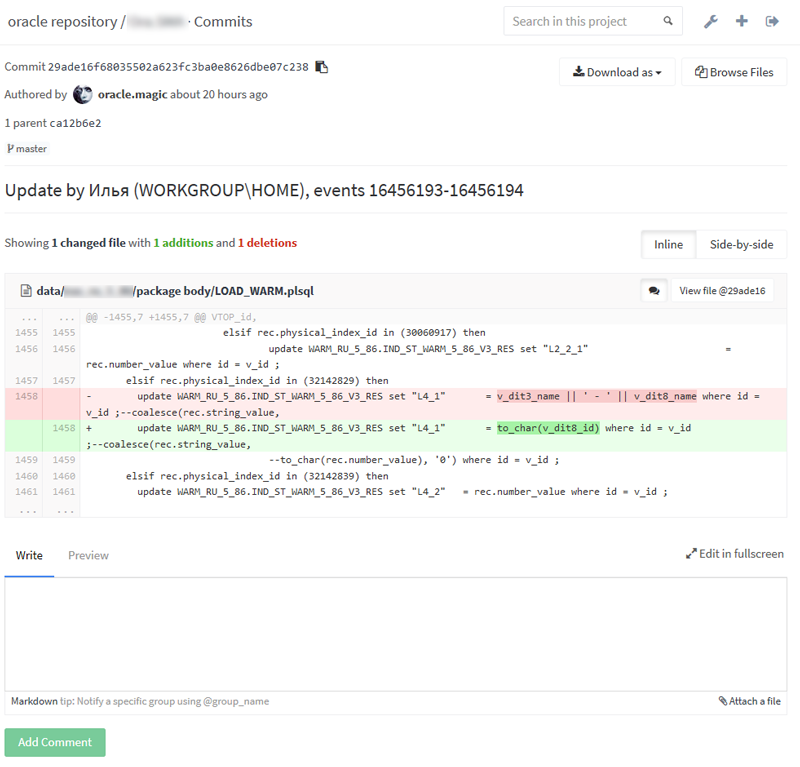

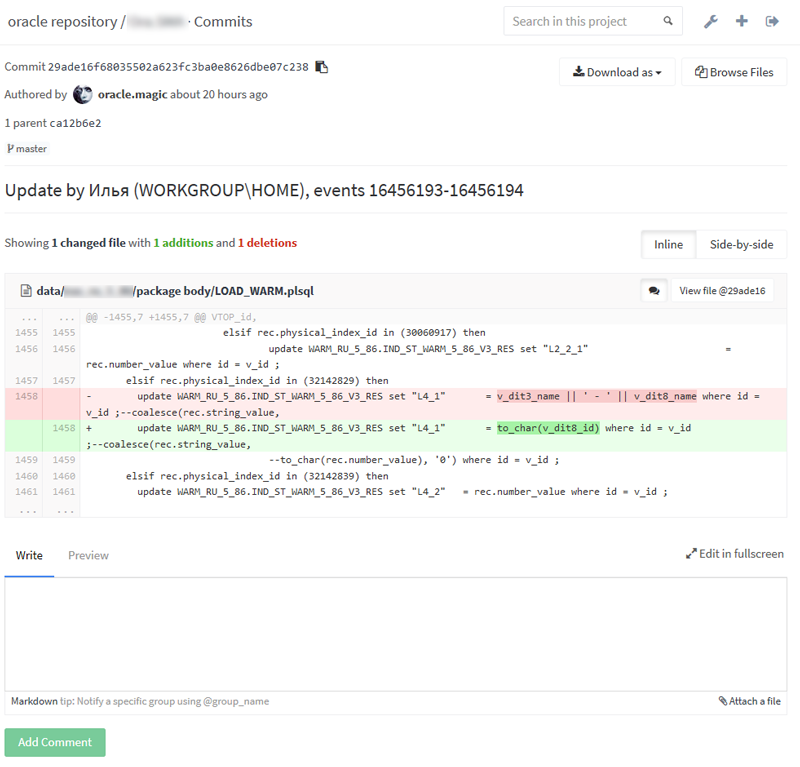

Next, we want to have a repository in which the structure of the database will be presented in an intuitive way in order to be able to compare different versions of the object. To do this, each time you change the database, you need to unload the changed objects and commit to the database. Nothing complicated! We create a Git repository, first we do a full upload there, then we create a service that monitors our change table, and if new records appear, it unloads the changed objects.

What does it look like

Normal comparison

Side by side comparison

List of objects in the scheme

Change history of a specific object

The same on github

That is, we have a working tool with which we can find the source of any changes in the database and roll them back if necessary. In my case, the largest Git repository in Gitlab (its own instance on a separate machine) takes several hundred megabytes, it has about a hundred thousand commits, and it works quite fast. Before moving to Gitlab, the same repository lived fine on github, and then on bitbucket.

Data on what objects we then have:

- Tables

- representation

- materialized views

- triggers

- sequences

- users (with password hashes that can be used to restore the old password)

- packages, functions, procedures

- database links (also with password hashes)

- grants

- constantins with their condition

- synonyms

It is also possible to modify the program for the task of updating an outdated database - we unload the old version, unload the new version on top of it, fix the difference in semi-automatic mode.

Minuses

- Some changes may occur too quickly, and the service will not have time to unload the intermediate results - but it is unlikely that they are relevant to us, and you can find them in the table of changes.

- Some changes may affect several objects at once - for example, deleting a scheme or DROP CASCADE - but this too can be worked out correctly if desired, the only question is implementation.

- Due to the fact that password hashes are stored in the repository, it cannot be issued directly to developers.

As a recommendation, I also add that it is better to periodically unload the current version on top of what is in the repository - in case of any changes that could not be covered by the logic of the upload algorithm.

A link to my algorithm for PHP and the installation guide is at the end of the article, but I sincerely recommend that you use it only for reference - it was written a long time ago and with a very crooked left hand while performing other tasks. The only plus is that, oddly enough, it works.

Conclusion

I sincerely wish you not to have to work with such a workflow. And I hope that this publication will help you if your world is still far from ideal.

Do you version your database? In the right way, or in fact? Maybe there are implementations for other DBMSs - MySQL, Postgres? Or is there some fundamentally different good approach that I overlooked?

References

- Great discussion on how to version base on stackoverflow

- Implementing the right approach from Liquibase

- Similar to my old Java + SVN implementation

- My tool website with installation instructions

- The repository of my github tool code

- You can hire me here