Kudu - New Hadoop Ecosystem Storage Engine

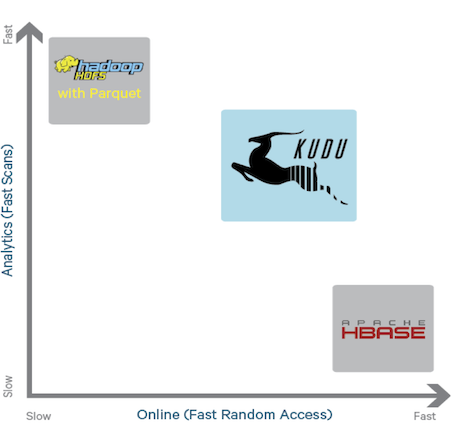

Kudu was one of the new products presented by Cloudera at the conference “Strata + Hadoop World 2015”. This is a new big data storage engine designed to fill the gap between two existing engines: the distributed HDFS file system and the Hbase column database.

The current engines are not without flaws. HDFS, which does a great job of scanning large amounts of data, shows poor results on search operations. With Hbase everything is exactly the opposite. In addition, HDFS has an additional limitation, namely, it does not allow modification of already recorded data. The new engine, according to the developers, has the advantages of both existing systems:

- search operations with quick response

- the possibility of modification

- high performance when scanning large volumes of data

Some options for using Kudu can be time series analysis, log analysis and sensory data. Currently, systems that use Hadoop for such things have a rather complex architecture. As a rule, data is in several storages at the same time (the so-called “Lambda architecture”). It is necessary to solve a number of tasks on data synchronization between storages (inevitably there is a lag with which, as a rule, they simply reconcile and live). You also have to configure data access security policies for each storage separately. And the rule “the simpler the more reliable” has not been canceled. Using Kudu instead of several simultaneous storages can significantly simplify the architecture of such systems.

Kudu features:

- High performance for scanning large amounts of data

- Fast response time in search operations

- Column database, type CP in the CAP theorem, supports several levels of data consistency

- Support for “update”

- Transactions at the record level

- Fault tolerance

- Customizable redundancy level data (for data safety in case of failure of one of the nodes)

- API for C ++, Java and Python. Access is supported from Impala, Map / Reduce, Spark.

- Open source. Apache license

SOME INFORMATION ABOUT ARCHITECTURE

The Kudu cluster consists of two types of services: master - a service responsible for managing metadata and coordination between nodes; tablet - a service installed on each node designed to store data. A cluster can have only one active master. For fault tolerance, several more standby master services can be started. Tablet - servers break up data into logical partitions (called “tablets”).

From the user's point of view, Kudu data is stored in tables. For each table, it is necessary to determine the structure (a rather atypical approach for NoSQL databases). In addition to the columns and their types, the user must define the primary key and partitioning policy.

Unlike other components of the Hadoop ecosystem, Kudu does not use HDFS to store data. OS file system is used (it is recommended to use ext4 or XFS). In order to guarantee data safety in case of failure of single nodes, Kudu replicates data between servers. Typically, each tablet is stored on three servers (however, only one of the three servers accepts write operations, the rest accepts read-only operations). Synchronization between replicas of tablet-a is implemented using the raft protocol.

FIRST STEPS

Let's try to work with Kudu from the point of view of the user. Let's create a table and try to access it using SQL and Java API.

To fill the table with data, we use this open dataset:

https://raw.githubusercontent.com/datacharmer/test_db/master/load_employees.dump

At the moment, Kudu does not have its own client console. We will use the Impala console (impala-shell) to create the table.

First of all, create the “employees” table with data storage in HDFS:

CREATE TABLE employees (

emp_no INT,

birth_date STRING,

first_name STRING,

last_name STRING,

gender STRING,

hire_date STRING

);

We load the dataset on the machine with the impala-shell client and import the data into the table:

impala-shell -f load_employees.dump

After the command completes execution, run impala-shell again and execute the following request:

create TABLE employees_kudu

TBLPROPERTIES(

'storage_handler' = 'com.cloudera.kudu.hive.KuduStorageHandler',

'kudu.table_name' = 'employees_kudu',

'kudu.master_addresses' = '127.0.0.1',

'kudu.key_columns' = 'emp_no'

) AS SELECT * FROM employees;

This query will create a table with similar fields, but with Kudu as storage. Using “AS SELECT” in the last line, copy the data from HDFS to Kudu.

Without leaving impala-shell, we will launch several SQL queries to the table just created:

[vm.local:21000] > select gender, count(gender) as amount from employees_kudu group by gender;

+--------+--------+

| gender | amount |

+--------+--------+

| M | 179973 |

| F | 120051 |

+--------+--------+

It is possible to make requests to both storages (Kudu and HDFS) at the same time:

[vm.local:21000] > select employees_kudu.* from employees_kudu inner join employees on employees.emp_no=employees_kudu.emp_no limit 2;

+--------+------------+------------+-----------+--------+------------+

| emp_no | birth_date | first_name | last_name | gender | hire_date |

+--------+------------+------------+-----------+--------+------------+

| 10001 | 1953-09-02 | Georgi | Facello | M | 1986-06-26 |

| 10002 | 1964-06-02 | Bezalel | Simmel | F | 1985-11-21 |

+--------+------------+------------+-----------+--------+------------+

Now let's try to reproduce the results of the first query (counting male and female employees) using the Java API. Here is the code:

import org.kududb.ColumnSchema;

import org.kududb.Schema;

import org.kududb.Type;

import org.kududb.client.*;

import java.util.ArrayList;

import java.util.List;

public class KuduApiTest {

public static void main(String[] args) {

String tableName = "employees_kudu";

Integer male = 0;

Integer female = 0;

KuduClient client = new KuduClient.KuduClientBuilder("localhost").build();

try {

KuduTable table = client.openTable(tableName);

List projectColumns = new ArrayList<>(1);

projectColumns.add("gender");

KuduScanner scanner = client.newScannerBuilder(table)

.setProjectedColumnNames(projectColumns)

.build();

while (scanner.hasMoreRows()) {

RowResultIterator results = scanner.nextRows();

while (results.hasNext()) {

RowResult result = results.next();

if (result.getString(0).equals("M")) { male += 1; }

if (result.getString(0).equals("F")) { female += 1; }

}

}

System.out.println("Male: " + male);

System.out.println("Female: " + female);

} catch (Exception e) {

e.printStackTrace();

} finally {

try {

client.shutdown();

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

After compiling and running, we get the following result:

java -jar kudu-api-test-1.0.jar

[New I/O worker #1] INFO org.kududb.client.AsyncKuduClient - Discovered tablet Kudu Master for table Kudu Master with partition ["", "")

[New I/O worker #1] INFO org.kududb.client.AsyncKuduClient - Discovered tablet f98e05a4bbbe49528f38b5a46ef3a7a4 for table employees_kudu with partition ["", "")

Male: 179973

Female: 120051

As you can see, the result coincides with what the SQL query issued.

CONCLUSION

For big data systems, in which both analytical operations on the entire volume of stored data and search operations with fast response times should be performed, Kudu seems to be a natural candidate as a data storage engine. Thanks to its many APIs, it is well integrated into the Hadoop ecosystem. In conclusion, it should be said that Kudu is currently under active development and not ready for use in production.