How I won the Beeline BigData contest

Everyone has heard many times about the Beeline machine learning contest and even read articles ( one , two ). Now the competition is over, and it turned out that the first place went to me. And although only hundredths of a percent separated me from the previous participants, I still would like to tell you what I did so much. In fact - nothing incredible.

Data preparation

They say that 80% of the time data analytics spend on data preparation and preliminary modifications, and only 20% directly on analysis. And it’s not so casual as “garbage in - garbage out”. The process of preparing the source data can be divided into several stages, which I suggest going through.

Outlier correction

After a careful study of the histograms, it becomes clear that quite a few outliers have crept into the data. For example, if you see that 99.9% of the observations of the variable X are concentrated on the interval [0; 1], and 0.01% of the observations are thrown out for a hundred, then it’s quite logical to do two things: first, introduce a new column to indicate strange events, and secondly second, replace emissions with something reasonable.

data["x8_strange"] = (data["x8"] < -3.0)*1

data.loc[data["x8"] < -3.0 , "x8"] = -3.0

data["x31_strange"] = (data["x31"] < 0.0)*1.0

data.loc[data["x31"] < 0.0, "x31"] = 0.0

data["x40_zero"] = (data["x40"] == 0.0)*1.0

Normalization of Distributions

In general, working with normal distributions is extremely pleasant, since many statistical tests are tied to the normality hypothesis. To a lesser extent, this concerns modern methods of machine learning, but it is important to bring data to a reasonable form. It is especially important for methods that work with distances between points (almost all clustering algorithms, the classifier of k-neighbors). In this part of the data preparation, I took the standard approach: logarithm everything that is more densely distributed around zero. Thus, for each variable, I selected a transformation that gave a more pleasant appearance. Well, after that I scaled everything to the segment [0; 1]

Text variables

In general, text variables are a storehouse for data mining, but in the original data there were only hashes, and the names of the variables were anonymized. Therefore, only a standard routine: replace all rare hashes with the word Rare (rare = occurs less often than 0.5%), replace all the missing data with the word Missing and expand it as a binary variable (since many methods, including xgboost, cannot categorical variables) .

data = pd.get_dummies(data, columns=["x2", "x3", "x4", "x11", "x15"])

for col in data.columns[data.dtypes == "object"]:

data.loc[data[col].isnull(), col] = 'Missing'

thr = 0.005

for col in data.columns[data.dtypes == "object"]:

d = dict(data[col].value_counts(dropna=False)/len(data))

data[col] = data[col].apply(lambda x: 'Rare' if d[x] <= thr else x)

d = dict(data['x0'].value_counts(dropna=False)/len(data))

data = pd.get_dummies(data, columns=data.columns[data.dtypes == "object"])

Feature engineering

This is what we all love data science for. But everything is encrypted, so this item will have to be omitted. Nearly. After a close examination of the graphs, I noticed that x55 + x56 + x57 + x58 + x59 + x60 = 1, which means it’s some share. Let's say what percentage of money a subscriber spends on SMS, calls, the Internet, etc. This means that those comrades who have any of the shares of more than 90% or less than 5% are of particular interest. Thus we get 12 new variables.

thr_top = 0.9

thr_bottom = 0.05

for col in ["x55", "x56", "x57", "x58", "x59", "x60"]:

data["mostly_"+col] = (data[col] >= thr_top)*1

data["no_"+col] = (data[col] <= thr_bottom)*1

We remove NA

Everything is extremely simple here: after all the distributions are brought to a reasonable form, you can safely replace the NA-shki with the middle or median (now they almost coincide). I tried to generally remove from the training set those rows where more than 60% of the variables are NA, but this did not end in good.

Regression as a regressor

The next step is no longer so banal. From the distribution of classes, I suggested that age groups are ordered, that is, 0 <1 ... <6, or vice versa. And if so, then you can not classify, but build a regression. It will work poorly, but its result can be transferred to other algorithms for training. Therefore, we start the usual linear regression with the huber loss function and optimize it through stochastic gradient descent.

from sklearn.linear_model import SGDRegressor

sgd = SGDRegressor(loss='huber', n_iter=100)

sgd.fit(train, target)

test = np.hstack((test, sgd.predict(test)[None].T))

train = np.hstack((train, sgd.predict(train)[None].T))

Clustering

The second interesting thought I tried: clustering data using the k-means method. If the data has a real structure (and it should be in the data for subscribers), then k-means will feel it. First I took k = 7, then added 3 and 15 (twice as much and half as much). The predictions of each of these algorithms are cluster numbers for each sample. Since these numbers are not ordered, it is impossible to leave them with numbers, it is necessary to binarize. Total + 25 new variables.

from sklearn.cluster import KMeans

k15 = KMeans(n_clusters=15, precompute_distances = True, n_jobs=-1)

k15.fit(train)

k7 = KMeans(n_clusters=7, precompute_distances = True, n_jobs=-1)

k7.fit(train)

k3 = KMeans(n_clusters=3, precompute_distances = True, n_jobs=-1)

k3.fit(train)

test = np.hstack((test, k15.predict(test)[None].T, k7.predict(test)[None].T, k3.predict(test)[None].T))

train = np.hstack((train, k15.predict(train)[None].T, k7.predict(train)[None].T, k3.predict(train)[None].T))

Training

When the data preparation was completed, the question arose which machine learning method to choose. In principle, the answer to this question has long been known .

In fact, besides xgboost, I tried the k-neighbors method. Despite the fact that it is considered ineffective in high-dimensional spaces, I managed to achieve 75% (a small step for a person and a large step for k-neighbors), by counting the distance not in ordinary Euclidean space (where the variables are equivalent), but by adjusting for the importance variables as shown in the presentation .

However, all these are toys, and really good results were achieved not by the neural network, not by logistic regression and not by k-neighbors, but what was expected - xgboost. Later, coming to the defense in Beeline, I found out that they also achieved better results using this library. For classification tasks, it is already something like a “gold standard”.

"When in doubt - use xgboost"

Owen Zhang , top-2 on Kaggle.

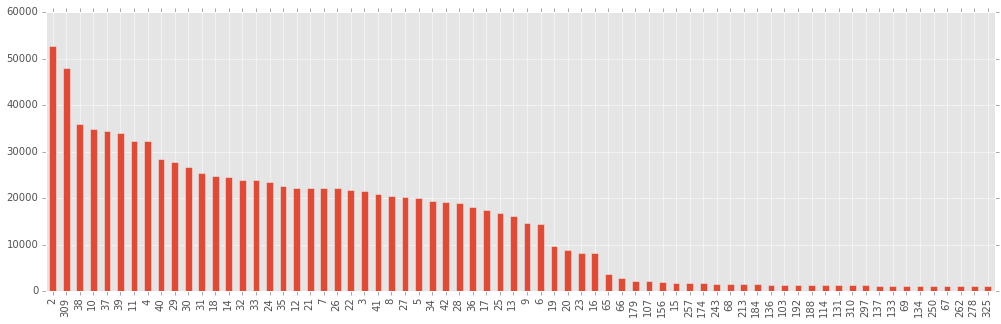

Before starting to actually launch the actual and get excellent results, I decided to see how really important all the columns that were given and those that I created by expanding the hashes and clustering using the K-means method. To do this, I did a light boost (not a lot of trees), and built columns sorted by importance (according to xgboost).

gbm = xgb.XGBClassifier(silent=False, nthread=4, max_depth=10, n_estimators=800, subsample=0.5, learning_rate=0.03, seed=1337)

gbm.fit(train, target)

bst = gbm.booster()

imps = bst.get_fscore()

My opinion is that those columns whose importance is rated as “insignificant” (and the diagram is built only for the most important 70 variables out of 335) contain more noise than actually useful correlations, and learning from them is harmful to yourself ( i.e. retrain ).

It is also interesting to note that the first important variable is x8, and the second is the results of the SGD regression that I added. Those who tried to participate in this contest must have wondered what kind of variable x8 is if it separates classes so well. At the defense in Beeline, I could not resist and did not ask what it was. It was AGE! As they explained to me, the age indicated in the purchase of the tariff and the age obtained in the polls do not always coincide, so yes, the participants did determine the age group, including age.

Through short experiments, I realized that leaving 120 columns is better than leaving 70 or leaving 170 (in the first case, apparently, something useful is thrown out, in the second, the data is polluted with something useless).

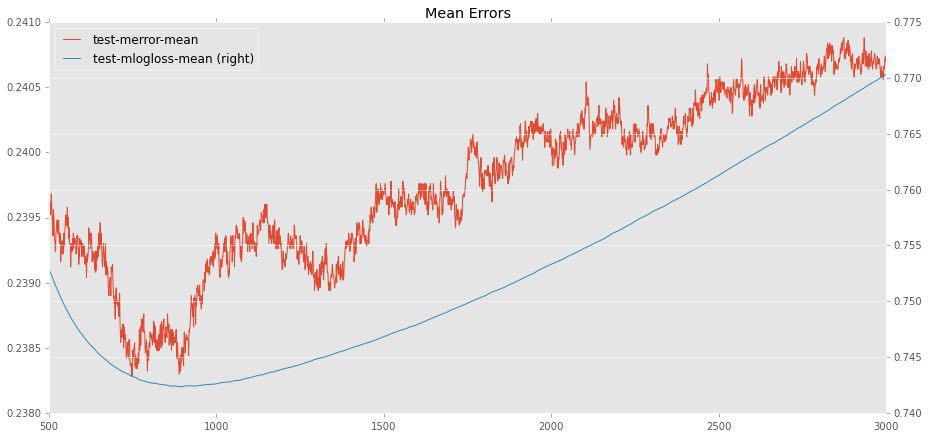

Now I had to let it go. Two parameters xgboost.XGBClassifier that deserve the most attention are eta (aka learning rate) and n_estimators (number of trees). The rest of the parameters did not really change the results (so I set max_depth = 8, subsample = 0.5, and the rest are the default parameters).

There is a natural correlation between the optimal values of eta and n_estimators - the lower eta (learning speed), the more trees are needed to achieve maximum accuracy. And we really encounter an optimum here, after which the addition of additional trees causes only retraining, worsening the accuracy of the test sample. For example, for eta = 0.02, approximately 800 trees are optimal:

First I tried to work with eta averages (0.01-0.03) and saw that, depending on the random state (seed), there is a noticeable spread (for example, for 0.02 the score varies from 76.7 to 77.1), and I also noticed that this spread becomes smaller with decreasing eta. It became clear that large eta are not suitable in principle (how can a good model be so dependent on seed?).

Then I chose the eta that I can afford on my computer (I would not want to take it for days). This is eta = 0.006. Next, it was necessary to find the optimal number of trees. In the same way as shown above, I found that for eta = 0.006, 3400 trees are suitable. Just in case, I tried two different seeds (it was important to understand whether the fluctuations were great).

for seed in [202, 203]:

gbm = xgb.XGBClassifier(silent=False, nthread=10, max_depth=8, n_estimators=3400, subsample=0.5, learning_rate=0.006, seed=seed)

gbm.fit(trainclean, target)

p = gbm.predict(testclean)

filename = ("subs/sol3400x{1}x0006.csv".format(seed))

pd.DataFrame({'ID' : test_id, 'y': p}).to_csv(filename, index=False)

Each ensemble on a regular core i7 was considered an hour and a half. It is an acceptable time when the competition takes place a month and a half. The fluctuations on the public leaderboard were small (for seed = 202 received 77.23%, for seed = 203 77.17%). Sent the best of them, although it is very likely that on a private leaderboard the other would have been no worse. However, we will not know.

Now a little about the competition itself. The first thing that catches your eye with someone familiar with Kaggle is the slightly unusual submission rules. At Kaggle, the number of sambishnas is limited (depending on the competition, but usually no more than 5 per day), here the submissions are unlimited, which allowed some participants to send results as much as 600 times. In addition, the final submission had to choose one, and Kaggle is usually allowed to choose any two, and the account on the private leaderboard is considered the best of them.

Another unusual thing is anonymized columns. On the one hand, this practically deprives any opportunity for feature design. On the other hand, this is partly understandable: columns with real names would give a powerful advantage to people versed in mobile communications, and the goal of the contest was clearly not that.