The Unbearable Heaviness of Servers: On Switching to the IaaS Model

In 2010, Forbes published an interview with John McCool, a Cisco vice president, in which he discussed the near future of virtual data centers and gave a surprisingly accurate forecast for 2015. Indeed, the trend of moving critical workloads to virtual servers keeps growing, despite persistent myths about the dangers of storing data in the cloud. The reasons for this growth go beyond the factors McCool described (rising consumption of video content, the spread of new kinds of devices, and companies' wish to provision only the capacity their applications actually need); virtualization owes its success to other, equally interesting factors as well.

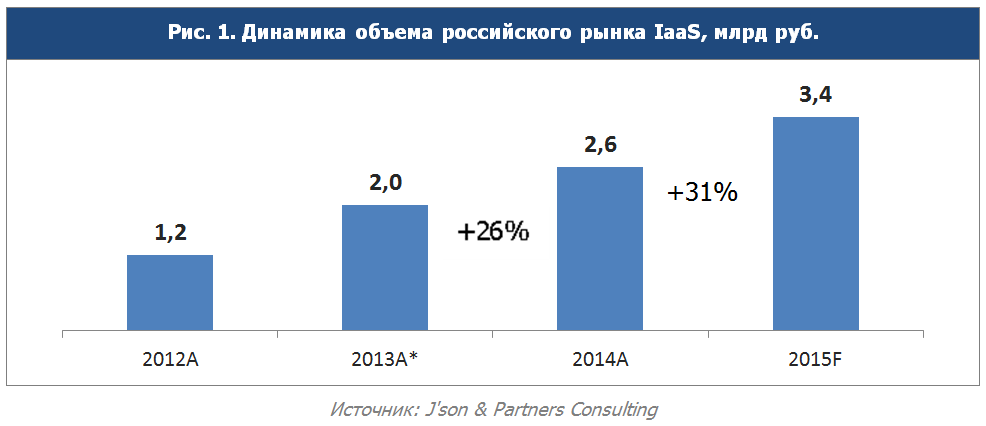

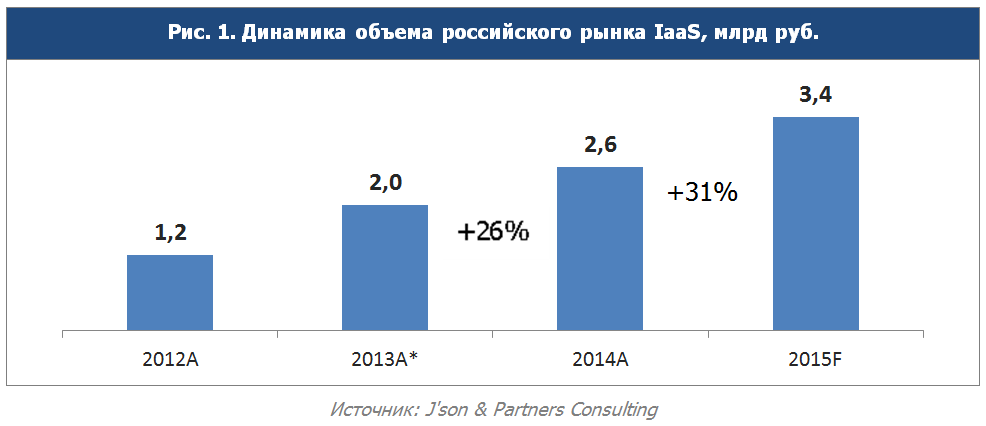

Cloud servers vs physical servers

Cloud technologies have reached every area of business, including data centers themselves. Virtual machines used to serve mainly as test environments and developer infrastructure; today companies rent them to build corporate infrastructure, from office applications to CRM systems and complex ERP systems. Carving out a logically isolated set of computing resources decoupled from the underlying hardware is called virtualization. Like any technology, virtualization has advantages and limitations, some rooted in human factors and others in sound engineering reasons.

A virtual environment does not change the set of tasks involved in running servers: resource allocation, choosing a configuration, troubleshooting, installing updates. The difference is that in a virtual environment these tasks are solved more easily and quickly than on physical machines. Virtual servers owe their popularity to a number of undeniable advantages:

- Provisioning a virtual machine takes far less time than bringing up a physical server. A company that needs a virtual server simply contacts the data center or its internal IT department and receives the chosen configuration (amount of memory, number of cores, operating system image, etc.), sized to its actual needs. A physical server, by contrast, may run at only a few percent of its capacity and never pay back its cost. It also requires complex setup and a qualified administrator, and incurs ongoing operating costs for electricity, cooling, and replacing hard drives.

- Contrary to popular belief, virtual servers, with the exception of extremely heavily loaded projects, are generally more fault tolerant. This is primarily thanks to the guarantees of the data center, which maintains maximum availability and stability by responding quickly to emergencies 24/7/365.

- Virtualization changes how troubleshooting, backups, and disaster recovery are done. These tasks require new skills from specialists, but overall they are much simpler than on a physical machine.

- As already mentioned, virtual capacity can be reserved to match actual needs, without overpaying for excess resources. This leads to another advantage: flexibility and scalability. If an application or project starts to need more resources, the capacity of a virtual machine can easily be expanded, or reduced, even when demand peaks or drops sharply.

- Patch management, the process of managing software updates, deserves a separate mention, since virtualization brings a completely new approach to it. Managing updates (patches) on a physical server has always been difficult: you first had to evaluate the update, test how it works with the existing infrastructure, and only then install it on the server. A virtual machine image, by contrast, can be patched without even stopping the machine first.
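The point about reserving capacity to match actual needs, and about physical servers running at a few percent of their capacity, can be illustrated with a small sizing sketch. It assumes a hypothetical catalogue of VM configurations and a hypothetical 20% headroom over observed peak demand; neither reflects any particular provider's offering. The function picks the smallest configuration that covers the peak plus headroom.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    ram_gb: int


# Hypothetical catalogue of VM configurations, ordered from smallest to largest.
CATALOGUE = [
    VmSize("small", 2, 4),
    VmSize("medium", 4, 8),
    VmSize("large", 8, 16),
    VmSize("xlarge", 16, 32),
]


def pick_size(peak_vcpus: float, peak_ram_gb: float, headroom: float = 0.2) -> VmSize:
    """Return the smallest catalogue entry that covers peak demand plus headroom."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_ram = peak_ram_gb * (1 + headroom)
    for size in CATALOGUE:
        if size.vcpus >= need_cpu and size.ram_gb >= need_ram:
            return size
    raise ValueError("peak demand exceeds the largest configuration")


def utilization(peak_vcpus: float, capacity_vcpus: int) -> float:
    """Share of the available cores actually used at peak."""
    return peak_vcpus / capacity_vcpus


if __name__ == "__main__":
    vm = pick_size(peak_vcpus=3, peak_ram_gb=5)
    print(vm.name, f"{utilization(3, vm.vcpus):.0%}")     # rightsized VM
    print("32-core server", f"{utilization(3, 32):.0%}")  # hypothetical physical box
```

With these assumed figures, a workload peaking at 3 vCPUs and 5 GB of RAM lands on the 4-vCPU configuration at about 75% peak utilization, while on a hypothetical 32-core physical server the same workload would use under 10% of the cores.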

Virtualization thus adds a new layer of capacity management to IT. It is convenient, fast, scalable, and relatively inexpensive. Nevertheless, there are situations in which virtualization is not only unjustified but even dangerous:

- Server virtualization is mature enough today that even resource-intensive projects can be moved to virtual machines. However, the core premise of virtualization is that virtual machines share the server's hardware resources. Extremely heavy workloads can consume so much capacity that failing over to another server after a crash becomes impossible. For big data projects and other resource-intensive workloads, physical servers are therefore the better choice. This matters most in industries with strict reliability requirements: medicine, high-precision manufacturing, energy, etc.

- Another problem, though a rare one, is non-standard or unsupported operating systems that are difficult to run under a hypervisor. In such cases, it is better to deploy the required configuration on a physical server.

- Sometimes a project or piece of software is tied to specific hardware. Some applications bind themselves to physical hardware as part of their security policy, in particular for copy protection. Such a protection mechanism may rely on the processor's serial number or check for a USB flash drive in a specific port. Some software may be tied to a host bus adapter (HBA). In such cases, virtualization is naturally out of the question.

- Virtualization can also run into licensing barriers: some software is not licensed for virtual machines at all, or requires participation in special vendor licensing programs (for example, Microsoft SPLA, which is needed to license Windows Server, SharePoint, MS Office, etc. in virtual machines).

IaaS Market in Russia: Growing Despite the Crisis

Cloud infrastructure services began to grow in the wake of SaaS adoption, but they also have growth drivers of their own:

- Companies want to save on purchasing and maintaining technology, including servers and licensed software. These savings often come from recognizing redundancy in the infrastructure, for example, when only a few of the purchased licenses are actually used.

- Companies are trying to move software from capital expenditures to operating expenses, mainly so they can scale the business more flexibly as conditions keep changing.

- During the crisis, new companies emerge that see a niche for themselves in the market. To get off the ground fast, they need to deploy all the necessary corporate software quickly.

- SaaS keeps growing, and more and more B2B companies are adopting cloud technologies, from office suites to databases and full-scale ERP systems.

- And, of course, the law requiring users' personal data to be stored on servers in Russia (which we have already written about) cannot be discounted either.

IaaS (Infrastructure as a Service) is popular with companies that have a distributed management structure (retail chains, branch networks), as well as with online stores, banks, and IT companies. According to a study by J'son & Partners Consulting, the Russian IaaS market is still in a development phase and is expected to grow at considerably higher annual rates. By the analysts' estimate, the IaaS market reached 2.6 billion rubles in 2014, 26% more than in 2013.

In monetary terms, this 2014 figure amounts to about 10% of Russia's total spending on physical and virtual servers that year. Current growth drivers suggest that the IaaS market will develop non-linearly: moderate growth at 25% per year in 2015-2017, followed by a transition to accelerated growth in 2018-2019.
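The market figures above can be cross-checked with simple arithmetic. This back-of-the-envelope sketch derives the 2013 market size and total 2014 server spending implied by the quoted numbers, and extrapolates the 25% annual rate through 2017. It assumes each year grows 25% over the previous one starting from the 2014 base; it is not the analysts' own model, and it stops at 2017 because no rate is given for the accelerated phase.

```python
# Figures quoted from the J'son & Partners Consulting study cited in the text.
IAAS_2014_BN_RUB = 2.6         # Russian IaaS market in 2014, billion rubles
GROWTH_2014_VS_2013 = 0.26     # 2014 exceeded 2013 by 26%
SHARE_OF_SERVER_SPEND = 0.10   # about 10% of 2014 spending on physical + virtual servers
FORECAST_RATE = 0.25           # moderate growth of 25% per year in 2015-2017


def implied_2013() -> float:
    """2013 market size implied by the 26% year-over-year growth."""
    return IAAS_2014_BN_RUB / (1 + GROWTH_2014_VS_2013)


def implied_total_server_spend_2014() -> float:
    """Total 2014 server spending implied by the ~10% IaaS share."""
    return IAAS_2014_BN_RUB / SHARE_OF_SERVER_SPEND


def extrapolate(base: float, rate: float, start_year: int, end_year: int) -> dict[int, float]:
    """Compound `base` by `rate` once per year for each year in start_year..end_year."""
    values = {}
    value = base
    for year in range(start_year, end_year + 1):
        value *= 1 + rate
        values[year] = value
    return values


if __name__ == "__main__":
    print(f"Implied 2013 market: {implied_2013():.2f} bn RUB")
    print(f"Implied 2014 server spending: {implied_total_server_spend_2014():.1f} bn RUB")
    for year, value in extrapolate(IAAS_2014_BN_RUB, FORECAST_RATE, 2015, 2017).items():
        print(f"{year}: {value:.2f} bn RUB")
```

Under these assumptions the 2013 market works out to roughly 2.06 billion rubles and total 2014 server spending to about 26 billion, while straight 25% growth gives about 3.25, 4.06 and 5.08 billion rubles for 2015, 2016 and 2017. Because a fixed percentage rate compounds, even this "moderate" phase is geometric rather than strictly linear growth.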

Companies use three main types of cloud. The choice depends on the company's security policy, the project's workload requirements, the availability of qualified staff, and the planned infrastructure budget.

Public cloud - infrastructure open to the general public, hosted by a data center or another organization. Public clouds typically host simple cloud CRMs, collaboration tools, and the like. As a rule, users get access to a set of ready-made application configurations and use them as they are. The public cloud is considered the least secure option.

Private cloud - resources belong to a single company, are distributed within it, and are provided as a service over the Internet or a local area network. The resources can be hosted either by the company itself or by a third party (a data center). Private clouds are used to build secure IT infrastructure, to run highly loaded projects, and to support the day-to-day operations of branches or a distributed network of divisions. Most SaaS, PaaS, and IaaS services are built on private clouds.

Hybrid cloud - a combination of public and private clouds tailored to the company's needs, structure, and security requirements. It is considered the most sensible way to organize cloud infrastructure in terms of cost management and scalability.

There is no universal advice on which cloud to choose; much depends on the specifics of the project. For the corporate sector, however, a hybrid cloud that combines the cost-effectiveness of a public cloud with the security of a private one looks the most attractive.

The economics of virtualization

The material in this section is nothing like a dull accounting exercise. Every business owner (a developer, an integrator, a provider of virtual PBX services, etc.) wants not only to minimize costs but also to free up funds to reinvest in other projects or promising developments. Frankly, nothing handles the task of saving on servers better than virtualization.

The total cost of ownership of a physical server (or several servers) is the sum of capital expenditures (CapEx) and operating expenditures (OpEx). CapEx covers buying the server and its accessories, as well as the cost of design. OpEx covers maintenance, labor, equipment upgrades, and running costs (electricity, cooling). Operating expenses often exceed capital costs: they are commonly estimated at 58% of the total. So before buying server hardware, weigh the cost of the entire project against its actual needs. The project may well not need large-scale resources, and security issues can be handled on the data center side by choosing a suitable virtual configuration.
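The CapEx/OpEx relationship above can be written out as a short calculation. From TCO = CapEx + OpEx and OpEx = s · TCO, where s is the OpEx share, it follows that OpEx = CapEx · s / (1 - s). The sketch below plugs in the commonly cited s = 0.58; the 100-unit CapEx figure and the function names are hypothetical, chosen only for illustration.

```python
def tco(capex: float, opex: float) -> float:
    """Total cost of ownership: capital costs plus operating costs."""
    return capex + opex


def opex_from_share(capex: float, opex_share: float) -> float:
    """OpEx implied when operating costs make up `opex_share` of TCO.

    From TCO = CapEx + OpEx and OpEx = share * TCO it follows that
    OpEx = CapEx * share / (1 - share).
    """
    if not 0 <= opex_share < 1:
        raise ValueError("opex_share must be in [0, 1)")
    return capex * opex_share / (1 - opex_share)


capex = 100.0                        # hypothetical: server, accessories and design
opex = opex_from_share(capex, 0.58)  # about 138.1, i.e. OpEx exceeds CapEx
total = tco(capex, opex)             # about 238.1
print(f"OpEx: {opex:.1f}  TCO: {total:.1f}  OpEx share: {opex / total:.0%}")
```

In other words, a 58% OpEx share means running a server costs roughly 1.38 times what it cost to buy, which is why the purchase price alone understates the real cost.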

In fact, the economic point of virtualization and renting capacity lies precisely in reducing system redundancy, together with the costs of acquiring it (CapEx) and maintaining it (OpEx). Such solutions free up capital that can be redirected to costly projects. If a company uses virtual capacity instead of buying a server, it bears no capital costs at all, since they fall entirely on the owner of the hardware: the data center. To avoid unpleasant expenses and an unbalanced budget, follow a few simple rules:

- evaluate the capacity required for the project

- if virtualization is possible, use it

- change capacity limits only when there is a real need; as a rule, idle capacity kept “in reserve” brings no benefit.
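Applying the first two rules usually starts with a rough comparison of owning versus renting over a planning horizon. This sketch models a purchased server as up-front CapEx plus monthly OpEx and a rented virtual server as monthly OpEx only, since the capital costs fall on the data center. All prices are hypothetical placeholders, and the model deliberately ignores factors such as idle reserve capacity or hardware refresh cycles.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Option:
    name: str
    capex: float         # up-front capital cost
    monthly_opex: float  # recurring operating cost per month

    def cost_over(self, months: int) -> float:
        """Cumulative cost of this option after `months` months."""
        return self.capex + self.monthly_opex * months


def cheaper_option(a: Option, b: Option, months: int) -> Option:
    """Return the option with the lower cumulative cost over the horizon (ties go to `a`)."""
    return a if a.cost_over(months) <= b.cost_over(months) else b


def break_even_month(owned: Option, rented: Option) -> Optional[int]:
    """Months after which owning stays no more expensive than renting.

    Returns None when the owned server's running costs are not lower than
    the rent, since then the up-front CapEx is never recovered.
    """
    monthly_saving = rented.monthly_opex - owned.monthly_opex
    if monthly_saving <= 0:
        return None
    return max(math.ceil((owned.capex - rented.capex) / monthly_saving), 0)


if __name__ == "__main__":
    # Hypothetical prices in arbitrary currency units.
    own = Option("own server", capex=300_000, monthly_opex=8_000)
    rent = Option("rented VM", capex=0, monthly_opex=20_000)
    print(cheaper_option(own, rent, months=12).name)
    print(break_even_month(own, rent))
```

With these made-up prices, renting is cheaper over a one-year horizon and owning only catches up after 25 months; if the project's needs are uncertain or likely to change within that period, renting keeps the capital free.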

Sensible savings and cost management can free up significant funds for developing the project itself rather than the infrastructure around it. There are always ways to optimize costs; what matters is to evaluate them critically and choose the one you actually need and that is justified by the business process.

SAFEDATA Solves Virtualization Issues

At SAFEDATA, we always aim to offer our users solutions that not only become part of their infrastructure but also solve business problems. One of our most advanced services today is VDC (Virtual Data Center). The client receives a pool of virtual resources on which it can independently build IT infrastructure of any complexity, fully equivalent to solutions on physical hardware. The VDC service is based on the IaaS model and is part of the SFCLOUD cloud service package.

When using virtual capacity, the client not only manages its servers but can also flexibly distribute the allocated cloud resources among infrastructure elements according to the load on each. While using the VDC cloud service, the customer can also scale the allocated resources up or down and choose among different pricing models. This gives the customer all the scalability and cost benefits described earlier in this post.
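Distributing a fixed resource pool among infrastructure elements according to load can be pictured as a proportional split. The sketch below is not SAFEDATA's or SFCLOUD's actual mechanism or API: the function, the element names, the load figures and the largest-remainder rounding rule are all assumptions chosen for illustration. It divides an integer number of vCPUs in proportion to each element's load so that the shares always add up to the whole pool.

```python
import math


def allocate_pool(pool_vcpus: int, loads: dict[str, float]) -> dict[str, int]:
    """Split an integer vCPU pool among elements in proportion to their load.

    Uses the largest-remainder method, so the shares always add up to the pool.
    Loads are assumed to be non-negative, with a positive total.
    """
    total_load = sum(loads.values())
    if total_load <= 0:
        raise ValueError("total load must be positive")
    quotas = {name: pool_vcpus * load / total_load for name, load in loads.items()}
    alloc = {name: math.floor(q) for name, q in quotas.items()}
    leftover = pool_vcpus - sum(alloc.values())
    # Give the remaining vCPUs to the elements with the largest fractional parts.
    by_remainder = sorted(quotas, key=lambda n: quotas[n] - alloc[n], reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc


if __name__ == "__main__":
    # Hypothetical pool of 16 vCPUs and relative load per infrastructure element.
    print(allocate_pool(16, {"web": 50, "db": 30, "crm": 20}))
```

Here the hypothetical web, database and CRM elements receive 8, 5 and 3 vCPUs. When the load shifts, re-running the split rebalances the same pool without changing its total size, which is the kind of flexibility described above.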

There is no one-size-fits-all server solution for every project and every company; too many factors have to be considered to make the right choice. The choice always rests with the company and depends, among other things, on its security policy and resource management model. Today's market offers secure, fault-tolerant solutions, so at the outset it is worth weighing the cost of ownership against performance metrics and saving wherever it makes sense.