EMC VNXe1600 Storage Overview

VNXe storage systems form EMC's entry-level storage line. The lineup was recently extended with a new model, the VNXe1600, which is the subject of this review.

The VNXe1600 is an entry-level model designed for the simplest possible installation and configuration. The system is positioned as a single storage system for small infrastructures that can host typical modern workloads: databases, Exchange mail systems, VMware and Microsoft virtual servers, or dedicated storage for a single project. Only block access protocols are offered, Fibre Channel and iSCSI; there is no file functionality, at least for the moment.

I should note right away that this review is based on the available documentation and other information: the system has not yet reached us, so we have not had a chance to get our hands on it. Let's start with a historical excursion; anyone interested only in the current technical details can safely skip it.

A historical excursion to understand the genealogy: where the roots, and possibly some vestiges, come from.

Historically, EMC has produced a line of powerful midrange block storage systems (although this is not its main pride: the flagship is the high-end EMC Symmetrix line), which for several generations was called EMC CLARiiON. Its direct descendants were renamed EMC VNX Unified Storage, and their second generation is the current one.

Over the years, this has been one of the main midrange storage lines on the market, setting and shaping trends for the industry as a whole. In particular, these include the active development of the Fibre Channel protocol and RAID 5.

True, the word “historically”, with which this part began, applies with reservations. CLARiiON was originally developed by Data General, and some architectural concepts that survive to this day were laid down then. Data General even tried to use its innovative CLARiiON product to compete with EMC's flagship Symmetrix (now EMC Symmetrix VMAX, or simply VMAX). According to blog recollections of people who were at the origins of the CLARiiON architecture, some architectural decisions were motivated precisely by this competition with EMC. Later, though by now a long time ago, Data General was bought by EMC. Today the origin of VNX systems is still visible in the fact that volumes (LUNs) of CLARiiON / VNX systems are identified by many hosts as DGC RAID or DGC LUNZ, where DGC stands for Data General Corporation.

Figure 1. The first CLARiiON looked something like this.

For a long time, EMC also had a line of NAS systems called EMC Celerra. Architecturally, these systems were NAS gateways connected to block storage systems (at some point, if I am not mistaken, support for third-party arrays was announced, but in general these block systems were expected to be made by EMC). The NAS gateway had no capacity of its own: it used the capacity of the block systems and added access via file protocols: CIFS, NFS, iSCSI and others. To be strict, iSCSI is of course a block protocol and belongs to SAN, but iSCSI support is typical for NAS systems as storage systems with Ethernet access.

Figure 2. This is what a high-end storage system providing NAS functionality looked like around 2000. A truly austere industrial look, with no blue backlight. But the power buses were painted in different colors.

There were also integrated models that included components of both Celerra and CLARiiON, but block and file functionality were managed separately. That is, in many respects these were two systems in one rack. Active consolidation began with the Unisphere management interface on the CLARiiON CX4 generation, which brought file and block management together, and was completed with the arrival of the EMC VNX Unified Storage line. In VNX systems, block and file controllers are still implemented as separate hardware, but are fully integrated in terms of management and authentication.

For me, these systems (CLARiiON, Celerra and VNX) are direct successors, so, with this caveat stated, I may casually call a VNX a CLARiiON or, when speaking of file functionality, a Celerra.

Just a month or two after the release of VNX, the first system of the VNXe generation appeared: the VNXe3100, which, by contrast, offered only Ethernet-based functionality and no external Fibre Channel. That is, building on existing experience and software, the functionality of both block and NAS systems was implemented in a small box on the same controller, and only NAS functionality was exposed externally. The functionality was somewhat limited, and management was greatly simplified. The system was an attempt to enter the low-cost segment, aimed at customers who do not have and do not plan any Fibre Channel and have no particular desire to learn anything about RAID groups (the system interface has no concept of a RAID group).

The next step, and so far the peak of the VNXe series, is the VNXe3200, which provides both file and block functionality, including all the main "advanced" features of its older brothers. That is, it is almost like VNX Unified, but within a single hardware box. The model, I must say, turned out to be very decent. In my opinion, only two decisions in this model are debatable: the built-in 10 GE Base-T ports are not very convenient for those who use 10 GE optics (interface modules have to be installed), and the disk subsystem can only be configured according to certain templates, which were not described very explicitly in the specifications and marketing materials.

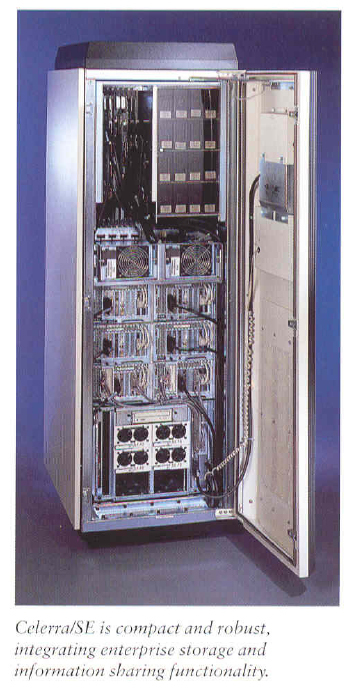

Figure 3. The current VNX and VNXe portfolio

Recently the younger brother of the VNXe3200 was launched: the VNXe1600, which we will look at here and which currently provides block functionality only.

VNXe System Family

To clarify the differences within the VNXe family, here is a brief list of the main models and milestones:

VNXe3100 - GA March 2011. A purely networked storage system. Only supports Ethernet: NAS protocols and iSCSI.

VNXe3300 - GA March 2011. Also a purely networked storage system with Ethernet only: NAS protocols and iSCSI. A significantly more powerful option than the VNXe3100.

VNXe3150 - GA August 2012. An "improved" version of the VNXe3100 with more memory and extended functionality. Also Ethernet only: NAS protocols and iSCSI.

VNXe3200 - GA May 2014. The logical next step for the series and so far its peak. In addition to NAS and iSCSI over Ethernet, it adds Fibre Channel and the advanced functionality of its older brothers.

VNXe1600 - GA August 2015. The new model; at the moment block functionality only: Fibre Channel or iSCSI over Ethernet.

VNXe1600

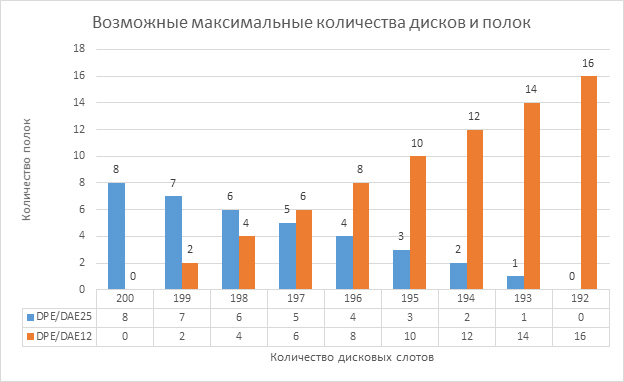

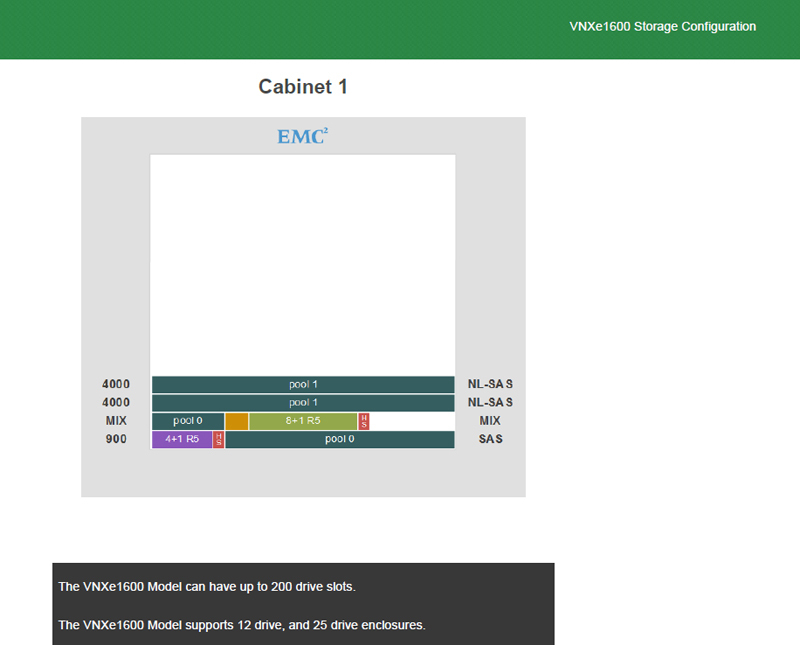

The system architecture is traditional: a 2U controller shelf with disks (DPE), to which additional 2U disk shelves (DAE) are connected via SAS. Both the controller shelf and the additional shelves come in two variants: 12 x 3.5-inch disks (what some call LFF) or 25 x 2.5-inch disks (what some call SFF).

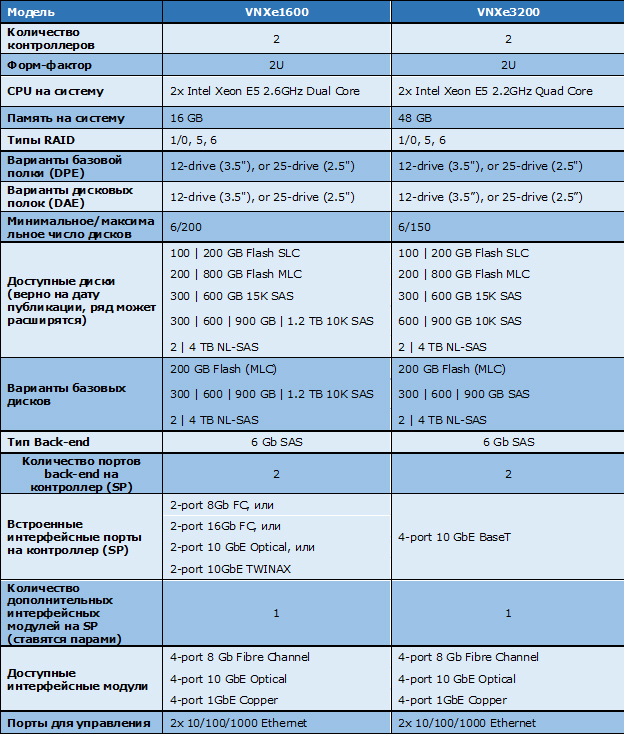

System characteristics compared to its older brother:

Table 1 VNXe1600 Specifications Compared to VNXe3200

The table does not show the fundamental difference: the VNXe1600 has only block functionality. This perhaps partly compensates for the difference in RAM, of which the VNXe1600 has one third as much: there is no need to keep the file code and its service data in memory. Nevertheless, there is significantly less memory, 8 GB per controller, and fewer cores.

Among the characteristics, it is notable that the system allows even more drives than its older brother: 200 versus 150 for the VNXe3200. At the same time, given the smaller memory and core count, less computing power is available per unit of capacity. In general, with the release of the VNX line EMC seriously limited the maximum supported number of disks; the limitation is not technical but sensible marketing (marketing here in the good sense of proper positioning). This made comparing models with other vendors' solutions by this parameter meaningless. The flip side was that the junior models of the series achieved an almost linear increase in performance as disks were added: even with the maximum number of disks, the saturation point was not reached. 200 drives means just 7 shelves in addition to the controller shelf, if all of them hold 25 2.5” drives each, i.e. 16U in total.

There is an additional limitation: a footnote in the specification states that the maximum “raw” capacity is 400 TB. That is, filling the system to the brim with 4 TB drives will not work. Note also that the limit is actually not on the number of disks but on the number of disk slots. That is, if the system already has 16 shelves of 12 disks (16 x 12 = 192), another shelf cannot be added even if it holds fewer than 8 disks, because the number of disk slots would be exceeded (192 + 12 = 204; 192 + 25 = 217).
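The slot and capacity arithmetic above can be sketched as a small check. This is only an illustration: the function name and the way shelves are represented are hypothetical, and the limits (200 slots, 400 TB raw) are simply the figures quoted in this review, not values read from the array.

```python
# Illustrative check of the limits quoted above; not an EMC tool.
MAX_SLOTS = 200     # the limit applies to disk slots, not installed disks
MAX_RAW_TB = 400    # raw capacity limit from the specification footnote


def fits_limits(shelf_slots, disk_sizes_tb):
    """Return True if a configuration stays within both limits.

    shelf_slots   -- slots per shelf (12 or 25), controller shelf included
    disk_sizes_tb -- nominal size in TB of every installed disk
    """
    total_slots = sum(shelf_slots)
    raw_tb = sum(disk_sizes_tb)
    return total_slots <= MAX_SLOTS and raw_tb <= MAX_RAW_TB


sixteen_lff = [12] * 16                        # 192 slots
print(fits_limits(sixteen_lff, []))            # True
print(fits_limits(sixteen_lff + [12], []))     # False: 204 slots
print(fits_limits(sixteen_lff + [25], []))     # False: 217 slots
print(fits_limits([25] * 8, []))               # True: exactly 200 slots
print(fits_limits(sixteen_lff, [4] * 192))     # False: 768 TB raw
```

The empty shelf case is deliberate: because the check counts slots, a shelf is rejected regardless of how many disks it actually holds.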

On interfaces: the new system is one of the first at EMC to support 16 Gb/s Fibre Channel, while there is no 10 GE BASE-T support, although the VNXe3200 had such ports built in.

Let's look at the hardware in more detail.

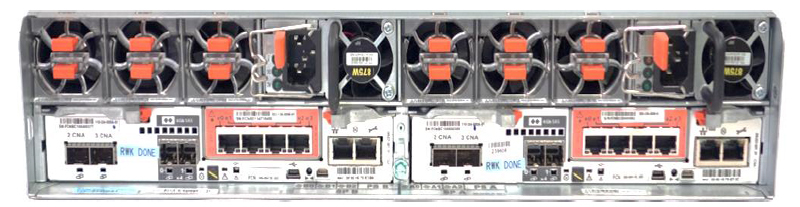

This is what the controller shelf (DPE - Disk Processor Enclosure) looks like from the back. There is nothing particularly interesting at the front except the bezel with blue backlight, which hides 12 3.5” or 25 2.5” disks.

Figure 4. Rear view of the controller shelf (DPE)

The system can be divided into two halves whose components are designated A and B: on the right and on the left respectively, when viewed from behind. On the system itself this is marked with arrows.

The upper part of the shelf holds the power and cooling unit, which consists of three fan modules (1) and the power supply itself (2); the lower part holds the controller with its ports. Each power supply can power the entire shelf. For the system not to start saving the cache and shutting down, at least one of the power supplies and at least two fan modules per controller must be working.

As in other VNXe-series systems, the cache is protected by batteries (BBUs) installed in the controller itself. The job of such a battery is not to keep the system running or to keep the memory powered after shutdown, but to let the system dump the contents of the cache to the built-in solid-state drive (the one the system boots from).

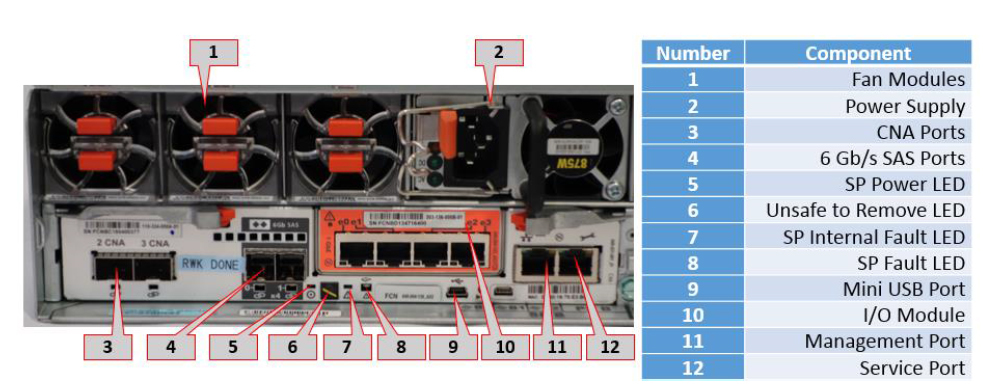

Figure 5. DPE components (half system shown)

Each controller has two built-in SFP+ ports designated as CNA (3), i.e. the ports are universal and can be configured as 8 or 16 Gb/s Fibre Channel or as 10 GE, with the corresponding SFP transceivers installed. This configuration is set when ordering, i.e. it cannot be changed on site (by the customer, at least at the moment).

An additional interface module (10) can be installed; in the base configuration the slot holds a filler panel. It would be more accurate to say not an “additional module” but “additional modules”, since the expansion installs a pair of identical modules, one in each controller.

At launch, three modules are available, all 4-port: 8 Gb/s Fibre Channel, 10 GE optical Ethernet, or GE Base-T. A module can be ordered initially or added later as an upgrade. Installing a module requires removing the controller, but since there are two controllers, this can be done as a non-disruptive upgrade in terms of data availability.

The two square ports (4) are 6 Gb/s SAS ports, i.e. the system has two back-end (BE) buses for connecting disk shelves. The system shelf itself is shelf #0 on bus #0. The connectors have the SAS HD form factor.

The set of indicator lights (not worth covering in detail) includes a crossed-out hand icon (Unsafe to Remove), which lights up when the controller must not be removed in order not to lose cache data: for example, if the other controller has already been removed, or while the cache is being started up or saved. Note that “single-controller” configurations are no longer offered for the VNXe1600, although they were possible for earlier junior systems of the line.

There is an Ethernet port for management and a service port. Like the VNXe3200, there is no hardware serial port, and if necessary, service intervention uses a virtual console on top of Ethernet.

Disk subsystem

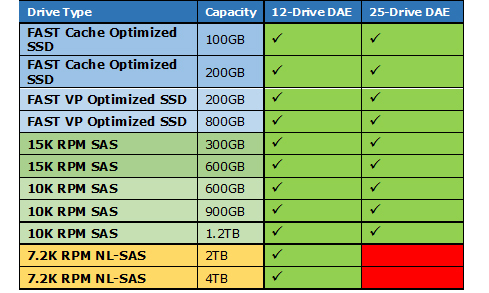

Disk shelves, like the system one, can be of two types:

- 25 2.5” drives

- 12 3.5” drives

Figure 6. Shelf with 25 2.5" disks.

Figure 7. Shelf with 12 3.5" disks.

Disk shelves are connected to the controller via SAS with even distribution on two BE-buses. From the point of view of addressing, the system shelf itself is shelf # 0 on bus # 0.

Table 2. Maximum configuration of disk shelves and the number of disks for them.

SAS, NL_SAS, or Flash disks are available. In fact, all of these drives have a SAS interface for connecting to the disk shelves, but every vendor tries to teach people its own terminology. To make it easier to follow:

SAS - spinning disks with a rotation speed of 10K or 15K rpm.

NL_SAS - high-capacity disks with a rotation speed of 7200 rpm. Available only in the 3.5” form factor.

Flash - solid-state drives. There are two types: those that can be used as FAST Cache (SLC drives with higher endurance and reliability), and those that do not support it but are somewhat less expensive (FAST VP Flash). Drives that support FAST Cache can also be used to build a pool if desired.

Table 3 Supported drive types

The table shows that high-capacity drives are available only in the 3.5-inch form factor, and the rest in either. This is understandable: nowadays a 3.5-inch drive is often a 2.5-inch drive in a matching carrier.

Configuring the disk subsystem comes down to configuring pools. A pool is a set of disks of the same type (since the system does not support FAST VP), on which virtual volumes (LUNs) are then created. That is, when a pool is created, the system carves out one or more RAID groups inside it with the specified protection type and presents them to the user as a single space for placing volumes (LUNs). Capacity for snapshots can also be reserved within the pool.

The hot-spare policy is not configurable and follows the usual EMC guidelines: 1 hot spare per 30 drives of the corresponding type.
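As a rough illustration of the rule, the number of spares for one drive type could be estimated as below. The rounding is an assumption: the source only says "1 per 30", so this sketch assumes one spare for every started block of 30 drives, and the function name is hypothetical.

```python
import math

DRIVES_PER_SPARE = 30  # "1 hot spare per 30 drives" from the text


def hot_spares_needed(drive_count):
    """Spares for one drive type, assuming one per started block of 30."""
    if drive_count <= 0:
        return 0
    return math.ceil(drive_count / DRIVES_PER_SPARE)


print(hot_spares_needed(14))   # 1
print(hot_spares_needed(30))   # 1
print(hot_spares_needed(31))   # 2
```

Each drive type (SAS, NL_SAS, Flash) would be counted separately, since the policy applies per type.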

Target sizes of RAID groups - i.e. the number of disks that are recommended to be combined into a pool when creating it:

- RAID 5: 4 + 1, 8 + 1, 12 + 1

- RAID 6: 6 + 2, 8 + 2, 10 + 2, 14 + 2

- RAID 1/0: 1 + 1, 2 + 2, 3 + 3, 4 + 4

When creating a pool, you can specify the desired size. From the description it is not yet entirely clear whether this will be a hard restriction, as in the other VNXe models, or whether, if the number of disks is not a multiple, the system will create slightly uneven RAID groups, as in the larger VNX systems. So far it appears that only multiples can be specified. On VNXe systems, this template restriction was apparently driven by optimization for the file functionality. I won't go into the subtleties here, especially since I am not 100% sure how VNXe works internally, but the point is that these restrictions are not a whim but a technical optimization.
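Assuming the strict reading (only exact multiples of a template are accepted), a quick way to see which templates a given disk count satisfies might look like this. The table reproduces the group sizes listed above; the function and dictionary names are hypothetical, and this is not the logic the array itself uses.

```python
# Group templates (data disks, protection disks) from the list above.
TEMPLATES = {
    "RAID5": [(4, 1), (8, 1), (12, 1)],
    "RAID6": [(6, 2), (8, 2), (10, 2), (14, 2)],
    "RAID10": [(1, 1), (2, 2), (3, 3), (4, 4)],
}


def matching_templates(raid_type, disk_count):
    """List (template, group_count) pairs where disk_count is an exact multiple."""
    result = []
    for data, protection in TEMPLATES[raid_type]:
        width = data + protection
        if disk_count >= width and disk_count % width == 0:
            result.append((f"{data}+{protection}", disk_count // width))
    return result


print(matching_templates("RAID5", 10))   # [('4+1', 2)]
print(matching_templates("RAID5", 12))   # []
print(matching_templates("RAID6", 16))   # [('6+2', 2), ('14+2', 1)]
```

An empty result, as for 12 disks in RAID 5, is the case where the strict and the "uneven groups" interpretations would behave differently.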

One inherited feature is important to be aware of: the first four disks are system disks. Part of their capacity is used for internal needs, and in some operations these disks behave slightly differently from the others. The usable capacity of the system disks is lower, and if they are combined into one RAID group (a group, not a pool, even though the VNXe ideology suggests not thinking about groups) with other disks, the same capacity is "lost" on those disks too. The reserve takes about 82.7 GB per disk. For example, if the first 4 disks are 900 GB nominal (about 818.1 GB usable) and are configured as RAID 1/0, the usable capacity is 1470.8 GB (735.4 GB per system disk), while the same group on non-system disks yields about 1636.2 GB (818.1 GB per disk). If the system has only 14 disks of 900 GB and we choose 12 + 1 plus a hot spare for a RAID 5 pool, we get 8824.8 GB of usable capacity, having also lost 82.7 GB on each of the nine non-system disks. Why is such a reserve needed if the system boots from a solid-state drive and saves the cache to it? It is not entirely clear. Perhaps this is an additional safeguard, and the developers decided not to break the existing model completely for now.
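The arithmetic in the paragraph above can be reproduced with a few lines. This is a sketch using only the figures from the text (82.7 GB reserve, 818.1 GB usable per 900 GB disk); the function name is hypothetical, and real sizing should come from the vendor's capacity calculator.

```python
SYSTEM_RESERVE_GB = 82.7   # per-disk reserve quoted in the text
USABLE_900_GB = 818.1      # usable capacity of a 900 GB nominal disk


def group_usable_gb(data_disks, disk_usable_gb, has_system_disk):
    """Usable capacity of one RAID group.

    data_disks      -- disks' worth of capacity left after parity or mirroring
    has_system_disk -- True if any of the first four disks is in the group;
                       in that case every member loses the reserve
    """
    reserve = SYSTEM_RESERVE_GB if has_system_disk else 0.0
    return round(data_disks * (disk_usable_gb - reserve), 1)


# RAID 1/0 (2+2) on the four system disks: 2 x (818.1 - 82.7)
print(group_usable_gb(2, USABLE_900_GB, True))     # 1470.8
# The same group on non-system disks: 2 x 818.1
print(group_usable_gb(2, USABLE_900_GB, False))    # 1636.2
# RAID 5 (12+1) that includes the system disks: 12 x 735.4
print(group_usable_gb(12, USABLE_900_GB, True))    # 8824.8
```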

Hint: when planning a system configuration, ask the vendor to calculate the required layout. Partners have a capacity calculator in which the required sets of disks and groups can be specified; it produces a PDF that shows in detail how everything is laid out and how much space will be available.

Figure 8. The Capacity Calculator draws the layout and calculates usable capacity and rack height

FAST Cache is available: functionality that extends the cache using solid-state drives, although the configuration is limited to a maximum of two solid-state drives. A separate RAID 1/0 group is created for it (1 + 1 in the case of two drives). That is, taking mirroring into account, the capacity can be 100 or 200 GB nominal. "Nominal" because a drive labeled 100 GB yields about 91.69 binary GB, and a 200 GB drive about 183.41 GB. The exact capacity figures are in the specification sheet for the model.

Functionality

Let us go over some of the key features of the system. In general, for many EMC storage systems a significant part of the functionality appears some time after the launch of the system itself. I don't know whether this will be true for the VNXe1600: I have not seen the roadmaps, and if I had, I could not tell.

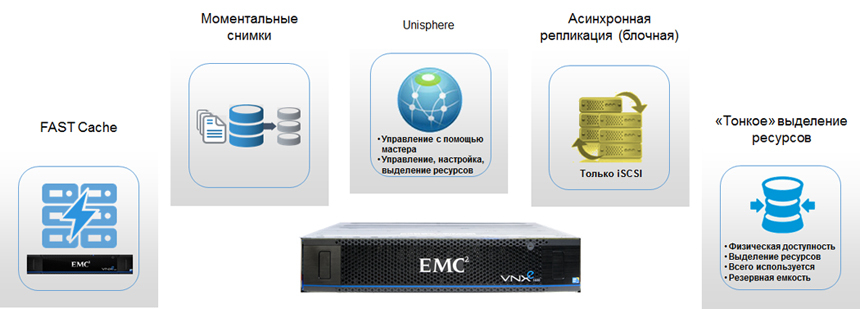

Figure 9. VNXe1600 system functionality

The functionality listed below is included in the base system delivery. As for optional functionality, at the moment you can choose EMC PowerPath: software installed on the server that provides multipathing (path failover and load balancing).

Protocols for accessing data: iSCSI and Fiber Channel.

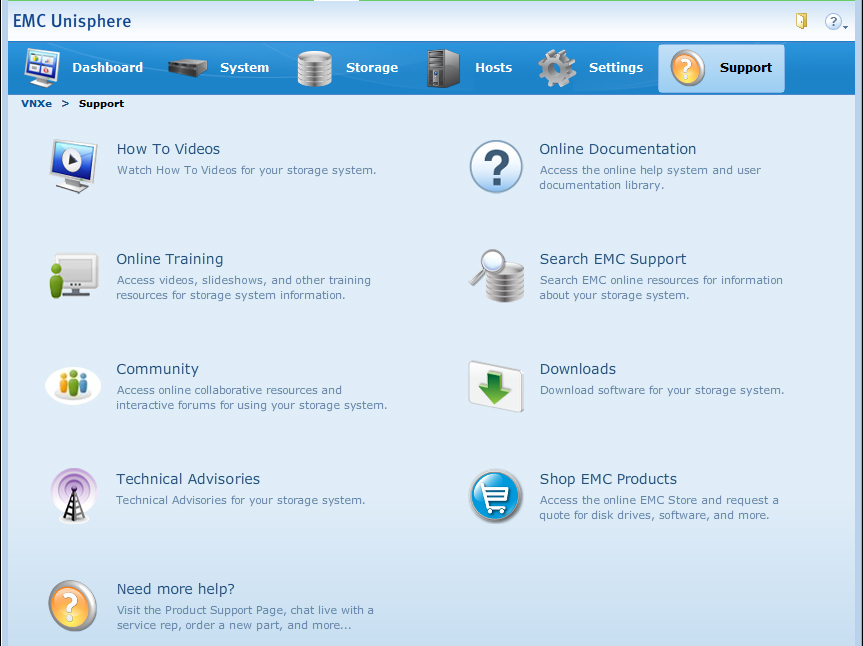

Unisphere - the system can be managed both through the built-in web interface and through the Unisphere CLI command line (for those who want to script something, for example). The management interface is similar to that of other VNXe systems and is indeed quite simple. In addition to management, the interface provides monitoring, including performance metrics. For large infrastructures, centralized management of heterogeneous systems (VNX, VNXe, CLARiiON CX4) is supported through Unisphere Central, a separate management server deployed as an appliance in a virtualized environment.

Figure 10. Unisphere management web interface

System management uses the RBAC model with a set of predefined roles. Integration with LDAP is possible to authenticate users when accessing the management interface.

FAST Cache - functionality for using solid-state drives as a cache extension. Support is limited to using only two SLC solid state drives - i.e. maximum up to 200 nominal GB.

Thin Provisioning - the ability to create “thin” volumes, for which capacity is actually allocated as it is used, and thus to oversubscribe capacity. This is by now more than standard for modern storage systems.

Snapshots - the ability to create copies of volumes in the form of snapshots. They use ROW (Redirect on Write) technology, i.e. the presence of snapshots does not slow down the main LUN. There is built-in functionality for creating consistent snapshots of a group of volumes.
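To show why redirect-on-write avoids extra work in the write path, here is a toy model. It is a conceptual sketch only: the class and its fields are invented for illustration and say nothing about how the VNXe actually lays out data, and it omits space reclamation entirely.

```python
class RowVolume:
    """Toy redirect-on-write volume: blocks are never overwritten in place."""

    def __init__(self):
        self.store = {}        # physical location -> data
        self.live_map = {}     # logical block -> physical location
        self.snapshots = {}    # snapshot name -> frozen copy of the block map
        self._next_loc = 0

    def write(self, block, data):
        # New data always goes to a fresh location; only the live map changes,
        # so no old data has to be copied aside during the write.
        loc = self._next_loc
        self._next_loc += 1
        self.store[loc] = data
        self.live_map[block] = loc

    def snapshot(self, name):
        # A snapshot is a copy of the pointers, not of the data.
        self.snapshots[name] = dict(self.live_map)

    def read(self, block, snapshot=None):
        block_map = self.live_map if snapshot is None else self.snapshots[snapshot]
        return self.store[block_map[block]]


vol = RowVolume()
vol.write(0, "v1")
vol.snapshot("before")
vol.write(0, "v2")
print(vol.read(0))             # v2
print(vol.read(0, "before"))   # v1
```

By contrast, a copy-on-write scheme would have to copy the old block aside before each first overwrite, which is the extra write that ROW avoids.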

Asynchronous Replication - asynchronous replication is supported at the level of LUNs or VMFS datastores (these are the same LUNs, but they are listed separately) between VNXe1600 and VNXe3200 storage systems. The asynchronous replication mechanism actually relies on snapshots and transfers the difference between them. Accordingly, consistency groups can be used. Synchronization can be scheduled automatically at a given interval or performed on the user's command.
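The idea of replicating by shipping the difference between two snapshots can be sketched as follows. This is a simplified model under stated assumptions: snapshots are represented as plain dictionaries of block contents, both function names are hypothetical, and deleted blocks are not handled.

```python
def changed_blocks(prev_snapshot, new_snapshot):
    """Blocks to ship: those that are new or different in new_snapshot.

    Each snapshot is modeled as a dict: logical block -> content.
    """
    return {
        block: data
        for block, data in new_snapshot.items()
        if block not in prev_snapshot or prev_snapshot[block] != data
    }


def apply_delta(replica, delta):
    """Apply a shipped delta to the destination copy and return it."""
    replica.update(delta)
    return replica


prev = {0: "a", 1: "b"}
new = {0: "a", 1: "c", 2: "d"}
delta = changed_blocks(prev, new)
print(delta)                              # {1: 'c', 2: 'd'}
print(apply_delta(dict(prev), delta))     # {0: 'a', 1: 'c', 2: 'd'}
```

Only the two changed blocks cross the link at each synchronization interval, which is why the interval largely determines how much data is at risk on the destination side.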

Virtual Environment Support - in keeping with tradition, the VNXe system offers the fullest integration with VMware, including: VMware Aware Integration (VAI), the ability to see VMware data from the VNXe interface (which datastore resides on a LUN, which virtual machines are on it, and so on); Virtual Storage Integrator (VSI), an additional plug-in for the VMware vSphere client that works the other way around, making it convenient to view and manage storage objects from the VMware interface; VMware API for Array Integration (VAAI), which offloads some tasks from the server to internal storage processes, such as copying virtual machines, zeroing blocks, locking, and thin-provisioning awareness.

Quick start

After the system is mounted, it must be initialized using a special utility, the Connection Utility, which is supplied with the system and is also available on the vendor’s support site. The utility uses broadcast (so you must be connected to the same network segment) to find the storage system, connects to it, and lets you set the management IP address.

After that, configuration can continue through the web interface at the assigned IP address. At first launch, a wizard starts that asks step by step about most of the infrastructure settings and helps you quickly configure the basics.

There are no sophisticated settings: just configure the pools and create storage resources on them. Moreover, when the wizards are used, the system can, for example, automatically present a new LUN as a VMware datastore right after creation; you do not need to rescan adapters and map LUN numbers separately from the vSphere management interface.

Global support

To receive timely support, the storage system must be registered and displayed correctly on the manufacturer's support portal, support.emc.com. If this is your first EMC product, additional registration on the portal will be required. If everything is done correctly and the system has a route to the Internet, most of the information can be accessed directly from the storage system interface, including how-to videos and access to forums.

For almost all of its products, EMC holds that Call-Home and Dial-In should be properly configured to ensure a timely response to problems. Call-Home means sending messages from the system to the EMC global support center. Dial-In means the ability for global support specialists to connect remotely to diagnose and solve problems. In the minimal variant, Call-Home sends e-mail messages, and Dial-In is implemented through the WebEx web-conferencing system. The recommended option is EMC Secure Remote Support Gateway (ESRS), a dedicated EMC product that establishes a secure HTTPS tunnel between the customer infrastructure and the global support infrastructure. In general, such a server is now deployed as a virtual appliance, but VNXe systems have the component built in, so nothing extra needs to be installed.

Conclusions and possible applications

The new storage system fills a niche in the EMC product line: a purely block entry-level storage system. Previously, EMC covered this niche with the CLARiiON AX4-5 and VNX5100 systems. There is steady demand for such storage systems, so this one will be in demand too. The system was officially announced on August 10, but we have not yet had our hands on it and have no customer feedback yet.

A decent scaling reserve and range of disks make it possible to solve a fairly wide range of tasks that do not require advanced functionality on the storage side. Possible areas of application, at first glance, include:

• An entry-level block system: a good option for replacing existing block storage systems of previous generations whose support has ended and where advanced functionality is not required;

• A good option for a new entry-level system for data consolidation, where there is no need for file protocols, or it is considered more convenient to implement them independently on file servers.

• Perhaps a simple back-end storage for the file server.

• A relatively inexpensive solution for building clusters, primarily for virtual infrastructures.

• Storage for video surveillance systems (in practice, these are often built with a block SAN connection rather than a NAS), thanks to the ability to combine high-capacity disks with, possibly, fast ones (if the system architecture also includes a database or directory).

• Perhaps there will be interesting All-Flash storage configurations that are more relevant for the NAS than for the SAN.

• A possible option for Backup to Disk storage connected to a backup server. Although we ourselves primarily favor EMC Data Domain with client-direct, that is a different story.

Links to vendor resources:

White Paper: Introduction to the EMC VNXe1600. A detailed Review

Specification Sheet: EMC VNXe1600 Block Storage System