New Free Intel® DAAL Data Analytics Library

Today, the first official release of the new Intel data analytics library, Intel Data Analytics Acceleration Library, was released . The library is available both as part of Parallel Studio XE packages, and as an independent product with a commercial and free (community) license. What kind of animal is it and why is it needed? Let's get it right.

Where is Intel DAAL needed?

Today, there is a whole science of data (data science), which studies the problems of processing, analysis and presentation of data. It includes many different areas, such as statistical methods, data mining, machine learning, theory of pattern recognition, artificial intelligence (AI) applications, and so on.

Moreover, all these areas of research have a lot of intersections, but there are differences. So, statistics is based on a theory more than data mining, and focuses on testing hypotheses. Machine learning is more heuristic and focuses on improving the performance of learning agents. And data mining represents the integration of theory and heuristics, focusing on a single process of data analysis, including data cleaning, training, integration and visualization of results. Intel DAAL will be of interest to anyone related to data science and its fields.

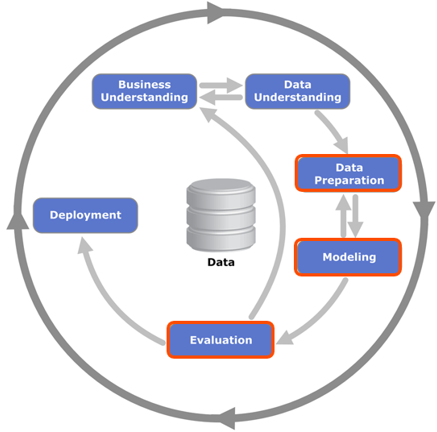

There are standards for data mining, the most common of which is the cross-industry standard process for data mining CRISP-DM (Cross Industry Standard Process for Data Mining). According to this standard, the data analysis process is iterative and includes 6 stages: business understanding, data understanding, data preparation, modeling, evaluation, implementation ( deployment).

The DAAL library is intended mainly for the stage of data preparation, modeling and evaluation of results, if we talk about the presentation of data mining methods within the framework of this standard. At the same time, it is optimized using the algorithms of the Intel Math Kernel Library and Intel Integrated Performance Primitives.

Problems and Solutions

Why DAAL and why should this library appeal to developers?

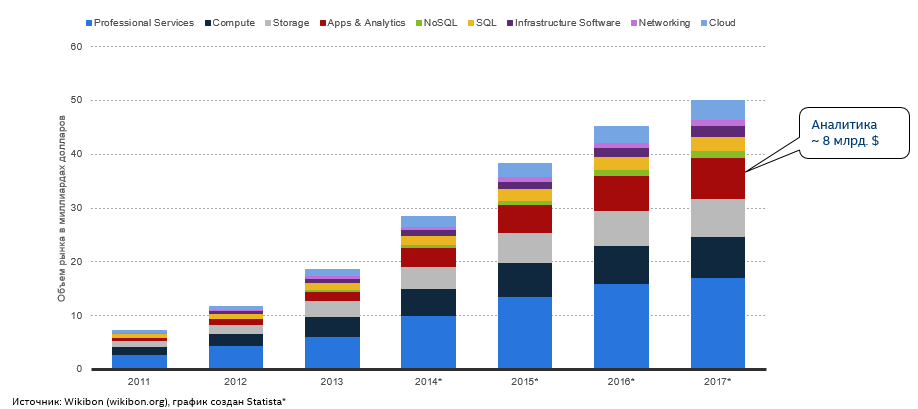

In the field of data analytics, there are now a huge number of different technologies and tools. This is quite natural, given the growth rate of this industry:

Interesting statistics from Wikibon: by 2017, the big data market will amount to about 50 billion US presidents, of which 8 are software and analytics.

Storage of data received from a large number of different sources is implemented both by means of traditional relational DBMSs with access to data using SQL, and non-traditional NoSQL (not only SQL). In addition, data can be immediately stored in memory. To process this data, large frameworks such as Hadoop, Spark, Cassandra and so on are now used.

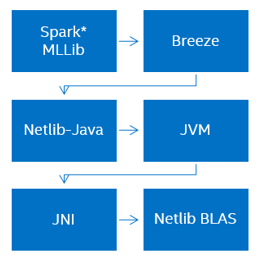

There is one big problem with current solutions, namely performance. Consider, for example, the open-source Spark framework, or rather, the Spark MLLib machine learning library.

Spark MLLib is written using the Scala language and uses another open source Breeze linear algebra package, which depends on Netlib-Java, which is a Netlib wrapper for Java. As a result, Netlib BLAS is used, the implementation of which is usually consistent and not optimized. Obviously, we have the problem of too many dependencies, "multi-layer" and poor performance.

Intel's idea is to create one library for working at all stages of data analytics, excluding similar multilayer implementations, while optimizing it for hardware:

Using this solution should give us a significant performance boost. If we compare the implementation of Intel DAAL with the same Spark MLlib using the example of the principal component analysis (PCA) method, the resulting acceleration can be from 4 to 7 times, depending on the size of the data table:

Main components

Intel DAAL supports C ++ and Java, as well as Windows, Linux, and OS X. It can be used with any platform, such as Hadoop, Spark, R, Matlab, and others, but is not tied to any of them. In addition, there is support for local and distributed data sources, including CSV in files and in memory, MySQL, HDFS, and Resilient Distributed Dataset (RDD) objects from Apache Spark *.

The library consists of three main components: Data Management, Algorithms and Services.

Data management

This includes classes and utilities for obtaining data, primary processing and normalization, as well as their conversion to a numerical format. DAAL algorithms work with data in a special form - data tables. Therefore, the very first step when working with the library will be the conversion of data into these very tables.

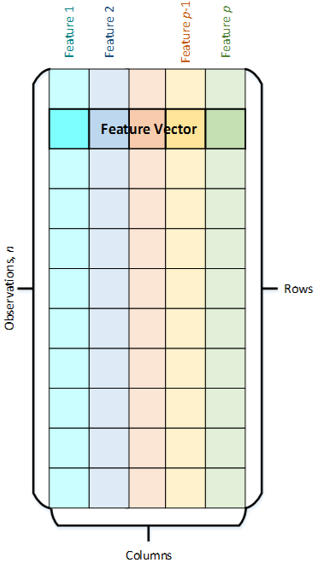

What are they like? Each object is characterized by a set of attributes (Features) - properties that characterize the object. For example, eye color, age, water temperature and so on. A set of attributes forms a Feature Vector of size p. These vectors, in turn, form a set of observations (Observations) of size n. In DAAL, data is stored in the form of tables in which the rows are Observations, and the columns are Properties.

Algorithms

Algorithms consist of classes that implement data analysis and modeling. These include decomposition, clustering, classification and regression algorithms, as well as associative rules.

Algorithms can be executed in the following modes:

- Batch processing

Algorithms work with the entire data set at once and produce the result. All library algorithms support this mode. - Online processing

There are more complex cases in which all data is not immediately available in its entirety, or, for example, is not stored in memory. In this case, a mode can be used in which work with data occurs in blocks that are loaded into memory gradually. Not all library algorithms have an online implementation. - Distributed Processing

Data is distributed across multiple compute nodes. An intermediate result is calculated on each node, which are eventually combined on the main node. As with online processing, not all library algorithms have a distributed implementation, but Intel engineers are working on it.

Services

Services contain classes and tools used in algorithms and data management. This includes various classes for allocating memory, handling errors, implementing collections, and common pointers.

Total

Intel DAAL library has many different features, and to talk about them in a single post will not work. I just showed why it is needed, for what purpose it appeared on the market and examined its main components. I would like to hear questions and comments of those who are interested in this issue and continue the conversation about this interesting library. Plans to talk about DAAL algorithms, as well as show code examples using it.

I note that today Intel DAAL is the only library for data analytics optimized for Intel architecture.