About how we tested static analysis on our project of a training simulator of endovascular surgery

I want to share with you a story about how we tested the PVS-Studio static code analyzer in our project and tried to determine what benefits can be derived from this tool. This article will not describe unique and interesting software errors for specialists. All bugs and shortcomings found in the code by static analysis turned out to be quite prosaic. I will describe here a look at this tool from the point of view of the project manager. Perhaps this view is not as accurate and unambiguous as the engineer’s assessment: the features of the organization of work in a particular project have their effect. But still, I think that the thoughts presented in the article may turn out to be interesting to those who think about using static analysis in their work. Or for those who encounter significant loss of resources on error fixes in their projects,

Introduction

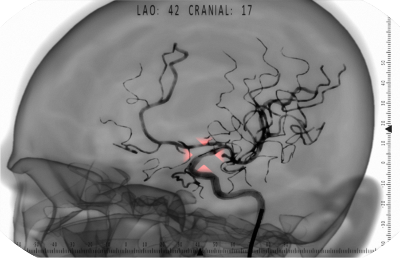

I work for Eidos-Medicine, a company that specializes in the development of virtual medical simulations. These are special hardware and software systems that allow you to simulate the performance of various surgical interventions in the educational process. The use of simulators allows medical students and interns to obtain the first practical skills in their specialty before being entrusted to a living patient. Our design team is developing a simulator of endovascular interventions. This area includes a lot of types of operations on blood vessels performed under the control of fluoroscopy: angioplasty, stenting, spiral embolization of aneurysms, endoprosthesis replacement of aortic aneurysms.

Our team has been working on the project in its current composition for a year and a half. Everything goes on as usual. Consulting surgeons are working with the analyst on the tactics of surgical interventions step by step, working with the requirements for the visual component. A 3D artist, using CT angiography, anatomical atlases and surgeons' advice, creates models for new clinical cases of the simulator. Top-level programmers are working on the visualization of fluoroscopy, the physics of the movement of endovascular instruments inside the arteries, a logical analysis of the actions of the cadet on the simulator to fix the correctness of the stages of the intervention. Circuit designers, microcontroller programmers and design engineers provide the work of various simulators of medical equipment used in the simulation, collecting data from sensors, their preliminary processing and sending to the program. In response, at the upper level, information is prepared for transmission to the controller, on the basis of which the indication on the equipment and the effects of tactile feedback corresponding to the course of the virtual intervention are provided, designed to minimize the conventions of the educational process.

And when the work is done, compiled, soldered, tied, milled and assembled, its results are sent to the tester. Testing with us is mostly manual. Automated tests minimum. Throughout the course of the development of the new version, the tester checks on his computer the current revisions of the program for performance, stability and adequacy of work. This is necessary in order to catch dangerous commits in time, since iterations on the version are quite long. However, the main testing of the release candidate still takes place on the simulator itself. There may be surprises. Someone misunderstood someone at the coordination of the communication protocol with the controller. Or the dynamics of the movement of tool simulators when working on the simulator is slightly different from the debugging control from the keyboard, and this "little" results in critical problems for the physical engine. Or, some third-party libraries used in the new version were not reported to the distribution. There can be many surprises, but, of course, trouble champions are floating errors that result in a program crash or critical problems that prevent the cadet from performing the exercise on the simulator in normal mode.

However, a lot of time is spent on fairly simple, easily calculated bugs. When adding new functionality, new errors are often introduced into the program. Most of them are caught during the work on the version, in the process of daily regression testing of the project. Having discovered a new error, the tester must determine which of the developers is responsible for it (which, incidentally, is not always obvious), and create a task in Redmine. After the programmer understands the problem and commits the fix, it will be necessary to carry out additional checks, confirming that the problem is really solved and that it can be closed. In total, it turns out in no way less than half a man-hour for the most trivial case. This is if the bug is reproduced quickly, and the programmer immediately understands what the problem is and what needs to be fixed in the code. If it takes 20-30 minutes to reproduce the error, then it will entail the loss of two man-hours, even in the case of the fastest and most trivial fix. Significant enough losses. And all the more offensive, because the causes of such errors often lie in the usual carelessness.

Static code analysis in the project

The idea to try to use a static code analyzer in the project came to me not by myself. It was brought to me by a colleague from the C ++ Russia conference, where he met the guys from PVS-Studio. I paused to think and release the current version, and then decided to try it all the same. I contacted the developers of PVS-Studio by mail and after a short correspondence I received a license key for a period of two weeks, and we started checking our project.

Here a few words about the features of the architecture of the project. We don’t have much code in C ++. Only about fifty libraries, but some of them contain just a few dozen lines of code. A significant part of the program logic is in the environment of the graphics engine. We integrate C ++ code into the project through the DLL. Thus, we implement some specific functionality that is not represented in the environment of the graphics engine. In addition, we transfer to the DLL complex or resource-intensive algorithms for dynamically changing the frame by frame or creating polygonal grids for rendering endovascular catheters and guides, simulating heartbeats and respiratory movements. In C ++, the logic of exercises simulating surgical interventions is also written, which ensures tracking of the stages of the operation and the correctness of the actions of the cadet. As a result, it turns out that the project has several very small C ++ libraries and several medium ones containing 2-3 thousand each. For that part of the program logic that is in the environment of the graphics engine, interesting tools for static code analysis are not available. Therefore, we tested with PVS-Studio only part of the project.

PVS-Studio got up on my computer easily and effortlessly and integrated into Visual Studio 2013. Andrey Karpov from the PVS-Studio team sent me a link to the user manual and something like the Quick Start Guide, which was even redundant, as it’s been hard to figure out The interface and functionality of a static analyzer can only be done using intuition and scientific poke technology.

After 15 minutes, I already checked the DLL code, which is responsible for modeling the process of spreading X-ray contrast material through the arteries. This library contains about 4 thousand lines of code. I was somewhat surprised that the analyzer did not record any errors of the first level in Solution. However, it should be noted that this solution has already passed dozens of hours of testing and has been working stably lately. So, what does the static analyzer draw our attention to:

V550 An odd precise comparison: t! = 0. It's probably better to use a comparison with defined precision: fabs (A - B)> Epsilon. objectextractpart.cpp 3401

D3DXVECTOR3 N = VectorMultiplication(

VectorMultiplication(V-VP, VN), VN);

float t = Qsqrt(Scalar(N, N));

if (t!=0)

{

N/=t;

V = V - N * DistPointToSurface(V, VP, N);

}Similar errors are repeated quite often in this library. I will not say that this came as a surprise to me. Already previously I encountered incorrect work with floating-point numbers in this project. However, there were no resources to systematically check the sources for this. According to the results of the verification, it became clear that you need to give the developer something to read to broaden his horizons in terms of working with floating-point numbers. I threw him links to a couple of good articles. Let's look at the result. It is difficult to say unambiguously whether this error causes real malfunctions in the program. The current solution sets a number of requirements for the initial polygonal network of arteries, along which the spreading of a radiopaque substance is simulated. If the requirements are not met, then the program may crash or obviously incorrect operation. Some of these requirements are obtained analytically, and some empirically. It is possible that this second part of the requirements grows just from incorrect work with floating-point numbers. It should be noted that not all found cases of using exact comparison of floating-point numbers were an error.

V807 Decreased Performance. Consider creating a reference to avoid using the 'Duct.TR [cIT]' expression repeatedly. objectextractpart.cpp 2689

for (k = 0; k < Duct.LIsize; k++)

{

cIT = Duct.ListIT[k];

if(DuctMain.TR[cIT].inScreen &&(Duct.TR[cIT].PNum > OneDev512))

{

tuv[0].y = Duct.TR[cIT].v0 * Duct.TR[cIT].PNum;

....

}

....

}About 20 similar messages in Solution. Interestingly, this library has very high performance requirements. In functions that work with vectors and matrices, literally every multiplication was considered at one time and looked for where to save. The loop in this code example goes through a very large number of iterations: up to tens of thousands. It is part of the particle system algorithms that provides angiography rendering. The peculiarity of the visualization of the radiopaque substance in the fluoroscopy picture is that the vessels directed perpendicular to the plane of the frame look darker. In this case, the x-ray radiation passes along the vessel, that is, it passes through a larger layer of the absorbing medium, is more attenuated, and less illuminates the film in the projection. This effect in the program is achieved through a system of translucent particles, arteries distributed inside the polygonal mesh. Polygonal meshes are very detailed. Particles in the system, respectively, are also very large. It will be interesting to conduct an experiment. Can we win a millisecond or two by correcting these inelegant places in the code? Perhaps the compiler does this optimization automatically, but why not try to give it a hint.

V669 Message: The 'cIT', 'j' arguments are non-constant references. The analyzer is unable to determine the position at which this argument is being modified. It is possible that the function contains an error. objectextractpart.cpp 2406

D3DXVECTOR3

ObjectExtractPart::GetD(D3Object& Duct, int& cIT, int& j){

return DuctMain.VP[DuctMain.TR[cIT].IP[2]].P

+ (

DuctMain.VP[DuctMain.TR[cIT].IP[0]].P

- DuctMain.VP[DuctMain.TR[cIT].IP[2]].P + (

DuctMain.VP[DuctMain.TR[cIT].IP[1]].P

- DuctMain.VP[DuctMain.TR[cIT].IP[0]].P

) * Duct.TR[cIT].tt[j].x

) * Duct.TR[cIT].tt[j].y

+ DuctMain.TR[cIT].CNR * Duct.TR[cIT].tt[j].z;

}In this case, the code is correct. The programmer’s error is only in the incorrect description of the function parameters. They should have been const int &.

Finding surprisingly few critical errors in the first test subject, we moved on to the more actively expanding Solution at the moment. Our next test subject consists of eight libraries that provide data transfer about what is happening during a virtual intervention from the graphics engine to the logic code of exercises for simulating surgical interventions. Data transfer in the opposite direction is also hanging on the same libraries, for example, to notify cadet errors or to signal the completion of the intervention phase. Due to this, we can write the logic of the exercises themselves only in C ++ without contacting the environment of the graphics engine.

Here the harvest was already more impressive, and we really found a couple of very dangerous places in the code:

V595 Message: The '_idiChannel' pointer was utilized before it was verified against nullptr. Check lines: 917, 918. logicinterface.cpp 917

int instType =

_idiChannel->GetActiveInstrumentTypeInGroup(instrumentId);

if (_alogChannel != NULL && _idiChannel != NULL) {

....

}The place of potential program crash. Testing did not reveal this error earlier due to the fact that until now when the application was working at this stage, the _idiChannel pointer was not NULL. But no one guarantees that with further development the situation will not change, and this bug previously put into the project will manifest itself.

V688 The 'chCameraMatrix' local variable possesses the same name as one of the class members, which can result in a confusion. angiographlog.cpp 323

class ANGIOGRAPHLOG_API AngiographLog: public ILogic

{

....

Aco_Matrix* chCameraMatrix;

Aco_Matrix* chProjectionMatrix;

....

}

D3DXMATRIX AngiographLog::GetCameraMatrix() {

D3DXMATRIX res;

Aco_Matrix* chCameraMatrix=(Aco_Matrix*)GetChild(CameraMatrix);

if ( chCameraMatrix != NULL) {

res = chCameraMatrix->GetMatrix();

}

return res;

}Four such positives in Solution in different files. In this case, it did not lead to errors. But in the future it could lead to misunderstanding and use of uninitialized pointers in the code.

V522 Dereferencing of the null pointer 'chInstrumentSubLineLengthIn' might take place. instrumentdatainterface.cpp 239

D3DXVECTOR3 InstrumentDataInterface::GetSubLineEndPos(....)

{

....

if(chInstrumentSubLineLengthIn != NULL)

chInstrumentSubLineLengthIn->SetFloat(subLineLengthIn);

else

chInstrumentSubLineLengthIn->SetFloat(0.0F);

....

}Here, as I imagine it, the developer first wrote the first two lines of code. Then he was distracted by something. Maybe even something very important. Returning again to work on the code, he had already added obvious stupidity. It happens. And in the meantime, a place for a potential program crash appeared in the code.

Dangerous places were discovered in the code due to incorrect work with pointers, and in other libraries:

V614 Potentially uninitialized pointer 'tabAntiPowerSpheres' used. getnewposbyheartbeat.cpp 175

void GetNewPosByHeartBeat::_precalc()

{

....

STL_Table *stlAntiPowerSpheres;

CSTL_Table *tabAntiPowerSpheres;

stlAntiPowerSpheres = (STL_Table *)GetChild(....);

if (stlAntiPowerSpheres != NULL)

tabAntiPowerSpheres = stlAntiPowerSpheres->getSTL_Table();

if (tabAntiPowerSpheres != NULL)

{

int tableSize = tabAntiPowerSpheres->getRowCount();

....

}

....

}This time the error is a little less obvious. If stlAntiPowerSpheres turned out to be NULL, then tabAntiPowerSpheres remains uninitialized, and indicates a random memory area. The check for NULL will be passed in this case, and then the program will crash when accessing the fields of the object. Testing did not reveal this point, probably for the reason that earlier everywhere in the code the call (STL_Table *) GetChild (CH_ANTIPOWER_SPHERES) did not return NULL.

In the end, I decided to check with a static analyzer one not tested solution, which is still in development and not implemented in the main project. As part of this solution, we are working on creating our own physical engine of a flexible string. There were already more errors. Here, for example, is a funny exhibit:

V527 It is odd that the false value is assigned to 'bool' type pointer. Probably meant: * outIsInScene = false. rpscene.cpp 79

bool rpScene::CheckIsRopeInScene(...., bool* outIsInScene)

{

if (mEngine == NULL)

{

outIsInScene = false;

return false;

}

else

{

*outIsInScene = mEngine->CheckIsRopeInScene(ropeToCheck);

return true;

}

}Here it can be noted that the analyzer in this case is only partially right. The outIsInScene parameter should not have been a pointer at all. But thanks anyway for pointing out this suspicious place in the code. Really a mistake.

I will not give all the triggers here. I will note in the end only two that have attracted attention.

V501 There are identical sub-expressions '(fabs (crossVect.x)> 1.192092896e-07F)' to the left and to the right of the '||' operator. rpmath.h 103

inline bool IsCollinearVectors(Vector3d vect1, Vector3d vect2)

{

Vector3d crossVect = Vector3dMultiply(vect1, vect2);

//проверяем вектор на близость к нулю;

return !((fabs(crossVect.x) > FLT_EPSILON) ||

(fabs(crossVect.y) > FLT_EPSILON) ||

(fabs(crossVect.x) > FLT_EPSILON));

}Here, on the one hand, is a common mistake that arose from the carelessness of a programmer. But, on the other hand, this kind of error would be very difficult to catch if you directly observe the result of the program, and not test the performance of individual methods with tests. In this function, the collinearity of two vectors was checked. For example, if the vector of the potential displacement of the point of the elastic string crossing the collision object turned out, with certain assumptions, collinear to the normal of the surface of the collision object at the intersection, this will affect how the rebound is calculated. But since a lot of interrelated factors influence the physical model, observing a working program it is not always possible to say exactly what exactly causes this or that inappropriate behavior.

There was another interesting actuation of the analyzer. At first, I didn’t even understand what the error was because the analyzer suspected something not in the code itself, but in the string literal:

V691 Empirical analysis. It is possible that a typo is present inside the string literal: "out_Radius". The 'RADIUS' word is suspicious. rpropeinstancecommand.cpp 93

....

mCommandsDescriptions[currCommandNr].name =

"Get Rope Fragments Count(Rope;out_Count)";

....

mCommandsDescriptions[currCommandNr].

params[PARAM_NR_FRAGMENTS_COUNT].name = "out_Radius";

....But it turned out that the analyzer was cursing about the case and, indeed, there should be another string literal. The string "out_Radius" in this place appeared as a result of copy-paste from the fragment a little higher. Then something was replaced in the code, but forgot to fix the string literal to the out_Count appropriate here.

Here is the code from which we copied:

....

mCommandsDescriptions[currCommandNr].name =

"Get Rope Fragment Radius(Rope; in_FragmentNr;out_Radius)";

....

mCommandsDescriptions[currCommandNr].

params[PARAM_NR_FRAGMENT_RADIUS].name = "out_Radius";

....How did it end?

It is clear that such a one-time verification of the project will not bring much benefit. The existing code has already passed quite a long test. There are not many errors in it, and many of those that exist do not manifest themselves during normal program operation. Will we now purchase PVS-Studio licenses? I have a positive attitude to the introduction of such a tool in the project. Obviously, the use of static analysis would free up some of the resources of the tester, and even the developers. Redmine would have fewer tasks in the Error tracker. Solved tasks would be less likely to be returned by testers for revision. However, before making the final decision, you need to evaluate exactly what commercial benefits the use of PVS-Studio will bring to us, and compare this with the cost of the product itself. The fact that that in our project relatively little dynamically developing code is in C ++. So far, we continue to work without this tool.

Reviews

I also handed over a temporary license key to developers from other project teams of Eidos-Medicine. I wanted the guys to try and draw conclusions about whether they need such an instrument in their work. I will give a few reviews in the article:

- Nikolay, a programmer from the team for the development of a simulator of laparoscopic surgery: “Not a bad thing. Well found uninitialized pointers and any dangerous work with them. ”

- Oleg, a programmer from the software team for industrial robots: “Great thing. But it’s hard to cram into an old project. We have ~ 9k warnings. True, there is a mode “ignore everything old and watch only new errors.” (The greater number of analyzer warnings in this project is explained by the fact that it is no longer part, but all the code is written in C ++. And the scale of software development in this the project team is noticeably larger.)

- Roman, a programmer from the software team for industrial robots: “A useful thing, but I think it makes no sense to use more than once a month.”

Andrey Karpov responded to the last comment, and asked for his answer in the article:

This is an inefficient use case, which we try to warn about in each article. In a nutshell - the sooner an error is found, the better. It makes no sense to look for a typo by running the debugger if it could be found immediately after compilation using static analysis.

If the reason for not using the analyzer regularly lies in its slow operation, then I propose to get acquainted with tips on increasing the speed of work. Perhaps they will help. If not, you can always organize automatic night checks (we will show you how to organize everything better).

If the reason is too many warnings, then for starters you can remove all warnings and work only with new ones ( how to incorporate static analysis into a large project ). ”

The article used photographs of Adele Valeev enzo2u .