iPhone XS: why this is a brand-new camera

- Translation

A report from a startup that makes an app for better iPhone photography

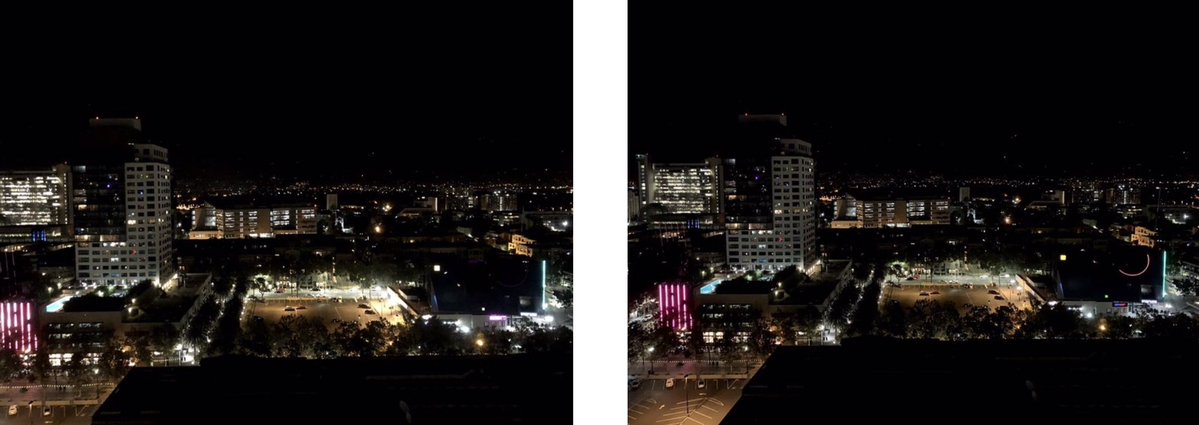

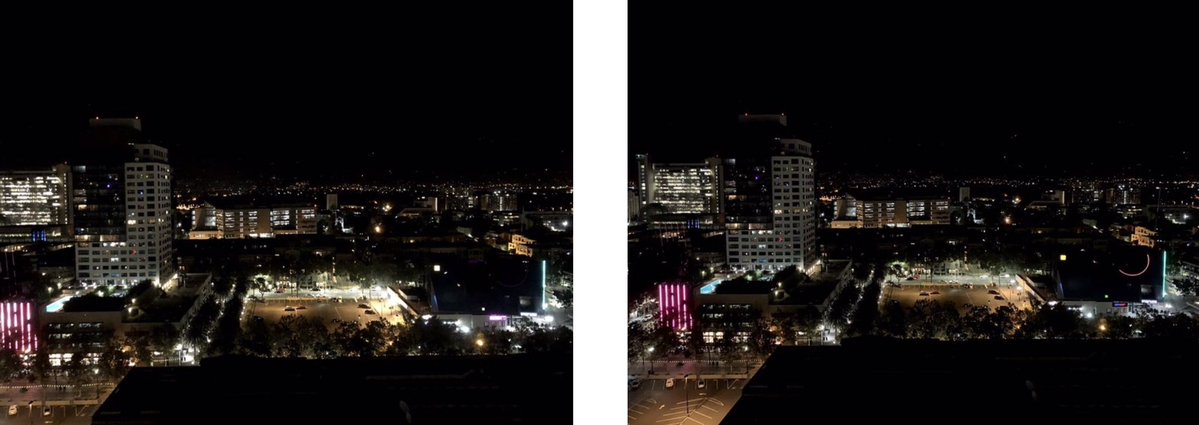

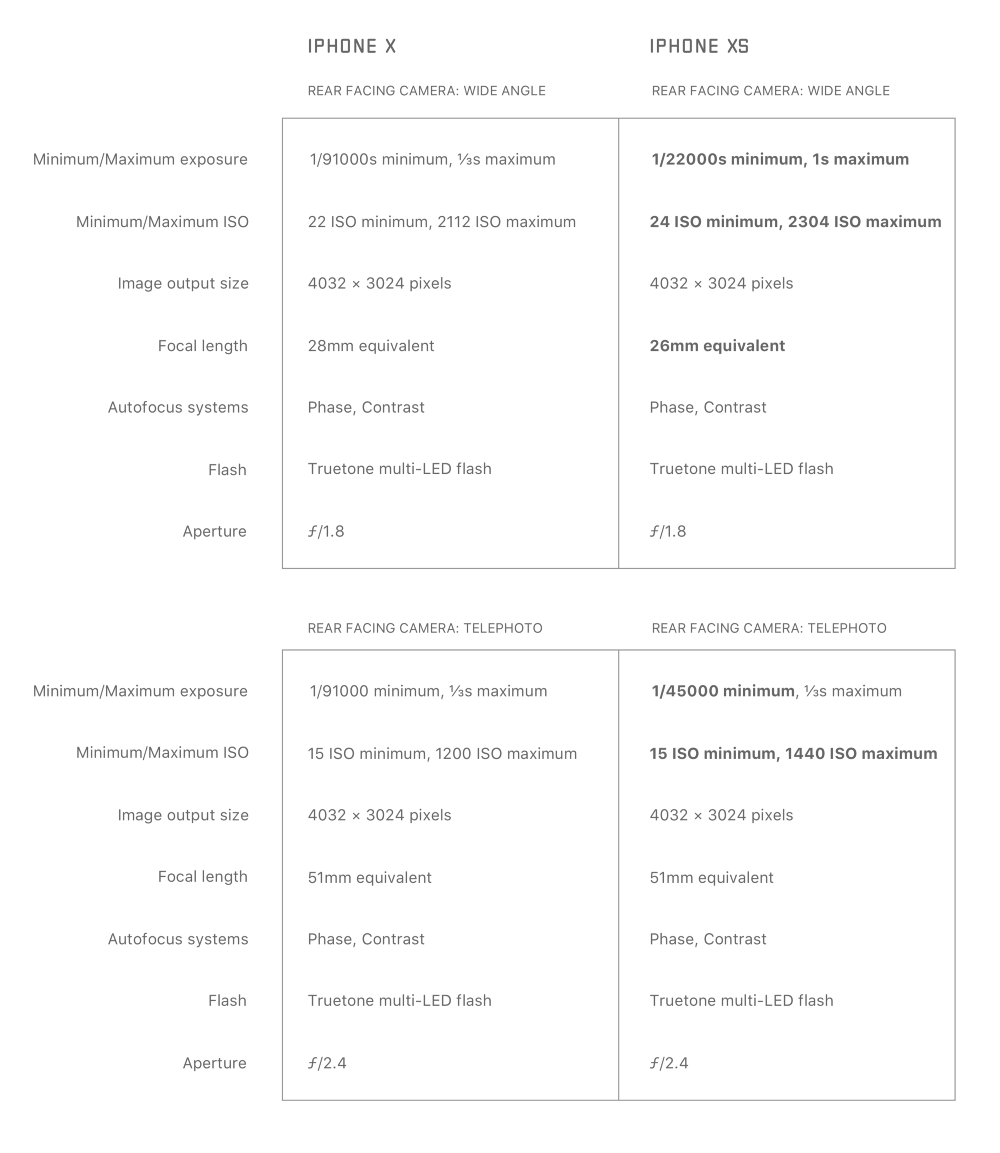

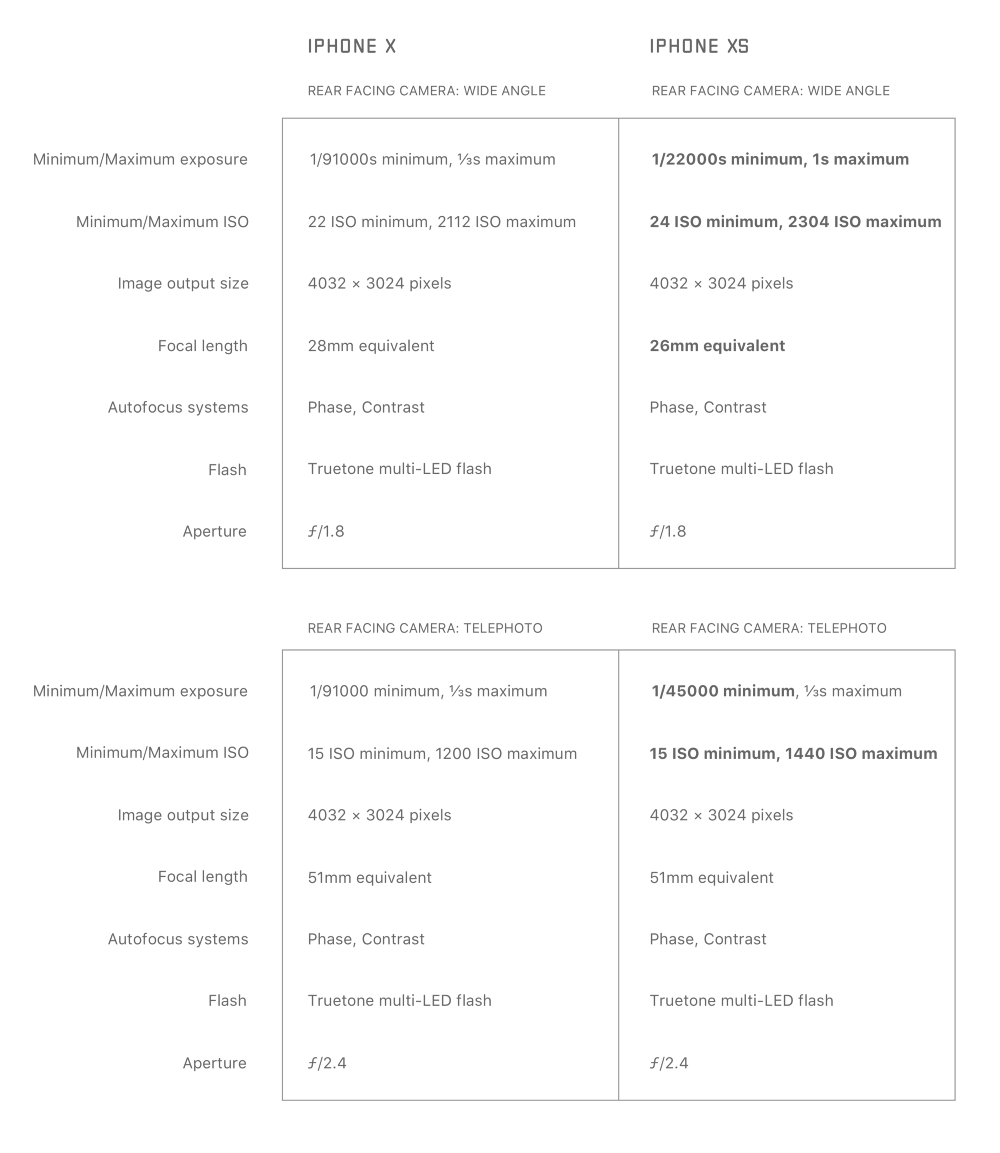

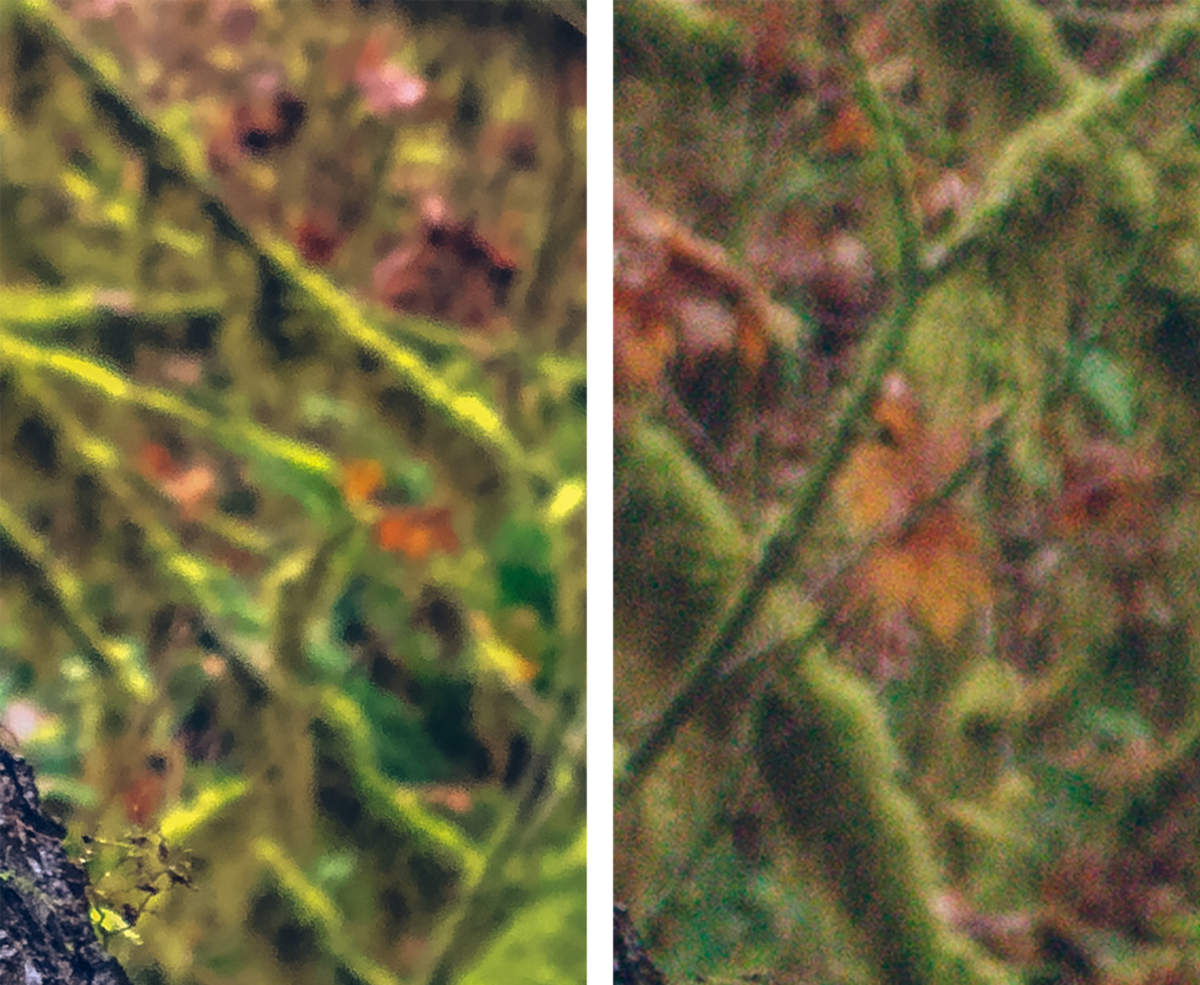

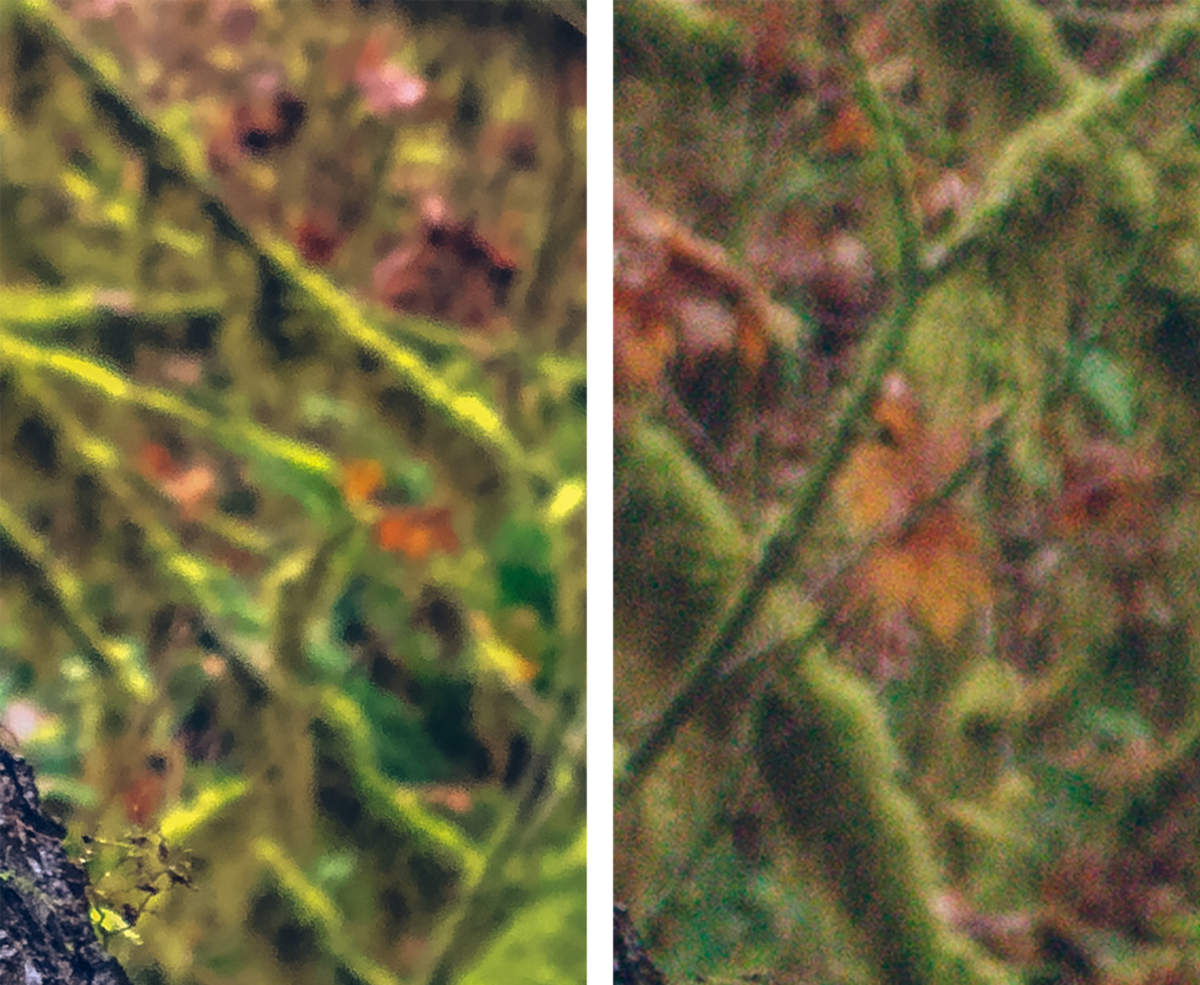

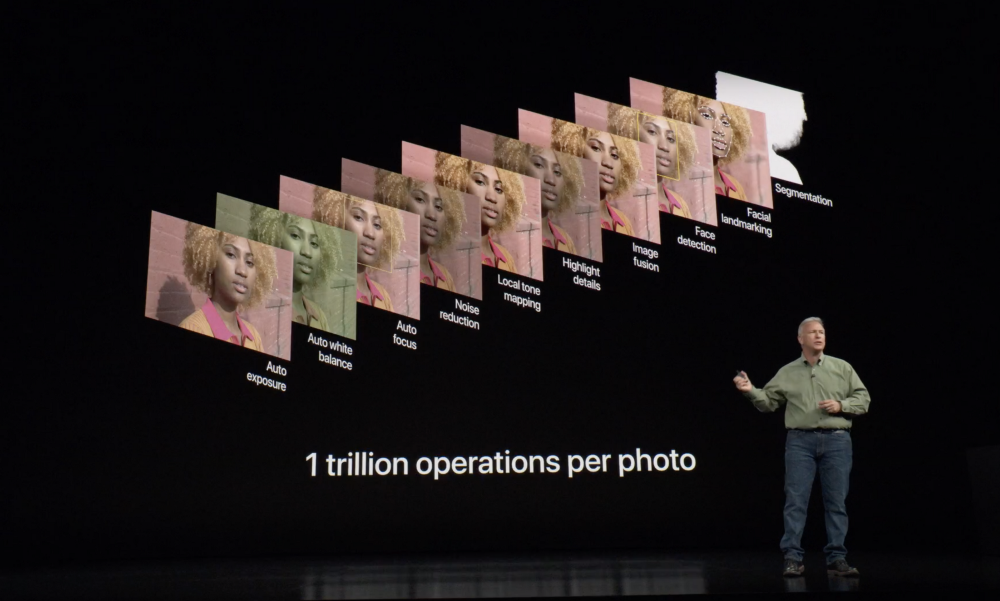

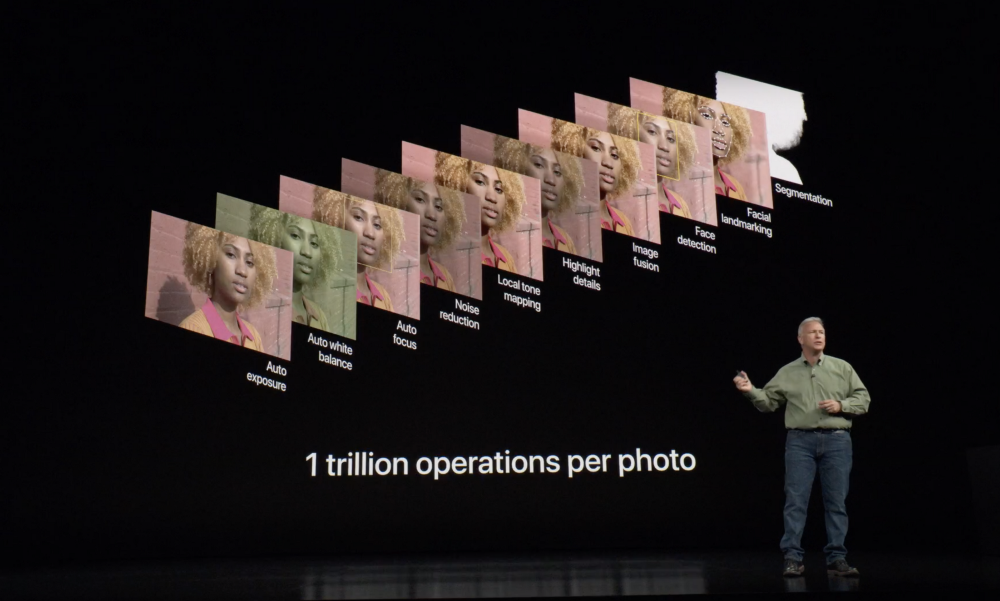

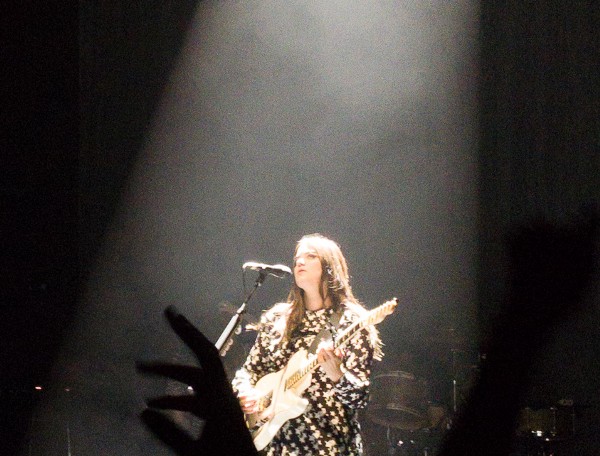

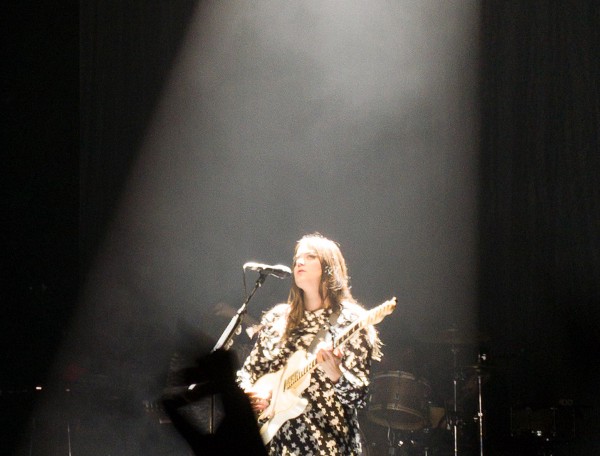

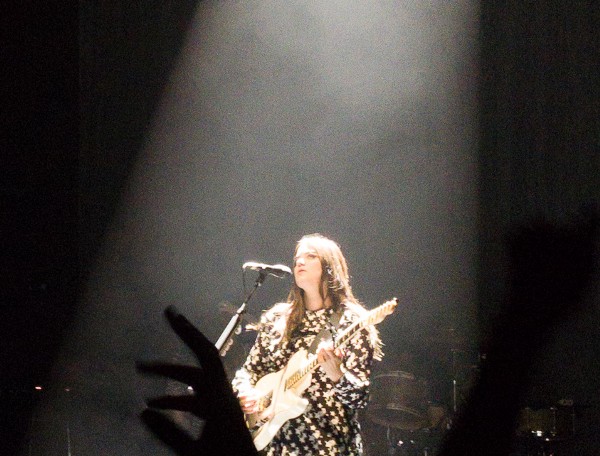

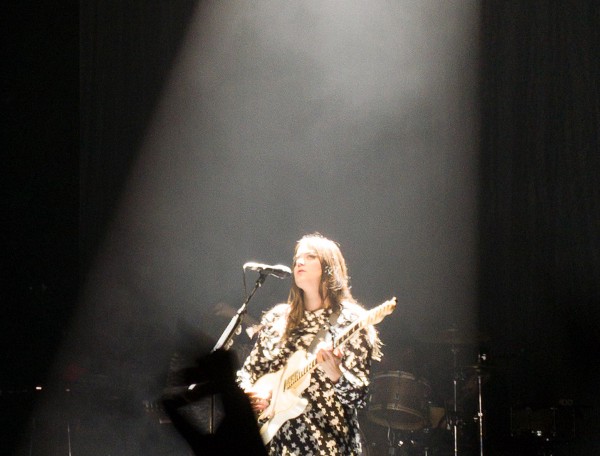

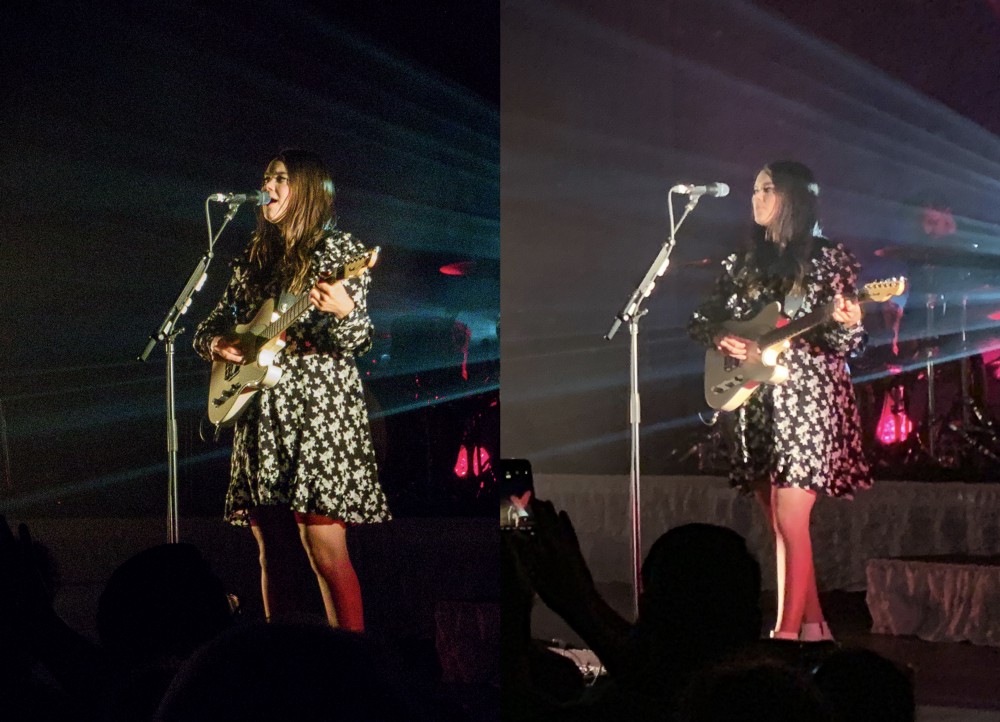

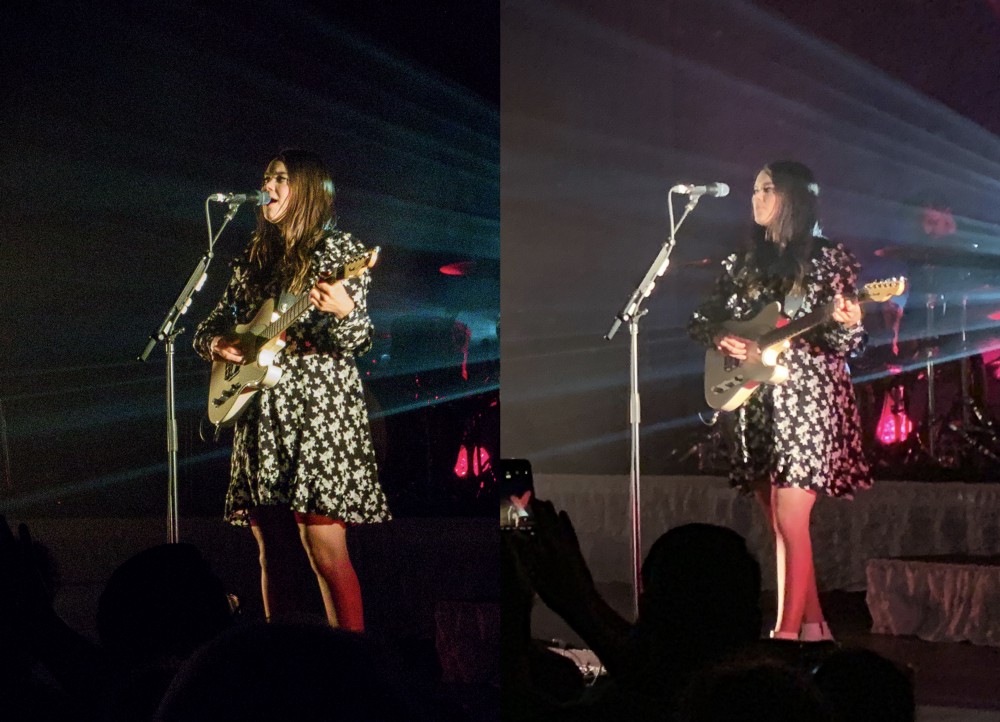

iPhone XS vs. iPhone X: hardware-level camera changes

In the latest version of our app, we added a feature that shows detailed information about what your phone's camera can do. Users who got early access to the iPhone XS shared this information with us, which let us describe the hardware specifications in detail.

After analyzing the data, we can give a more detailed overview of what's new in the iPhone XS camera, with more about its technical capabilities than Apple chose to reveal in its presentation.

These are strictly hardware specifications, even though Apple focused mainly on software improvements such as Smart HDR and the new portrait mode.

What's new?

Redesigned wide-angle lens

The wide-angle lens of the iPhone XS and XS Max, which is used for most shots, has changed and now has an equivalent focal length of 26 mm. That is 2 mm shorter than on the previous models, the iPhone 8 Plus and iPhone X, which had 28 mm wide-angle lenses.
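To get a feel for what 2 mm means in practice, here is a rough sketch that converts both focal lengths into a diagonal angle of view. It assumes the quoted figures are 35 mm equivalents (a 36 × 24 mm frame) and uses the standard thin-lens formula; the function name is ours, chosen for illustration.

```python
import math

# Diagonal of a 35 mm film frame (36 x 24 mm), about 43.3 mm.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)

def diagonal_fov_deg(equiv_focal_mm: float) -> float:
    """Diagonal angle of view for a 35 mm equivalent focal length."""
    return math.degrees(2 * math.atan(FULL_FRAME_DIAGONAL_MM / (2 * equiv_focal_mm)))

fov_xs = diagonal_fov_deg(26)  # iPhone XS / XS Max wide-angle
fov_x = diagonal_fov_deg(28)   # iPhone X / 8 Plus wide-angle

print(f"26 mm: {fov_xs:.1f} deg, 28 mm: {fov_x:.1f} deg, "
      f"difference: {fov_xs - fov_x:.1f} deg")
```

Under these assumptions the new lens sees roughly four degrees more across the diagonal, which is enough to notice at the edges of the frame.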

A user of our app who has an iPhone XS sent us a photo where you can see the difference:

New sensor

Our app cannot read the sensor size, but all the data indicate that the iPhone XS has a new sensor. John Gruber confirmed that the new iPhone XS sensor is 30% larger, and Apple says the pixels on the new wide-angle sensor are now 1.4 microns, 0.2 microns larger than before.
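A quick back-of-the-envelope check of what the larger pixels mean for light gathering. This is only a sketch: it assumes square pixels and takes the old pixel pitch as 1.4 − 0.2 = 1.2 microns, as implied by the figures above.

```python
NEW_PITCH_UM = 1.4
OLD_PITCH_UM = 1.2  # assumption: 1.4 um minus the 0.2 um difference quoted above

def pixel_area_um2(pitch_um: float) -> float:
    """Area of a square pixel with the given pitch."""
    return pitch_um ** 2

area_gain = pixel_area_um2(NEW_PITCH_UM) / pixel_area_um2(OLD_PITCH_UM)
print(f"Each pixel collects light over about {(area_gain - 1) * 100:.0f}% more area")
```

With these numbers the per-pixel area grows by roughly a third, which is in the same ballpark as the sensor size increase reported above.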

Maximum exposure time increased to 1 second

The iPhone XS and XS Max can hold an exposure for up to 1 second. Previous iPhones topped out at 1/3 s. This makes all sorts of cool long-exposure photos possible.

Wider ISO range

The new sensor brings a new ISO range, slightly wider than on the iPhone X. It now extends from ISO 24 to ISO 2304, which gives a little more sensitivity in low light. The best of the previous iPhone sensors topped out at ISO 2112.

The telephoto camera's maximum ISO has gone up by 240, from 1200 to 1440, which points to small adjustments to its sensor. Perhaps this means we will see less noise at the same light level.
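Photographers usually think about sensitivity in stops, where one stop is a doubling. A small sketch that expresses the ISO changes above in stops (the helper name is ours):

```python
import math

def stops(new_value: float, old_value: float) -> float:
    """Difference between two sensitivities in stops (1 stop = a factor of 2)."""
    return math.log2(new_value / old_value)

wide_gain = stops(2304, 2112)  # wide-angle camera, maximum ISO
tele_gain = stops(1440, 1200)  # telephoto camera, maximum ISO

print(f"wide-angle: +{wide_gain:.2f} stops, telephoto: +{tele_gain:.2f} stops")
```

Both gains are well under a third of a stop, which matches the "a little more sensitivity" wording above.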

Minimum exposure time changes

The minimum exposure time has increased: from 1/91000 s to 1/22000 s on the wide-angle camera and to 1/45000 s on the telephoto camera. It is not clear what follows from this; it needs testing.
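To compare the two ends of the shutter range, here is a sketch that puts the reported limits side by side and measures each change in stops. The values come from the text above; the variable names are ours.

```python
from fractions import Fraction
import math

def stops_between(t_new: Fraction, t_old: Fraction) -> float:
    """How many stops longer t_new is than t_old."""
    return math.log2(t_new / t_old)

# Longest exposure: 1/3 s on previous iPhones, 1 s on the XS.
long_end = stops_between(Fraction(1, 1), Fraction(1, 3))

# Shortest exposure: 1/91000 s before, 1/22000 s (wide) and 1/45000 s (tele) now.
short_end_wide = stops_between(Fraction(1, 22000), Fraction(1, 91000))
short_end_tele = stops_between(Fraction(1, 45000), Fraction(1, 91000))

print(f"longest exposure: +{long_end:.2f} stops")
print(f"shortest exposure: wide +{short_end_wide:.2f}, tele +{short_end_tele:.2f} stops")
```

So the range gained about a stop and a half at the slow end and gave up one to two stops at the fast end.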

A comparison of the full lists of technical details:

Software changes

I wondered why Apple’s presentation focused on changes in the camera's software rather than its hardware. After testing the iPhone XS cameras, I understood why.

The iPhone XS does not just have a larger sensor: its camera has been completely redesigned, and the biggest change is that it relies on computational photography.

Smart camera

The people at Apple are smart. They see that cramming more and more electronics into a sensor the size of a fingernail brings diminishing returns. Photography is the science of capturing light, and it is limited by optics and physics.

The only way around the laws of physics is “computational photography”. The powerful chips in modern iPhones let the camera take a bunch of photos, some of them before you even press the shutter button, and merge them into one perfect shot.

The iPhone XS takes overexposed and underexposed frames, takes quick frames to freeze motion and keep the whole frame sharp, and then takes the best parts of all of these frames to create one image. This is what you get from the iPhone XS camera, and it is what makes it so effective in situations where you would usually lose detail to mixed light or strong contrast.
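Apple has not published how Smart HDR merges its frames, so the following is only an illustration of the general idea using a textbook technique, exposure fusion: each pixel of the result is a weighted average of the same pixel across all frames, with more weight given to values that are neither crushed nor clipped. The frames here are tiny made-up lists of brightness values, and the weighting function and its `sigma` are our assumptions.

```python
import math

def well_exposedness(value: float, sigma: float = 0.2) -> float:
    """Weight that peaks at mid-gray (0.5) and falls off towards 0 and 1."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))

def fuse(frames: list[list[float]]) -> list[float]:
    """Blend aligned frames pixel by pixel, favoring well-exposed values."""
    fused = []
    for pixels in zip(*frames):
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)  # always > 0 because exp() is positive
        fused.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return fused

# Three bracketed "frames" of the same 4-pixel scene, brightness in 0..1.
under = [0.02, 0.10, 0.35, 0.55]   # highlights kept, shadows crushed
normal = [0.08, 0.30, 0.70, 0.98]
over = [0.30, 0.60, 0.95, 1.00]    # shadows lifted, highlights clipped

result = fuse([under, normal, over])
print([round(v, 2) for v in result])
```

The darkest pixel ends up close to its value in the overexposed frame and the brightest pixel close to its value in the underexposed frame, which is the "best parts of every frame" behavior described above. A real pipeline also has to align the frames and handle motion, which this sketch ignores.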

This is not a minor tweak to Auto HDR from the iPhone X. It is a completely new feature, a fundamental departure from the photos of all previous iPhones. In a sense, a completely new camera.

What is this “soft filter” for selfies?

It does not exist. I’m not saying that people make things up to get views on YouTube, but claims from the internet should not be believed blindly.

People think the iPhone XS softens the image, for two reasons:

- Better and more aggressive noise reduction, a consequence of merging different exposures.

- Merging exposures reduces sharpness by removing the contrast between light and shadow across different areas of the skin.

It is important to understand how the brain perceives sharpness, and how artists make things look sharper.

It does not work the way it does in comical shows like CSI, where detectives shout “enhance!” at a screen. You cannot add detail that was lost. You can, however, fool the brain by adding small areas of contrast.

Put simply, you place a dark or light outline next to a contrasting light or dark shape. Local contrast makes things look sharper.

To increase the contrast, make the light areas brighter near an edge and the dark areas darker near it.
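This "brighter on the bright side, darker on the dark side" trick is what a classic unsharp mask does. A minimal one-dimensional sketch, with a row of made-up pixel values standing in for an edge between a dark and a light area (the box blur and the `amount` parameter are illustrative choices):

```python
def box_blur(signal: list[float], radius: int = 1) -> list[float]:
    """Average each sample with its neighbors; edges repeat the border value."""
    n = len(signal)
    blurred = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred

def unsharp_mask(signal: list[float], amount: float = 1.0) -> list[float]:
    """Add back the difference between the signal and its blurred copy."""
    blurred = box_blur(signal)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]

edge = [0.3] * 5 + [0.7] * 5          # dark area on the left, light on the right
sharpened = unsharp_mask(edge)
print([round(v, 2) for v in sharpened])
```

Only the two samples next to the edge change: the dark one gets darker and the light one gets lighter, while the flat areas stay as they were. No detail was added, but the edge now reads as crisper.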

The iPhone XS blends frames with different exposures, reducing the brightness of bright areas and making shadows less dark. The detail is still there, but the image seems less sharp to us because local contrast is lost. In the photo above, the skin looks smoother because the light is not as harsh.
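A small numeric sketch of why pulling highlights down and shadows up costs contrast. It uses a simple linear compression towards mid-gray as a stand-in, since the actual tone mapping in Smart HDR is not public, and measures contrast with the Michelson formula; all values are made up for illustration.

```python
def michelson_contrast(bright: float, dark: float) -> float:
    """Contrast between two tones, from 0 (identical) to 1 (maximal)."""
    return (bright - dark) / (bright + dark)

def compress(value: float, strength: float = 0.5, mid: float = 0.5) -> float:
    """Pull a tone towards mid-gray: highlights get darker, shadows lighter."""
    return mid + (value - mid) * (1 - strength)

highlight, shadow = 0.9, 0.1
before = michelson_contrast(highlight, shadow)
after = michelson_contrast(compress(highlight), compress(shadow))
print(f"contrast before: {before:.2f}, after: {after:.2f}")
```

Both tones are still distinct after compression, so nothing is lost, but the step between them is half as large, and that is what reads as "softer".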

Observant people will notice that this affects more than skin. Rough textures and almost anything dark, from cats to coal, look smoother. This is noise reduction at work, and the iPhone XS applies it more aggressively than previous iPhones.

Why is noise reduction needed?

Testing the iPhone XS against the X, we found that the XS prefers a faster shutter speed and a higher ISO. In other words, it takes the photo faster, at the cost of more noise.

iPhone X RAW

iPhone XS RAW

In RAW you can see that the iPhone XS frame (on the right) has much more noise. No noise reduction is applied in this format. Why would the XS frame be so noisy?

Remember the part of the presentation with a sequence of frames showing how the iPhone camera works?

Unless you have bionic arms, you cannot hold the phone still for that long. To get that many sharp, perfectly aligned frames, the iPhone has to capture them very quickly. That requires a faster shutter speed, and since each frame then gathers less light, a higher ISO, which means more noise in the image.
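The link between a shorter exposure and more noise can be sketched with the simplest noise model there is: photon shot noise, where the signal-to-noise ratio grows with the square root of the light collected. The photon count below is a made-up number, and the model ignores read noise and everything else a real sensor adds.

```python
import math

def shot_noise_snr(photons: float) -> float:
    """SNR of a shot-noise-limited pixel: signal divided by sqrt(signal)."""
    return photons / math.sqrt(photons)

photons_slow = 10_000.0           # hypothetical photons per pixel at the slower shutter
photons_fast = photons_slow / 2   # half the exposure time collects half the light

snr_slow = shot_noise_snr(photons_slow)
snr_fast = shot_noise_snr(photons_fast)

# Merging several fast frames adds their light together again.
frames = 4
snr_merged = shot_noise_snr(photons_fast * frames)

print(f"slow: {snr_slow:.0f}, fast: {snr_fast:.0f}, "
      f"{frames} fast frames merged: {snr_merged:.0f}")
```

A single fast frame is noisier than a slow one, which is what shows up in RAW. Merging several of them more than makes up for it, but a RAW file only ever contains one frame.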

That noise has to be removed somehow, and this comes at a cost: some detail and local contrast are removed along with it.

iPhone XS RAW: details in the reflection look “smoother”

Smart HDR

But on the whole, selfies do come out smoother, especially faces!

Yes. The front camera's hardware performs worse in low light than the main camera's. The selfie camera has a tiny sensor, the size of a little fingernail, so less light reaches it, which means more noise and more noise reduction.

The result is a smoother image, and Smart HDR, together with the computational photography pipeline, smooths it more than before.

The flip side is that selfies, which usually suffer in mixed or harsh light (and that’s most lighting), no longer come out blurred and in most cases look better, just a little smoother.

The good news is that Apple can still tune this if people find the smoothing too strong. But given the choice between noisy photos in poor light and a bit too much smoothing, it makes sense that version 1.0 leans towards smoothing.

As for claims that the software treats faces differently: I photographed a lemon, rough paper, and selfies, and the level of smoothing was the same everywhere.

So, is the iPhone XS camera worse?

No, its camera is not worse than the iPhone X's. The iPhone XS has the better camera. It has a higher dynamic range, though that comes with some trade-offs in software. If you don’t like the new way of doing things, don’t worry.

A shot like this was not possible on previous iPhones

What Apple is doing is better in almost every case: ordinary users get better photos with more detail in highlights and shadows, without any editing. Professionals can bring the contrast back with a little editing. The reverse does not work: in a high-contrast image, that detail is already lost.

You can now take selfies and photos in harsh light, backlight, or other uneven lighting and get an acceptable result. That is simply magic!

There are only two minor problems.

The authenticity problem

Cameras are increasingly turning from simple tools into “smart devices” that use complex processing to combine several images into one, and it makes us wonder whether we are looking at an “unedited” image at all.

Take this photo of Yosemite National Park:

It is edited. To expose the landscape properly, the photographer used a long exposure. He then shot the stars with a shorter exposure, because otherwise they would have turned into trails, and combined the two photos into one. Technically speaking, it is a fake.

Back to the iPhone: Smart HDR takes different exposures and blends them to get more detail in the shadows and highlights. There is a degree of fakery in that. Photography purists may object.

iPhone XS, Smart HDR

iPhone XS

iPhone X

Without Smart HDR, it is very hard to make an HDR image by hand that does not look fake. There is a subreddit dedicated to crappy HDR.

The middle image, taken with Smart HDR turned off, still has a wide dynamic range, but it does not look as “auto-adjusted.”

This is how the camera works on iPhones now, and I would bet it will keep working this way in the future.

And yes, this also applies to the viewfinder of any app that takes photos. Apple improves dynamic range on the fly, right in the video feed, so you will always see a “modified” image.

RAW issues

In practical terms, the problems start here: the iPhone XS does not expose the way the iPhone X does. This matters a great deal when shooting in RAW.

Take this shot:

iPhone X RAW

iPhone XS RAW

You will notice right away that the second photo is overexposed. When you start editing the iPhone XS RAW file, you will find that the highlights are lost.

Looking closer, you see the second problem: the iPhone X used an exposure of 1/60 s at ISO 40, while the iPhone XS used 1/120 s at ISO 80. We suspect that, to get the best frames for Smart HDR, the camera simply has to use shorter exposures and higher ISO values.
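A short sketch of why these two settings are related. With the aperture unchanged (an assumption here), image brightness scales with exposure time multiplied by ISO, so the two combinations are nominally equivalent, yet the XS sensor receives only half the light and makes up for it with gain.

```python
from fractions import Fraction
import math

def relative_brightness(shutter_s: Fraction, iso: int) -> Fraction:
    """Nominal image brightness scales with exposure time x ISO (fixed aperture)."""
    return shutter_s * iso

x_settings = (Fraction(1, 60), 40)     # iPhone X, from the text
xs_settings = (Fraction(1, 120), 80)   # iPhone XS, from the text

same_brightness = relative_brightness(*x_settings) == relative_brightness(*xs_settings)
light_deficit_stops = math.log2(x_settings[0] / xs_settings[0])

print(f"nominally equal brightness: {same_brightness}")
print(f"light reaching the XS sensor: {light_deficit_stops:.0f} stop less")
```

The doubled ISO amplifies the noise along with the signal. Smart HDR can average that away across frames, but a single RAW frame cannot.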

We make a camera app for shooting RAW, so this is very bad for us. Not only does RAW get no benefit from merging several frames, but iPhone photos are also very noisy at around ISO 200. This is a step in the wrong direction.

To make matters worse, the iPhone XS sensor noise is slightly stronger and more colorful than on the iPhone X.

Noise like this is not easy to remove in post-processing. It is not the soft, film-like grain we saw in RAW files from the iPhone X and iPhone 8.

As things stand, if you shoot RAW on the iPhone XS you have to switch to manual mode and reduce the exposure. Otherwise your RAW will look worse than the Smart HDR JPEG. All third-party camera apps are affected. Oddly enough, RAW files from the iPhone X look much better than those from the iPhone XS.
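What "switch to manual mode and reduce the exposure" could look like as a calculation is sketched below. This is a hypothetical helper, not how any particular app does it: it trades ISO for a longer exposure at constant brightness, stops at a longest shutter time you are willing to hand-hold, and then applies an exposure bias in whole stops. The 1/60 s limit in the example is an arbitrary choice; ISO 24 is the minimum reported for the XS earlier in this article.

```python
from fractions import Fraction

def rebalance(shutter_s, iso, min_iso, max_shutter_s, ev_bias=0):
    """Trade ISO for exposure time at constant brightness, then apply an EV bias.

    ev_bias is in whole stops; negative values darken the frame.
    Returns (shutter time in seconds, ISO) as Fractions.
    """
    product = Fraction(shutter_s) * iso                 # brightness to preserve
    new_shutter = min(product / min_iso, Fraction(max_shutter_s))
    new_iso = product / new_shutter                     # ISO still needed at that shutter
    new_shutter *= Fraction(2) ** ev_bias               # shorter exposure for negative bias
    return new_shutter, new_iso

# Auto-metered XS settings from the text, rebalanced with a 1/60 s hand-held limit.
shutter, iso = rebalance(Fraction(1, 120), 80, min_iso=24, max_shutter_s=Fraction(1, 60))
print(f"shutter: {shutter} s, ISO: {iso}")

# The same, but one stop darker to protect the highlights.
darker_shutter, darker_iso = rebalance(
    Fraction(1, 120), 80, min_iso=24, max_shutter_s=Fraction(1, 60), ev_bias=-1
)
print(f"shutter: {darker_shutter} s, ISO: {darker_iso}")
```

With these inputs the first call lands on 1/60 s at ISO 40, the same settings the iPhone X chose on its own.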

Our solution: Smart RAW

Fortunately, this can be fixed. After the iPhone XS was released, we worked nights and weekends and came up with a way to get the most out of the larger sensor and its deeper pixels.

We built Smart RAW, a feature that uses the new sensor technology in the iPhone XS and produces better RAW photos than the iPhone X could. Smart RAW does not use any part of Smart HDR. On the contrary, it avoids it, so that you hardly need any noise reduction.

Now that we have worked around the iPhone XS exposure problems, a surprising amount of detail can be found in Smart RAW files. Let's look at a photo from a First Aid Kit concert and compare the versions with and without Smart RAW:

Without Smart RAW

Smart RAW

Much better than the iPhone X. Here are a few comparisons of RAW images taken with the iPhone XS and the iPhone X.

The results are amazing: less noise, more detail.

The best thing about Smart RAW: by tuning for the iPhone XS sensor, we get the highest image quality we have ever had. Going from the iPhone X to the iPhone XS is a surprising jump in resolved detail and quality.

Smart RAW vs Smart HDR

You may wonder how this feature compares to Smart HDR, since shooting RAW means going without HDR. I took a shot in challenging exposure conditions, using both Smart HDR and our new RAW:

Smart HDR

RAW

Without editing they are almost the same; Smart HDR is maybe a little better.

But RAW is only a starting point. Here are the same pictures after editing:

Smart HDR

RAW after editing

We did not get quite the dynamic range of Smart HDR (note how the sky becomes bluer), but we came pretty close. I prefer the look of the edited RAW: it is a bit more natural.

But the main advantage is the detail.

I prefer to shoot RAW for the clarity of detail, and on the iPhone XS, with its larger pixels, there is more of it than before. A perfect example is the leaves on the tree at the top right:

And so it goes with most photos. When you shoot RAW you have to work on the image afterwards, but the results are often worth it. Of course, there is plenty of light in this image; we still need to see what happens after sunset.

In low light, there is a trade-off. The less light, the more noise. The iPhone XS with Smart HDR in the stock camera app handles such shots very well, but it does not always produce the sharpest pictures.

Low-light photos will always have a lot of noise. Smart HDR hides it aggressively, at the cost of detail. If you want more detail and don't mind a little noise, try our Smart RAW:

Halide Smart RAW on the left, iPhone Smart HDR on the right

There is noise in the shadows, and the skin looks rougher because light and dark spots are blurred less, but the detail is amazing compared with images taken in the normal mode.

Smart RAW is still in testing, and we need to collect a lot of photographic evidence that it works well in all situations.

Conclusion

The iPhone XS has a completely new camera. It is not just a different sensor but a completely new approach to photography, new for iOS. Because it relies so heavily on exposure merging and computational photography, its images can look very different from those taken in the same conditions on other iPhones.

But since, unlike with previous cameras, its quality advantages come from software, we can expect improvements and changes. This is only the first version of iOS 12 and Smart HDR.

We developers need to update our apps to take full advantage of the new sensor in the iPhone XS and XS Max. It is so different that it cannot be treated like the sensor of any other iPhone, or the results will be unsatisfactory.

If you are unhappy with some aspects of this brave new world of computational photography or with Apple's image processing, you can turn off HDR processing in the camera settings, or shoot RAW.

To turn it off, go to Settings → Camera and disable Smart HDR. Then open the camera, and a new HDR control will appear in the top menu. Tap it to disable HDR.