Recognizing users' physical activity, with examples in R

Before this, I had come across the task of recognizing users' physical activity (human activity recognition, or HAR) only as a learning exercise. Having discovered what the caret R package can do (it is a convenient wrapper for more than 100 machine learning algorithms), I decided to try it on HAR as well. The UCI Machine Learning Repository has several data sets for such experiments. Since dumbbell exercises are not really my topic, I chose to recognize the activity of smartphone users.

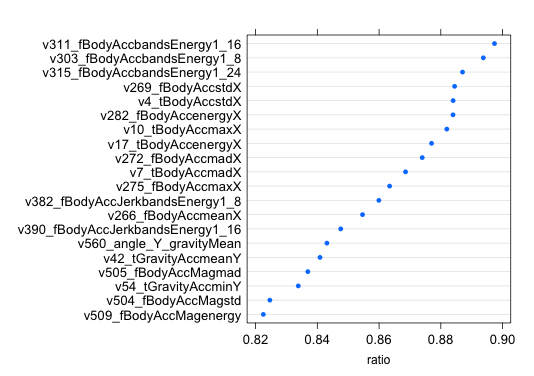

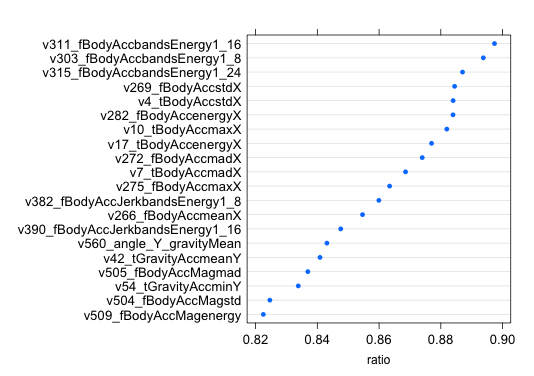

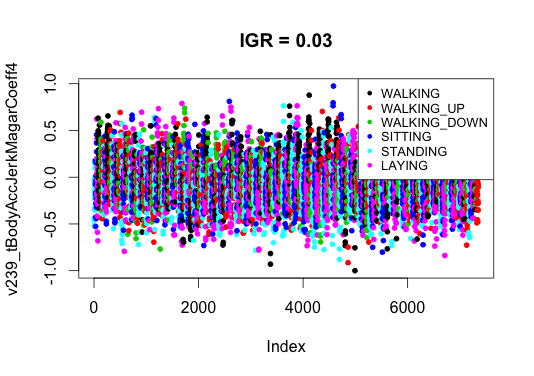

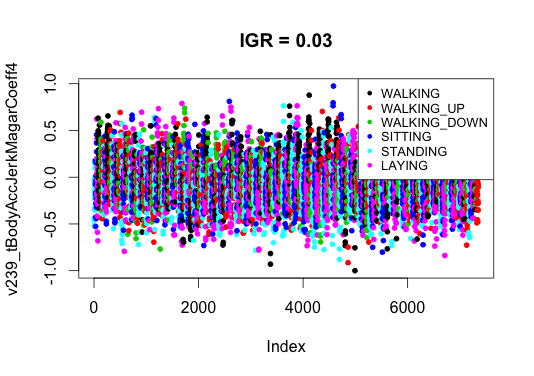

Data

The data set was collected from thirty volunteers wearing a Samsung Galaxy S II on the belt. The gyroscope and accelerometer signals were specially processed and converted into 561 features. The processing used multi-stage filtering, the Fourier transform and some standard statistical transformations: 17 functions in total, ranging from the mean to the angle between vectors. I skip the details of the processing; they can be found in the repository. The training set contains 7352 cases and the test set 2947. Both are labeled with six activities: walking, walking_up, walking_down, sitting, standing and laying.

I chose Random Forest as the base algorithm for the experiments. RF has a built-in mechanism for estimating variable importance, which I wanted to try out, since the prospect of selecting features by hand from 561 variables scared me a little. I also decided to try the support vector machine (SVM). I know it is a classic, but I had never used it before, and it was interesting to compare the quality of the models produced by the two algorithms.

After downloading and unpacking the archive, I realized I would have to tinker with the data: the parts of the set, the feature names and the activity labels were all in separate files. I used the read.table() function to load the files into R. I had to turn off the automatic conversion of strings to factors (stringsAsFactors = FALSE), because some variable names turned out to be duplicated. In addition, the names contained invalid characters. These problems were solved with the following construction based on sapply(), which in R often replaces an explicit for loop:

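```r
# var_names: the two-column table read from features.txt (index, raw name)
editNames <- function(x) {
  y <- var_names[x, 2]
  y <- sub("BodyBody", "Body", y)   # fix the doubled "BodyBody" in some names
  y <- gsub("-", "", y)             # drop hyphens
  y <- gsub(",", "_", y)            # commas are not allowed in names
  y <- gsub("[()]", "", y)          # drop parentheses, e.g. "iqr()" -> "iqr"
  y <- paste0("v", var_names[x, 1], "_", y)  # index prefix makes duplicates unique
  return(y)
}

new_names <- sapply(1:nrow(var_names), editNames)
```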

I glued all the parts together with rbind() and cbind(): the first combines rows with the same structure, the second combines columns of the same length.
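A minimal sketch of the loading and gluing step, assuming the standard layout of the UCI archive (activity_labels.txt at the top level, plus train/X_train.txt and train/y_train.txt, and the same for test). The helper name load_har_part() is hypothetical, and feature_names is expected to be the cleaned vector new_names from above.

```r
# Hypothetical helper: read one part ("train" or "test") of the UCI HAR archive
# and glue the activity column and the feature columns together with cbind().
load_har_part <- function(dir, part, feature_names) {
  x <- read.table(file.path(dir, part, paste0("X_", part, ".txt")))
  y <- read.table(file.path(dir, part, paste0("y_", part, ".txt")))
  # keep label strings as characters (no automatic factor conversion)
  labels <- read.table(file.path(dir, "activity_labels.txt"),
                       stringsAsFactors = FALSE)
  names(x) <- feature_names
  # map numeric activity codes to their names, keeping the file's level order
  activity <- factor(labels[[2]][match(y[[1]], labels[[1]])],
                     levels = labels[[2]])
  cbind(Activity = activity, x)
}

# Usage (paths are assumptions about where the archive was unpacked):
# train_data <- load_har_part("UCI HAR Dataset", "train", new_names)
# test_data  <- load_har_part("UCI HAR Dataset", "test",  new_names)
```

Putting Activity in the first column is what lets the later code refer to the features as train_data[, -1]. If the two parts were ever needed as one table, rbind(train_data, test_data) would stack them, since both have the same columns.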

Once the training and test sets were ready, the question of preprocessing came up. Using range() in a loop, I calculated the range of each feature. All features turned out to lie within [-1, 1], so neither normalization nor scaling was needed. Then I checked for features with strongly skewed distributions, using the skewness() function from the e1071 package:

```r
library(e1071)  # provides skewness()
SkewValues <- apply(train_data[, -1], 2, skewness)
head(SkewValues[order(abs(SkewValues), decreasing = TRUE)], 3)
```

apply() belongs to the same family as sapply() and is used when something has to be done over the columns or rows of a data set. train_data[, -1] is the data set without the dependent variable Activity, and 2 means the function is applied to columns. This code prints the three most skewed variables:

```
v389_fBodyAccJerkbandsEnergy57_64    v479_fBodyGyrobandsEnergy33_40    v60_tGravityAcciqrX
                         14.70005                          12.33718               12.18477
```

The closer skewness is to zero, the more symmetric the distribution; here, frankly, the values are quite large. For such cases caret implements the Box-Cox transformation. I had read that Random Forest is insensitive to this, so I decided to leave the features as they are and, at the same time, see how SVM copes with them.
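To make the numbers above less of a black box, here is a base-R sketch of sample skewness. It uses the moment definition g1 = m3 / m2^(3/2); as far as I know, e1071's skewness() defaults to a variant (type 3) that differs from it by the factor ((n - 1)/n)^(3/2), which is negligible with 7352 rows. The function names skew_g1() and most_skewed() are my own.

```r
# Moment-based sample skewness: g1 = m3 / m2^(3/2)
skew_g1 <- function(x) {
  d <- x - mean(x)
  m2 <- mean(d^2)   # second central moment
  m3 <- mean(d^3)   # third central moment
  m3 / m2^1.5
}

# Rank the columns of a numeric data frame by absolute skewness,
# mirroring the apply() / order() construction above.
most_skewed <- function(df, n = 3) {
  s <- apply(df, 2, skew_g1)
  head(s[order(abs(s), decreasing = TRUE)], n)
}

# Example: a symmetric column, a mildly skewed one and a long right tail
demo <- data.frame(sym  = c(-2, -1, 0, 1, 2),
                   mild = c(0, 1, 1, 2, 4),
                   tail = c(0, 0, 0, 0, 10))
most_skewed(demo, 2)   # tail = 1.5, mild is about 0.75
```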

It remains to choose the model quality metric: Accuracy or Cohen's Kappa, an agreement statistic. If cases are distributed unevenly across classes, Kappa is the better choice: it is essentially Accuracy corrected for the probability of hitting a particular class by chance. Running summary() on the Activity column, I checked the class distribution:

```
     WALKING   WALKING_UP WALKING_DOWN      SITTING     STANDING       LAYING
        1226         1073          986         1286         1374         1407
```

The cases are distributed almost evenly, except perhaps walking_down (apparently some volunteers did not like walking down stairs), so Accuracy can be used.
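A quick numerical check of the "almost evenly" claim, using the counts printed by summary() above. It computes the class shares and the agreement sum(p^2) that random guessing in these proportions would achieve. That is the chance term Kappa subtracts, in the special case where predictions follow the true class distribution. This is plain base R, not caret.

```r
# Class counts in the training set, as printed by summary(train_data$Activity)
counts <- c(WALKING = 1226, WALKING_UP = 1073, WALKING_DOWN = 986,
            SITTING = 1286, STANDING = 1374, LAYING = 1407)

shares <- counts / sum(counts)   # class proportions
round(shares, 3)

# Agreement expected by chance if labels were guessed at random with these
# proportions; for perfectly balanced classes it would be 1/6.
chance_agreement <- sum(shares^2)
c(observed = chance_agreement, balanced = 1 / length(counts))
```

The chance agreement comes out only slightly above 1/6, which supports using plain Accuracy here.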

Training

I trained the RF model on the full set of features with the following R code:

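```r
library(caret)

fitControl <- trainControl(method = "cv", number = 5)  # 5-fold cross-validation

set.seed(123)
# do.trace and ntree are passed through to randomForest()
forest_full <- train(Activity ~ ., data = train_data,
                     method = "rf", do.trace = 10, ntree = 100,
                     trControl = fitControl)
```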

This sets up k-fold cross-validation with k = 5. By default, caret trains three models with different values of mtry (the number of features randomly sampled from the full set as split candidates at each node of a tree) and selects the best one by accuracy. All models use the same number of trees, ntree = 100.
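To make explicit what method = "cv", number = 5 does, here is a rough base-R sketch of stratified 5-fold cross-validation. The helpers stratified_folds() and cv_accuracy() are hypothetical. caret does this internally (its createFolds() also stratifies by class), and tuning mtry amounts to repeating this loop for each candidate value.

```r
# Assign each row to one of k folds, stratified by class, so every fold
# keeps roughly the class proportions of the whole set.
stratified_folds <- function(y, k = 5, seed = 123) {
  set.seed(seed)
  y <- as.character(y)
  fold <- integer(length(y))
  for (cls in unique(y)) {
    idx <- which(y == cls)
    # deal fold labels 1..k out evenly, then shuffle them within the class
    fold[idx] <- sample(rep_len(seq_len(k), length(idx)))
  }
  fold
}

# Average hold-out accuracy over k folds for any fit/predict pair.
cv_accuracy <- function(data, y_col, fit_fun, predict_fun, k = 5, seed = 123) {
  folds <- stratified_folds(data[[y_col]], k, seed)
  acc <- numeric(k)
  for (i in seq_len(k)) {
    train_part <- data[folds != i, , drop = FALSE]
    hold_out   <- data[folds == i, , drop = FALSE]
    model  <- fit_fun(train_part)
    pred   <- predict_fun(model, hold_out)
    acc[i] <- mean(as.character(pred) == as.character(hold_out[[y_col]]))
  }
  mean(acc)
}

# Usage with a trivial "majority class" model on toy data
toy <- data.frame(y = factor(rep(c("a", "b", "c"), times = c(50, 30, 20))),
                  x = seq_len(100))
majority_fit     <- function(d) names(which.max(table(d$y)))
majority_predict <- function(m, d) rep(m, nrow(d))
cv_accuracy(toy, "y", majority_fit, majority_predict)  # 0.5: each fold is half "a"
```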

To evaluate the model on the test set, I used caret's confusionMatrix(x, y) function, where x is the vector of predicted values and y is the vector of true values from the test set. Here is part of its output:

```
              Reference
Prediction     WALKING WALKING_UP WALKING_DOWN SITTING STANDING LAYING
  WALKING          482         38           17       0        0      0
  WALKING_UP         7        426           37       0        0      0
  WALKING_DOWN       7          7          366       0        0      0
  SITTING            0          0            0     433       51      0
  STANDING           0          0            0      58      481      0
  LAYING             0          0            0       0        0    537

Overall Statistics

               Accuracy : 0.9247
                 95% CI : (0.9145, 0.9339)
```

Training on the full feature set took about 18 minutes on a laptop with an Intel Core i5. On OS X it could be made several times faster by using several processor cores via the doMC package. doMC relies on process forking, which Windows does not support; there, a socket cluster registered with the doParallel package can play the same role.
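The portable option is a socket (PSOCK) cluster, which works on Windows as well as on OS X and Linux. Below is a minimal sketch with base R's parallel package, where slow_fold() is a hypothetical stand-in for fitting one cross-validation fold. As far as I know, caret's train() runs its resampling through foreach. If so, registering such a cluster with doParallel::registerDoParallel(cl) before calling train() should let it use several cores on Windows too.

```r
library(parallel)

# Hypothetical stand-in for one expensive unit of work,
# e.g. fitting the model on one cross-validation fold.
slow_fold <- function(i) {
  Sys.sleep(0.2)   # pretend this is training
  i^2
}

n_workers <- 2
cl <- makeCluster(n_workers)        # PSOCK by default: works on Windows too
res_parallel <- parLapply(cl, 1:5, slow_fold)
stopCluster(cl)                     # always release the worker processes

res_serial <- lapply(1:5, slow_fold)
identical(res_parallel, res_serial)
```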

Caret supports several SVM implementations. I chose svmRadial (an SVM with a radial basis function kernel): it is used with caret more often than the others and is a common choice when nothing special is known about the data. To train an SVM model, it is enough to set the method parameter of train() to "svmRadial" and remove the do.trace and ntree parameters. The result: accuracy on the test set was 0.952, and training with fivefold cross-validation took a little over 7 minutes. A note to self: don't reach for Random Forest right away.
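What "radial basis function kernel" means in practice: the similarity of two cases decays with their squared Euclidean distance. Here is a base-R sketch of the kernel matrix. It uses the parameterization exp(-sigma * ||x - z||^2), which as far as I know is the one kernlab (the package behind caret's svmRadial) uses for its sigma parameter. rbf_kernel() is my own name.

```r
# Gaussian RBF kernel matrix: K[i, j] = exp(-sigma * ||x_i - z_j||^2)
rbf_kernel <- function(x, z = x, sigma = 1) {
  x <- as.matrix(x)
  z <- as.matrix(z)
  # squared distances via ||x||^2 + ||z||^2 - 2 x.z
  sq_dist <- outer(rowSums(x^2), rowSums(z^2), "+") - 2 * x %*% t(z)
  exp(-sigma * pmax(sq_dist, 0))   # pmax guards against tiny negative round-off
}

pts <- rbind(a = c(0, 0), b = c(1, 1), c = c(3, 0))
K <- rbf_kernel(pts, sigma = 0.5)
round(K, 3)   # diagonal is 1; K["a", "b"] = exp(-1)
```

In caret, sigma and the cost C are the two tuning parameters of svmRadial. A larger sigma makes the similarity fall off faster with distance.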

The importance of variables

The results of RF's built-in variable importance estimate can be obtained with caret's varImp() function. A call of the form plot(varImp(model), 20) plots the relative importance of the top 20 features.

"Acc" in a name means the variable was derived from the accelerometer signal, and "Gyro" means it came from the gyroscope. A close look at the plot shows that none of the most important variables come from the gyroscope, which I personally find surprising and cannot explain. ("Body" and "Gravity" are the two components of the accelerometer signal; the t and f prefixes denote the time and frequency domains.)
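Since the naming convention carries the physical meaning, it can be decoded mechanically, for example to count accelerometer versus gyroscope features in a top-20 list. Below is a rough sketch for names produced by editNames() above. decode_feature() is hypothetical, and its rules cover only the prefixes mentioned here; angle features such as "v555_angletBodyAccMean_gravity" get no domain.

```r
# Decode the sensor-related parts of a feature name created by editNames(),
# e.g. "v60_tGravityAcciqrX". Rules cover only the prefixes discussed above.
decode_feature <- function(name) {
  base <- sub("^v[0-9]+_", "", name)   # drop the "v<index>_" prefix
  first <- substr(base, 1, 1)
  has_domain <- first %in% c("t", "f") & substr(base, 2, 2) %in% c("B", "G")
  data.frame(
    name      = name,
    domain    = ifelse(has_domain, ifelse(first == "t", "time", "frequency"), NA),
    component = ifelse(grepl("Gravity", base), "Gravity",
                       ifelse(grepl("Body", base), "Body", NA)),
    sensor    = ifelse(grepl("Gyro", base), "Gyro",
                       ifelse(grepl("Acc", base), "Acc", NA)),
    jerk      = grepl("Jerk", base),
    stringsAsFactors = FALSE
  )
}

top <- c("v389_fBodyAccJerkbandsEnergy57_64",
         "v479_fBodyGyrobandsEnergy33_40",
         "v60_tGravityAcciqrX")
decoded <- decode_feature(top)
decoded
table(decoded$sensor)   # 2 Acc, 1 Gyro
```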

Feeding the features selected by importance back into RF is pointless: the forest has already selected and used them. With SVM, however, it may work. I started with the 10% most important variables and increased the share in 10% steps, checking accuracy each time. After finding a maximum, I reduced the step to 5%, then to 2.5% and finally to a single variable. The maximum accuracy, 0.9545, was reached at around 490 features. That is only a quarter of a percentage point better than with the full feature set, or about seven more correctly classified test cases. This work could have been automated: caret implements RFE (recursive feature elimination), which repeatedly drops the least important variables and tracks model accuracy for each subset size. It has two problems. First, RFE is very slow (with random forest helper functions it refits a forest at every step); for a data set of this size, in both features and cases, the process would take about a day. Second, RFE estimates accuracy on the training set (by resampling), and that is not at all the same as accuracy on the test set.
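The manual coarse-to-fine search described above can be sketched in a few lines of base R. find_best_k() is hypothetical, and acc(k) stands for whatever the caller supplies. In this article's setting it would train svmRadial on the top k names from the importance ranking and return the accuracy. The toy curve below is made up purely to exercise the search.

```r
# Coarse-to-fine search for the number of top-ranked features k that
# maximizes acc(k). Steps: 10%, 5%, 2.5% of p, then single variables;
# each finer pass only scans one previous step on either side of the best k.
find_best_k <- function(acc, p, fractions = c(0.10, 0.05, 0.025)) {
  steps <- unique(c(pmax(1, round(p * fractions)), 1))
  lo <- steps[1]
  hi <- p
  best_k <- NA
  best_acc <- -Inf
  for (s in steps) {
    ks <- unique(c(seq(lo, hi, by = s), hi))
    scores <- vapply(ks, acc, numeric(1))
    i <- which.max(scores)
    if (scores[i] > best_acc) {
      best_acc <- scores[i]
      best_k <- ks[i]
    }
    # zoom in around the current best for the next, finer pass
    lo <- max(1, best_k - s)
    hi <- min(p, best_k + s)
  }
  list(k = best_k, accuracy = best_acc)
}

# Toy accuracy curve peaking at k = 490 (a stand-in for real model training)
toy_acc <- function(k) 0.95 - abs(k - 490) / 1e4
find_best_k(toy_acc, p = 561)$k   # 490
```

Like the manual procedure, this assumes the accuracy curve has a single clear peak; a bumpy curve can trap it in a local maximum.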

The following code extracts from varImp the names of a given number of features, sorted by decreasing importance:

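```r
library(caret)
imp <- varImp(model)[[1]]   # importance data frame with an "Overall" column
# names of the 56 most important features (about 10% of 561)
vars <- rownames(imp)[order(imp$Overall, decreasing = TRUE)][1:56]
```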

Feature Filtering

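To clear my conscience, I decided to try another feature selection method: filtering based on information gain, which I abbreviate as IGR below. In translation the term appears as both "information gain" and "information gain ratio"; strictly speaking, the ratio is a normalized variant. Information gain is closely related to the Kullback-Leibler divergence. The information gain between a feature and the class label equals the KL divergence between their joint distribution and the product of their marginal distributions, so it measures how far the two variables are from being independent.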

To calculate IGR, I used the information.gain() function from the FSelector package, which needs a Java runtime (JRE) to work. The package also offers other tools for selecting features based on entropy and correlation. The larger the IGR, the more a feature tells about the activity; a value of zero means the feature carries no information about the class. After calculating IGR, I sorted the variables in descending order of IGR and looked at the top 20.
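Below is a base-R sketch of the quantity itself for a feature that has already been discretized. It computes information gain as H(class) + H(feature) - H(class, feature), in nats, and checks that this equals the KL divergence between the joint distribution and the product of the marginals. The function names are hypothetical. As far as I know, FSelector discretizes continuous features internally in its own way, whereas the usage example below simply bins with cut().

```r
# Entropy (in nats) of a vector or table of counts
entropy <- function(counts) {
  p <- counts[counts > 0] / sum(counts)
  -sum(p * log(p))
}

# Information gain of a discrete feature about the class:
# H(class) + H(feature) - H(class, feature)
info_gain <- function(feature, class) {
  joint <- table(feature, class)
  entropy(rowSums(joint)) + entropy(colSums(joint)) - entropy(joint)
}

# The same quantity written as a KL divergence: D_KL(P(f, c) || P(f) P(c))
kl_joint_vs_product <- function(feature, class) {
  p_fc <- prop.table(table(feature, class))
  expected <- outer(rowSums(p_fc), colSums(p_fc))
  nz <- p_fc > 0
  sum(p_fc[nz] * log(p_fc[nz] / expected[nz]))
}

# Usage: a continuous feature that shifts with the class, binned into 5 intervals
set.seed(1)
activity <- factor(rep(c("sitting", "walking"), each = 100))
signal   <- rnorm(200, mean = ifelse(activity == "walking", 1, 0))
binned   <- cut(signal, breaks = 5)
c(info_gain = info_gain(binned, activity),
  kl        = kl_joint_vs_product(binned, activity))
```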

IGR produces a completely different set of "important" features: only five of them coincide with the top features by RF importance. Again there is no gyroscope among the top features, but there are many features with the X component. The maximum IGR is 0.897 and the minimum is 0. With the ranked list in hand, I proceeded exactly as with importance and tested it on SVM and RF, but could not significantly improve accuracy.

I think feature selection does not always work in problems like this, for at least two reasons. The first has to do with how the features were constructed. The researchers who prepared the data set tried to squeeze all the information out of the sensor signals, and probably did so deliberately. Some features carry more information, some less (only one variable turned out to have an IGR of zero). This is easy to see if you plot features with different IGR levels; for definiteness, I chose the 10th and the 551st. For the feature with high IGR, the points are visually well separated; for the low-IGR one, they look like a colorful jumble, yet they evidently still carry some useful information.

The second reason is that the dependent variable is a factor with more than two levels (six here). Achieving maximum accuracy in one class degrades performance in another. This shows up in the confusion matrices for two different feature sets with the same accuracy:

Accuracy: 0.9243, 561 variables

```
              Reference
Prediction     WALKING WALKING_UP WALKING_DOWN SITTING STANDING LAYING
  WALKING          483         36           20       0        0      0
  WALKING_UP         1        428           44       0        0      0
  WALKING_DOWN      12          7          356       0        0      0
  SITTING            0          0            0     433       45      0
  STANDING           0          0            0      58      487      0
  LAYING             0          0            0       0        0    537
```

Accuracy: 0.9243, 526 variables

```
              Reference
Prediction     WALKING WALKING_UP WALKING_DOWN SITTING STANDING LAYING
  WALKING          482         40           16       0        0      0
  WALKING_UP         8        425           41       0        0      0
  WALKING_DOWN       6          6          363       0        0      0
  SITTING            0          0            0     429       44      0
  STANDING           0          0            0      62      488      0
  LAYING             0          0            0       0        0    537
```

The upper version misclassifies fewer cases of the first, second and fourth classes; the lower one misclassifies fewer cases of the third and fifth.
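A quick way to see where the two models differ is per-class recall (sensitivity): the diagonal divided by the reference column totals. Below is a base-R sketch with both matrices typed in from above. Nothing in it is caret-specific, and to_cm() and recall() are my own helper names.

```r
classes <- c("WALKING", "WALKING_UP", "WALKING_DOWN", "SITTING", "STANDING", "LAYING")
to_cm <- function(v) matrix(v, nrow = 6, byrow = TRUE,
                            dimnames = list(Prediction = classes, Reference = classes))

cm_561 <- to_cm(c(483,  36,  20,   0,   0,   0,
                    1, 428,  44,   0,   0,   0,
                   12,   7, 356,   0,   0,   0,
                    0,   0,   0, 433,  45,   0,
                    0,   0,   0,  58, 487,   0,
                    0,   0,   0,   0,   0, 537))
cm_526 <- to_cm(c(482,  40,  16,   0,   0,   0,
                    8, 425,  41,   0,   0,   0,
                    6,   6, 363,   0,   0,   0,
                    0,   0,   0, 429,  44,   0,
                    0,   0,   0,  62, 488,   0,
                    0,   0,   0,   0,   0, 537))

# share of each true class that was classified correctly
recall <- function(cm) diag(cm) / colSums(cm)

# correct cases per class; positive means the 561-variable model wins:
# WALKING +1, WALKING_UP +3, WALKING_DOWN -7, SITTING +4, STANDING -1, LAYING 0
diag(cm_561) - diag(cm_526)
round(rbind(v561 = recall(cm_561), v526 = recall(cm_526)), 3)
```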

To summarize:

1. SVM beat Random Forest on my task: it trained more than twice as fast (a little over 7 minutes versus about 18) and produced a better model (0.952 versus 0.9247 accuracy on the test set).

2. It pays to understand the physical meaning of the variables. It also seems that, for tasks like this one, some devices could do without a gyroscope.

3. RF variable importance can be used to select variables for other learning methods.

4. Filter-based feature selection does not always improve model quality, but it can reduce the number of features, and with it the training time, at a small cost in quality (with the top 20% of variables by importance, SVM accuracy was only 3% below the maximum).

The code for this article can be found in my repository.

A few useful links:

- Feature Selection with the Caret R Package

- Random Forests, Leo Breiman and Adele Cutler

UPD:

On kenoma's advice, I also tried principal component analysis (PCA), using the preProcess() function from caret:

```r
library(caret)

# PCA fitted on the training features only (column 1 is Activity)
pca_mod <- preProcess(train_data[, -1], method = "pca", thresh = 0.95)

pca_train_data <- predict(pca_mod, newdata = train_data[, -1])
dim(pca_train_data)
# [1] 7352  102
pca_train_data$Activity <- train_data$Activity

# apply the same transformation to the test features
pca_test_data <- predict(pca_mod, newdata = test_data[, -1])
pca_test_data$Activity <- test_data$Activity
```

With a cutoff of 0.95 (the share of variance to retain), this gave 102 components.
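How the 0.95 cutoff turns into a number of components can be sketched with base R's prcomp(): standardize the columns, compute each component's share of the variance, and take the smallest k whose cumulative share reaches the threshold. This only approximates what preProcess() does; as far as I know, it also centers and scales before PCA. n_components_for() is a hypothetical name.

```r
# Smallest number of principal components explaining at least `thresh`
# of the total variance of the standardized columns of x.
n_components_for <- function(x, thresh = 0.95) {
  pca <- prcomp(x, center = TRUE, scale. = TRUE)
  cum_var <- cumsum(pca$sdev^2) / sum(pca$sdev^2)
  which(cum_var >= thresh)[1]
}

# Toy data: the third column is an exact linear combination of the first two,
# so two components carry all of the variance.
x1 <- 1:10
x2 <- c(2, 7, 1, 8, 2, 8, 1, 8, 2, 8)
toy <- data.frame(x1 = x1, x2 = x2, x3 = x1 + x2)
n_components_for(toy, thresh = 0.95)   # 2
```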

RF accuracy on the test set: 0.8734 (about 5 percentage points lower than with the full feature set).

SVM accuracy: 0.9386 (about one percentage point lower). I think this is a pretty good result, so the tip was helpful.