How to farm Kaggle

* farm - (from the English. Farming) - a long and boring repetition of certain game actions with a specific purpose (gaining experience, resource extraction, etc.).

Introduction

Recently (October 1) a new session of an excellent course on DS / ML started (I highly recommend as an initial course to anyone who wants, as it is now called, “enter” DS). And, as usual, after the end of any course, graduates have a question - and where now to get practical experience in order to consolidate the still raw theoretical knowledge. If you ask this question in any profile forum, the answer is likely to be the same - go decide Kaggle. Kaggle is yes, but where to start and how to most effectively use this platform for leveling up practical skills? In this article, the author will try to give his own answers to these questions, as well as describe the location of the main rake on the field of competitive DS, to speed up the process of pumping and get from this fan.

A few words about the course from its creators:

The mlcourse.ai course is one of the large-scale activities of the OpenDataScience community. @yorko and company (~ 60 people) demonstrate that you can get cool skills outside the university and even absolutely free. The main idea of the course is the optimal combination of theory and practice. On the one hand, the presentation of the basic concepts is not without mathematics, on the other hand - a lot of homework, Kaggle Inclass competitions and projects will give, with a certain investment of strength on your part, excellent machine learning skills. It is impossible not to note the competitive nature of the course - there is a general rating of students, which strongly motivates. The course also differs in that it takes place in a truly lively community.

The course includes two Kaggle Inclass competitions. Both are very interesting, the construction of features works well in them. The first is user identification by the sequence of visited sites . The second is a prediction of the popularity of an article on Medium . The main benefit is from two homework assignments, where you have to be smart and beat the baselines in these competitions.

Having paid tribute to the course and its creators, we continue our history ...

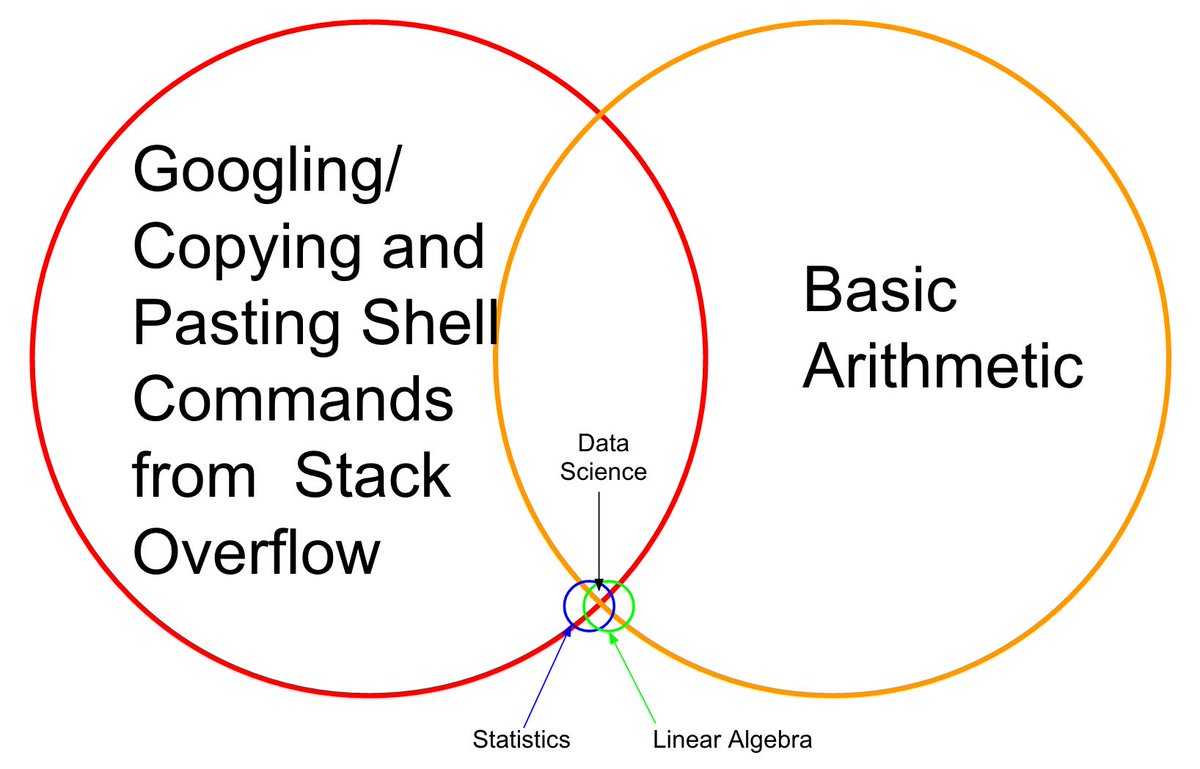

I remember myself a year and a half ago, a course (still the first version) from Andrew Ng was completed, specialization from MIPT was completed , a lot of books were read - a little bit of theoretical knowledge is full, but when trying to solve any basic combat task, a stupor arises. No, how to solve the problem - it is clear which algorithms to use - is also understandable, but the code is written very hard, with a minute call on the sklearn / pandas help, etc. Why is it so - there is no accrued pipelines and the feeling of the code "at your fingertips".

So it will not work, the author thought, and went to Kaggle. It was scary to start from the combat competition right away, and the first sign was Getting started the " House Prices: Advanced Regression Techniques " competition , in which that approach to efficient pumping described in this article took shape.

In what will be described further, there is no know-how, all the techniques, methods and techniques are obvious and predictable, but this does not detract from their effectiveness. At least by following them, the author managed to take the Kaggle Competition Master die for six months and three competitions in solo mode and, at the time of writing this article, enter the top-200 of the Kaggle world ranking . By the way, this answers the question of why the author at all allowed himself the courage to write an article of this kind.

In a nutshell, what is Kaggle?

Kaggle is one of the most well-known platforms for holding Data Science competitions. In each competition, the organizers post a description of the problem, the data for solving this task, a metric by which the decision will be assessed - and set time limits and prizes. Participants are given from 3 to 5 attempts (by the will of the organizers) per day for “submit” (sending their own solution).

The data is divided into a training sample (train) and test (test). For the training part, the value of the target variable (target) is known, for the test one - no. The task of the participants is to create a model that, being trained in the training part of the data, will give the maximum result on the test.

Each participant makes predictions for the test sample - and sends the result to Kaggle, then the robot (who knows the target variable for the test) evaluates the result sent, which is displayed on the leaderboard.

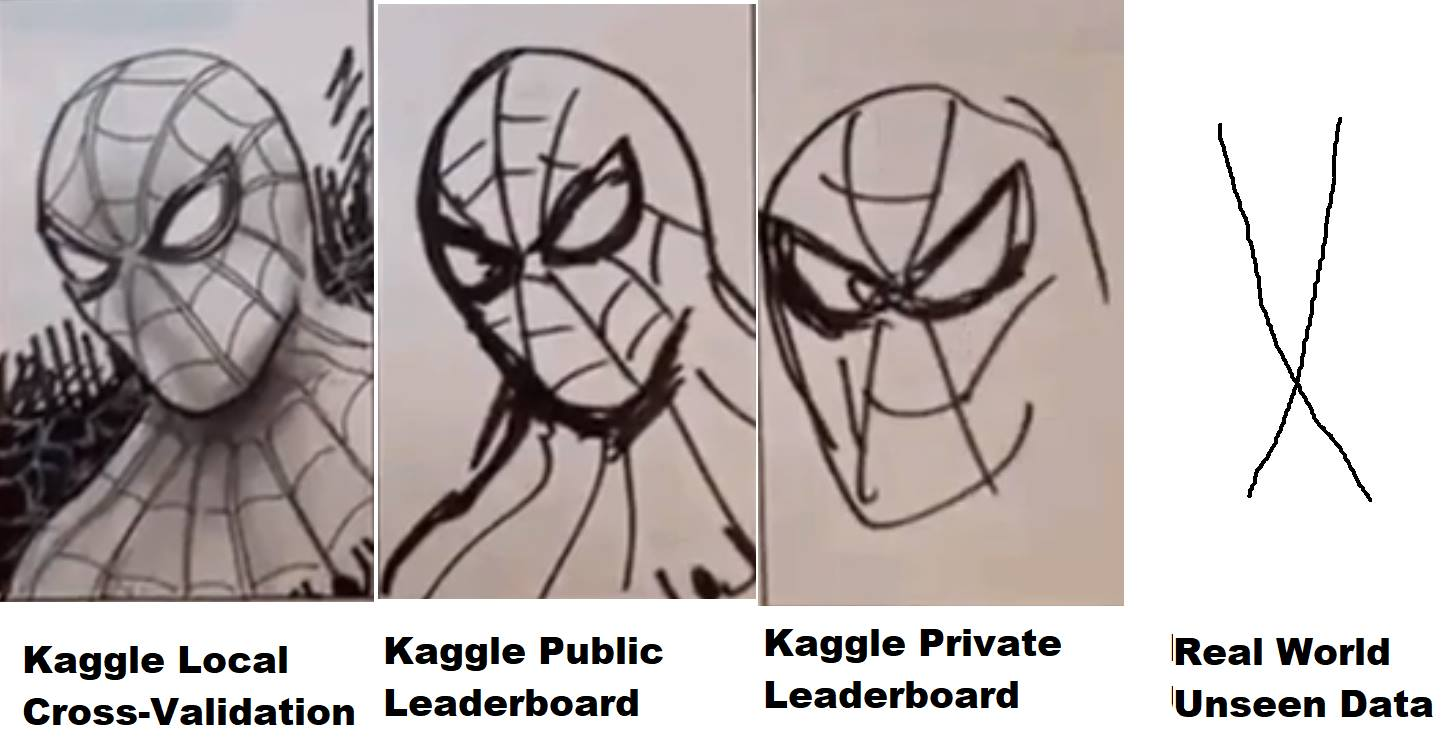

But not everything is so simple - test data, in turn, is divided in a certain proportion into a public (public) and private (private) part. During the competition, the submitted decision is evaluated, according to the metrics set by the organizers, on the public part of the data and is laid out on the leaderboard (so-called public leaderboard) - according to which participants can evaluate the quality of their models. The final decision (usually two - at the participant's choice) is evaluated on the private part of the test data - and the result falls on the private leaderboard, which is available only after the end of the competition and according to which, in fact, the final results are evaluated, prizes, buns, and medals are distributed.

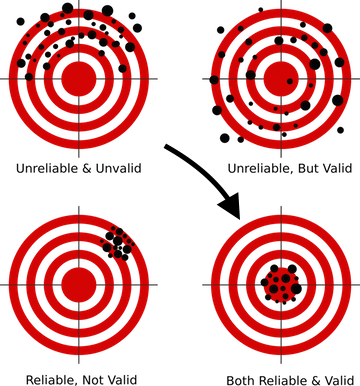

Thus, during the competition only information is available to participants as their model behaved (what result - or soon it showed) in the public part of the test data. If, in the case of a spherical horse in a vacuum, the private part of the data coincides in distribution and statisticians with the public one, everything is fine, but if not, then a model that showed itself well in public may not work on the private part, that is, trust (retrain). And it is here that what is called “flight” in the jargon, when people from 10 places in public fly down to 1000-2000 places on the private part due to the fact that their chosen model has retrained and failed to give the necessary accuracy for new data.

How to avoid it? To do this, first of all, it is necessary to build the correct validation scheme, what is taught in the first lessons in almost all DS courses. Because if your model cannot give the correct prediction for data that it has never seen - then you wouldn’t use any sophisticated technique, no matter how complex neural networks would be built - you cannot produce such a model, because her results are worth nothing.

For each competition on Kaggle a separate page is created on which there is a section with data, with a description of the metric - and the most interesting for us is the forum and the kernels.

Forum he and Kaggle forum, people write, discuss and share ideas. But kernels are more interesting. In fact, it is an opportunity to run your code, which has direct access to competition data in the Kaggle cloud (similar to Amazon AWS, Google GCE, etc.) Limited resources are allocated for each kernel, so if there is not a lot of data, then work with You can use them directly from the browser on the Kaggle website - write the code, launch it, submit the result. Two years ago, Kaggle was acquired by Google, so it’s no wonder that this functionality is used under the hood by the Google Cloud Engine.

Moreover there were several competitions (the recent one was Mercari ), where it was possible to work with data in general only through kernels. A very interesting format, leveling the difference in the gland of the participants and forcing the brain to turn on the subject of code optimization and approaches, since, naturally, the kernels had a hard resource limit, at that time - 4 cores / 16 GB RAM / 60 minutes run-time / 1 GB scratch and output disk space. While working on this competition, the author learned more about the optimization of neural networks than from any theoretical course. It was not enough for the gold, finished solo 23rd, but got a lot of experience and pleasure ...

Taking this opportunity, I want to say once again Thanks to colleagues from ods.ai - Arthur Stepanenko (arthur) , Konstantin Lopukhin (kostia) , Sergey Fironov (sergeif) for advice and support in this competition. In general, there were many interesting moments, Konstantin Lopukhin (kostia) , who took the first place together with Paweł Jankiewicz , then laid out what they called “ reference humiliation in 75 lines ” in chatika - the kernel of 75 lines of code, which gives the result to the gold zone of the leaderboard. This is, of course, a must see :)

Well, they were distracted, so - the people write the code and lay out the kernels with solutions, interesting ideas and other things. Usually, in each competition in a couple of weeks, one or two excellent kernel EDA (exploratory data analysis) appears with a detailed description of dataset, statistics, characteristics, etc. And a couple of baselines (basic solutions), which, of course, do not show the best result on the leaderboard, but they can be used as a starting point for creating your own solution.

Why Kaggle?

In fact, no matter what platform you play, just Kaggle is one of the first and most promoted, with an excellent community and a fairly comfortable environment (I hope they will finalize the kernels for stability and performance, but many people remember the hell that happened in Mercari ) But, in general, the platform is very convenient and self-sufficient, and its dies are still appreciated.

A small digression in general on the topic of competitive DS. Very often, in articles, conversations and other conversations, the thought sounds that this is all bullshit, the experience in competitions has no relation to real problems, and the people there are engaged in tyunit 5th decimal point, which is insanity and divorced from reality. Let's look at this issue a little more:

As practicing DS-specialists, unlike academy and science, we, in our work, should and will solve business problems. That is (here is a reference to CRISP-DM ) to solve the problem you need:

- understand business problem

- evaluate the data on the subject of whether they can hide the answer to this business problem

- collect additional data if there is not enough existing to receive a response

- select the metric that most accurately sapphds the business goal

- and only after that choose the model, convert the data under the selected model and "drain the bits". (WITH)

The first four items from this list are not taught anywhere (correct me if such courses have appeared - I will sign up without hesitation), then just learn from the experience of colleagues working in this industry. But the last point - starting with the choice of the model and further, can and should be pumped in competitions.

In any competition, most of the work for us was done by the organizers. We have a described business goal, an approximating metric is selected, data is collected - and our task is to build a working pipeline from all of this Lego. And it is here that the skills are pumped - how to work with passes, how to prepare data for neural networks and trees (and why neural networks require a special approach), how to correctly build validation, how not to retrain, how to choose hyper parameters, how ... ... yes a dozen and two “hows”, the competent execution of which distinguishes a good specialist from the people in our profession.

What you can farm on Kaggle

Basically, and this is reasonable, all newcomers come to Kaggle to gain and pump practical experience, but one should not forget that besides this there are at least two goals:

- Farm medals and dies

- Farm reputation in the Kaggle community

The main thing to remember is that these three goals are completely different, to achieve them different approaches are required, and you should not mix them up especially at the initial stage!

It is not for nothing that the “initial stage” is emphasized , when you pump through - these three goals will merge into one and will be solved in parallel, but while you are just starting out - do not mix them ! This way you will avoid pain, disappointment and resentment of this unjust world.

Let's go briefly on the objectives from the bottom up:

- Reputation - is pumped by writing good posts (and comments) on the forum and creating useful kernels. For example, EDA kernels (see above), posts describing non-standard techniques, etc.

- Medals are a very controversial and haute topic, but oh well. Bleeding public Kernels (*) is pumped through, participation in a team with a bias in the experience, and the creation of a top pipeline.

- Experience - pumped through the analysis of decisions and work on the bugs.

(*) blending of public kernels is a technique of farm medals, at which the laid out kernels are selected with the maximum soon on the public leaderboard, their predictions are averaged (blends), the result is submitted. As a rule, this method leads to a hard overfit (retraining on the train) and flying to a private flight, but sometimes it allows you to get a submission almost in silver. The author, at the initial stage, does not recommend such an approach (read below about the belt and pants).

I recommend the first goal to choose "experience" and stick to it until the moment when you feel ready to work on two / three goals at the same time.

There are two more points worth mentioning (Vladimir Iglovikov (ternaus) - thanks for the reminder).

The first is the conversion of efforts invested in Kaggle into a new, more interesting and / or highly paid job. No matter how now the Kaggle dies are leveled, but for understanding people, the line in the summary of the "Kaggle Competition Master", and other achievements, all the same, are worth something.

As an illustration of this point, two interviews ( one , two ) with our colleagues Sergey Mushinsky (cepera_ang) and Alexander Buslaev (albu)

And also the opinion of Valery Babushkina ( venheads) :

Valery Babushkin - Head of Data Science at X5 Retail Group (the current number of units is 30 people + 20 vacancies from 2019)

Team Leader, Analytics, Yandex Advisor

Kaggle Competition Master is an excellent proxy metric for evaluating a future team member. Of course, in connection with the latest events in the form of teams of 30 people each and naked steam locomotives, a little more thorough study of the profile is required than before, but this is still a matter of a few minutes. A person who has achieved the title of master, is most likely able to write at least medium quality code, is reasonably versed in machine learning, knows how to clean the data and build stable solutions. If the master can still not boast with a master, then the fact of participation is also a plus, at least the candidate knows about the existence of Kagla and was not lazy and spent time learning it. And if something other than the public Kernel was launched and the resulting solution surpassed its results (which is pretty easy to verify), This is a reason for a detailed conversation about the technical details, which is much better and more interesting than classical questions on the theory, the answers to which give less understanding of how people will cope with their work in the future. The only thing to be afraid of and what I have come across is that some people think that the work of DS is about as Kagle, which is fundamentally wrong. Many still think that DS = ML, which is also a mistake

The second point is that many problems can be solved in the form of pre-prints or articles, which on the one hand allows the knowledge that the collective mind gave birth to during the competition not to die in the wilds of the forum, and on the other adds one more line to the authors portfolio and +1 visibility, which in any case has a positive effect on the career and on the citation index.

Authors (in alphabetical order):

Andrei O., Ilya, albu, aleksart, alex.radionov, almln, alxndrkalinin, cepera_ang, dautovri, davydov, fartuk, golovanov, ikibardin, kes, mpavlov, mvakhrushev, n01z3, rakhlin, rauf, resolut, scitator, selim_sef, shvetsiya, snikolenko, ternaus, twoleggedeye, versus, vicident, zfturbo

How to avoid the pain of losing medals

Score!

I will explain. In almost every competition, closer to its end, a kernel is laid out on the public with a solution that shifts the entire leaderboard up, well, and you, with your decision, respectively, down. And every time on the forum begins the pain! As so, here I had a decision on silver, and now I don’t even draw on bronze. What business, return all back.

Remember - Kaggle is a competitive DS. Where you are on the leaderboard is up to you. Not from the guy who laid out the kernel, not from the stars came together or not, but from how much effort you put into the decision and whether you used all the possible ways to improve it.

If Public Kernel knocks you from your place on the leaderboard - this is not your place.

Instead of pouring out the pain of the injustice of the world - thank this guy. Seriously, Public Kernel with a better solution than yours means that you missed something in your pipelines. Find what exactly, improve your pipeline - and go around the whole crowd of hamsters with the same soon. Remember, to return to your place you just need to be a little bit better than this public.

How I was upset by this moment in the first competition, my hands were already falling, here you are in silver - and here you are in the bottom of the leaderboard. Nothing, you just have to get together, to understand where and what you missed - to alter your decision - and to return to the place.

Moreover, this moment will be present only at an early stage of your competitive process. The more experienced you become, the less the laid kernels and stars will influence you. In one of the last competitions ( Talking Data , in which our team took 8th place ) they also laid out such a kernel, but he was honored with just one line in our team chat from Pavel Pleskov (ppleskov) : " Guys, I messed it up with our decision it just got worse - we throw it away . " That is, all the useful signal that this kernel was pulling out of the data was already pulled out by our models.

And about the medals - remember:

"a belt without equipment is needed only to maintain pants" (C)

Where, on what and how to write code.

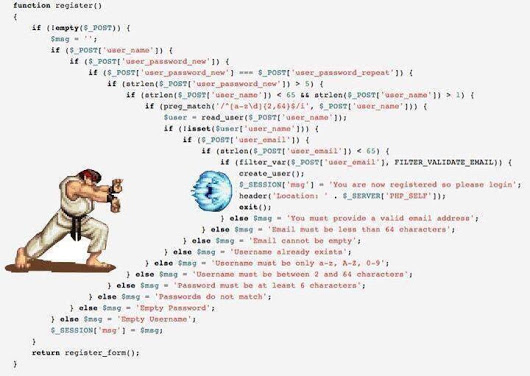

Here is my recommendation - python 3.6 on jupyter notebook under ubuntu . Python has long become the de facto standard in DS, given the huge number of libraries and the community, jupyter , especially with the presence of jupyter_contrib_nbextensions very convenient for rapid prototyping, analysis and data processing, ubuntu is convenient in itself, plus some of the data processing is sometimes easier to do in bash :)

After installing jupyter_contrib_nbextensions, I immediately recommend that you include:

- Collapsible headings (helps a lot in organizing blocks of code)

- Code folding (same)

- Split cells (rarely, but useful if you need to debug something in parallel)

And your life will become much simpler and more pleasant.

As soon as your pipelines become more or less stable, I recommend to immediately take the code into separate modules. Believe me - you will rewrite it more than once and not two or even five. But it normal.

There is just the opposite approach when participants try to use jupyter notebook as rarely as possible and only when necessary, preferring to write the pipelines immediately with scripts. (An adherent of such an option is, for example, (Vladimir Iglovikov (ternaus) )

And there are those who are trying to combine jupyter with an IDE, for example pycharm .

Each approach has the right to life, each has its pros and cons, as they say "the taste and color of all markers are different." Choose what you want to work.

But in any case, make it a rule.

save the code for each submission / OOF (see below) .

(*) OOF - out of folds , a technique for obtaining model predictions for the training part of datasets using cross-validation. Indispensable for the further assembly of several solutions in the ensemble. It is taught again in courses or easily googled.

How? Well, there are at least three options:

- For each competition, a separate repository is created on the githaba or bitback and the code for each submission is commited to the repository with a commentary containing the resulting speed, model parameters, etc.

- The code of each submission is collected in a separate archive, with the name of the file, in which all the meta information of the submission is specified (same speed, parameters, etc.)

- It uses a version control system sharpened specifically for DS / ML. For example https://dvc.org .

In general, in the community there is a tendency of a gradual transition to the third option, because both the first and second have their flaws, but they are simple, reliable and, frankly, for Kaggle they are quite enough.

Yes, more about python for those who are not programmers - do not be afraid of it. Your task is to understand the basic structure of the code and the basic essence of the language in order to understand other people's kernels and write your own libraries. There are many good beginner courses on the web, perhaps in the comments they will tell you exactly where. Unfortunately (or fortunately) I cannot assess the quality of such courses, so I don’t provide references in the article.

So, go to the framework

Note

All further description will be based on working with tabular and textual data. Pictures, which are now very much on Kaggle - this is a separate topic with separate frameworks. At the basic level, it’s good to be able to handle them, at least in order to get rid of something like ResNet / VGG and pull features, but deeper and more subtle work with them is a separate and very extensive topic that is not covered in this article.

The author honestly admits that he is not very good at pictures. The only attempt to join the beauty was in the Camera Identification competition , in which, by the way, our teams with the [ ods.ai ] tag blew up the entire leaderboard to such an extent that the Kaggle admins had to come to us in a weak place to make sure that everything was within rules - and reassure the community. So, in this competition, I got honorable silver from the 46th place, and when I read the description of top solutions from our colleagues, I realized that to climb higher and not shine - they actually use black magic with augmentation, good data of 300GB, sacrifices and other things.

In general, if you want to start with pictures, then you need other frameworks and other tutorials.

the main goal

Your task is to write pipelines (designed in the form of jupyter notebooks + modules) for the following tasks:

- EDA (exploratory data analysis) . Here you have to make a note - there are specially trained people at Kaggle :), who in each competition are sawing stunning EDA kernels. You can hardly pass them, but you still have to understand how you can look at the data, because In combat missions, this specially trained person will be you. Therefore, we study approaches, expand our libraries.

- Data Cleaning is all about data cleaning. Emissions, omissions, etc.

- Data preparation - all that concerns the preparation of data for the model. Several blocks:

- Overall

- For regressions / neural networks

- For trees

- Special (time series, pictures, FM / FFM )

- Text ( Vectorizers, TF-IDF , Embeddings )

- Models

- Linear models

- Tree models

- Neural Networks

- Exotic (FM / FFM)

- Feature selection

- Hyperparameters search

- Ensemble

In the kernels, usually all these tasks are assembled into a single code, which is understandable, but I highly recommend for each of these subtasks to have a separate laptop and a separate module (set of modules). So it will be easier for you later.

Warning a possible holivar - the structure of this framework is not the ultimate truth, there are many other ways to structure your pipelines - this is just one of them.

Data is transferred between modules either in the form of CSV or feather / pickle / hdf - which is more convenient for you and what your soul is used to or is used to.

In fact, a lot still depends on the amount of data, in TalkingData, for example, I had to go through memmap to get around the lack of memory when creating a dataset for lgb.

In other cases - the main data is stored in hdf / feather, something small (such as a set of selected attributes) - in CSV . I repeat - there are no templates, who are accustomed to what, and work with that.

First stage

Go to any Getting started competition (as already mentioned, the author began with House Prices: Advanced Regression Techniques ), and begin to create our laptops. We read public kernels, copy pieces of code, procedures, approaches, etc. etc. We run the data through the pipeline, submit it - we look at the result, improve and so on in a circle.

The task at this stage is to assemble an effectively working full-cycle pipeline - from loading and clearing data to the final submission.

A sample list of what should be ready and working 100% before proceeding to the next step:

- EDA . (dataset statistics, boxsplots, scatter of categories, ...)

- Data Cleaning. (skips through fillna, cleaning categories, combining categories)

- Data preparation

- General (processing of categories - label / ohe / frequency, projection of numerical into categories, transformation of numerical, binning)

- For regressions (different scaling)

- Models

- Linear models (various regressions - ridge / logistic)

- Tree models (lgb)

- Feature selection

- grid / random search

- Ensemble

- Regression / lgb

Go to battle

Choose any competition you like and ... begin :)

We look at the data, read the forums and build a sustainable validation scheme . There will be no normal scheme - you will fly on the leaderboard, as in competitions from Mercedes, Santander and others. Look at the Mercedes leaderboard , for example (green arrows and numbers mean how many positions people climbed in leaderboards compared to public, red ones - how many flew down):

I also recommend reading the article and speech by Danila Savenkov (danila_savenkov) :

Also this topic is well covered in the How to Win a Data Science Competition: Learn from Top Kagglers "

As long as there is no working validation scheme, we don’t go further !!!

- We run the data through our formed pipeline and submit the result

- We grab for the head, become psychotic, calm down ... and continue ...

- We read all the kernels for the used techniques and approaches

- We read all the discussions on the forum

- Rework / supplement pipelines with new techniques

- Go to paragraph 1.

Remember - our goal at this stage is to gain experience ! Fill our pipelines with working approaches and methods, fill our modules with working code. We don’t bother with the medals - or rather, it’s great if you can immediately take your place on the leaderboard, but if not, we’re not steaming. We did not come here for five minutes, medals and dies will not go anywhere.

Here the competition is over, you are somewhere out there, everything seems to be - grab the next?

NOT!

What do you do next:

- Wait five days. Do not read the forum, forget about Kaggle at this time. Give the brain a rest and blur the view.

- Go back to the competition. During these five days, according to the rules of good tone, all the tops will post a description of their decisions - in posts on the forum, in the form of kernels, in the form of gitkhabovsky repositories.

And here begins your personal hell!

- You take several sheets of A4 format, on each write the name of the module from the above framework (EDA / Preparation / Model / Ensemble / Feature selection / Hyperparameters search / ...)

- Consistently read all the decisions, write out on the appropriate leaflets new techniques for you, methods, approaches.

And the worst:

- Consistently for each module, write (peep) the implementation of these approaches and methods, expanding your pipeline and libraries.

- In the post-submission mode, run the data through your updated pipeline until you have a solution to the gold zone, or the patience and nerves are gone.

And only after that go to the next competition.

No, I'm not fucked up. Yes, it can be easier. You decide.

Why wait for 5 days, and not read immediately, because the forum can ask questions? At this stage (in my opinion) it is better to read already formed threads with discussion of decisions, questions that you may have - either someone has already asked, or they are better not to ask at all, but look for the answer yourself)

Why do it all this way? Well, once again - the task of this stage is to build a base of solutions, methods and approaches. Combat work base. So that in the next competition you don’t waste time, but immediately say - aha, mean target encoding can come in here , and by the way, I have the correct code for this through the folds in the folds. Or oh! I remember then the ensemble came in through scipy.optimize , and by the way, my code is already ready.

Something like this...

We leave to the operating mode

In this mode, we solve several competitions. Each time we notice that there are less and less records on the leaflets, and more and more code in the modules. Gradually, the task of analysis is reduced to the fact that you just read the description of the solution, say yeah, oh, oh, that's how it is! And you add one or two new spells or approaches in your piggy bank.

After this mode is changed to the mode of work on the errors. You have the base ready, now you just need to apply it correctly. After each competition, reading the description of the decisions, look - what you did not do, what could be done better, what you missed, well, or where you specifically lazhanulis, as I happened in Toxic . It walked quite well, into the underbelly of gold, and flew down to its private position by 1,500 positions. It's a shame to tears ... but he calmed down, found a mistake, wrote a post in a slaka - and learned a lesson.

The fact that one of the descriptions of the top solution will be written from your nickname can serve as a sign of the final exit to the working mode.

What should be approximately in the pipeline by the end of this stage:

- All sorts of options for pre-processing and creating numeric features - projections, relationships,

- Different methods of working with categories - Mean target encoding in the correct version, frequencies, label / ohe,

- Various schemes of embeddings over text (Glove, Word2Vec, Fasttext)

- Various text vectoring schemes (Count, TF-IDF, Hash)

- Several validation schemes (N * M for standard cross-validation, time-based, by group)

- Bayesian optimization / hyperopt / something else for selecting hyper parameters

- Shuffle / Target permutation / Boruta / RFE - for feature selection

- Linear models - in the same style over a single data set.

- LGB / XGB / Catboost - in the same style over a single data set

The author made metaclasses separately for linear and tree-based models, with a single external interface, in order to level the differences in API between different models. But now it is possible to run in a single key with one line, for example, LGB or XGB over one processed data set.

- Several neural networks for all occasions (do not take pictures yet) - embeddings / CNN / RNN for text, RNN for sequences, Feed-Forward for everything else. It is good to understand and be able to auto-encoders .

- The lgb / regression / scipy ensemble - for regression and classification tasks

- It’s good to already be able to Genetic Algorithms , sometimes they come in well

Summarize

Any sport, and competitive DS is also a sport, it is a lot of sweat and a lot of work. This is neither good nor bad, it is a fact. Participation in competitions (if you approach the process correctly) pumps technical skills very well, plus more or less shakes the sporting spirit when you really don't want to do something, breaks everything directly - but you get up to the laptop, redo the model, run it on to gnaw this unfortunate 5th decimal place.

So decide Kaggle - farm experience, medals and fan!

A couple of words about the author's pipelines

In this section I will try to describe the main idea of the pipelines and modules assembled in a year and a half. Again, this approach does not pretend to be universal or unique, but suddenly it will help someone.

- All code for feature-engineering, except for mean target encoding, is placed in a separate module as functions. Tried to collect through objects, it turned out cumbersome, and in this case it is not necessary.

- All functions of feature-engineering are executed in the same style and have a single signature of call and return:

defdo_cat_dummy(data, attrs, prefix_sep='_ohe_', params=None):

# do somethingreturn _data, new_attrsAt the input we pass dataset, attributes for work, prefix for new attributes and additional parameters. At the output we get a new dataset with new attributes and a list of these attributes. Next, this new dataset is stored in a separate pickle / feather.

What it gives is that we get the opportunity to quickly assemble datasets for learning from pre-generated cubes. For example, for categories we do three processing at once - Label Encoding / OHE / Frequency, save to three separate feathers, and then at the modeling stage we just play with these blocks, creating elegant datasets for learning in one elegant movement.

pickle_list = [

'attrs_base',

'cat67_ohe',

# 'cat67_freq',

]

short_prefix = 'base_ohe'

_attrs, use_columns, data = load_attrs_from_pickle(pickle_list)

cat_columns = []If you need to build another dataset, we change pickle_list, reboot, and work with the new dataset.

The main set of functions over tabular data (real and categorical) includes various categories coding, projection of numeric attributes onto categorical, as well as various transformations.

defdo_cat_le(data, attrs, params=None, prefix='le_'):

defdo_cat_dummy(data, attrs, prefix_sep='_ohe_', params=None):

defdo_cat_cnt(data, attrs, params=None, prefix='cnt_'):

defdo_cat_fact(data, attrs, params=None, prefix='bin_'):

defdo_cat_comb(data, attrs_op, params=None, prefix='cat_'):

defdo_proj_num_2cat(data, attrs_op, params=None, prefix='prj_'):A universal Swiss knife for combining attributes, to which we transfer the list of initial attributes and the list of conversion functions, as a result we get, as usual, a list of new attributes.

defdo_iter_num(data, attrs_op, params=None, prefix='comb_'):Plus various additional specific converters.

For processing text data, a separate module is used, which includes various methods of preprocessing, tokenization, lemmatization / stemming, translation into a frequency table, and so on. etc. Everything is standard using sklearn , nltk and keras .

Time series are also processed by a separate module, with the transformation functions of the original dataset both for regular tasks (regression / classification) and for sequence-to-sequence. Thank you to François Chollet for finishing keras in order to build seq-2-seq models not like voodoo ritual for calling demons.

In the same module, by the way, are the functions of the usual statistical analysis of the series - a test for stationarity, STL-decomposition, etc ... It helps a lot at the initial stage of the analysis in order to "touch" the series and see what it is.

Functions that cannot be applied immediately to the entire dataset, but need to be used inside the folds during cross-validation are moved to a separate module:

- Mean target encoding

- Upsampling / Downsampling

They are transmitted inside the class of the model (read about the model below) at the training stage.

_fpreproc = fpr_target_enc

_fpreproc_params = fpr_target_enc_params

_fpreproc_params.update(**{

'use_columns' : cat_columns,

})

- A metaclass has been created for modeling, which generalizes the concept of a model, with abstract methods: fit / predict / set_params /, etc. For each specific library (LGB, XGB, Catboost, SKLearn, RGF, ...), an implementation of this metaclass was created.

That is, to work with LGB, we create a model

model_to_use = 'lgb'

model = KudsonLGB(task='classification')For XGB:

model_to_use = 'xgb'

metric_name= 'auc'

task='classification'

model = KudsonXGB(task=task, metric_name=metric_name)And all functions further operate with model.

For validation, several functions were created, which immediately considered both prediction and OOF for several seeders during cross-validation, as well as a separate function for ordinary validation via train_test_split. All validation functions operate on meta-model methods, which provides model-independent code and makes it easy for any other library to connect to the pipeline.

res = cv_make_oof( model, model_params, fit_params, dataset_params, XX_train[use_columns], yy_train, XX_Kaggle[use_columns], folds, scorer=scorer, metric_name=metric_name, fpreproc=_fpreproc, fpreproc_params=_fpreproc_params, model_seed=model_seed, silence=True ) score = res['score']For feature selection, nothing interesting, standard RFE, and my favorite shuffle permutation in all possible options.

Bayesian optimization is mainly used to search for hyperparameters, again in a unified form so that you can run a search for any model (via the cross-validation module). This unit lives in the same laptop as the simulation.

For the ensembles, several functions have been done, standardized for regression and classification problems based on the Ridge / Logreg, LGB, Neural network and my favorite scipy.optimize.

A small explanation is that each model from the pipeline gives two files as a result: sub_xxx and oof_xxx , which are the predictions for the test and OOF prediction for the train. Next, in the ensemble module from the specified directory, we load pairs of predictions from all models into two data frames - df_sub / df_oof . Well, after that we look at the correlations, select the best ones, then we build models of the 2nd level over df_oof and apply them to df_sub .

Sometimes, searching for the best subset of models, it is good to search for genetic algorithms (the author uses this library ), sometimes - the method from Caruana . In the simplest cases, standard regressions and scipy.optimize work fine.

Neural networks live in a separate module, the author uses keras in a functional style , yes, not as flexible as pytorch , but still enough. Again, written universal functions for training, invariant to the type of network.

This pipeline was once again tested in a recent competition from Home Credit , attentive and careful use of all units and modules brought 94th place and silver.

The author is generally ready to express a seditious thought that for tabular data and a normally made pipeline, the final submit in any competition should fly into the top 100 leaderboard. Naturally there are exceptions, but in general, this statement seems to be true.

About teamwork

It’s not all that simple, deciding Kaggle in a team or solo depends a lot on the person (and the team), but my advice for those who are just starting out is to try a solo. Why? I will try to explain my point of view:

- First, you will understand your strengths, see weaknesses and, in general, be able to assess your potential as a DS practice.

- Secondly, even working in a team (unless this is not an established team with division of roles), you will still have to wait for a ready-made complete solution - that is, you should already have working pipelines. (" Submission or not ") (C)

- And thirdly, optimally, when the level of players in a team is about the same (and quite high), then you can learn something really high-level and useful) In weak teams (there is nothing derogatory, I'm talking about the level of training and experience on Kaggle) imho it is very difficult to learn something, it is better to nibble the forum and the kernels. Yes, you can farm medals, but see Above about goals and a belt for maintaining pants)

Useful tips from the captain evidence and promised card rake :)

These tips reflect the author’s experience, are not a dogma, and can (and should) be verified by their own experiments.

Always start with the construction of valid validation - it will not be her, all other efforts will fly into the furnace. Take another look at the Mercedes leaderboard .

The author is really pleased that in this competition he built a stable cross-validation scheme (3x10 folds), which retained speed and brought the legitimate 42nd place)

If competent validation is built - always trust the results of your validation . If your models' fastness improves on validation, but deteriorates in public, it is wiser to trust validation. When analyzing, just count that piece of data on which the public leaderboard is considered to be another fold. You don't want to make your model a single fold?

If the model and the scheme allows - always do OOF predictions and save near the model. At the stage of the ensemble you never know what will fire.

Always keep near the result / OOF code to get them . No matter on githab, locally, anywhere. Twice I managed that in the ensemble the best model is the one that was made two weeks ago "out of the box", and for which the code was not preserved. Pain.

Hammer on the selection of the "right" sid for cross-validation , he himself sinned it first. Better choose any three and do 3xN cross-validation. The result will be more stable and easier.

Do not chase the number of models in the ensemble - better less, but more varied - more varied by models, by preprocessing, by datasets. In the worst case, in terms of parameters, for example, one deep tree with rigid regularization, one shallow tree.

To select a feature, use shuffle / boruta / RFE , remember that the feature importance in different tree-based models is the metrics in parrots on the sawdust bag.

Personal opinion of the author (or may not coincide with the reader's opinion) a Bayesian optimization > random search> hyperopt for selection hyperparameters. (">" == better)

It is better to handle the leading kernel kernel posted on public as follows:

- There is a time - we look at what's new there and embed ourselves

- Less time - we remake it on our validation, we do OOF - and we fasten it to the ensemble

- There is no time at all - stupidly blend with our best solution and we look soon.

How to choose two final submissions - by intuition, of course. But seriously, everyone usually practices the following approaches:

- Conservative submit (on sustainable models) / risky submit.

- With the best soon on OOF / public leaderboard

Remember - everything is a figure and the possibilities of its processing depend only on your imagination. Use classification instead of regression, treat sequences as a picture, etc.

And finally:

useful links

Are common

http://ods.ai/ - for those who want to join the best DS community :)

https://mlcourse.ai/ - course site ods.ai

https://www.Kaggle.com/general/68205 - post course on Kaggle

In general, I highly recommend in the same mode as described in the article to view the mltrainings video cycle - many interesting approaches and techniques.

Video

- very good video about how to become a grandmaster :) by Pavel Pleskov (ppleskov)

- video about hacking, non-standard approach and mean target encoding on the example of the BNP Paribas competition from Stanislav Semenov (stasg7)

- Another video with Stanislav "What does Kaggle teach"

Courses

In more detail the methods and approaches to solving problems on Kaggle can be found in the second course of specialization , " How to Win a Data Science Competition: Learn from Top Kagglers"

Extracurricular reading:

- Laurae ++, XGBoost / LightGBP parameters

- FastText - embedding for text from Facebook

- WordBatch / FTRL / FM-FTRL - a set of libraries from @anttip

- Another FTRL implementation

- Bayesian Optimization - a library for the selection of hyper parameters

- Regularized Greedy Forest (RGF) library - another tree method

- A Kaggler's Guide to Model Stacking in Practice

- ELI5 is an excellent library for visualizing model weights from Konstantin Lopukhin (kostia)

- Feature selection: target permutations - and follow the links inside

- Feature Importance Measures Measures for Tree Models

- Feature selection with null importance

- Briefly about autoencoders

- Slideshare Kaggle Presentation

- And another one

- And in general there are a lot of interesting things.

- Winning solutions of kaggle competitions

- Data Science Glossary on Kaggle

Conclusion

The theme Data Science in general and competitive Data Science in particular are as inexhaustible as atom (C). In this article, the author only slightly opened the topic of pumping practical skills using competitive platforms. If it became interesting - connect, look around, save up experience - and write your articles. The more good content, the better for all of us!

Anticipating questions — no, the pipelines and author’s libraries have not yet been freely available.

Many thanks to the colleagues from ods.ai: Vladimir Iglovikov (ternaus) , Yuri Kashnitsky (yorko) , Valery Babushkin ( venheads) , Alexey Pronkin (pronkin_alexey) , Dmitry Petrov (dmitry_petrov) , Artur Kuzin (i01z3) , and the production graphs (i) . article before publication, for edits and reviews.

Special thanks to Nikita Zavgorodnoye (njz) for the final proofreading.

Thank you for your attention, I hope this article will be useful to someone.

My nickname in Kaggle / ods.ai : kruegger