It's just about minimal graph-based ideal hashing

Imagine that we are faced with the classic task of obtaining data on some key. Moreover, the amount of data and their keys is known in advance.

How to solve a similar problem?

If the number of key-value pairs is obviously small, then it makes sense to just hardcode. But if there are many millions of such values?

You can simply browse through the list until you find the desired key value. But then the complexity will be linear O (N) , which, in this case, should upset any engineer. And what is the complexity of the algorithm then required? Which does not depend on the amount of data and is performed in a fixed time, i.e., with constant complexity O (1) .

How can I get data for a fixed time?

If you arrange the data in memory sequentially, then knowing the offset where the necessary information is located, you can get access that will not depend on the initial amount of data.

In other words, we need to find a way to convert the key to the offset where the data will be. The offset is just an integer: [0, n - 1], where n is the number of stored values.

Hash functions come to the rescue, whose task is precisely to convert the keys to integers. Such numbers can be interpreted as offsets at which data is stored.

But, unfortunately, hash functions give rise to collisions, that is, two different keys can give the same offset.

Based on the task, we have a fixed and predetermined amount of data, which makes it possible to avoid such problems.

An ideal hash function is a hash function that converts a previously known static set of keys into a range of integers [0, m - 1] without collisions, that is, one key corresponds to only one unique value. And if the number of resulting values is the same as the number of incoming keys, such a function is called the Minimal Perfect Hash Function.

Let's imagine and imagine that each key corresponds to one unique edge, and, accordingly, two vertices.

Then the key can be represented in the form of such a primitive graph:

Figure 1. The graph where the edge corresponds to the key and is described through two vertices.

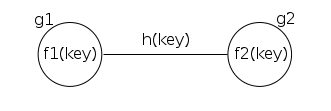

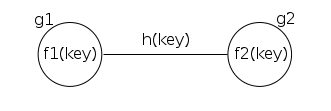

Since the key is represented in the form of an edge, and the edge always connects at least (because there are still hypergraphs) two vertices, then the desired function may look something like this:

h (key) = g (node1, node2)

where h (key) is the minimum ideal hash function whose value describes the edge, key is the key, g () is some unknown function depending on the vertices of the graph node1 and node2. Since they must depend on the key, it turns out

h (key) = g (f1 (key), f2 (key))

In fact, for f1 and f2, you can choose any hash functions that will be used for one key.

It remains to determine the unknown function g () , which describes the relationship between the two nodes f1 (key), f2 (key) and the edge.

For simplicity, we define the value of an edge as the sum of the values of the nodes connected by this edge.

Since the edge corresponds to only one key, this is the desired value, the result of the minimum ideal function.

h (key) = (g1 (f1 (key)) + g2 (f2 (key))) mod m

where h (key) is the edge value, f1 (key) is the first node of the graph, g1 () is the value of this node, f2 (key) is the second node, g2 () is the value of the second node, m is the number of edges.

Even simpler, the

value of the minimum ideal function = (value of the node 1 + values of the node 2) mod the number of edges.

Figure 2. Representation of one key in a graph.

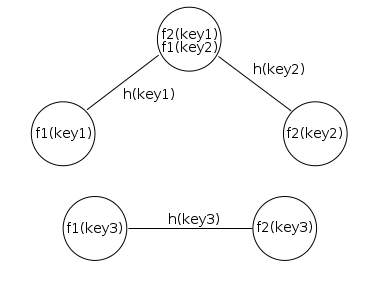

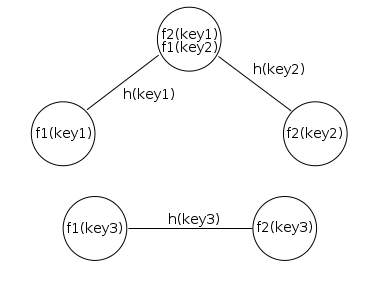

In other words, hash functions are used to determine the node numbers, and there are often collisions, so a single node can contain many edges.

Fig. 3. Collisions in hash functions generate several edges on one vertex. Also, the vertices of one key may not connect with the vertices of another key.

Having determined how the nodes of the graph are interconnected with their edges, we first determine the values of the edges h (key) (this is just an incremental index), which will be deliberately unique, and then select the values of the nodes.

For example, the value of the next node can be calculated as follows:

g2 (f2 (key)) = h (key) - g1 (f1 (key))

or the

value of node 2 = the value of the edge - the value of node 1 It

remains only to go through the graph, take 0 as the initial value of the first node of the subgraph and count all the others.

Unfortunately, this approach does not work if the graph is looped. Since there is no way to guarantee in advance that the graph is not looped, if a loop is detected, you will have to redraw the graph with other hash functions.

Looping is best verified by removing vertices with only one edge. If the vertices remain, then the graph is looped.

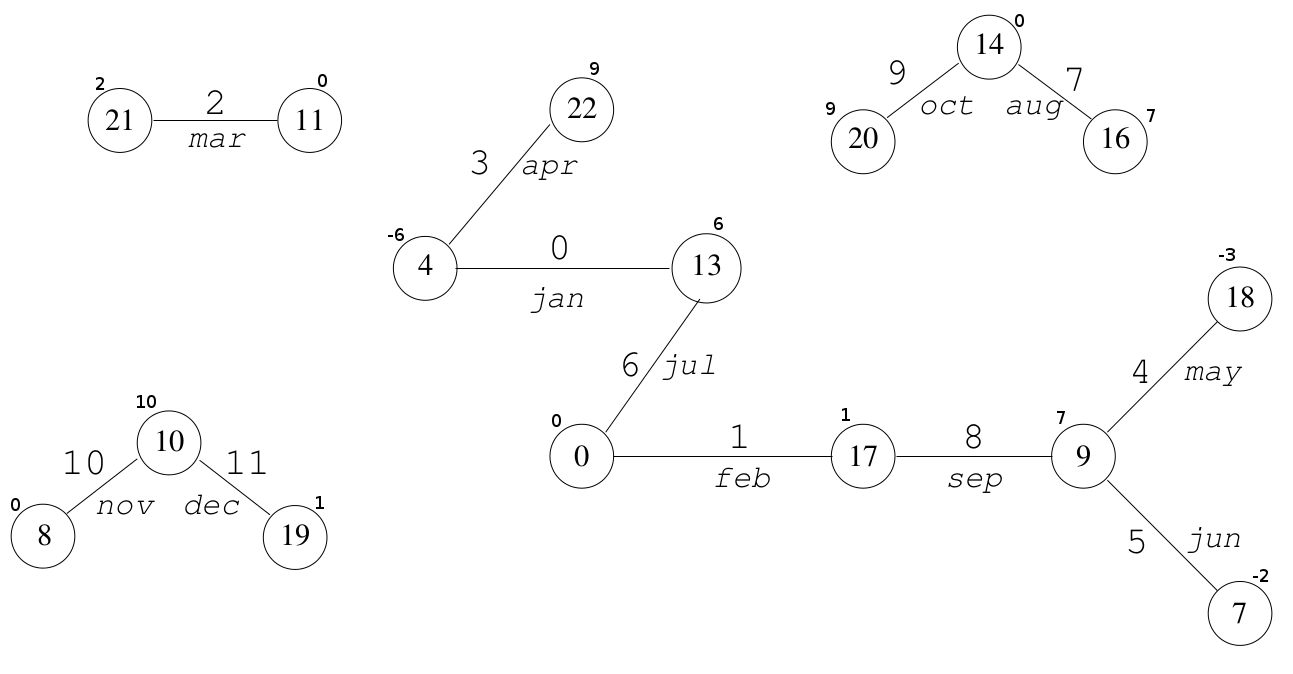

Now it’s time to describe the algorithm itself using an example.

Task: There are many keys consisting of the names of 12 months. It is necessary to create a minimum ideal hash function, where each month is translated into only one unique integer in the range [0, 11].

1. We go through all the keys and add vertices and edges. To do this, select two hash functions f1 and f2 .

For example:

For the key jan we get the number of the first node f1 (jan) : = 4 and the number of the second node f 2 (jan) : = 13

For the key feb , f1 (feb) : = 0, f 2 (feb) : = 17

and so Further.

2. Check if the graph is looped, if so, then repeat step # 1 again and change the hash of the function f1 / f2 .

The probability of a cycle appearing in a graph depends on the number of possible vertices. Therefore, we introduce the concept as a graph size factor. The number of nodes is defined as:

m = c * n

where m is the number of nodes in the graph, c is the constant size factor, n is the number of keys.

3. In the case of successful creation of a loop without graphs, you need to go through all the nodes and calculate their value using the formula

g2 (f2 (key)) = h (key) - g1 (f1 (key))

or the

value of the child node = edge index - node value ancestor

For example, we assign the node 0 to the value 0 and go along its edge with the index 6:

g2 (13) = 6 - 0 = 6, the node with the number 13 gets the value 6, etc.

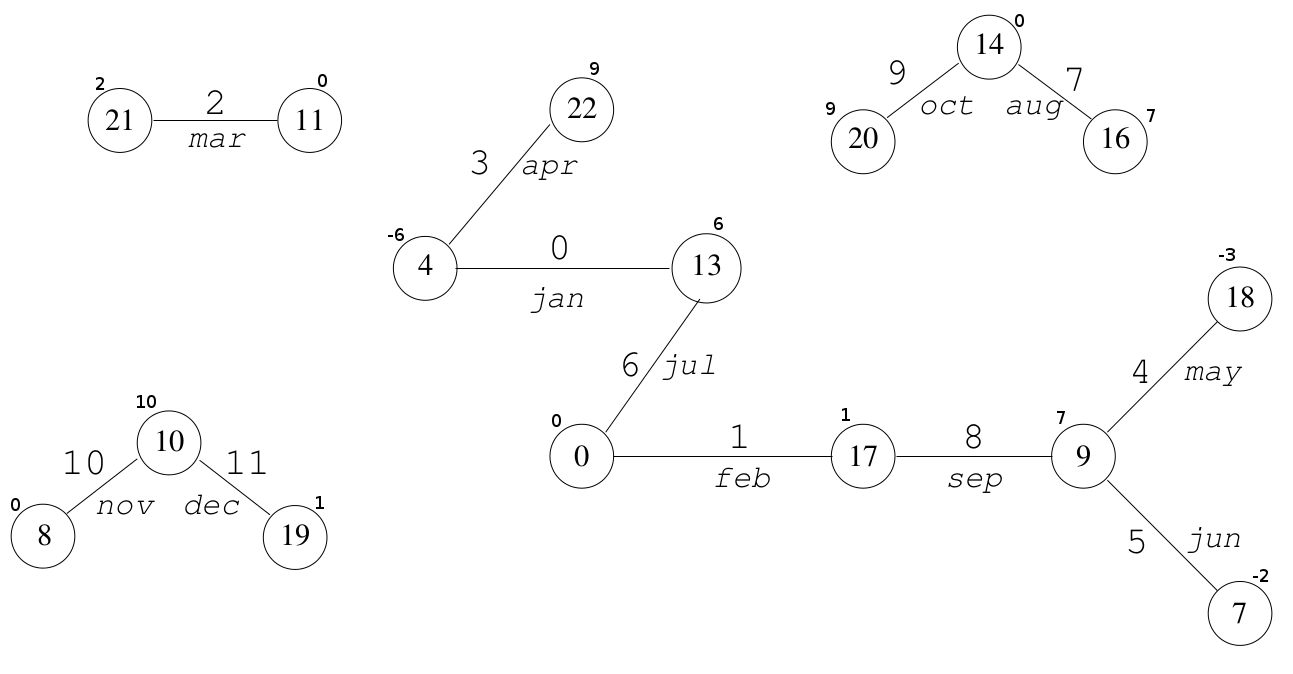

As a result, we have such a graph.

Figure 4. The resulting graph, where the names of the months are the keys and random hash functions are used to obtain vertex numbers. The numbers near the nodes are the value of the node.

Let's say we need to get a unique index for the sep key .

We use the same hash functions that were used to create the graph:

f1 (sep): = 17

f2 (sep): = 9

Having received the numbers of the vertices, add their values:

h (sep) = (g (17) + g (9 )) mod 12 = (1 + 7) mod 12 = 8

This algorithm is called CHM and is implemented in the CMPH library .

And as you can see, creating a hash table has linear complexity O (N) , and index search by key is constant O (1) .

Do you remember the bearded puzzle from Google?

How to copy an unidirectional list in linear time if the list node looks like this?

And the solution was supposed to clone the nodes so that two copies of the node were in a row, and then split this list with copies of the nodes into two lists, each with a copy. As a result, the complexity is linear.

Now imagine at your location the minimum ideal hash function that can store its index in a list by pointer and save a pointer by index. And it will be possible to solve the problem this way:

1. We go through the list, we get an array of node pointers and their index.

2. Create minimal ideal hash functions for node pointers.

3. Create a new list, get an array of node indices and their pointers.

4. We create other ideal functions for the indices of the second list in order to obtain the address by index.

5. We go through the new list, and we get the address of the old node, we get the index from it, and from the second hash function we get the address already in the second list by index.

As a result, the list will be copied in linear time.

Although, of course, the idea is to duplicate the nodes and then divide them into two lists, more beautiful and faster.

Thanks for the effort.

1. ZJ Czech, G. Havas, and BS Majewski. An optimal algorithm for generating minimal perfect hash functions ., Information Processing Letters, 43 (5): 257-264, 1992.

2. Minimal Perfect Hash Functions - Introduction .

3. mphf - My implementation of CHM in C ++.

How to solve a similar problem?

If the number of key-value pairs is obviously small, then it makes sense to just hardcode. But if there are many millions of such values?

You can simply browse through the list until you find the desired key value. But then the complexity will be linear O (N) , which, in this case, should upset any engineer. And what is the complexity of the algorithm then required? Which does not depend on the amount of data and is performed in a fixed time, i.e., with constant complexity O (1) .

How can I get data for a fixed time?

Hashing

If you arrange the data in memory sequentially, then knowing the offset where the necessary information is located, you can get access that will not depend on the initial amount of data.

In other words, we need to find a way to convert the key to the offset where the data will be. The offset is just an integer: [0, n - 1], where n is the number of stored values.

Hash functions come to the rescue, whose task is precisely to convert the keys to integers. Such numbers can be interpreted as offsets at which data is stored.

But, unfortunately, hash functions give rise to collisions, that is, two different keys can give the same offset.

Based on the task, we have a fixed and predetermined amount of data, which makes it possible to avoid such problems.

Perfect hashing

An ideal hash function is a hash function that converts a previously known static set of keys into a range of integers [0, m - 1] without collisions, that is, one key corresponds to only one unique value. And if the number of resulting values is the same as the number of incoming keys, such a function is called the Minimal Perfect Hash Function.

Consider an example of a minimal ideal hash function based on random graphs.

Let's imagine and imagine that each key corresponds to one unique edge, and, accordingly, two vertices.

Then the key can be represented in the form of such a primitive graph:

Figure 1. The graph where the edge corresponds to the key and is described through two vertices.

Since the key is represented in the form of an edge, and the edge always connects at least (because there are still hypergraphs) two vertices, then the desired function may look something like this:

h (key) = g (node1, node2)

where h (key) is the minimum ideal hash function whose value describes the edge, key is the key, g () is some unknown function depending on the vertices of the graph node1 and node2. Since they must depend on the key, it turns out

h (key) = g (f1 (key), f2 (key))

In fact, for f1 and f2, you can choose any hash functions that will be used for one key.

It remains to determine the unknown function g () , which describes the relationship between the two nodes f1 (key), f2 (key) and the edge.

This is where the tricks begin

For simplicity, we define the value of an edge as the sum of the values of the nodes connected by this edge.

Since the edge corresponds to only one key, this is the desired value, the result of the minimum ideal function.

h (key) = (g1 (f1 (key)) + g2 (f2 (key))) mod m

where h (key) is the edge value, f1 (key) is the first node of the graph, g1 () is the value of this node, f2 (key) is the second node, g2 () is the value of the second node, m is the number of edges.

Even simpler, the

value of the minimum ideal function = (value of the node 1 + values of the node 2) mod the number of edges.

Figure 2. Representation of one key in a graph.

In other words, hash functions are used to determine the node numbers, and there are often collisions, so a single node can contain many edges.

Fig. 3. Collisions in hash functions generate several edges on one vertex. Also, the vertices of one key may not connect with the vertices of another key.

That's where the salt is

Having determined how the nodes of the graph are interconnected with their edges, we first determine the values of the edges h (key) (this is just an incremental index), which will be deliberately unique, and then select the values of the nodes.

For example, the value of the next node can be calculated as follows:

g2 (f2 (key)) = h (key) - g1 (f1 (key))

or the

value of node 2 = the value of the edge - the value of node 1 It

remains only to go through the graph, take 0 as the initial value of the first node of the subgraph and count all the others.

Unfortunately, this approach does not work if the graph is looped. Since there is no way to guarantee in advance that the graph is not looped, if a loop is detected, you will have to redraw the graph with other hash functions.

Looping is best verified by removing vertices with only one edge. If the vertices remain, then the graph is looped.

On a simple example

Now it’s time to describe the algorithm itself using an example.

Task: There are many keys consisting of the names of 12 months. It is necessary to create a minimum ideal hash function, where each month is translated into only one unique integer in the range [0, 11].

1. We go through all the keys and add vertices and edges. To do this, select two hash functions f1 and f2 .

For example:

For the key jan we get the number of the first node f1 (jan) : = 4 and the number of the second node f 2 (jan) : = 13

For the key feb , f1 (feb) : = 0, f 2 (feb) : = 17

and so Further.

2. Check if the graph is looped, if so, then repeat step # 1 again and change the hash of the function f1 / f2 .

The probability of a cycle appearing in a graph depends on the number of possible vertices. Therefore, we introduce the concept as a graph size factor. The number of nodes is defined as:

m = c * n

where m is the number of nodes in the graph, c is the constant size factor, n is the number of keys.

3. In the case of successful creation of a loop without graphs, you need to go through all the nodes and calculate their value using the formula

g2 (f2 (key)) = h (key) - g1 (f1 (key))

or the

value of the child node = edge index - node value ancestor

For example, we assign the node 0 to the value 0 and go along its edge with the index 6:

g2 (13) = 6 - 0 = 6, the node with the number 13 gets the value 6, etc.

As a result, we have such a graph.

Figure 4. The resulting graph, where the names of the months are the keys and random hash functions are used to obtain vertex numbers. The numbers near the nodes are the value of the node.

Lucap

Let's say we need to get a unique index for the sep key .

We use the same hash functions that were used to create the graph:

f1 (sep): = 17

f2 (sep): = 9

Having received the numbers of the vertices, add their values:

h (sep) = (g (17) + g (9 )) mod 12 = (1 + 7) mod 12 = 8

This algorithm is called CHM and is implemented in the CMPH library .

And as you can see, creating a hash table has linear complexity O (N) , and index search by key is constant O (1) .

Afterword

Do you remember the bearded puzzle from Google?

How to copy an unidirectional list in linear time if the list node looks like this?

struct list_node

{

list_node * next;

list_node * random;

};

And the solution was supposed to clone the nodes so that two copies of the node were in a row, and then split this list with copies of the nodes into two lists, each with a copy. As a result, the complexity is linear.

Now imagine at your location the minimum ideal hash function that can store its index in a list by pointer and save a pointer by index. And it will be possible to solve the problem this way:

1. We go through the list, we get an array of node pointers and their index.

2. Create minimal ideal hash functions for node pointers.

3. Create a new list, get an array of node indices and their pointers.

4. We create other ideal functions for the indices of the second list in order to obtain the address by index.

5. We go through the new list, and we get the address of the old node, we get the index from it, and from the second hash function we get the address already in the second list by index.

As a result, the list will be copied in linear time.

Although, of course, the idea is to duplicate the nodes and then divide them into two lists, more beautiful and faster.

Thanks for the effort.

Read

1. ZJ Czech, G. Havas, and BS Majewski. An optimal algorithm for generating minimal perfect hash functions ., Information Processing Letters, 43 (5): 257-264, 1992.

2. Minimal Perfect Hash Functions - Introduction .

3. mphf - My implementation of CHM in C ++.