How to create instances in Google Cloud, configure access, and attach a Google Cloud Storage bucket: a how-to

Greetings to everyone reading!

I’m placing this know-how in hot pursuit with the aim, firstly, not to forget how to do it, and secondly, with the goal of helping someone create instances in the Google cloud.

Tasks to be solved:

Question: “Why cloud.google?” Leave on the conscience of the customer. The moped was not mine, I just figured out a new VPS control system for myself. And the following is proposed there (I state thesis):

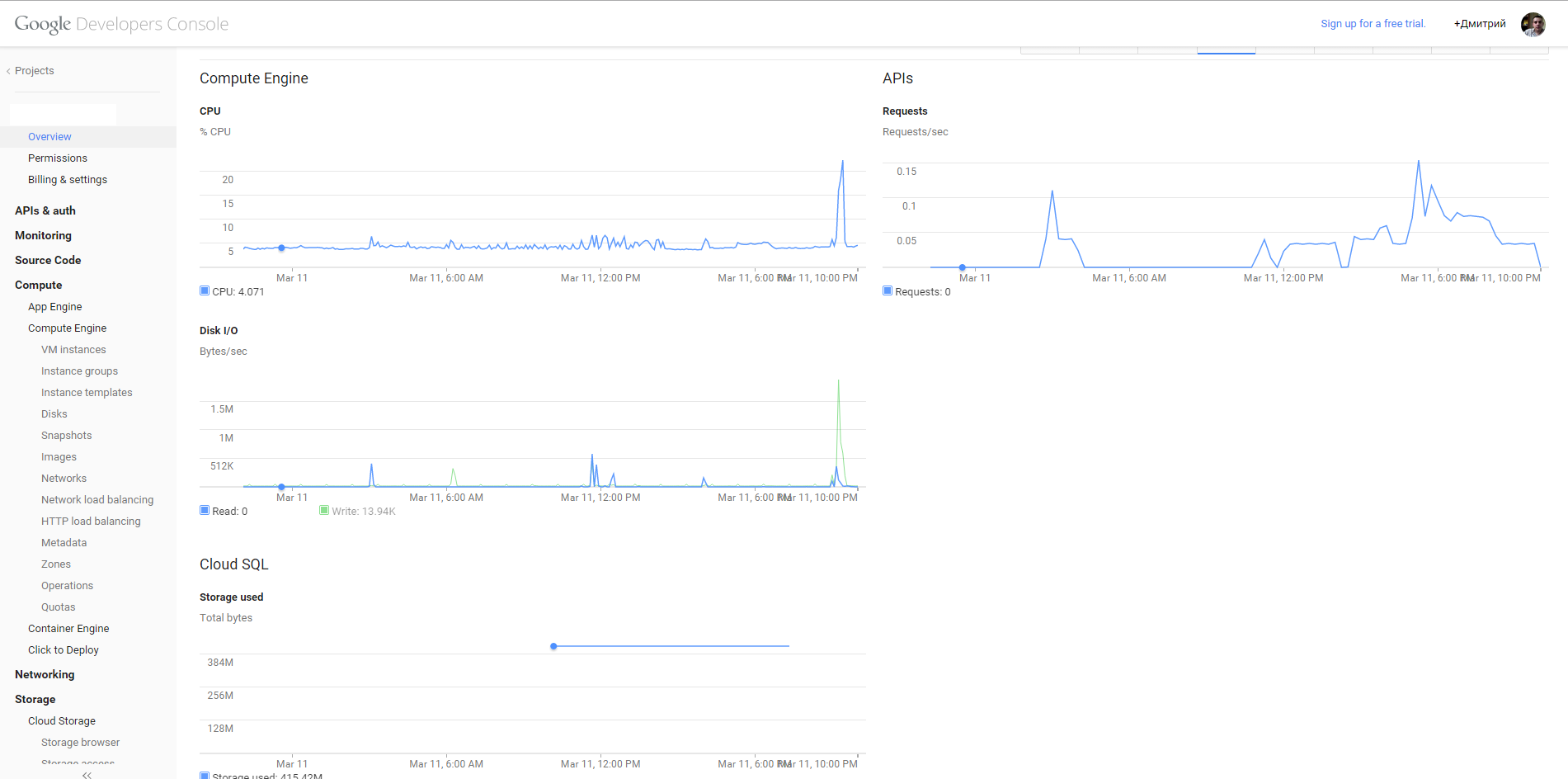

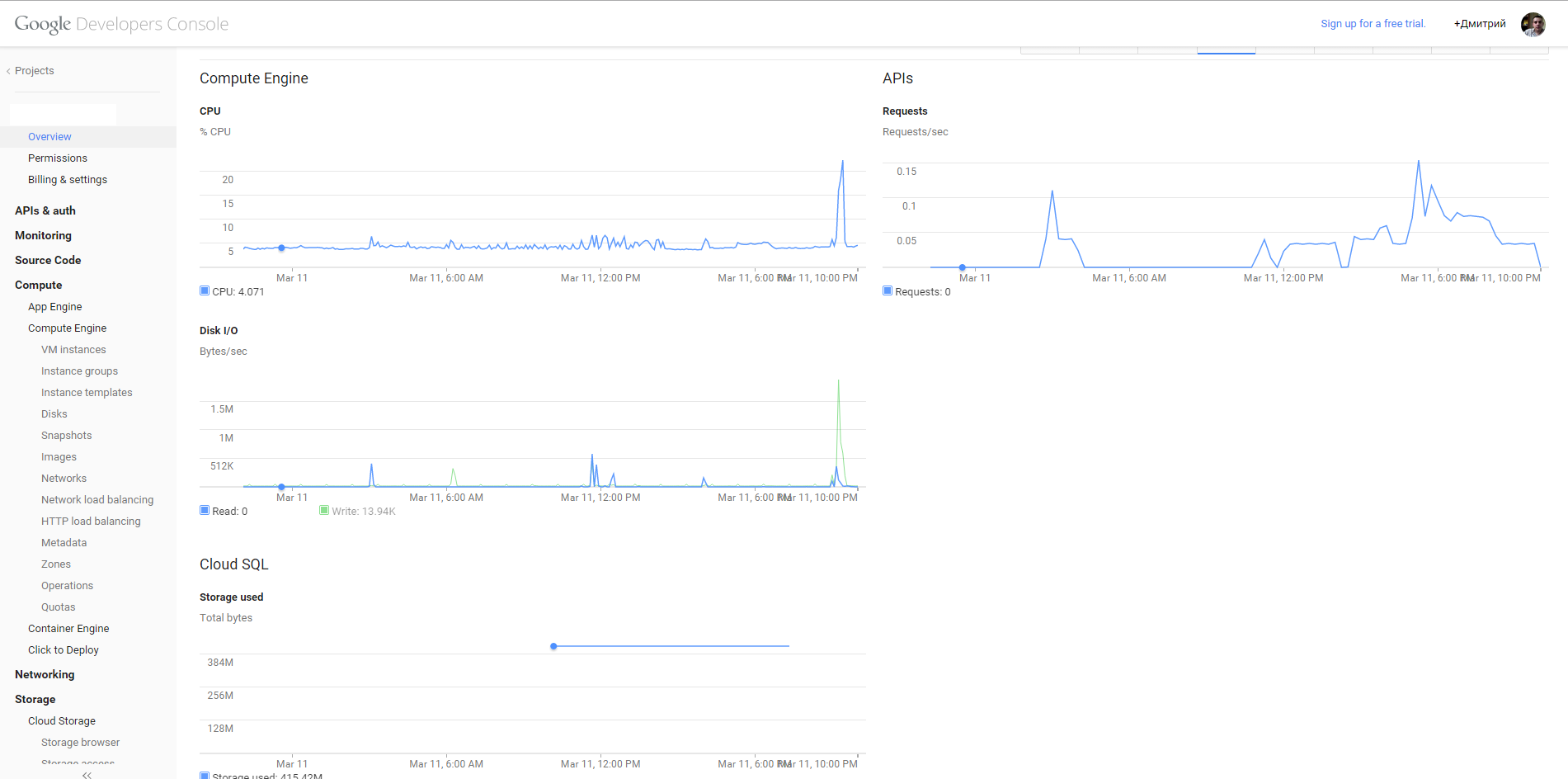

Used the Compute Engine, Storage, Networking, Storage section.

Here hautushechka end who opened at least one spoiler - well done.

A big request. Based on previous experience writing articles on Habrahabre, I ask you to justify the minus. Otherwise, I risk never understanding what my shortcomings are: in the style of presentation, in the specifics, in the perception and presentation of information by me. For each comment I will send positive rays.

UPD <03/12/2015>: It turned out that sending through port 25 was closed in the Google Cloud instance (and connect to another server on port 25). Absolutely. Instead, it is suggested that you use a relay mail server sending on port 587.

I’m placing this know-how in hot pursuit with the aim, firstly, not to forget how to do it, and secondly, with the goal of helping someone create instances in the Google cloud.

Tasks to be solved:

- three instances in different regions of the Europe zone

- shared disk for two instances

- http load balancer

- MySQL cloud base

- from the third instance upload files to Google Bucket

Question: “Why cloud.google?” Leave on the conscience of the customer. The moped was not mine, I just figured out a new VPS control system for myself. And the following is proposed there (I state thesis):

- App Engine - Service for applications and code collaboration. Who cares about the documentation?

- Compute Engine - actually VPS, disks for them, firewall (s), balancers (more about them later, still at a loss why there are two of them), snapshot and quota management

- Networking - Cloud DNS from Google and VPN

- Storage - cloud storage, data storage and cloud SQL (MySQL)

- BigData - something super simple and completely obscure to me

Used the Compute Engine, Storage, Networking, Storage section.

1. Creating an instance

I start deploying any virtual machine by creating a disk for it.

The screenshot (see below) shows the zones where a disk can be created. We are interested in Europe. Note that zone "a" in Europe is going to be closed. An inquisitive reader can look up where zones b, c, and d are physically located; I am not concerned about that.

My account allows creating a "Standard Persistent Disk" of up to 240 GB. A primitive read/write speed test with dd (the full command and its output appear later in this section) showed about 38 MB/s for writing and 81 to 82 MB/s for reading.

The source type for the disk (Source Type) is Image, Snapshot, or Blank: respectively, a pre-installed OS image, a snapshot of an existing system, or an empty disk.

I was personally interested in Debian; besides it, Google offers CentOS, CoreOS, openSUSE, Ubuntu, RHEL, SLES, and Windows Server 2008.

I created a 10 GB disk with Debian Wheezy in Europe zones "B" and "C", then deleted the one in zone "C". The goal is to deploy a production server and then mirror it, so the disk in zone "C" will instead be created from a snapshot of the disk in zone "B".

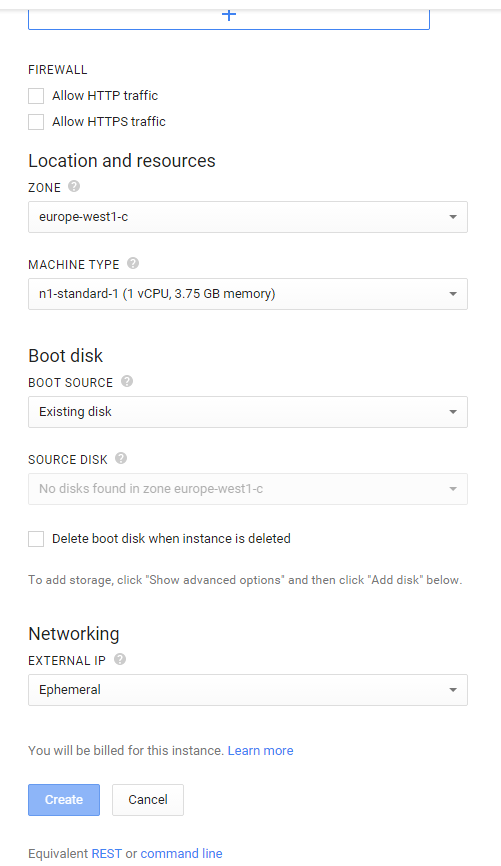

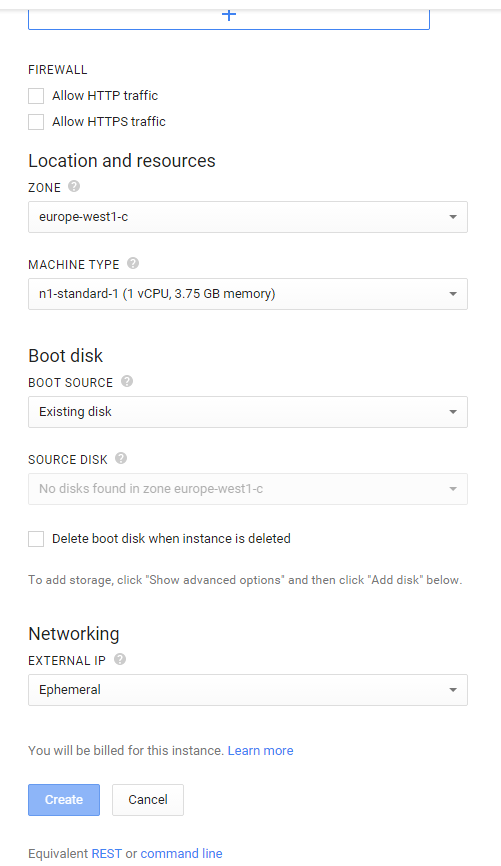
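The same disk can also be created from the command line. Below is a minimal sketch, assuming the gcloud CLI is installed and authenticated. The disk name, the zone europe-west1-b, and the debian-12 image family are placeholders of mine, not values from this article (the Wheezy image used above is not assumed to be available any more). The function only prints the command so that it can be reviewed first.

```shell
# Dry-run sketch: prints the gcloud command instead of executing it.
# Arguments: disk name, zone, size in GB (all hypothetical examples).
disk_create_cmd() (
  name=$1; zone=$2; size_gb=$3
  printf 'gcloud compute disks create %s --zone=%s --size=%sGB --type=pd-standard --image-family=%s --image-project=%s\n' \
    "$name" "$zone" "$size_gb" debian-12 debian-cloud
)

disk_create_cmd web-disk-b europe-west1-b 10
```

Piping the output to sh runs the command for real once the names look right.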

Now create the instance itself:

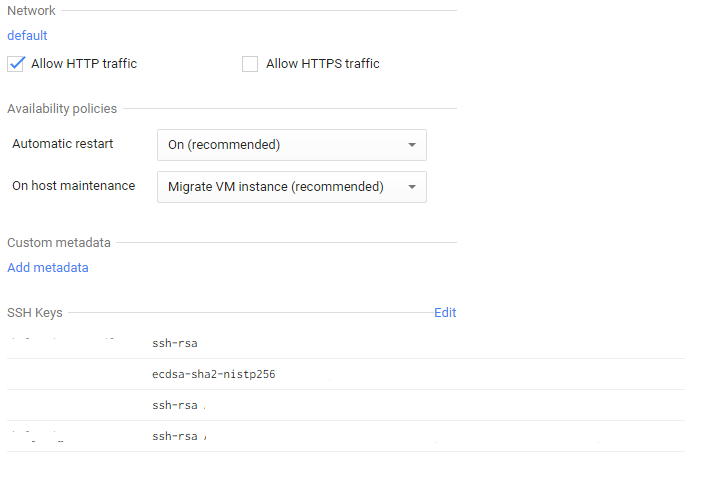

The screenshot shows the list of VPS options available to me; the open list hides Google's default firewall options: HTTP Traffic and HTTPS Traffic.

Tick both options and move on: select Existing Disk and specify which disk to attach to the machine. I should add that when I attached a second disk, it happened "hot": /dev/sdb appeared, and I partitioned and mounted it without rebooting the instance.

By the way, a disk can be deleted automatically together with its instance: select the corresponding option below the disk type selection.

At creation time, the Networking section offers only the choice of IP address: internal network only, or an external ("white") IP.

By the way, ISPanel still does not understand that Amazon, Google, and other services do not configure the external IP address in the instance's network settings. Installing and licensing the panel therefore means either waiting for technical support or creating a virtual interface with the address ISPanel expects. Inconvenient!

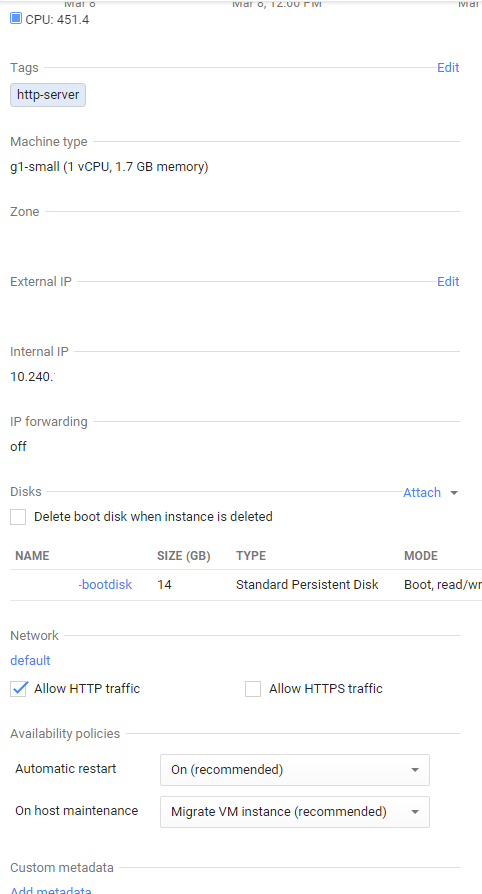

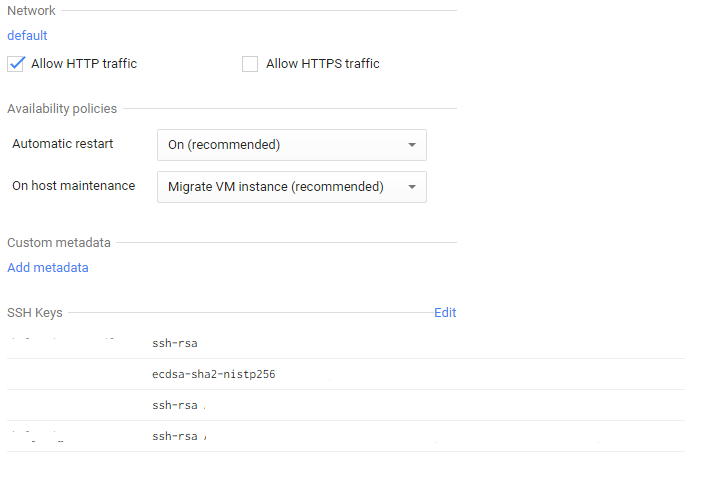

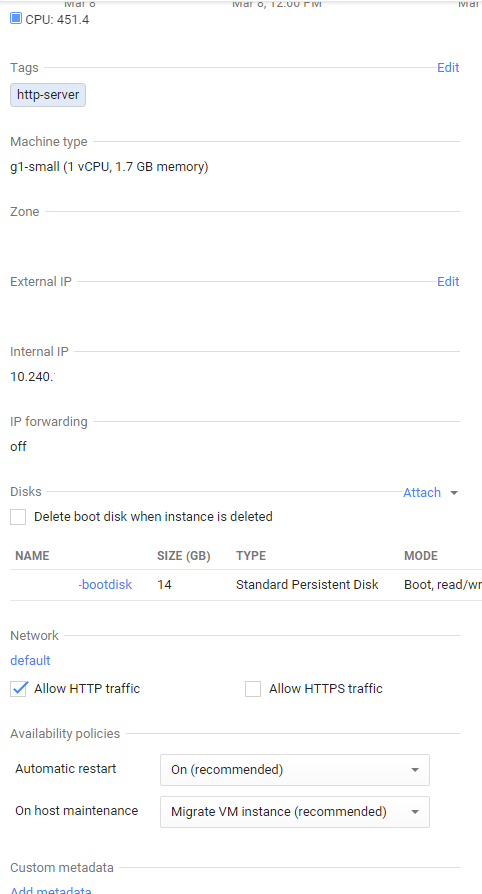
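For reference, the form above corresponds roughly to one gcloud command. This is a sketch under assumptions: the instance and disk names and the e2-small machine type are hypothetical, and the http-server and https-server tags are, as far as I know, what the HTTP Traffic and HTTPS Traffic checkboxes apply. The auto-delete=no key mirrors the "delete disk with instance" option left unticked. Only the command text is printed.

```shell
# Dry-run sketch: prints the command that would create an instance
# booting from an existing disk. All names are hypothetical.
instance_create_cmd() (
  name=$1; zone=$2; disk=$3
  printf 'gcloud compute instances create %s --zone=%s --machine-type=%s --disk=name=%s,boot=yes,auto-delete=no --tags=http-server,https-server\n' \
    "$name" "$zone" e2-small "$disk"
)

instance_create_cmd web-b europe-west1-b web-disk-b
```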

When an instance is created, you can go into its settings and see:

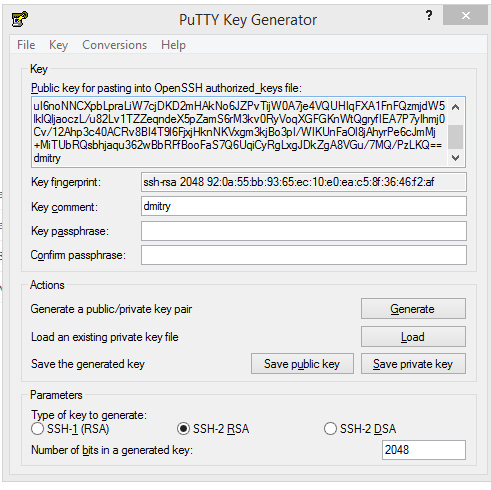

And you will be puzzled by the question "How do I get SSH access?" I spent about 30 minutes on the subject, and this is what I found:

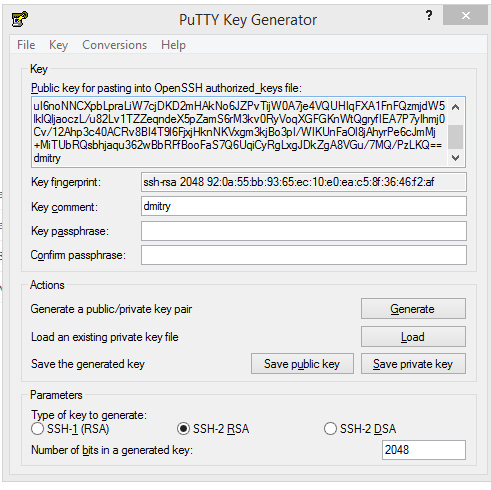

In the SSH keys block, enter a key generated with, for example, PuTTYgen:

a) Run PuTTYgen

b) Press Generate

c) Move the mouse around to produce randomness

d) Get the key

e) Change the Key comment to the username

f) Save the public key

g) Save the private key; do not password-protect the file

h) Copy the key from the window and paste it into the SSH Keys field as a line of the form ssh-rsa ABRAKADABRA dmitry

If the instance has an external ("white") IP, you can log in to it with that username (in PuTTY the key file is set under Connection -> SSH -> Auth). You can also open a console through Google's web interface (the SSH button at the top). And you can probably configure a VPN from the corresponding section to reach an instance without an external IP; I have not tried it.
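The key line from step h) is easy to get wrong, so here is a small sketch that checks its shape before pasting: three space-separated fields, the ssh-rsa type, and a comment equal to the login name. The function name is my own invention. With OpenSSH instead of PuTTYgen, the comment would be set with the -C option of ssh-keygen.

```shell
# check_key_line USER "KEY LINE": succeeds if the line looks like
# "ssh-rsa <key> USER". Runs in a subshell so nothing leaks out.
check_key_line() (
  user=$1
  set -f        # no filename expansion while splitting
  set -- $2     # split the key line on whitespace
  [ "$#" -eq 3 ] && [ "$1" = "ssh-rsa" ] && [ "$3" = "$user" ]
)

if check_key_line dmitry "ssh-rsa ABRAKADABRA dmitry"; then
  echo "key line looks fine"
fi
```

A line that still carries PuTTYgen's default comment (something like rsa-key-20150312) fails the check, which is exactly the mistake step e) is meant to prevent.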

For reference, here is the primitive read/write speed test on the Standard Persistent Disk together with its output: a write, a read, and a second read after dropping the page cache.

```
sync; dd if=/dev/zero of=/tempfile bs=1M count=4096; sync && dd if=/tempfile of=/dev/null bs=1M count=4096 && /sbin/sysctl -w vm.drop_caches=3 && dd if=/tempfile of=/dev/null bs=1M count=4096
4096+0 records in
4096+0 records out
4294967296 bytes (4.3 GB) copied, 112.806 s, 38.1 MB/s
4096+0 records in
4096+0 records out
4294967296 bytes (4.3 GB) copied, 52.036 s, 82.5 MB/s
vm.drop_caches = 3
4096+0 records in
4096+0 records out
4294967296 bytes (4.3 GB) copied, 52.7394 s, 81.4 MB/s
```
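The MB/s figures that dd prints are simply bytes divided by seconds, in decimal megabytes. A tiny sketch that recomputes them from the numbers in the dd output (the function name is mine):

```shell
# throughput BYTES SECONDS: prints MB/s using decimal megabytes, as dd does.
throughput() {
  awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}

throughput 4294967296 112.806   # write:                 38.1
throughput 4294967296 52.036    # read:                  82.5
throughput 4294967296 52.7394   # read, caches dropped:  81.4
```

The two read results are nearly identical, which suggests the page cache played little role for a 4 GB file on this instance.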

2. Instance cloning

It turned out to be easier than ever:

- Compute Engine -> New Snapshot

- name the snapshot and select the source disk

- Create

Then, under Disks, create a new disk from the snapshot in the desired zone and attach it to an instance. That completes the cloning. It took 10 minutes.
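The cloning steps above can be sketched as three gcloud commands. This is an illustration under assumptions, not the exact procedure used here: every name and zone is hypothetical, and the function prints the commands rather than running them.

```shell
# Dry-run sketch: snapshot a disk, create a disk from the snapshot in
# another zone, and boot a new instance from it.
clone_cmds() (
  src_disk=$1; src_zone=$2; snap=$3; new_name=$4; new_zone=$5
  printf 'gcloud compute disks snapshot %s --zone=%s --snapshot-names=%s\n' "$src_disk" "$src_zone" "$snap"
  printf 'gcloud compute disks create %s-disk --zone=%s --source-snapshot=%s\n' "$new_name" "$new_zone" "$snap"
  printf 'gcloud compute instances create %s --zone=%s --disk=name=%s-disk,boot=yes\n' "$new_name" "$new_zone" "$new_name"
)

clone_cmds web-disk-b europe-west1-b web-snap web-c europe-west1-c
```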

3. Firewall

Compute Engine -> Networks -> default (network) -> Firewall rules -> New

When setting up the firewall, let common sense be your guide. The syntax is simple.

I must say that Google Chrome behaved strangely: it spun for about 30 minutes and never created a single rule. Firefox saved the day, although even there creating a firewall rule was slow and took about 3 minutes.
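If the browser misbehaves, a rule can also be created with gcloud. A minimal sketch, assuming the default network; the rule name, the ports, and the target tag are examples of mine. The command is only printed.

```shell
# Dry-run sketch: prints a firewall rule that allows the given
# protocol:port list from anywhere to instances with a given tag.
firewall_rule_cmd() (
  name=$1; allow=$2; tag=$3
  printf 'gcloud compute firewall-rules create %s --network=default --allow=%s --source-ranges=0.0.0.0/0 --target-tags=%s\n' \
    "$name" "$allow" "$tag"
)

firewall_rule_cmd allow-web tcp:80,tcp:443 http-server
```

Narrowing --source-ranges to known addresses is the "common sense" part for anything other than public web ports.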

4. Load balancing

I did not understand how Network Load Balancing differs from HTTP Load Balancing. In both, the default health check is HTTP on port 80. Create a new balancer, select the instances to balance across, and assign an IP.

I have nothing more to add; it is all dead simple.

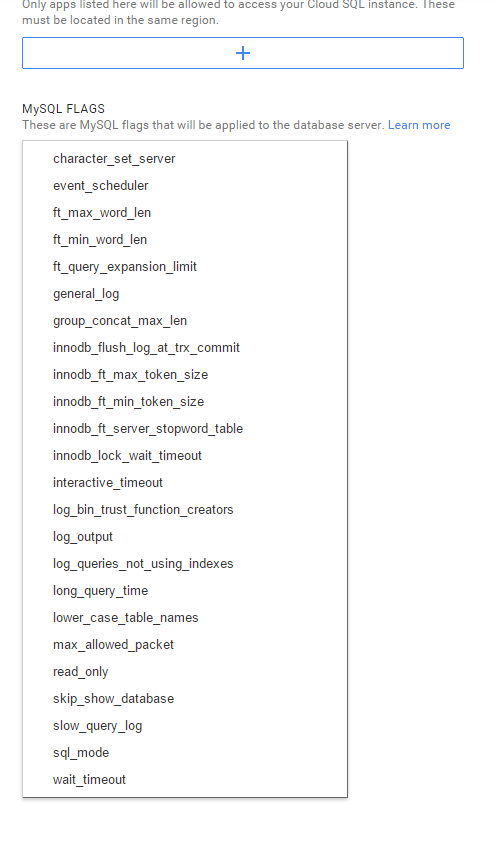

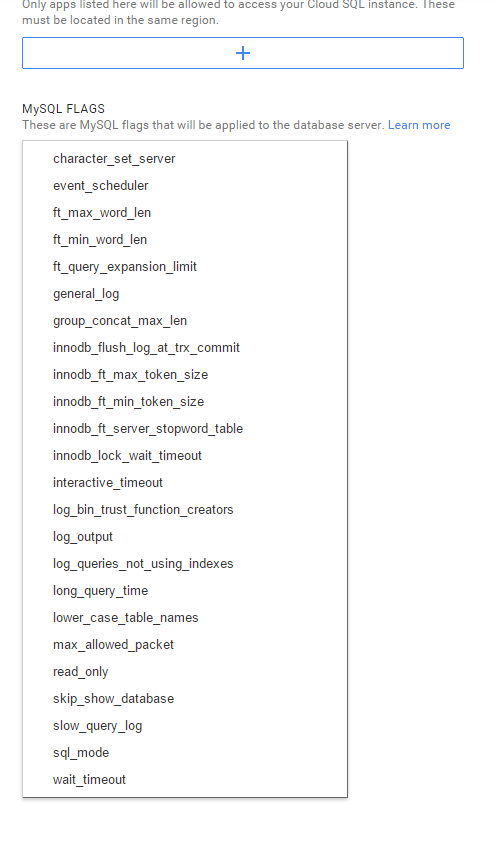
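For comparison: a network load balancer forwards TCP/UDP traffic within one region and is assembled from a health check, a target pool, and a forwarding rule, whereas the HTTP balancer works at the level of HTTP requests. Here is a rough sketch of the network variant with gcloud. All resource names, the region, and the zone are hypothetical, and the commands are only printed; instances from another zone would need a second add-instances call.

```shell
# Dry-run sketch: prints the four commands for a port-80 network balancer.
# Arguments: region, pool name, zone of the instances, instance list.
network_lb_cmds() (
  region=$1; pool=$2; zone=$3; instances=$4
  printf 'gcloud compute http-health-checks create %s-check --port=80\n' "$pool"
  printf 'gcloud compute target-pools create %s --region=%s --http-health-check=%s-check\n' "$pool" "$region" "$pool"
  printf 'gcloud compute target-pools add-instances %s --region=%s --instances=%s --instances-zone=%s\n' "$pool" "$region" "$instances" "$zone"
  printf 'gcloud compute forwarding-rules create %s-rule --region=%s --ports=80 --target-pool=%s\n' "$pool" "$region" "$pool"
)

network_lb_cmds europe-west1 web-pool europe-west1-b web-b
```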

5. Cloud SQL

To create a cloud instance optimized for MySQL databases, go to Storage -> Cloud SQL -> New. I chose the second tier in the list: 1 GB RAM, 250 GB disk. I tested it with an 800 MB database, and it flies. After that, ISPanel on the instance was pointed at this "external" MySQL server.

The databases can be accessed from PHP, Python, Java, the console, and so on. Here is an example for PHP:

```php
// Using PDO_MySQL (connecting from App Engine)
$db = new PDO(
    'mysql:unix_socket=/cloudsql/<ID was here>:<Cloud SQL name here>',
    'root', // username
    ''      // password
);

// Using mysqli (connecting from App Engine)
$sql = new mysqli(
    null,   // host
    'root', // username
    '',     // password
    '',     // database name
    null,   // port
    '/cloudsql/<ID was here>:<Cloud SQL name here>' // socket
);

// Using the legacy MySQL API (connecting from App Engine);
// the mysql_* functions were removed in PHP 7
$conn = mysql_connect(
    ':/cloudsql/<ID was here>:<Cloud SQL name here>',
    'root', // username
    ''      // password
);
```

Users are created through a dedicated console.

Access is granted to selected instances or IP addresses.

In the Cloud SQL management console, the Edit button leads to the familiar my.cnf settings.
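The PHP examples above use the App Engine socket; from a Compute Engine instance the usual route is a plain TCP connection from an authorized address. A sketch of the user and access steps with gcloud follows. The instance name, user name, and the 203.0.113.10 address are placeholders, CHANGE_ME and CLOUD_SQL_IP must be replaced by hand, and the commands are only printed. As far as I know, --authorized-networks replaces the existing list rather than appending to it.

```shell
# Dry-run sketch: create a MySQL user, authorize one client IP, connect.
cloudsql_access_cmds() (
  instance=$1; user=$2; client_ip=$3
  printf 'gcloud sql users create %s --instance=%s --host=%% --password=CHANGE_ME\n' "$user" "$instance"
  printf 'gcloud sql instances patch %s --authorized-networks=%s/32\n' "$instance" "$client_ip"
  printf 'mysql --host=CLOUD_SQL_IP --user=%s --password\n' "$user"
)

cloudsql_access_cmds my-sql appuser 203.0.113.10
```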

6. Attaching Cloud Storage

Not knowing any better, I created the bucket from the web interface, although it would have been easier to open the server console and create it from there. Google's command-line tools come preinstalled on instances. I used gsutil:

```
gsutil
Usage: gsutil [-D] [-DD] [-h header]... [-m] [-o] [-q] [command [opts...] args...]
```

First, update the components:

```
gcloud components update
```

Log in:

```
root@host:~# gcloud auth login
You are running on a GCE VM. It is recommended that you use
service accounts for authentication.
You can run:
$ gcloud config set account ``ACCOUNT''
to switch accounts if necessary.
Your credentials may be visible to others with access to this
virtual machine. Are you sure you want to authenticate with
your personal account?
Do you want to continue (Y/n)? y
Go to the following link in your browser:
https://accounts.google.com/o/oauth2/auth?
Enter verification code:
```

The link was 10 lines long; I shortened it a bit. Follow it, grant access as a Google user, and you get an ID to enter as the verification code.

Now we have access, in my case, to an existing bucket. Or we can create one:

```
:~# gsutil ls
gs://storage/

# OR: the command below creates a bucket
gsutil mb
CommandException: The mb command requires at least 1 argument. Usage:
gsutil mb [-c class] [-l location] [-p proj_id] uri..

# Let's see what we have
:~# gsutil ls gs://storage/
gs://storage/gcsfs_0.15-1_amd64.deb
```

I had uploaded a file there.
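Since the listing above only shows gsutil mb failing without arguments, here is a sketch of the complete create, upload, and list sequence. The bucket and file names are hypothetical, -l EU is just one possible location, and the commands are printed, not executed.

```shell
# Dry-run sketch: make a bucket, copy a file into it, list the result.
bucket_upload_cmds() (
  bucket=$1; file=$2
  printf 'gsutil mb -l EU gs://%s\n' "$bucket"
  printf 'gsutil cp %s gs://%s/\n' "$file" "$bucket"
  printf 'gsutil ls gs://%s/\n' "$bucket"
)

bucket_upload_cmds my-example-bucket backup.tar.gz
```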

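We can synchronize the bucket with a local directory:

```
gsutil rsync -d -r gs://zp-storage/ /usr/src
```

Note that -d deletes files in the destination (here /usr/src) that are absent from the source, so double-check the direction before running it.

And so on and so forth.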
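Because of the -d flag, it is worth previewing a synchronization first. gsutil rsync has a -n option for a dry run; the small wrapper below (my own naming) prints either the preview or the real command for the same source and destination.

```shell
# rsync_cmd MODE SRC DST: MODE "preview" adds -n (dry run), anything
# else prints the real command. Nothing is executed here.
rsync_cmd() (
  mode=$1; src=$2; dst=$3
  flags='-d -r'
  if [ "$mode" = preview ]; then
    flags="-n $flags"
  fi
  printf 'gsutil rsync %s %s %s\n' "$flags" "$src" "$dst"
)

rsync_cmd preview gs://zp-storage/ /usr/src
rsync_cmd apply gs://zp-storage/ /usr/src
```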

To mount the bucket gs://zp-storage/ as a directory, you need a couple of third-party utilities:

s3fuse is a utility used for similar purposes, for example to mount Amazon S3. It is said to work with Google Cloud Storage as well, but I could not find a sensible config with even a single comment on how to do that.

gcsfs is a utility that works with Google Cloud Storage.

s3fuse would not install from packages because of its dependencies, so I built it from source. The build is the usual ./configure && make && make install, and here is the list of dependencies:

```
aptitude install mpi-default-bin mpi-default-dev libboost-all-dev povray libxml++2.6-2 libxml++2.6-dev libfuse-dev libfuse
```

gcsfs, on the other hand, installed from a deb package.

Required changes to the config file for connecting Google Cloud Storage:

```
nano /etc/gcsfs.conf

bucket_name=<bucket name>
service=google-storage
gs_token_file=/etc/gs.token
```

```
touch /etc/gs.token
gcsfs_gs_get_token /etc/gs.token
```

The last command starts web authorization via a link of the form

accounts.google.com/o/oauth2/auth?client_idbLaBlABBBBLLLLAAAA

The authorization code obtained there must be entered at the prompt to create the connection token for Storage.

```
:/etc# /usr/bin/gcsfs
Usage: gcsfs [options] <mountpoint>

Options:
  -f                     stay in the foreground (i.e., do not daemonize)
  -h, --help             print this help message and exit
  -o OPT...              pass OPT (comma-separated) to FUSE, such as:
     allow_other         allow other users to access the mounted file system
     allow_root          allow root to access the mounted file system
     default_permissions enforce permissions (useful in multiuser scenarios)
     gid=<id>            force group ID for all files to <id>
     config=<file>       use <file> rather than the default configuration file
     uid=<id>            force user ID for all files to <id>
  -v, --verbose          enable logging to stderr (can be repeated for more verbosity)
  -vN, --verbose=N       set verbosity to N
  -V, --version          print version and exit
```

Mount it like this:

```
/usr/bin/gcsfs -o allow_other /<directory>
```

That is the end of this little how-to. If you opened at least one spoiler, well done.

A big request. Based on my previous experience of writing articles on Habrahabr, I ask you to explain your downvotes. Otherwise I risk never understanding where my shortcomings lie: in the style of presentation, in the specifics, or in how I perceive and present information. I will send positive vibes for every comment.

UPD <03/12/2015>: It turned out that outgoing traffic on port 25 is blocked on Google Cloud instances, so neither sending mail nor connecting to another server on port 25 works at all. Instead, Google suggests using a relay mail server that accepts mail on port 587.
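As an illustration of the relay setup, here is a sketch that assumes Postfix is the mail server on the instance, which this article does not specify. The relay host smtp.example.com is a placeholder, and a real relay will also need credentials (smtp_sasl_password_maps), omitted here. The postconf commands are only printed.

```shell
# Dry-run sketch: prints postconf commands that route outgoing mail
# through a relay on the submission port (587 by default).
relay_cmds() (
  host=$1; port=${2:-587}
  printf "postconf -e 'relayhost = [%s]:%s'\n" "$host" "$port"
  printf "postconf -e 'smtp_tls_security_level = encrypt'\n"
  printf "postconf -e 'smtp_sasl_auth_enable = yes'\n"
)

relay_cmds smtp.example.com
```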