Parse Microsoft Office localization file format

Have you ever noticed that the AGGREGATE function in Excel in the second set of arguments has an incorrect description of the arguments? In fact, the second set of argument descriptions is mixed up from the second and first sets. This bug is accurately reproduced in Excel 2010, 2013. I was wondering why this is happening, because Microsoft cannot be so negligent in the interface of one of its main products. The result was a complete analysis of the MS Office localization file format.

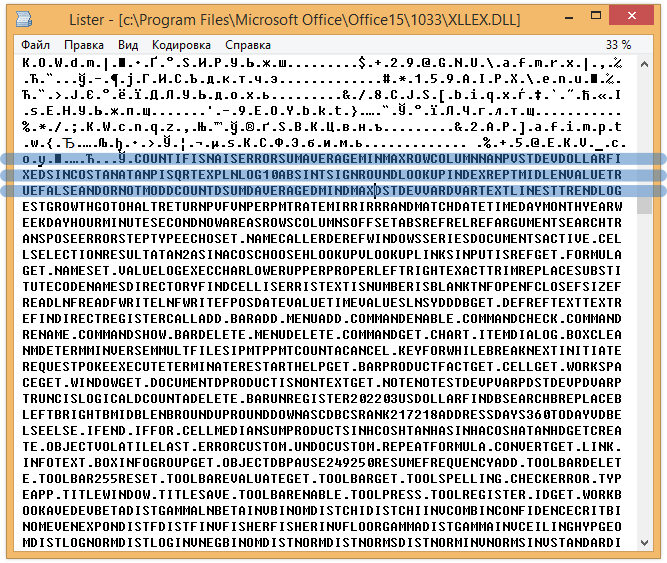

After a short search for descriptions of function arguments by the contents of files in the office folder, the file c: \ Program Files \ Microsoft Office \ Office15 \ 1033 \ XLINTL32.DLL was found . Where 1033 is the LCID of the localization language ( more on msdn ).

From a cursory glance, it became clear that, in principle, I found what I was looking for. The argument descriptions for the AGGREGATE function for both options in the file were correct. It turned out that Excel incorrectly parses its own localization file. Then it was decided to write your Excel localization file parser, or at least understand the format of MS Office localization files.

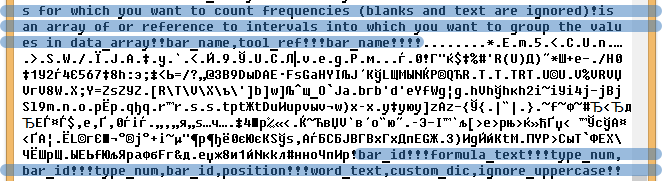

To begin with, it was decided to write the parser only of the descriptions of the arguments and functions, since by a quick look at the file, which is presented above, it seemed that the format is quite simple - an exclamation mark is used as a separator between the text, and what text means what can be understood experimentally .

As a result, after a slightly thoughtful reading of the file, such a scheme for describing arguments and functions was revealed:

According to this scheme, a parser was quickly written that quite successfully coped with its work, but there were several problems:

But in principle, with a lot of dirty hacks, it was possible to pull out function descriptions for almost all localizations.

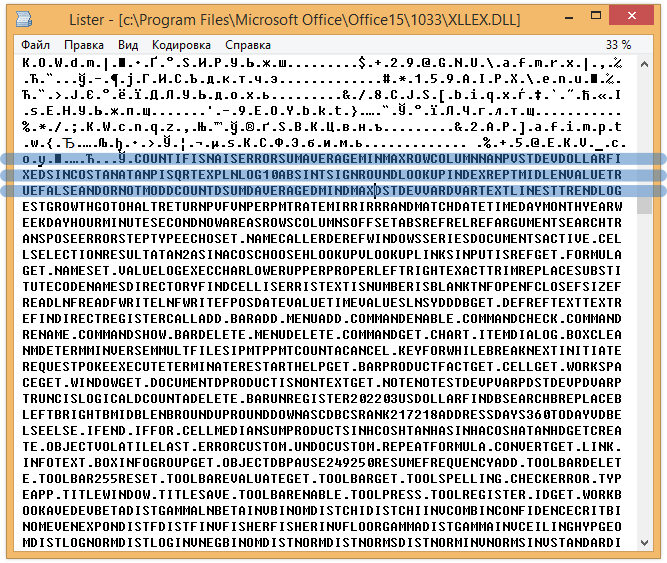

It remains to solve the problem of which function which description belongs. To do this, I again started searching the contents of files in MS Office folders, only this time I was looking for function names. And I was lucky: next to the XLINTL32.DLL file with the descriptions of the functions was the XLLEX.DLL file, in which there was something similar to the names of the functions:

Only they walked somehow in a row and without spaces. And if for English it was still possible to parse this text by hand into separate function names, then for Arabic or Thai I just could not do it.

In principle, it became clear here that it was time to sort out the format of Excel localization files already, or to get into this business and go to bed. The first one was chosen.

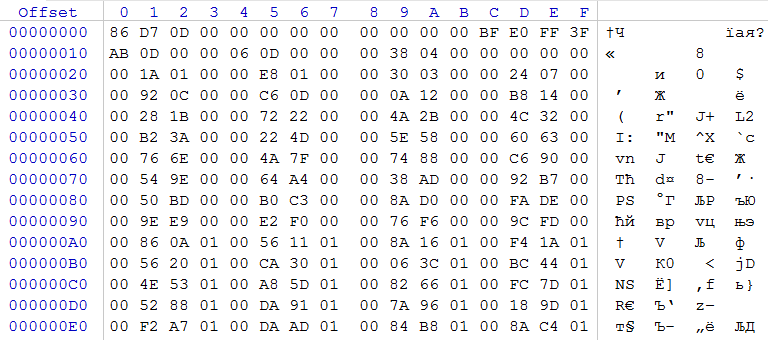

At first I noticed that both the descriptions of the functions and the names of the functions are stored in dll files in the resource with the name “1” and type “234”. Thoughtful study of the resource dump from the XLLEX.DLL file (this is the one with the function names) led me to the following discovery: between the sections with normal text there are sections with crooks that should have a certain meaning. Then it was decided to study these areas more deeply, using WinHEX and a calculator. Let’s take the site of the crooks that go in front of the site with the names of the functions:

The first two bytes: 01 00 - I don’t know what they mean yet. The second two bytes are 56 02 - if you turn them over, it turns out 0256, and if you also transfer from the hexadecimal system to decimal, you get 598. Exactly as many function names below in the block of meaningful text. This is already pleasing. We look further: the following pairs of bytes, if they are interchanged, are similar to an increasing sequence. Indeed, these bytes are the offset of the description of an individual function from the end of the krakozyabr block. In fact, the screenshot from the XLLEX.DLL file shows that the first function is COUNT - 5 bytes (0005h-0000h), the second - IF - 2 bytes (0007h-0005h), the third - ISNA - 4 bytes (000Bh-0007h) .

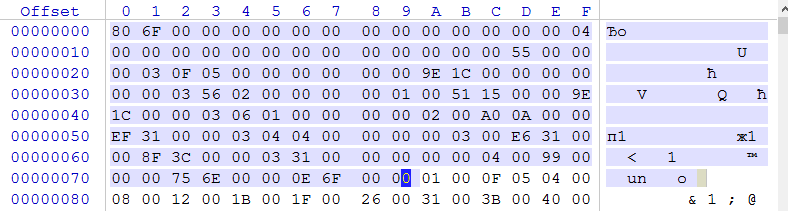

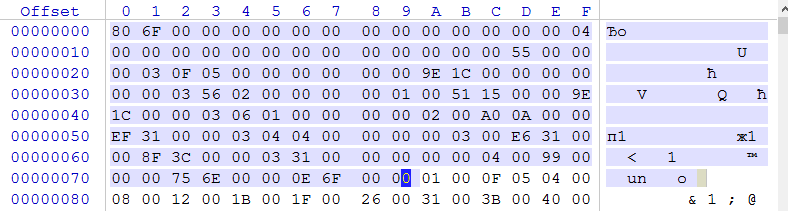

This is all very good, but how to determine where the krakozyabr block begins, in which the lengths of the names of the functions are given. Indeed, in each localization, this block has its own offset. Then I began to dig the header of the resource dump from the XLLEX.DLL file.

The first 4 bytes are the size of the resource. Further, I was interested in the bytes that are located at the offset 33h 34h - their value - 0256 - is exactly the same as the number of function names recorded in the file. In addition, every 17 bytes 03h is repeated, and the last 4 bytes in the area selected in the screenshot - 0E 6F 00 00 just equal the number equal to the size of the resource = the size of the selected area + 4 + 4 - 1. That is, in fact, this the size of the part of the file where the data is.

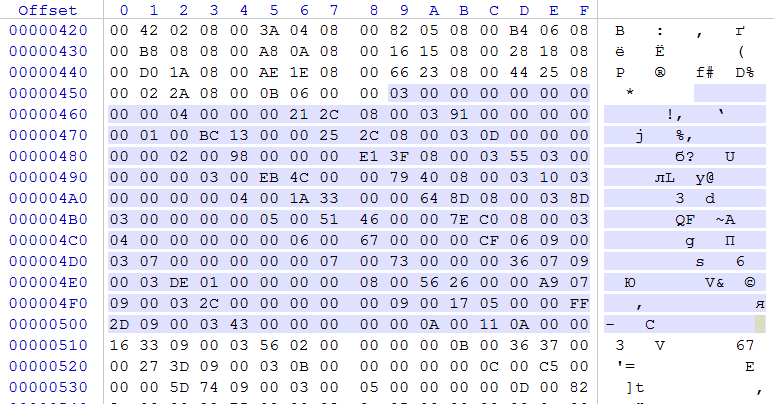

Now you can write out all the bytes that are between repeating 03h and group them a little:

After long searches of different options that these bytes can mean, the following pattern was highlighted:

Basically, this data is already enough to make an automatic parser of the XLLEX.DLL file and pull out the names of all functions in all languages and a lot of other information. But in the process there was one problem: only a very small part of the localizations stores data in UTF-8 format. Most of the data is stored in some completely incomprehensible formats: each character is encoded by 1 byte with some offset relative to the table of this language in UTF-8. For example, the Cyrillic "C" and "H" were written as A1 and A7, and in the table UTF8 they have numbers D0A1 and D0A7, but at the same time, "p" was written as C0, although it should be D180.

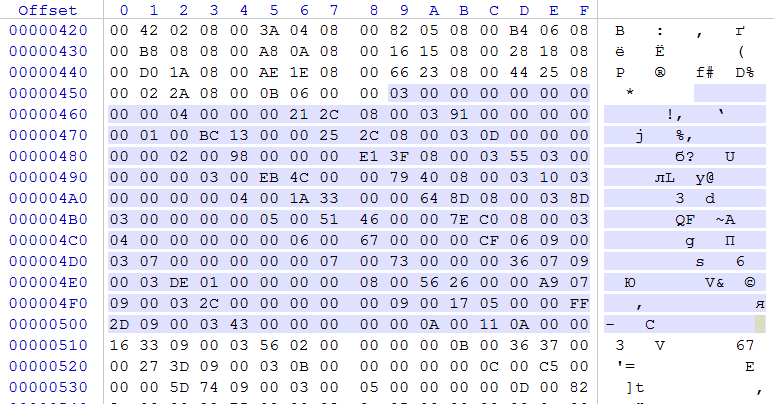

To solve this problem, I first naturally tried to understand how Excel itself translates strings from such obscure encoding at least in UTF-8. To do this, it was necessary to compare the block descriptions for several languages, I took Russian localization and English:

The beginning of the block description for English localization:

Some conclusions were drawn from this: the first two bytes in the block description are the encoding. If the first byte = 0, then the text in this block is written in Unicode LE, and here everything is simple. If the first byte of the encoding = 01, then you need to look at the second byte. If the second byte = 00, then the text is encoded in a simple UTF-8 encoding, here, too, you do not need to rack your brains. But what if the second byte is not 0?

At first, I simply compiled a dictionary: the value of the second byte is the offset in the UTF-8 table. This quickly bored me, and I began to look for a pattern. It soon became clear that the offset in the UTF-8 table can be defined as: offset = (byte2-80h) * 4 + C0h. The only problem is that for some groups of encodings C0h had to be changed to another number.

As a result, the text conversion functions began to look like this:

After all this, it was already possible to accurately and correctly extract all the localized text from the XLLEX.DLL file, but this method was completely unsuitable for the file with descriptions of functions and arguments of XLINTL32.DLL. Here I had to start everything almost from the very beginning, but it was already easier.

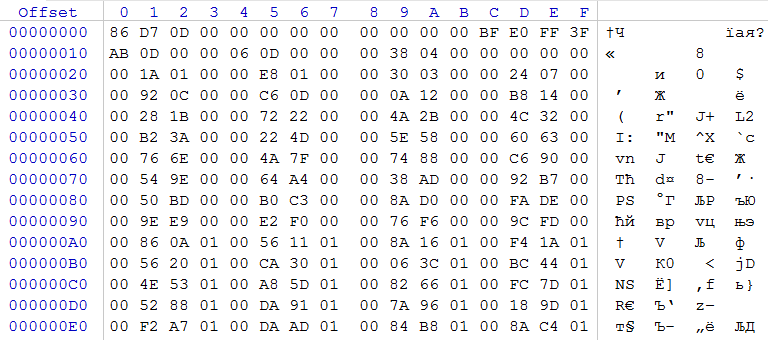

To begin with, in the XLINTL32.DLL file, I tried to find something already familiar and similar to the data from the XLLEX.DLL file. A familiar picture began at offset 0459h:

Those. starting with 04B1h there were block descriptions, the same as in the XLLEX.DLL file, but above this offset everything was somehow incomprehensible. And not all text from the resource obeyed the rules that were derived based on the analysis of the XLLEX.DLL file.

It was decided in the future those blocks that I have already learned to recognize as blocks of the second type, and those that I still do not know how to call blocks of the first type, because they went in the XLINTL32.DLL file above blocks of the second type.

The text of blocks of the first type began almost immediately after the end of the map of blocks of the second type, it remains to find where the file contains the map of blocks of the first type, and how to determine the text separator in the blocks of the first type themselves. For study, this block was selected:

Such lines are clearly visible in it: “Cut, copy, and paste”, “Print”, “For charts”, etc. In addition, a “characteristic” ladder of zeros and increasing values is visible in hex codes. The first two values in this ladder - 46h and 6Eh - the difference between them in decimal 40, because the text is explicitly set in Unicode LE, the length of “Cut, copy, and paste” will be 20 * 2 = 40. It converges. Let's check another pair of values: 78h-6Eh = 10/2 = 5 - exactly the length of the “Print”. We rewrite beautifully all the bytes from the offset 07BE66h to the start of the meaningful text:

00 46000000

00 6E000000

00 78000000

...

FF E2020000

The total length of the resulting statement is 07BEABh - 07BE66h + 1 = 46h - somewhere it already was. It turns out that the descriptions of elements in a block of the first type look like this: 1 byte is the type of element, 4 bytes are the offset of the element relative to the beginning of this block. As it turned out later, the types of elements in a block of the first type are 00h — plain text in Unicode, 0Ah — some obscure krakozyabry, FFh — the last element in this block.

Now the last thing is left: to deal with the resource header and find out how the offsets are determined for all blocks.

To begin with, I remembered that the description of all blocks of the second type ends at offset 0A67h, and begins at offset 0459h, so the length of the description of blocks of the second type is 0A67h-0459h + 1 = 060Fh, and at 0455h there is a four-byte number 060Bh: 060Bh + 4 = 060Fh . It turns out that at 455h the length of the description section of the blocks of the second type is recorded.

In order to understand how the displacements of the blocks of the first type from the beginning of the resource are described, it was decided to compile for each block of the first type a table of displacements of the beginning of this block and its length.

The first block of the first type begins where the descriptions of blocks of the second type - 0A68h end.

And between the beginning of the resource and offset 0455h there were bytes very similar to the increasing sequence:

Let's try to subtract from 01E8h (offset 25h) the number 011Ah (offset 21h): 01E8h-011Ah = CEh, just the length of the second block. For fun: subtract 0330h (offset 29h) from 01E8h (offset 25h): 0330h-01E8 = 0148h, and 011Ah - it looks like the length of the first block. It turns out that from the 1Dh offset there are descriptions of the offsets of blocks of the first type. They are recorded in the form of offsets of the beginning of the block from the end of the descriptions of blocks of the second type (or the beginning of the section with the contents of the blocks - to whom it is more convenient). It remains to understand what kind of bytes are between 04h and 1D. If we subtract 1D (the beginning of the description of the displacements of blocks of the first type) and 0455h (the offset along which the length of the description of the blocks of the second type is stored, i.e. the descriptions of the blocks of the first type end): 0455h-1D = 0438h, just such a number lies at the offset 19h. What is in the remaining twenty-one bytes between 04h and 19h is a mystery to me. Yes, and especially did not want to understand, because in all office localization files this offset is the same.

My program for reading Microsoft Office localization files: Link to Ya.

UPD disk : 01/16/2016

It turned out that blocks of the first type are sometimes encoded with an additional dictionary, which is written to the very end of the file. Apparently this was done to reduce the file size, because A dictionary can have many letters represented by one byte. Actually the link is now updated source code.

Among the names of Excel functions, there are those that are not described anywhere in the documentation, and you cannot use them in formulas. Why their names are localized for me is still a mystery. Here are some of these features:

On long winter holidays, I was even more bored, and I set out to determine how Excel calculates the line height based on the font. After several tens of hours spent in OllyDbg and IDA, almost 2,000 lines of C # code were born, which give 100% coincidence of line height with Excel for all fonts, their sizes and parameters. In addition, several interesting features of Excel internals were clarified, but this topic is already for a separate article.

Forehead option

After a short search for descriptions of function arguments by the contents of files in the office folder, the file c: \ Program Files \ Microsoft Office \ Office15 \ 1033 \ XLINTL32.DLL was found . Where 1033 is the LCID of the localization language ( more on msdn ).

From a cursory glance, it became clear that, in principle, I found what I was looking for. The argument descriptions for the AGGREGATE function for both options in the file were correct. It turned out that Excel incorrectly parses its own localization file. Then it was decided to write your Excel localization file parser, or at least understand the format of MS Office localization files.

To begin with, it was decided to write the parser only of the descriptions of the arguments and functions, since by a quick look at the file, which is presented above, it seemed that the format is quite simple - an exclamation mark is used as a separator between the text, and what text means what can be understood experimentally .

As a result, after a slightly thoughtful reading of the file, such a scheme for describing arguments and functions was revealed:

- All descriptions are written in some kind of internal function order for Excel, which did not coincide with the function order described in the specification for the xls format.

- Each description of the function is written in the following form: “!” + Comma-separated descriptions of the function arguments + [“!” + The second set of arguments, if any] + ”!!” + description of the function itself + ”!” + Descriptions of arguments shared by “ ! ”

- Not all functions have a description, there are even completely empty functions that are written in the file like this: !!! - and that’s it.

According to this scheme, a parser was quickly written that quite successfully coped with its work, but there were several problems:

- Between the descriptions of some functions, and specifically between the descriptions for functions numbered 249 and 250, as well as between 504 and 505 there were incomprehensible crackers, and then again normal descriptions:

- Descriptions of some functions did not fit the chosen scheme and crutches had to be written for them.

- It is not clear what function which description belongs.

- The offset of the beginning of the block of function descriptions for different localizations was different, and I had to recognize this offset by hand and enter it into the dictionary in the parser. What kind of automation can we talk about here?

- In some localizations, the descriptions were in UTF-8 format, in other UTF-16s, somewhere in general half of the descriptions were in UTF-8, the other in UTF-16.

But in principle, with a lot of dirty hacks, it was possible to pull out function descriptions for almost all localizations.

Going deeper

It remains to solve the problem of which function which description belongs. To do this, I again started searching the contents of files in MS Office folders, only this time I was looking for function names. And I was lucky: next to the XLINTL32.DLL file with the descriptions of the functions was the XLLEX.DLL file, in which there was something similar to the names of the functions:

Only they walked somehow in a row and without spaces. And if for English it was still possible to parse this text by hand into separate function names, then for Arabic or Thai I just could not do it.

In principle, it became clear here that it was time to sort out the format of Excel localization files already, or to get into this business and go to bed. The first one was chosen.

At first I noticed that both the descriptions of the functions and the names of the functions are stored in dll files in the resource with the name “1” and type “234”. Thoughtful study of the resource dump from the XLLEX.DLL file (this is the one with the function names) led me to the following discovery: between the sections with normal text there are sections with crooks that should have a certain meaning. Then it was decided to study these areas more deeply, using WinHEX and a calculator. Let’s take the site of the crooks that go in front of the site with the names of the functions:

The first two bytes: 01 00 - I don’t know what they mean yet. The second two bytes are 56 02 - if you turn them over, it turns out 0256, and if you also transfer from the hexadecimal system to decimal, you get 598. Exactly as many function names below in the block of meaningful text. This is already pleasing. We look further: the following pairs of bytes, if they are interchanged, are similar to an increasing sequence. Indeed, these bytes are the offset of the description of an individual function from the end of the krakozyabr block. In fact, the screenshot from the XLLEX.DLL file shows that the first function is COUNT - 5 bytes (0005h-0000h), the second - IF - 2 bytes (0007h-0005h), the third - ISNA - 4 bytes (000Bh-0007h) .

This is all very good, but how to determine where the krakozyabr block begins, in which the lengths of the names of the functions are given. Indeed, in each localization, this block has its own offset. Then I began to dig the header of the resource dump from the XLLEX.DLL file.

The first 4 bytes are the size of the resource. Further, I was interested in the bytes that are located at the offset 33h 34h - their value - 0256 - is exactly the same as the number of function names recorded in the file. In addition, every 17 bytes 03h is repeated, and the last 4 bytes in the area selected in the screenshot - 0E 6F 00 00 just equal the number equal to the size of the resource = the size of the selected area + 4 + 4 - 1. That is, in fact, this the size of the part of the file where the data is.

Now you can write out all the bytes that are between repeating 03h and group them a little:

| 03 | 0F05 | 00000000 | 0000 | 9E1C0000 | 00000000 |

| 03 | 5602 | 00000000 | 0100 | 51150000 | 9E1C0000 |

| 03 | 0601 | 00000000 | 0200 | A00A0000 | EF310000 |

| 03 | 0404 | 00000000 | 0300 | E6310000 | 8F3C0000 |

| 03 | 3100 | 00000000 | 0400 | 99,000,000 | 756E0000 |

- 1 byte is the type of block (types 02, 03, 04 are found in office files — ordinary lines, 01 — it looks like a WordBasic function table, there the whole description goes in the form of a function name and some kind of index for each function).

- 2 bytes - the number of elements in the block.

- 4 bytes - I don’t know. In all the files that I watched, this value is always 0, it may be reserved.

- 2 bytes - serial number of the block.

- 4 bytes - block size

- 4 bytes - the offset of the block from the end of the block description, in the case of our file from 7Ah.

We deal with encodings

Basically, this data is already enough to make an automatic parser of the XLLEX.DLL file and pull out the names of all functions in all languages and a lot of other information. But in the process there was one problem: only a very small part of the localizations stores data in UTF-8 format. Most of the data is stored in some completely incomprehensible formats: each character is encoded by 1 byte with some offset relative to the table of this language in UTF-8. For example, the Cyrillic "C" and "H" were written as A1 and A7, and in the table UTF8 they have numbers D0A1 and D0A7, but at the same time, "p" was written as C0, although it should be D180.

To solve this problem, I first naturally tried to understand how Excel itself translates strings from such obscure encoding at least in UTF-8. To do this, it was necessary to compare the block descriptions for several languages, I took Russian localization and English:

The beginning of the block description for English localization:

- English: 0100 5602 0500 ... (the second two bytes, as we explained above, are the number of elements in the block, the third two bytes are the length of the first element (COUNT function is 5 bytes) ...)

- Russian: 0184 5602 0400 ... (the second two bytes are the number of elements, the third two bytes are the length of the first element (the COUNT function is 4 bytes) ...)

Some conclusions were drawn from this: the first two bytes in the block description are the encoding. If the first byte = 0, then the text in this block is written in Unicode LE, and here everything is simple. If the first byte of the encoding = 01, then you need to look at the second byte. If the second byte = 00, then the text is encoded in a simple UTF-8 encoding, here, too, you do not need to rack your brains. But what if the second byte is not 0?

At first, I simply compiled a dictionary: the value of the second byte is the offset in the UTF-8 table. This quickly bored me, and I began to look for a pattern. It soon became clear that the offset in the UTF-8 table can be defined as: offset = (byte2-80h) * 4 + C0h. The only problem is that for some groups of encodings C0h had to be changed to another number.

As a result, the text conversion functions began to look like this:

C # Code

#region Convert

int GetCharSize(int blockIndex) {

if(block2Encoding[blockIndex][0] == 0)

return 2;

if(block2Encoding[blockIndex][0] == 1)

return 1;

if (block2Encoding[blockIndex][0] == 2)

return 2;

if (block2Encoding[blockIndex][0] == 3)

return 1;

return 1;

}

byte[] Convert(byte[] array, int blockIndex) {

byte encodingByte1 = block2Encoding[blockIndex][0];

byte encodingByte2 = block2Encoding[blockIndex][1];

if(encodingByte1 == 0 || encodingByte1 == 2)

return Convert0000(array);

if(encodingByte2 < 0x80)

return ConvertFromUTF8(array, 0x00, 0xC2);

int d = encodingByte2 - 0x80;

d *= 4;

byte byte1;

byte byte2;

if(d < 0x20) {

byte1 = 0;

byte2 = (byte) (0xC0 + d);

}

else if(d < 0x40) {

byte1 = 0xE0;

byte2 = (byte) (0xA0 + (d - 0x20));

}

else {

d -= 0x40;

byte1 = (byte) (0xE1 + d / 0x40);

byte2 = (byte) (0x80 + d % 0x40);

}

return ConvertFromUTF8(array, byte1, byte2);

}

byte[] ConvertFromUTF8(byte[] array, byte byte1, byte byte2) {

List result = new List();

foreach(byte b in array) {

if(b <= 0xFF / 2)

result.Add(b);

else {

if(byte1 != 0)

result.Add(byte1);

byte d = (byte) (byte2 + (b - 1) / 0xBF);

result.Add(d);

d = b;

if(b >= 0xC0) {

d = (byte) (b - 0xC0 + 0x80);

}

result.Add(d);

}

}

return result.ToArray();

}

byte[] Convert0000(byte[] array) {

return Encoding.Convert(Encoding.Unicode, Encoding.UTF8, array);

}

#endregion

We get to the core

After all this, it was already possible to accurately and correctly extract all the localized text from the XLLEX.DLL file, but this method was completely unsuitable for the file with descriptions of functions and arguments of XLINTL32.DLL. Here I had to start everything almost from the very beginning, but it was already easier.

To begin with, in the XLINTL32.DLL file, I tried to find something already familiar and similar to the data from the XLLEX.DLL file. A familiar picture began at offset 0459h:

Those. starting with 04B1h there were block descriptions, the same as in the XLLEX.DLL file, but above this offset everything was somehow incomprehensible. And not all text from the resource obeyed the rules that were derived based on the analysis of the XLLEX.DLL file.

It was decided in the future those blocks that I have already learned to recognize as blocks of the second type, and those that I still do not know how to call blocks of the first type, because they went in the XLINTL32.DLL file above blocks of the second type.

The text of blocks of the first type began almost immediately after the end of the map of blocks of the second type, it remains to find where the file contains the map of blocks of the first type, and how to determine the text separator in the blocks of the first type themselves. For study, this block was selected:

Such lines are clearly visible in it: “Cut, copy, and paste”, “Print”, “For charts”, etc. In addition, a “characteristic” ladder of zeros and increasing values is visible in hex codes. The first two values in this ladder - 46h and 6Eh - the difference between them in decimal 40, because the text is explicitly set in Unicode LE, the length of “Cut, copy, and paste” will be 20 * 2 = 40. It converges. Let's check another pair of values: 78h-6Eh = 10/2 = 5 - exactly the length of the “Print”. We rewrite beautifully all the bytes from the offset 07BE66h to the start of the meaningful text:

00 46000000

00 6E000000

00 78000000

...

FF E2020000

The total length of the resulting statement is 07BEABh - 07BE66h + 1 = 46h - somewhere it already was. It turns out that the descriptions of elements in a block of the first type look like this: 1 byte is the type of element, 4 bytes are the offset of the element relative to the beginning of this block. As it turned out later, the types of elements in a block of the first type are 00h — plain text in Unicode, 0Ah — some obscure krakozyabry, FFh — the last element in this block.

Now the last thing is left: to deal with the resource header and find out how the offsets are determined for all blocks.

To begin with, I remembered that the description of all blocks of the second type ends at offset 0A67h, and begins at offset 0459h, so the length of the description of blocks of the second type is 0A67h-0459h + 1 = 060Fh, and at 0455h there is a four-byte number 060Bh: 060Bh + 4 = 060Fh . It turns out that at 455h the length of the description section of the blocks of the second type is recorded.

In order to understand how the displacements of the blocks of the first type from the beginning of the resource are described, it was decided to compile for each block of the first type a table of displacements of the beginning of this block and its length.

The first block of the first type begins where the descriptions of blocks of the second type - 0A68h end.

| Bias | Length |

|---|---|

| 0A68h | 011Ah |

| 0B82h | 00CEh |

| 0C50h | 0148h |

Let's try to subtract from 01E8h (offset 25h) the number 011Ah (offset 21h): 01E8h-011Ah = CEh, just the length of the second block. For fun: subtract 0330h (offset 29h) from 01E8h (offset 25h): 0330h-01E8 = 0148h, and 011Ah - it looks like the length of the first block. It turns out that from the 1Dh offset there are descriptions of the offsets of blocks of the first type. They are recorded in the form of offsets of the beginning of the block from the end of the descriptions of blocks of the second type (or the beginning of the section with the contents of the blocks - to whom it is more convenient). It remains to understand what kind of bytes are between 04h and 1D. If we subtract 1D (the beginning of the description of the displacements of blocks of the first type) and 0455h (the offset along which the length of the description of the blocks of the second type is stored, i.e. the descriptions of the blocks of the first type end): 0455h-1D = 0438h, just such a number lies at the offset 19h. What is in the remaining twenty-one bytes between 04h and 19h is a mystery to me. Yes, and especially did not want to understand, because in all office localization files this offset is the same.

My program for reading Microsoft Office localization files: Link to Ya.

UPD disk : 01/16/2016

It turned out that blocks of the first type are sometimes encoded with an additional dictionary, which is written to the very end of the file. Apparently this was done to reduce the file size, because A dictionary can have many letters represented by one byte. Actually the link is now updated source code.

Short file structure specification

4 bytes - resource size

21 bytes - not known

4 bytes - number of blocks of type 1 * 4 = number of bytes that describe a map of blocks of type 1

* Start of description of a map of blocks of the first type *

4 bytes - offset of type 1 blocks from the end of the description of blocks. The difference between two adjacent values is the length of type 1 block.

* End of the description of the map of the blocks of the first type *

4 bytes - the number of bytes that describes the map of the blocks of 2 types = the number of blocks of the 2nd type * 17 bytes

* Start of the description of the map of the blocks of the second type *

1 byte - the block type of

2 bytes - the number of elements in the type 2 block

4 bytes - I don’t know. In my opinion it is always 0, it can be reserved.

2 bytes - serial number of block 2

4 bytes - block length of the second type

4 bytes - offset of the block of the second type from the end of the block description

* End of the description of the map of blocks of the second type *

4 bytes - data length for blocks of the 2nd type

* Blocks of the first type *

1 byte - element type - if FF - this the last element in this block, if 00 is plain text in Unicode, if 0A is xs.

4 bytes - element offset relative to the beginning of this block

Next elements

* End of blocks of the first type *

* Blocks of the second type *

1 byte - first byte of encoding

1 byte - second byte of encoding

2 bytes - number of elements in the block

* Map of elements of the block of the second type *

2 bytes - offset relative to the beginning of the block elements. Begins with the second element. If the offset goes over FFFF, then after the offset two more bytes are added = how many times by FFFF you need to take.

* The end of the card of the elements of the block of the second type *

* The end of the blocks of the second type *

*********************

Encoding: The

encoding is defined as follows:

if the second byte == 0, then this Simple Unicode Little Endian.

if the second byte == 1, then the elements are encoded in UTF8 and the offset can be determined by the first byte.

*********************

Blocks of the second type are of several more types

2, 3, 4 - ordinary lines

1 - it looks like a WordBasic table, there at the beginning there is a table of some indexes (maybe indexes of WordBasic functions)

21 bytes - not known

4 bytes - number of blocks of type 1 * 4 = number of bytes that describe a map of blocks of type 1

* Start of description of a map of blocks of the first type *

4 bytes - offset of type 1 blocks from the end of the description of blocks. The difference between two adjacent values is the length of type 1 block.

* End of the description of the map of the blocks of the first type *

4 bytes - the number of bytes that describes the map of the blocks of 2 types = the number of blocks of the 2nd type * 17 bytes

* Start of the description of the map of the blocks of the second type *

1 byte - the block type of

2 bytes - the number of elements in the type 2 block

4 bytes - I don’t know. In my opinion it is always 0, it can be reserved.

2 bytes - serial number of block 2

4 bytes - block length of the second type

4 bytes - offset of the block of the second type from the end of the block description

* End of the description of the map of blocks of the second type *

4 bytes - data length for blocks of the 2nd type

* Blocks of the first type *

1 byte - element type - if FF - this the last element in this block, if 00 is plain text in Unicode, if 0A is xs.

4 bytes - element offset relative to the beginning of this block

Next elements

* End of blocks of the first type *

* Blocks of the second type *

1 byte - first byte of encoding

1 byte - second byte of encoding

2 bytes - number of elements in the block

* Map of elements of the block of the second type *

2 bytes - offset relative to the beginning of the block elements. Begins with the second element. If the offset goes over FFFF, then after the offset two more bytes are added = how many times by FFFF you need to take.

* The end of the card of the elements of the block of the second type *

* The end of the blocks of the second type *

*********************

Encoding: The

encoding is defined as follows:

if the second byte == 0, then this Simple Unicode Little Endian.

if the second byte == 1, then the elements are encoded in UTF8 and the offset can be determined by the first byte.

*********************

Blocks of the second type are of several more types

2, 3, 4 - ordinary lines

1 - it looks like a WordBasic table, there at the beginning there is a table of some indexes (maybe indexes of WordBasic functions)

It is interesting

Among the names of Excel functions, there are those that are not described anywhere in the documentation, and you cannot use them in formulas. Why their names are localized for me is still a mystery. Here are some of these features:

- GOTO (reference);

- HALT (cancel_close);

- ECHO (logical);

- WINDOWS (type_num, match_text);

- INPUT (message_text, type_num, title_text, default, x_pos, y_pos, help_ref);

- ADD.TOOLBAR (bar_name, tool_ref).

PS

On long winter holidays, I was even more bored, and I set out to determine how Excel calculates the line height based on the font. After several tens of hours spent in OllyDbg and IDA, almost 2,000 lines of C # code were born, which give 100% coincidence of line height with Excel for all fonts, their sizes and parameters. In addition, several interesting features of Excel internals were clarified, but this topic is already for a separate article.