The Art of Feature Engineering in Machine Learning

Hi, Habr!

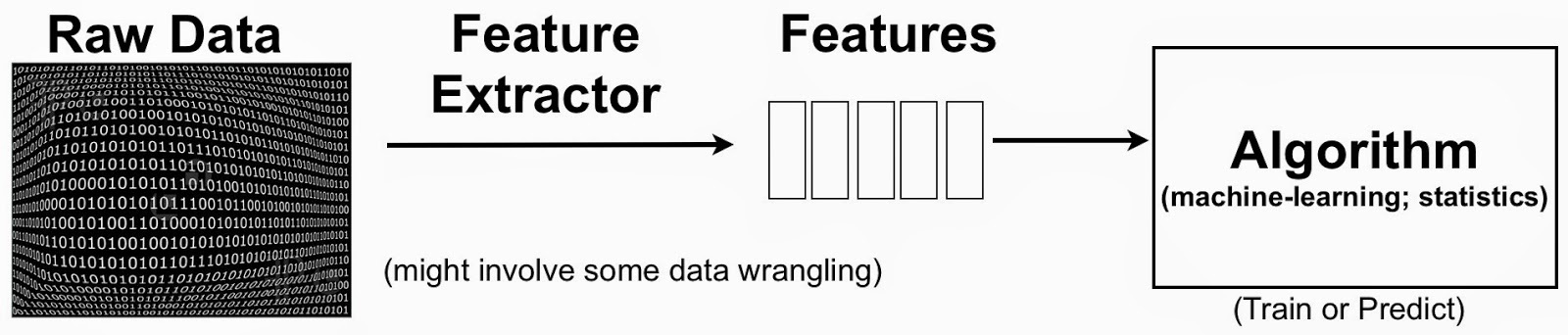

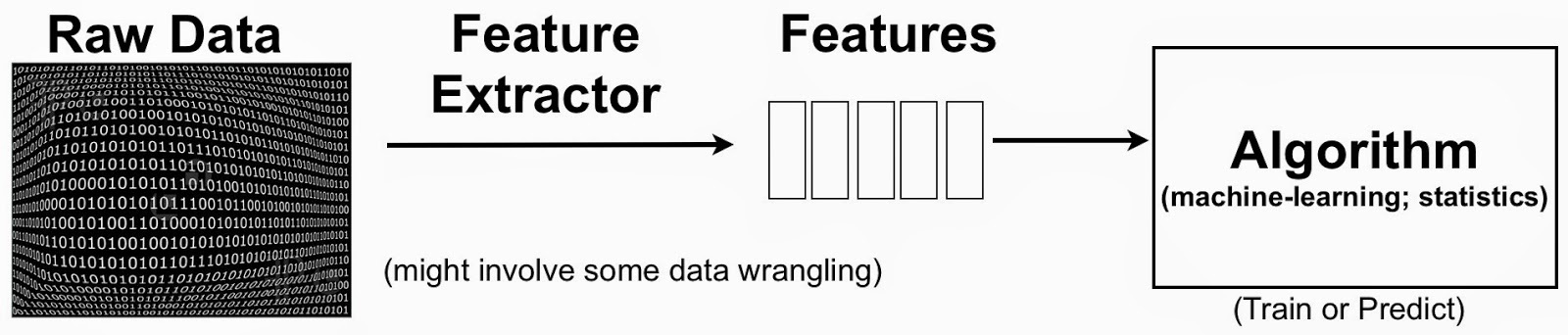

In the previous article (“Introduction to Machine Learning with Python and Scikit-Learn”), we introduced the basic steps for solving machine learning problems. Today we'll look more closely at techniques that can significantly improve the quality of the resulting algorithms. One of these techniques is feature engineering. Note up front that this is something of an art, which can be learned only by solving a large number of problems. Nevertheless, experience does yield some common approaches, and I would like to share them in this article.

As we already know, almost any task begins with creating (engineering) and selecting (selection) features. Methods for selecting features have been studied quite well, and a large number of algorithms already exist for this (we will talk more about them next time). Creating features, however, is something of an art and falls entirely on the shoulders of the data scientist. This task is often the most difficult one in practice, and it is the successful creation and selection of features that produces very high-quality algorithms. Simple algorithms with well-chosen features often win on kaggle.com (excellent examples are the Heritage Provider Network Health Prize and “Feature Engineering and Classifier Ensemble for KDD Cup 2010”).

Probably the best-known and clearest example of feature engineering is one many of you have already seen in Andrew Ng's course. The example is as follows: a linear model predicts the price of a house from many features, among them the length and the width of the house. Linear regression then predicts the price as a linear combination of width and length. But any sensible person understands that the price of a house depends primarily on its area, which cannot be expressed as a linear combination of length and width. The quality of the algorithm therefore improves significantly if the length and width are replaced by their product. We thus get a new feature that affects the price of the house most strongly, and we also reduce the dimension of the feature space. In my opinion, this is the simplest and most obvious example of creating features. Note that it is very hard to come up with a method that would construct good features for any given task. That is why the post is called “The Art of Feature Engineering”. However, there are a number of simple methods and techniques that I would like to share from my own experience:
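Before moving on to those techniques, the house example can be sketched in a few lines. This is only an illustration under stated assumptions: the houses and the constant `PRICE_PER_M2` are made up, the prices are generated to be exactly proportional to the area, and the helper names `add_area` and `fit_through_origin` are hypothetical.

```python
# Synthetic houses as (length_m, width_m). Prices are generated as
# PRICE_PER_M2 * area purely for illustration; this is not real data.
PRICE_PER_M2 = 1000.0
houses = [(10.0, 8.0), (12.0, 6.0), (7.0, 7.0), (15.0, 4.0), (9.0, 11.0)]
prices = [PRICE_PER_M2 * length * width for length, width in houses]


def add_area(length, width):
    """Return the engineered feature: floor area as length * width."""
    return length * width


def fit_through_origin(xs, ys):
    """Least-squares slope k for the one-feature model y = k * x."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


# Replace the two raw features by a single engineered one.
areas = [add_area(length, width) for length, width in houses]

# A one-feature linear model on the area explains the synthetic prices.
k = fit_through_origin(areas, prices)
max_error = max(abs(k * a - p) for a, p in zip(areas, prices))
print(f"fitted price per m2: {k:.1f}, max abs error: {max_error:.6f}")
```

On real data the fit would of course not be exact; the point is only that a single product feature lets a linear model capture a dependence that length and width cannot express separately.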

Categorical features

Suppose our objects have features that take values from a finite set. For example, a color feature that may be blue, red, or green, or whose value may be unknown. In this case it is useful to add features of the form is_red, is_blue, is_green, is_red_or_blue, and other possible combinations.
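A minimal sketch of such indicator features in plain Python follows. The function name `color_indicators` and the extra `is_unknown` flag are assumptions made for the illustration; which combinations are worth adding depends on the task.

```python
COLORS = ("red", "blue", "green")


def color_indicators(color):
    """Map a raw color value to 0/1 indicator features.

    Any value outside COLORS (including None) is treated as unknown.
    """
    features = {f"is_{name}": int(color == name) for name in COLORS}
    features["is_unknown"] = int(color not in COLORS)
    # A combined indicator, as mentioned in the text.
    features["is_red_or_blue"] = int(color in ("red", "blue"))
    return features


rows = ["red", "green", None, "blue"]
encoded = [color_indicators(color) for color in rows]
for raw, feats in zip(rows, encoded):
    print(raw, feats)
```

Libraries such as pandas and scikit-learn provide ready-made one-hot encoders; the hand-written version is used here only to keep the idea visible.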

Dates and time

If the features include a date or time, it very often helps to add features for the time of day, the time elapsed since a certain moment, the season, or the quarter. It also helps to split the time into hours, minutes, and seconds (if the time is given as a Unix timestamp or in ISO format). There are many options here, and each is chosen for the specific task.
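As a rough sketch, the features listed above can be derived with the standard `datetime` module. The function names, the Northern Hemisphere season mapping, the `is_night` cut-off, and the sample timestamp are all assumptions chosen for the example.

```python
from datetime import datetime, timezone


def season(month):
    """Meteorological season, assuming the Northern Hemisphere."""
    return ("winter", "spring", "summer", "autumn")[(month % 12) // 3]


def time_features(unix_ts, reference_ts=0):
    """Derive calendar features from a Unix timestamp interpreted as UTC."""
    dt = datetime.fromtimestamp(unix_ts, tz=timezone.utc)
    return {
        "hour": dt.hour,
        "minute": dt.minute,
        "second": dt.second,
        "weekday": dt.weekday(),  # Monday == 0
        "quarter": (dt.month - 1) // 3 + 1,
        "season": season(dt.month),
        "is_night": int(dt.hour < 6),  # arbitrary cut-off for illustration
        "seconds_since_reference": unix_ts - reference_ts,
    }


# An ISO 8601 string can be converted to a Unix timestamp first.
ts = int(datetime.fromisoformat("2014-07-15T13:45:30+00:00").timestamp())
features = time_features(ts, reference_ts=ts - 3600)
print(features)
```

Which reference moment to use for the elapsed time (registration date, previous event, start of the dataset) is a task-specific choice.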

Numeric variables

If the variable is real-valued, rounding it or splitting it into integer and fractional parts (with subsequent normalization) often helps. It also often helps to convert a numerical feature into a categorical one. For example, if there is a feature such as mass, you can introduce features of the form “mass is greater than X” or “mass is between X and Y”.
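Both ideas can be sketched with the standard library. The thresholds in `MASS_EDGES` stand in for the X and Y from the text and are hypothetical, as are the function names; normalization is left out to keep the sketch short.

```python
import math
from bisect import bisect_right


def split_real(value):
    """Split a real number into its integer and fractional parts."""
    fractional, integer = math.modf(value)
    return int(integer), fractional


def bin_index(value, edges):
    """Index of the interval that value falls into, given sorted bin edges.

    With edges [X, Y] the result is 0 for value < X, 1 for X <= value < Y,
    and 2 for value >= Y.
    """
    return bisect_right(edges, value)


MASS_EDGES = [50.0, 80.0]  # hypothetical thresholds X and Y, in kg

masses = [42.5, 50.0, 79.9, 93.25]
bins = [bin_index(mass, MASS_EDGES) for mass in masses]
for mass, b in zip(masses, bins):
    whole, frac = split_real(mass)
    print(mass, whole, round(frac, 2), b)
```

The resulting bin index can be used directly as an ordinal feature or expanded further into indicator features, one per interval.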

Processing string features

If there is a feature whose values are drawn from a finite set of strings, do not forget that the strings themselves often contain information. A good example is the Titanic: Machine Learning from Disaster task, in which passengers' names contain titles such as “Mr.”, “Mrs.”, and “Miss.”, from which gender is easy to extract.
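A possible sketch of the title extraction uses a regular expression. It assumes names follow a “Surname, Title. Given names” layout; the regular expression, the mapping `TITLE_TO_SEX`, and the function names are illustrative choices, and only the three titles mentioned above are mapped.

```python
import re

# Matches the title between the comma and the first period,
# e.g. "Mr" in "Braund, Mr. Owen Harris".
TITLE_RE = re.compile(r",\s*([^.,]+)\.")

# Only the titles mentioned in the text; any other title maps to None.
TITLE_TO_SEX = {"Mr": "male", "Mrs": "female", "Miss": "female"}


def extract_title(name):
    """Return the title found in a name string, or None if there is none."""
    match = TITLE_RE.search(name)
    return match.group(1).strip() if match else None


def title_features(name):
    """Build features from the title embedded in a name."""
    title = extract_title(name)
    return {"title": title, "sex_from_title": TITLE_TO_SEX.get(title)}


names = [
    "Braund, Mr. Owen Harris",
    "Heikkinen, Miss. Laina",
    "Futrelle, Mrs. Jacques Heath (Lily May Peel)",
    "No Title Here",
]
extracted = [title_features(name) for name in names]
for name, feats in zip(names, extracted):
    print(name, "->", feats)
```

The title itself can also be kept as a categorical feature, since it may carry more than gender, for example marital status.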

Results of other algorithms

Often the output of another algorithm can also be added as a feature. For example, when solving a classification problem, you can first solve an auxiliary clustering problem and use each object's cluster as a feature in the original task. This is usually motivated by an initial analysis of the data showing that the objects cluster well.
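The idea can be sketched with a tiny k-means written in plain Python, so that the example has no dependencies. In practice a library implementation such as scikit-learn's `KMeans` would be the more likely choice. The points, the initial centers, and all function names here are assumptions made for the illustration.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point, centers):
    """Index of the center closest to point."""
    return min(range(len(centers)),
               key=lambda i: squared_distance(point, centers[i]))


def kmeans(points, centers, iterations=10):
    """Plain Lloyd iterations starting from the given initial centers."""
    centers = [tuple(c) for c in centers]
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for p in points:
            groups[nearest(p, centers)].append(p)
        # Move each center to the mean of its group; keep the old
        # center if a group ends up empty.
        centers = [
            tuple(sum(coords) / len(g) for coords in zip(*g)) if g else c
            for g, c in zip(groups, centers)
        ]
    return centers


# Two visibly separated blobs of synthetic 2-D points.
points = [(1.0, 1.1), (0.9, 1.0), (1.2, 0.8),
          (5.0, 5.1), (5.2, 4.9), (4.8, 5.0)]
centers = kmeans(points, centers=[points[0], points[3]])

# Append the cluster index as an extra feature for the classifier.
augmented = [p + (nearest(p, centers),) for p in points]
for row in augmented:
    print(row)
```

When this is used for a real classification task, the clustering should be fitted on the training data only, and the same centers should then be used to label validation and test objects.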

Aggregated features

It also makes sense to add features that aggregate the attributes of an object, which also reduces the dimension of the feature description. As a rule, this is useful in tasks where one object has several parameters of the same type. For example, a person may own several cars of different values. In this case, you can use features for the maximum, minimum, and average price of that person's cars.
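A short sketch of the car example, using only the standard library, might look as follows. The records, the owner identifiers, and the function name `aggregate_by_owner` are hypothetical, and the extra `car_count` feature is an addition not mentioned in the text.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (owner_id, car_price) records: one row per car.
cars = [
    ("alice", 12000.0),
    ("alice", 30000.0),
    ("bob", 8000.0),
    ("alice", 18000.0),
    ("bob", 10000.0),
]


def aggregate_by_owner(records):
    """Collapse per-car rows into one fixed-length feature row per owner."""
    prices = defaultdict(list)
    for owner, price in records:
        prices[owner].append(price)
    return {
        owner: {
            "car_count": len(values),
            "price_min": min(values),
            "price_max": max(values),
            "price_mean": mean(values),
        }
        for owner, values in prices.items()
    }


owner_features = aggregate_by_owner(cars)
for owner, feats in owner_features.items():
    print(owner, feats)
```

However many cars a person owns, the result is a feature row of the same length, which is what most learning algorithms expect.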

Adding new features

This point applies more to practical real-life tasks than to machine learning competitions. There will be a separate article about it in more detail; for now we only note that solving a problem effectively often requires being an expert in the specific field and understanding what affects the target variable. Returning to the house price example, everyone knows that the price depends primarily on the area, but in a more complex subject area such conclusions are hard to draw.

So, we have looked at several techniques for creating (engineering) features in machine learning tasks that can significantly improve the quality of existing algorithms. Next time we'll talk more about feature selection methods. Fortunately, things are easier there, because well-developed techniques for selecting features already exist, whereas creating features, as the reader has probably noticed, is an art!