The human factor in software development: psychological and mathematical aspects

Software product development is a process in which the human factor plays a very important role. This article covers a number of psychological and mathematical laws and principles. Some of them you already know well, some less so, and some will help explain your own behavior or that of your employees and colleagues.

Software development is a non-linear process

Software development is a non-linear process. If 5 developers are allocated for the project, who must develop the product in 5 months (25 people / month), then 25 developers will not be able to do the same work in 1 month (the same 25 people / month).

Brooks, in his book Mythical Man-Month, gives a wonderful expression: 9 women cannot give birth to a baby in 1 month. By this, he hints at the existence of a certain limit to which you can shorten the development time. Steve McConnell claims that this threshold is defined as 25% of the original estimates.

And yes, most likely, 10 developers will also not be able to do the initial amount of work in 2.5 months, since the joint actions of 10 developers on a project lasting 5 months are likely to lead to deadlock or other communication problems.

Project Evaluation and Error Price

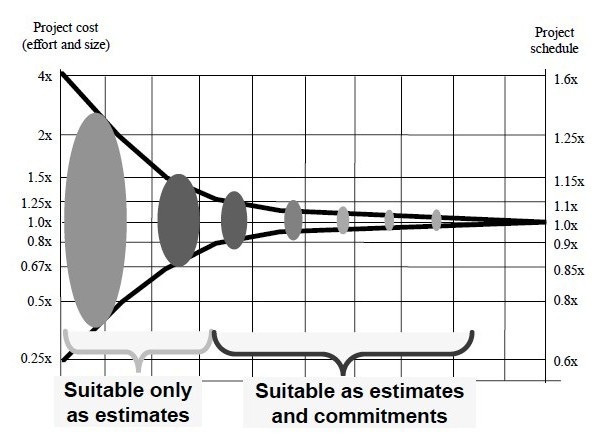

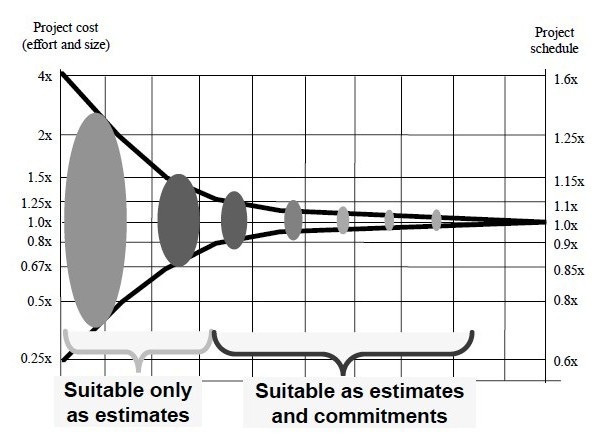

To illustrate the “error price”, an uncertainty cone is often used - a graph on the horizontal axis of which the time is indicated, and on the vertical axis - the value of the error that is laid down when assessing the complexity. With the passage of time, as more and more data become known about the project being evaluated, about what exactly and under what conditions it is necessary to do, the “spread” of error becomes less and less.

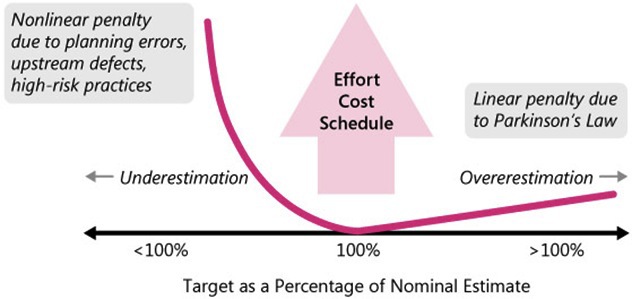

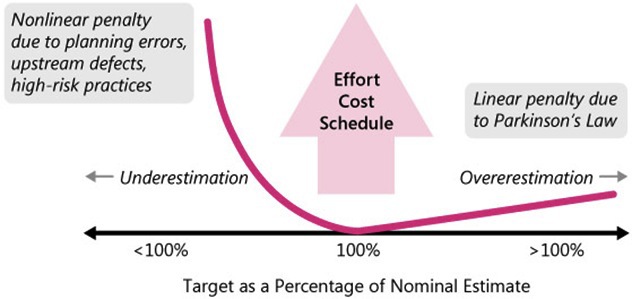

How does the price of error rise in the case of revaluation and underestimation? It turns out that when revaluing the error price increases linearly, when underestimating the error price increases exponentially.

In the first case, linear growth is explained by Parkinson's law, which says: work fills the time allotted to it. Also, this law is called "student syndrome" - no matter how much time is given to complete the course, it is still done until the last day before passing.

Continuing the topic of project evaluation, let's look at various approaches.

A simple approach. We take the number of hours, multiply by the hourly rate, add 20-30% (risks): (N * hourly_rate) * [1,2-1,3] = project_cost

20-30% - according to statistics, the average value of underestimation of projects. Subsequently, this value can be 10% (which means that you have a brilliant team), sometimes 50% (well, too, normally), often even more.

The whole reason is that people are optimists. And they also give optimistic estimates. Therefore, it is desirable to provide two ratings - optimistic and pessimistic (I’ll tell you a secret - pessimistic can also be shown to customers, most of them react normally).

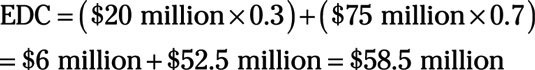

A more complex approach:

Where:

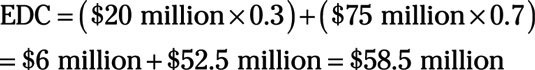

The final estimate is $ 58.5 million. It is much higher than the “average” value, which is $ 47.5 million (an error of about 23%) and which most managers will most likely choose as a compromise. Thus, one more confirmation that on average you need to add 20-30% to your “normal” assessment.

Well, since we are talking about "average" values, we must add that any decision made by the majority will always be worse than the decision made by a limited number of people. That is why important decisions in large corporations are made by the board of directors, and not by popular vote of all employees. And that is why the decisions made by all team members are likely to be worse than the decision made by the project manager in conjunction with, for example, team lead.

Technical debt

A conscious compromise solution, when the customer and the contractor clearly understand all the advantages of a quick, albeit not an ideal technical solution, which will have to be paid for later. This term was coined by Ward Cunningham.

Common causes of technical debt:

In most cases, technical debt is written off due to the disrupted deadlines for the project, hiring additional staff or in the form of overtime.

Pareto principle (principle 20/80) The

rule of thumb, named after the economist and sociologist Wilfredo Pareto, in the most general form is formulated as "20% of the effort gives 80% of the result, and the remaining 80% of the effort gives only 20% of the result." You need to understand that the numbers 20 and 80 are conditional (for example, Google, Apple and Microsoft love the distribution of 30 by 70 for their application store), but this does not change the general meaning.

The Pareto principle is a well-known rule that can be applied to various areas of life, but in IT this principle shows itself in all its glory.

The most important consequences of the Pareto law:

Also, one should not forget about Pareto efficiency - this is the state of the system in which the value of each particular indicator characterizing the system cannot be improved without worsening the others.

In a simplified form - the rule of the triangle: quickly, efficiently, inexpensively - select any 2 points.

One percent

rule A rule that describes the uneven participation of an Internet audience in the creation of content. It is alleged that in general, the vast majority of users only browses the Internet, but does not take an active part in the discussion (on forums, in online communities, etc.).

More generally, it describes the number of employees generating new ideas or showing proactivity.

Consider an example. You held a certain event, which was attended by 30 people. You want to receive feedback by conducting questionnaires after the event. Does that make sense? If you do not try to come up with some kind of synthetic conditions, then there is no point in such a questionnaire, since ... 0.3 people will respond to it. Even if one person answers (which is equivalent to 3%, which in itself is an excellent option), then 1 answer is unlikely to suit you. It is easier to ask the opinion of people at the exit from the building :-)

The Peter

Principle The Peter principle says: in a hierarchical system, each individual tends to rise to the level of his incompetence.

Features:

It is for this reason that 37signals refused to promote employees on the career ladder, but offer employees to improve their skills and knowledge (and, therefore, payment) as part of their position.

For a deeper immersion in the topic, I recommend the books Rework and Remote.

Hanlon Razor A

statement about the probable role of human error in the causes of unpleasant events, which reads: never ascribe evil intent to something that can be explained by stupidity.

Hanlon's razor is one of my favorite principles. In most cases, all the jambs are trying to push into the "actions of unforeseen forces", "conspiracy theory", although they are easily explained by the usual stupidity of employees.

In continuation of this topic, one must also recall Murphy’s law - a philosophical principle that is formulated as follows: if there is a possibility that some kind of trouble can happen, then it will definitely happen. Such a law of meanness.

In formal form:

The consequence of Murphy’s law in software development: you need to implement “protection from the fool” - additional checks, additional levels of abstraction and isolation and other techniques, better known as “best practices”.

And finally, I’ll mention another effect - the Dunning - Kruger effect. This is a metacognitive distortion, which consists in the fact that people with a low level of qualification make erroneous conclusions, make unsuccessful decisions, and at the same time are unable to recognize their mistakes due to the low level of their qualifications. This leads to their overestimation of their own abilities, while really highly qualified people, on the contrary, tend to lower their abilities and suffer from insufficient self-confidence, considering others more competent. Thus, less competent people generally have a higher opinion of their own abilities than is characteristic of competent people, who also tend to assume that others evaluate their abilities as low as they do.

And since people tend to believe more in those who speak confidently and impudently, the truth, said quietly and without straining, may not be heard, and the decision made can be made on the basis of incorrect or inaccurate data. Therefore, it is very important to correlate all assessments and decisions with what an employee said in order to listen to a more competent, but less courageous employee next time.

I hope that an understanding of the principles and laws described will help you develop software more efficiently and always complete it on time and on budget!

Software development is a non-linear process

Software development is a non-linear process. If a project is staffed with 5 developers who are expected to deliver the product in 5 months (25 person-months), it does not follow that 25 developers can do the same work in 1 month (the same 25 person-months).

In The Mythical Man-Month, Brooks puts it memorably: nine women cannot deliver a baby in one month. He is hinting that there is a limit beyond which development time cannot be shortened. Steve McConnell puts this limit at about 25% of the original estimate: a schedule cannot realistically be compressed by more than roughly a quarter.

And most likely 10 developers will not manage the original amount of work in 2.5 months either: coordinating 10 developers on a project planned as a 5-month effort is likely to lead to deadlocks and other communication problems.
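
One way to see why adding people does not scale linearly is to count pairwise communication channels, which Brooks gives as n(n-1)/2 for a team of n people. The sketch below is only an illustration of that formula; the function name is hypothetical and the team sizes are the ones used in the example above.

```python
def communication_channels(team_size: int) -> int:
    """Number of distinct pairs of people who may need to coordinate."""
    if team_size < 0:
        raise ValueError("team size cannot be negative")
    return team_size * (team_size - 1) // 2


# Team sizes from the example: 5, 10 and 25 developers.
for size in (5, 10, 25):
    print(f"{size:>2} developers -> {communication_channels(size):>3} channels")
```

The head count grows fivefold from 5 to 25, while the number of channels grows thirtyfold, which is one plausible source of the communication problems described above.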

Project estimation and the cost of error

The cone of uncertainty is often used to illustrate the cost of error. It is a graph with time on the horizontal axis and, on the vertical axis, the size of the error built into the effort estimate. As time passes and more becomes known about the project (what exactly needs to be done and under what conditions), the spread of the error narrows.
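
As a rough sketch of how the cone narrows, the snippet below turns a single nominal estimate into a range for each project phase. The multipliers are the figures commonly attributed to Steve McConnell's cone of uncertainty; they are an assumption here, since this article does not list them, and the phase names and function name are illustrative.

```python
# Range multipliers per phase (low, high). Assumed values, commonly cited
# from McConnell's cone of uncertainty; not taken from this article.
CONE_OF_UNCERTAINTY = {
    "initial concept": (0.25, 4.0),
    "approved product definition": (0.5, 2.0),
    "requirements complete": (0.67, 1.5),
    "ui design complete": (0.8, 1.25),
    "detailed design complete": (0.9, 1.1),
}


def estimate_range(nominal: float, phase: str) -> tuple[float, float]:
    """Return the (low, high) bounds for a nominal estimate at a given phase."""
    low, high = CONE_OF_UNCERTAINTY[phase]
    return nominal * low, nominal * high


# A nominal estimate of 100 units (hours, days, or money) at each phase.
for phase in CONE_OF_UNCERTAINTY:
    low, high = estimate_range(100.0, phase)
    print(f"{phase:<28} {low:6.1f} .. {high:6.1f}")
```

The ranges are only a reference point: a real project narrows its uncertainty only if decisions are actually made at each phase.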

How does the cost of error grow with overestimation and with underestimation? It turns out that when a project is overestimated the cost of error grows linearly, and when it is underestimated the cost grows exponentially.

In the first case, the linear growth is explained by Parkinson's law: work expands to fill the time allotted to it. A closely related effect is "student syndrome": no matter how much time is given for coursework, it is still put off until the last day before the deadline.

Continuing the topic of project estimation, let's look at several approaches.

A simple approach. Take the number of hours N, multiply it by the hourly rate, and add 20-30% for risks: project_cost = (N * hourly_rate) * k, where k is between 1.2 and 1.3.

The 20-30% figure is, according to statistics, the average amount by which projects are underestimated. In practice the value may be 10% (which means you have a brilliant team), sometimes 50% (which is still fine), and often even more.
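
The simple approach can be sketched in a few lines. The function name, the 1000 hours and the rate of 50 per hour are hypothetical values chosen for illustration; only the 20-30% risk margin comes from the text.

```python
def simple_estimate(hours: float, hourly_rate: float, risk_margin: float) -> float:
    """Cost = hours * rate, increased by a risk margin (0.2 means +20%)."""
    if hours < 0 or hourly_rate < 0 or risk_margin < 0:
        raise ValueError("hours, rate and margin must be non-negative")
    return hours * hourly_rate * (1.0 + risk_margin)


# Hypothetical project: 1000 hours at a rate of 50 per hour.
low = simple_estimate(1000, 50, 0.20)
high = simple_estimate(1000, 50, 0.30)
print(f"project cost: {low:,.0f} to {high:,.0f}")
```

Reporting the result as a range rather than a single number keeps the risk margin visible to whoever reads the estimate.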

The underlying reason is that people are optimists, so they give optimistic estimates. It is therefore worth providing two estimates, an optimistic one and a pessimistic one (I'll tell you a secret: the pessimistic one can also be shown to customers, and most of them react calmly).

A more complex approach:

Where:

- C_bc: project cost, optimistic (best-case) estimate

- C_wc: project cost, pessimistic (worst-case) estimate

- P_bc: probability of the optimistic scenario

- P_wc: probability of the pessimistic scenario

The final estimate is $58.5 million. That is much higher than the "average" value of $47.5 million (a difference of about 23%), which most managers would probably choose as a compromise. This is one more confirmation that, on average, you need to add 20-30% to your "normal" estimate.
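
The formula behind these figures is not shown in the text. One reading that is consistent with the four terms listed above is a probability-weighted cost, C = C_bc * P_bc + C_wc * P_wc. The sketch below assumes that reading. The inputs ($20 million best case, $75 million worst case, probabilities of 30% and 70%) are hypothetical: they were picked only because they reproduce the two figures quoted, and they are not taken from the article.

```python
def weighted_estimate(cost_best: float, cost_worst: float,
                      p_best: float, p_worst: float) -> float:
    """Probability-weighted cost of the two scenarios (assumed formula)."""
    if abs(p_best + p_worst - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    return cost_best * p_best + cost_worst * p_worst


def naive_average(cost_best: float, cost_worst: float) -> float:
    """The midpoint a manager might pick as a compromise."""
    return (cost_best + cost_worst) / 2


# Hypothetical inputs, in millions of dollars.
weighted = weighted_estimate(20.0, 75.0, 0.3, 0.7)
average = naive_average(20.0, 75.0)
print(f"weighted: {weighted:.1f}, average: {average:.1f}")
print(f"the midpoint underestimates by {weighted / average - 1:.0%}")
```

With these inputs the midpoint falls short of the weighted figure by roughly the same 20-30% margin that the simple approach adds for risk.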

And since we are talking about "average" values, it is worth adding that a decision made by the majority tends to be worse than a decision made by a small group of people. That is why important decisions in large corporations are made by the board of directors rather than by a popular vote of all employees. And that is why decisions made by the whole team are likely to be worse than a decision made by the project manager together with, for example, the team lead.

Technical debt

Technical debt is a conscious compromise in which the customer and the contractor both clearly understand the advantages of a quick, though not ideal, technical solution that will have to be paid for later. The term was coined by Ward Cunningham.

Common causes of technical debt:

- Business pressure: the business demands a release before all necessary changes are complete, and the unfinished changes accumulate as technical debt.

- Lack of process or understanding: the business has no notion of technical debt and makes decisions without considering the consequences.

- Lack of loosely coupled components: when components are not built on modular principles, the software is not flexible enough to adapt to changing business needs.

- Lack of tests: this encourages quick development and risky fixes ("crutches") for bugs.

- Lack of documentation: code is written without the necessary supporting documentation. The work needed to create that documentation is also a debt that must be paid.

- Lack of collaboration: knowledge is not shared across the organization and business efficiency suffers, or junior developers are not properly trained by their mentors.

- Parallel development: working in two or more branches at once accumulates technical debt that eventually has to be repaid in order to merge the changes. The more changes are made in isolation, the greater the total debt.

- Delayed refactoring: as project requirements evolve, it may become clear that parts of the code have become unwieldy and need to be redesigned to support future requirements. The longer refactoring is delayed, and the more code is written on top of the current state of the project, the more debt accumulates to be paid at the next refactoring.

- Lack of knowledge: the developer simply does not know how to write high-quality code.

In most cases, technical debt is paid off through missed project deadlines, hiring additional staff, or overtime.

Pareto principle (the 20/80 principle)

A rule of thumb named after the economist and sociologist Vilfredo Pareto. In its most general form it states that "20% of the effort produces 80% of the result, and the remaining 80% of the effort produces only 20% of the result." Keep in mind that the numbers 20 and 80 are approximate (for example, Google, Apple and Microsoft prefer a 30/70 split for their app stores), but this does not change the general idea.

The Pareto principle is a well-known rule that can be applied to various areas of life, but in IT this principle shows itself in all its glory.
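
A quick way to check whether a Pareto-like distribution holds for your own data is to measure what share of the total comes from the top 20% of items. The sketch below is illustrative only: the function name and the defect counts per module are made up for the example.

```python
def top_share(values: list[float], top_fraction: float = 0.2) -> float:
    """Share of the total contributed by the largest `top_fraction` of items."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values, reverse=True)
    top_count = max(1, round(len(ordered) * top_fraction))
    return sum(ordered[:top_count]) / sum(ordered)


# Hypothetical defect counts for ten modules of a project.
defects_per_module = [120, 80, 15, 10, 8, 6, 5, 3, 2, 1]
share = top_share(defects_per_module)
print(f"top 20% of modules account for {share:.0%} of defects")
```

If the share is far from 80%, that is fine: as noted above, the exact numbers are approximate, and what matters is how uneven the distribution is.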

The most important consequences of the Pareto law:

- There are few significant factors and many trivial ones: only a handful of actions lead to important results.

- Most effort does not produce the desired results.

- What we see is not always what is really there: there are always hidden factors.

- What we expect to get usually differs from what we actually get (hidden forces are always at work).

- It is usually too difficult and tedious to understand exactly what is happening, and often it is not necessary: you only need to know whether your idea works, change it until it does, and then keep things as they are until the idea stops working.

- Most successes are due to a small number of highly productive forces; most troubles are caused by a small number of highly destructive forces.

- Most actions, group or individual, are a waste of time. They contribute nothing real to the desired result.

Also, do not forget about Pareto efficiency: a state of a system in which no single indicator can be improved without worsening at least one of the others.

In simplified form this is the triangle rule: fast, good, cheap, pick any two.

One percent rule

A rule that describes the uneven participation of an Internet audience in creating content. It claims that the vast majority of users only browse, and only about 1% take an active part in discussions (on forums, in online communities, and so on).

More generally, it describes the share of employees who generate new ideas or show initiative.

Consider an example. You held an event attended by 30 people, and you want to collect feedback with a questionnaire afterwards. Does that make sense? Unless you contrive some artificial conditions, there is no point in such a questionnaire, because ... 0.3 people will respond to it. Even if one person answers (about 3%, which is already an excellent result), a single answer is unlikely to be enough. It is easier to ask people for their opinion on the way out of the building :-)
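
The arithmetic of the example can be sketched as follows. The first function reproduces the 0.3 figure from the text. The second adds an assumption that is not in the article, namely that each attendee responds independently with the same 1% probability, to estimate the chance of getting any response at all. Both function names are hypothetical.

```python
def expected_respondents(audience: int, participation_rate: float = 0.01) -> float:
    """Average number of people who respond, given a participation rate."""
    return audience * participation_rate


def chance_of_any_response(audience: int, participation_rate: float = 0.01) -> float:
    """Probability of at least one response, assuming independent attendees."""
    return 1.0 - (1.0 - participation_rate) ** audience


# The event from the example: 30 attendees, 1% participation.
print(f"expected respondents: {expected_respondents(30):.1f}")
print(f"chance of at least one response: {chance_of_any_response(30):.0%}")
```

Under that independence assumption, roughly three out of four such questionnaires would come back with no answers at all.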

The Peter principle

The Peter principle says: in a hierarchical system, every individual tends to rise to their level of incompetence.

Features:

- It is a special case of a more general observation: anything that works well, whether a thing or an idea, will be used in increasingly demanding conditions until it causes a disaster.

- According to the Peter principle, a person working in any hierarchical system keeps being promoted until they reach a position whose duties they cannot cope with, that is, a position in which they are incompetent. This is called the employee's level of incompetence.

- There is no way to determine in advance at which level an employee will reach their level of incompetence.

It is for this reason that 37signals declined to promote employees up a career ladder and instead offers them the chance to improve their skills and knowledge (and, with them, their pay) within their current position.

When the number of employees at 37signals exceeded 25, something strange happened. An employee with strong ambitions left the company. She wanted a promotion, but the company's flat structure did not provide for managers. After that, Jason Fried wrote a column explaining why it is so important for them to keep this particular organizational structure. According to him, companies usually tend to develop "vertical" ambitions in their employees, that is, a desire to climb the career ladder. At 37signals they try to hire people with "horizontal" ambitions, that is, the need to become more professional at what they love most. (Source: The Village)

For a deeper dive into the topic, I recommend the books Rework and Remote.

Hanlon's razor

A statement about the probable role of human error in the causes of unpleasant events: never attribute to malice that which can be explained by stupidity.

Hanlon's razor is one of my favorite principles. In most cases people try to blame every screw-up on "unforeseen forces" or a "conspiracy", although it is easily explained by the ordinary stupidity of employees.

Continuing this topic, it is worth recalling Murphy's law, a philosophical principle formulated as follows: if anything can go wrong, it will. In other words, the law of meanness.

In formal form:

For any n there exists an m < n such that, if n is large enough for Murphy's law to hold under the given conditions, then m trials are enough for at least one of them to produce an undesirable result.

The consequence of Murphy's law for software development: you need to build in foolproofing, that is, additional checks, additional levels of abstraction and isolation, and other techniques better known as "best practices".
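
As a minimal sketch of what such additional checks can look like, the function below validates a team size entered as text before the rest of the program uses it. The function name, the upper limit of 1000 and the error messages are all hypothetical; the point is only that every way the input can go wrong is handled explicitly.

```python
def parse_team_size(raw: str, maximum: int = 1000) -> int:
    """Convert user input to a team size, rejecting anything implausible."""
    if not isinstance(raw, str):
        raise TypeError("team size must be given as text")
    try:
        value = int(raw.strip())
    except ValueError:
        # Covers empty strings, words, and fractional numbers such as "5.5".
        raise ValueError(f"not a whole number: {raw!r}") from None
    if not 1 <= value <= maximum:
        raise ValueError(f"team size must be between 1 and {maximum}")
    return value


# Valid input passes through; invalid input fails loudly and early.
print(parse_team_size(" 5 "))
for bad_input in ("abc", "0", "5.5", ""):
    try:
        parse_team_size(bad_input)
    except ValueError as error:
        print(f"rejected {bad_input!r}: {error}")
```

Failing early with a clear message is usually cheaper than letting a bad value travel deeper into the system, where Murphy's law will find it.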

Finally, I'll mention one more effect, the Dunning-Kruger effect. It is a cognitive bias in which people with a low level of skill draw erroneous conclusions and make poor decisions, yet are unable to recognize their mistakes precisely because of that low level of skill. This leads them to overestimate their own abilities, while genuinely highly skilled people, on the contrary, tend to underestimate theirs and suffer from a lack of self-confidence, considering others more competent. As a result, less competent people generally have a higher opinion of their own abilities than competent people do, and competent people also tend to assume that others rate their abilities as low as they do themselves.

And since people tend to trust those who speak confidently and boldly, a truth spoken quietly and without pressure may go unheard, and a decision may end up being based on incorrect or inaccurate data. It is therefore very important to keep track of who said what and compare it with the resulting estimates and decisions, so that next time you listen to the more competent but less assertive employee.

I hope that an understanding of the principles and laws described will help you develop software more efficiently and always complete it on time and on budget!