Ilya Grigorik on the implementation of HTTP/2

Ilya Grigorik of Google, a well-known specialist in server and client optimization, co-author of WebRTC, and author of the book “High Performance Browser Networking”, has published the presentation “HTTP/2 all the things!”. It explains how to configure the server side for HTTP/2 to speed up page loading and reduce latency compared with HTTP/1.1.

Connection View in the browser, showing the elements of the Yahoo.com homepage loading over HTTP/1.1

Ilya begins by noting that on modern sites most of the delay is spent waiting for resources to load, while bandwidth is not the limiting factor (shown in blue in the Connection View diagram). According to statistics, loading an average web page takes 78 requests to 12 different hosts, and the downloaded files total 1232 KB.

For the 1 million largest sites on the Internet according to Alexa, on a 5 Mbit/s connection, the average page load time is 2.413 s, while the CPU is busy for only 0.735 s. The rest is spent waiting for resources from the network (latency).
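
To put the two figures above side by side, here is a small sketch of the arithmetic. The variable names are ours; the only inputs are the load time and CPU time quoted in the paragraph.

```python
# Figures quoted above: average page load time and CPU-busy time, in seconds.
page_load_s = 2.413
cpu_busy_s = 0.735

# Whatever is not CPU time is time spent waiting on the network.
network_wait_s = page_load_s - cpu_busy_s
wait_share = network_wait_s / page_load_s

print(f"waiting on the network: {network_wait_s:.3f} s ({wait_share:.0%} of load time)")
```

In other words, roughly 70% of the load time goes to waiting rather than computing.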

The problems with HTTP/1.1 are the large number of requests, limited parallelism, request pipelining that does not work in practice, competing TCP flows, and protocol overhead.

Another problem is that webmasters go overboard with domain sharding. They add too many shards to get around the browser limit of 6 simultaneous connections per host, and end up hurting themselves: traffic is duplicated many times over, and there is congestion, retransmissions, and so on.

The large number of requests is also worked around badly in applications built for HTTP/1.1: through concatenation (large monolithic bundles, expensive cache invalidation, delayed execution of JS/CSS) and inlining (resources duplicated on every page, broken prioritization).

What to do?

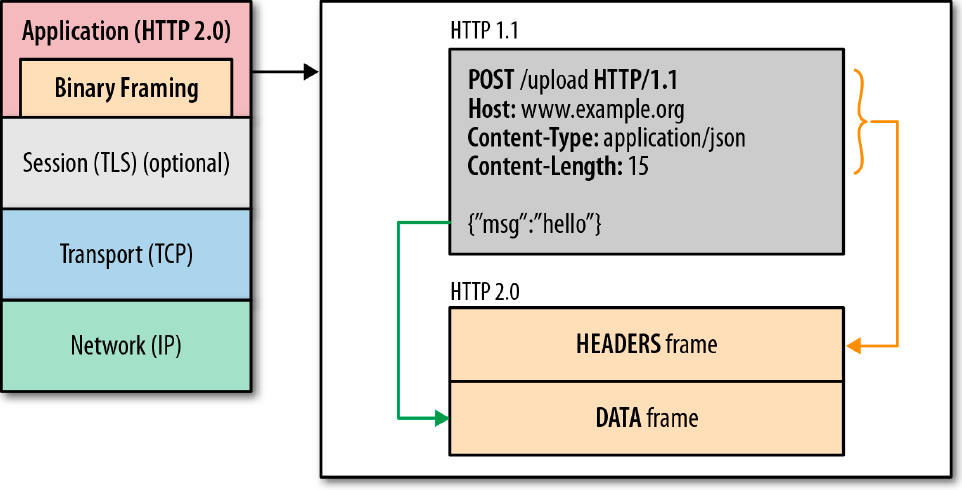

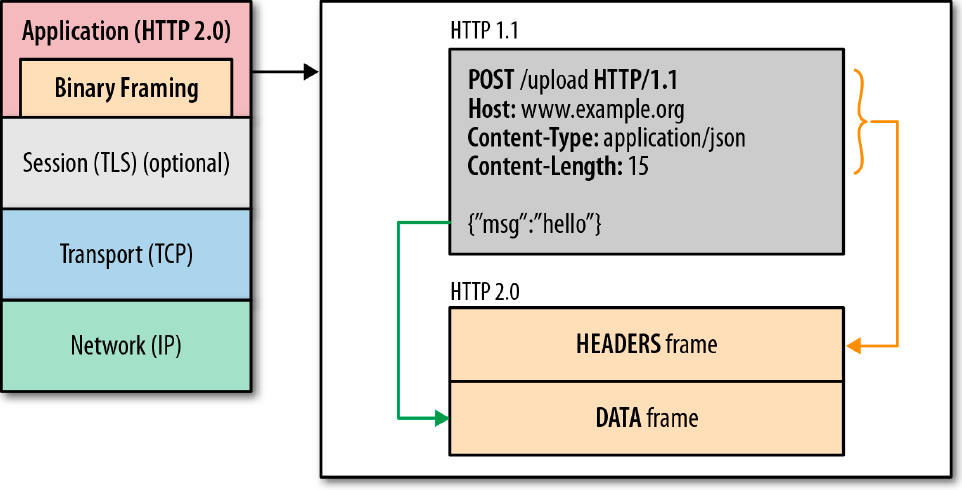

It is all very simple. The new HTTP/2 fixes many of the flaws of HTTP/1.1, Ilya Grigorik is convinced. HTTP/2 does not require multiple connections and reduces latency while keeping the familiar semantics of HTTP/1.1.

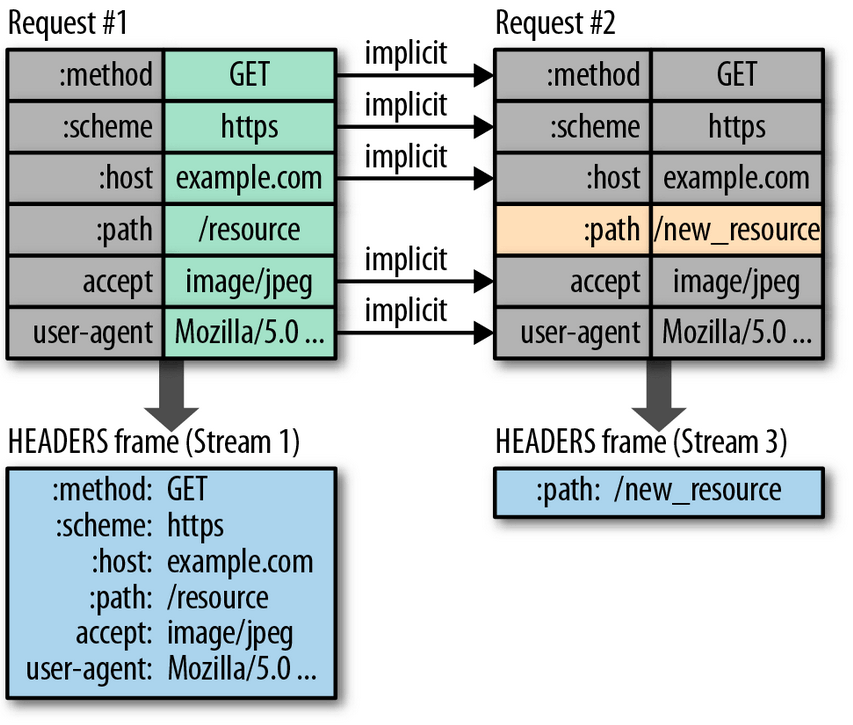

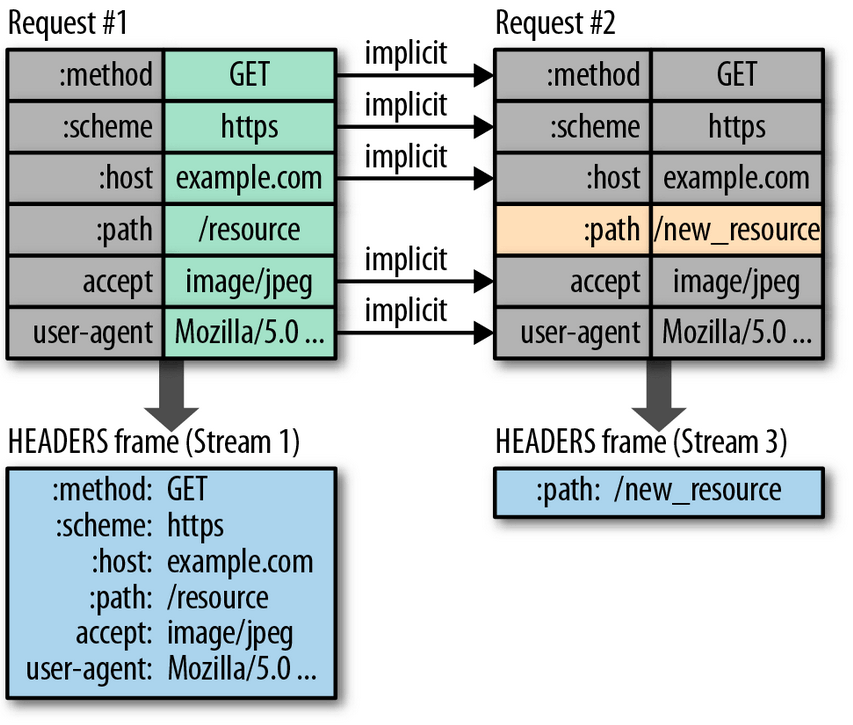

First, all streams are split into frames (HEADERS, DATA, and so on) that are interleaved over a single TCP connection. Prioritization and flow control apply to the frames. Server push replaces inlining. And, of course, there is efficient header compression, to the point that a HEADERS frame can be “compressed” to 9 bytes, because only the changes are transmitted.
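
To make the framing idea concrete, here is a minimal sketch that splits two response bodies into DATA frames and interleaves them on one byte stream. It assumes the frame header layout of the final specification (RFC 7540: 24-bit length, 8-bit type, 8-bit flags, 31-bit stream identifier), which may differ from the draft current when the talk was given. The helper names and sample payloads are ours, and HEADERS frames and header compression are left out entirely.

```python
import struct
from itertools import zip_longest

FRAME_DATA = 0x0   # DATA frame type code (RFC 7540)
END_STREAM = 0x1   # flag marking the last frame of a stream

def encode_frame(frame_type, flags, stream_id, payload):
    """Prefix a payload with the 9-byte frame header."""
    length = len(payload)
    header = struct.pack(">BHBBI", length >> 16, length & 0xFFFF,
                         frame_type, flags, stream_id & 0x7FFFFFFF)
    return header + payload

def decode_frames(wire):
    """Yield (type, flags, stream_id, payload) for every frame in a byte string."""
    offset = 0
    while offset < len(wire):
        hi, lo, frame_type, flags, stream_id = struct.unpack_from(">BHBBI", wire, offset)
        length = (hi << 16) | lo
        offset += 9
        yield frame_type, flags, stream_id & 0x7FFFFFFF, wire[offset:offset + length]
        offset += length

def split_into_data_frames(stream_id, body, chunk_size):
    """Cut one response body into DATA frames of at most chunk_size bytes."""
    chunks = [body[i:i + chunk_size] for i in range(0, len(body), chunk_size)]
    return [encode_frame(FRAME_DATA,
                         END_STREAM if i == len(chunks) - 1 else 0,
                         stream_id, chunk)
            for i, chunk in enumerate(chunks)]

def interleave(*frame_lists):
    """Round-robin the frames of several streams onto a single connection."""
    wire = b""
    for group in zip_longest(*frame_lists):
        wire += b"".join(frame for frame in group if frame is not None)
    return wire

css_body = b"body{margin:0}" * 3    # hypothetical stylesheet, stream 1
js_body = b"console.log(1);" * 2    # hypothetical script, stream 3
wire = interleave(split_into_data_frames(1, css_body, 16),
                  split_into_data_frames(3, js_body, 16))

# The receiver reassembles each stream independently from the shared connection.
stream_order = []
bodies = {}
for _type, _flags, stream_id, payload in decode_frames(wire):
    stream_order.append(stream_id)
    bodies[stream_id] = bodies.get(stream_id, b"") + payload

print(stream_order)  # [1, 3, 1, 3, 1]
```

Because every frame carries its stream identifier, neither response has to finish before the other one starts, which is what removes the need for multiple connections.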

How exactly can you take advantage of HTTP/2 to optimize server applications? This is the most interesting part of Ilya Grigorik's presentation.

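Eliminate domain sharding

Sharding actively hurts HTTP/2 performance: it breaks frame prioritization, flow control, and more.

If sharding is unavoidable, you can implement it through altName. If one IP and one certificate are used, HTTP/2 will be able to open a single connection for all shards.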
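
An HTTP/2 client may reuse one connection for several hostnames when they resolve to the same IP address and the server certificate covers them, for example through its subjectAltName entries. Below is a minimal sketch of that check under simplifying assumptions: the `Connection` class and both helper functions are hypothetical, the addresses and hostnames are placeholders, and real browsers apply further conditions.

```python
from dataclasses import dataclass

@dataclass
class Connection:
    ip: str                 # address the existing connection was opened to
    cert_alt_names: list    # DNS names listed in the server certificate

def name_matches(pattern, host):
    """Match a host against one altName entry; '*' covers exactly one leftmost label."""
    if pattern.startswith("*."):
        return "." in host and host.split(".", 1)[1] == pattern[2:]
    return pattern == host

def can_reuse(conn, host, resolved_ip):
    """True if a request for `host` could ride on the existing connection."""
    same_ip = resolved_ip == conn.ip
    covered = any(name_matches(p, host.lower()) for p in conn.cert_alt_names)
    return same_ip and covered

conn = Connection("203.0.113.10", ["www.example.com", "*.example.com"])

print(can_reuse(conn, "static1.example.com", "203.0.113.10"))  # True
print(can_reuse(conn, "static1.example.com", "203.0.113.99"))  # False: different IP
print(can_reuse(conn, "cdn.example.net", "203.0.113.10"))      # False: not in the certificate
```

Shards that pass this check cost nothing extra under HTTP/2; shards on separate IPs or certificates still force separate connections.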

Get rid of unnecessary optimizations (concat, CSS sprites)

Streams are no longer a limited resource, so you can split resources into modules properly and set a caching strategy for each of them.

Implement server-push instead of inline

The server can now send several responses to a single request: the client asks for one thing, and the server can send something else along with it. Each resource is cached independently (it is not necessary to cache each request; there is smart push). Incidentally, server push can also be used to invalidate an entry in the client cache!

Smart push is a great thing in general. The server itself analyzes the traffic and, based on the referrer, works out which resources clients go on to request for each page, for example index.html → {style.css, app.js}. From these patterns it builds rules for what to push to new clients.
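
A rough sketch of how such rules could be derived from a request log follows. This is not the algorithm of any particular server: the log format, the function name `learn_push_rules`, and the `min_share` threshold are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

def learn_push_rules(request_log, min_share=0.5):
    """Build {page: [resources to push]} from (referrer, path) pairs.

    A resource becomes a push candidate for a page when it was requested,
    with that page as referrer, in at least `min_share` of the page's views.
    """
    page_views = Counter()
    follow_ups = defaultdict(Counter)
    for referrer, path in request_log:
        if referrer is None:
            page_views[path] += 1           # a direct page view
        else:
            follow_ups[referrer][path] += 1  # a sub-resource of that page

    rules = {}
    for page, resources in follow_ups.items():
        views = page_views[page]
        if views == 0:
            continue
        pushed = sorted(r for r, n in resources.items() if n / views >= min_share)
        if pushed:
            rules[page] = pushed
    return rules

# Hypothetical log: three views of index.html and what each client fetched next.
log = [
    (None, "/index.html"), ("/index.html", "/style.css"), ("/index.html", "/app.js"),
    (None, "/index.html"), ("/index.html", "/style.css"), ("/index.html", "/app.js"),
    (None, "/index.html"), ("/index.html", "/style.css"), ("/index.html", "/promo.png"),
]

print(learn_push_rules(log))  # {'/index.html': ['/app.js', '/style.css']}
```

The threshold keeps rarely requested resources, such as the promo image here, from being pushed to every new client.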

Ilya Grigorik emphasizes that with HTTP/2 the servers must be configured correctly. This was not so critical in the days of HTTP/1.1, but now it is. For example, in HTTP/1.1 the browser itself sent the important requests first (index.html, style.css) and held back the secondary ones (hero.jpg, other.jpg, more.jpg, and so on); now it no longer does. So if the server is not configured to handle important requests first, performance will suffer.
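
As an illustration of serving important responses first, here is a simplified sketch of a server-side queue ordered by resource type. The ranking table and class name are assumptions; real HTTP/2 prioritization is signaled by the client through stream dependencies and weights, which this sketch does not model.

```python
import heapq
from itertools import count

# Assumed ranking: lower number means served earlier.
PRIORITY = {".html": 0, ".css": 1, ".js": 2}
DEFAULT_PRIORITY = 9   # images and everything else

def priority_of(path):
    for extension, rank in PRIORITY.items():
        if path.endswith(extension):
            return rank
    return DEFAULT_PRIORITY

class ResponseQueue:
    """Hands out pending responses by priority, then by arrival order."""

    def __init__(self):
        self._heap = []
        self._arrival = count()

    def add(self, path):
        heapq.heappush(self._heap, (priority_of(path), next(self._arrival), path))

    def drain(self):
        while self._heap:
            yield heapq.heappop(self._heap)[2]

queue = ResponseQueue()
for path in ["hero.jpg", "other.jpg", "index.html", "more.jpg", "style.css"]:
    queue.add(path)   # requests arrive all at once, in no useful order

served = list(queue.drain())
print(served)  # ['index.html', 'style.css', 'hero.jpg', 'other.jpg', 'more.jpg']
```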

HTTP/2 also gives you fine-grained control over the flow. For example, you can first send the first few kilobytes of an image (so that the browser can decode the header and determine the image dimensions), then the important scripts, and then the rest of the image.
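
The send order described above can be sketched as a simple plan of byte ranges. The function name, the 4096-byte head size, and the file sizes are assumptions for illustration; a real server would express this through stream priorities and flow-control windows rather than an explicit list.

```python
def plan_send_order(image, scripts, image_head_bytes=4096):
    """Return (name, offset, length) chunks: image head, then scripts, then image tail."""
    name, size = image
    head = min(image_head_bytes, size)
    plan = [(name, 0, head)]                       # enough for the browser to read dimensions
    plan += [(script_name, 0, script_size) for script_name, script_size in scripts]
    if size > head:
        plan.append((name, head, size - head))     # the remainder of the image goes last
    return plan

plan = plan_send_order(("hero.jpg", 200_000), [("app.js", 30_000)])
for chunk in plan:
    print(chunk)
# ('hero.jpg', 0, 4096)
# ('app.js', 0, 30000)
# ('hero.jpg', 4096, 195904)
```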

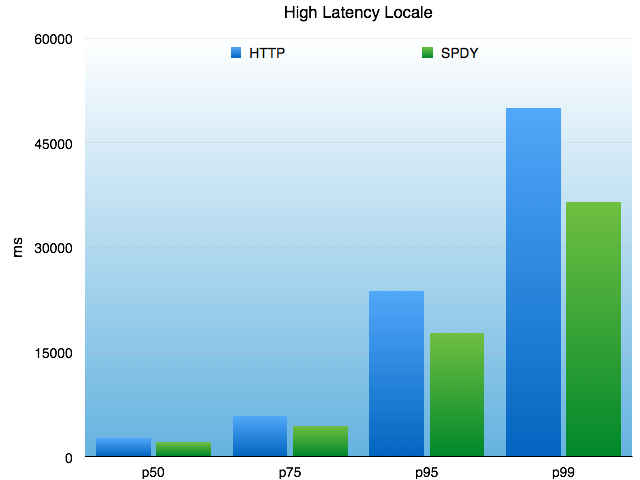

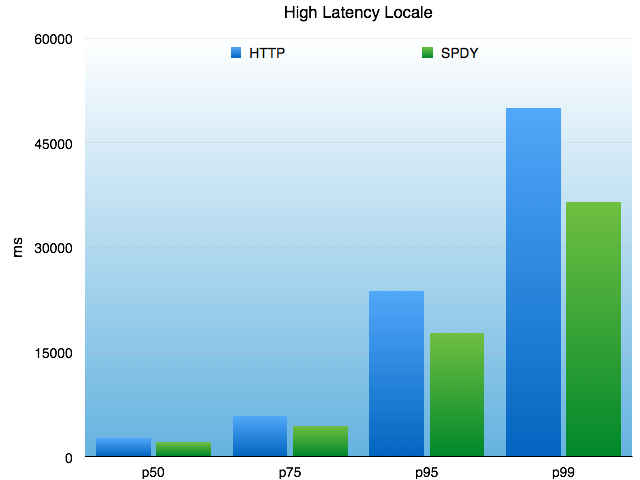

Testing has shown that SPDY reduces latency and page load time by 30-40%.

For example, Twitter measured API access latency in December 2013. The graph shows the results in milliseconds for the 50th (median), 75th, 95th, and 99th percentiles. Interestingly, SPDY helps most when the client connects from a location with the worst latency.

Native HTTP/2 support will arrive in the next stable releases, Chrome 39 and Firefox 34, so within just a few months most browsers on the Internet will support HTTP/2 and be able to take advantage of the new protocol (since SPDY has become part of HTTP/2, its standalone incarnation is being phased out).

Google published its own statistics on the reduction in page load time a year ago.

| | Google News | Google Sites | Google Drive | Google Maps |
|---|---|---|---|---|
| Median | -43% | -27% | -23% | -24% |
| 5th percentile (fast connections) | -32% | -30% | -15% | -20% |
| 95th percentile (slow connections) | -44% | -33% | -36% | -28% |

GitHub hosts a list of known HTTP/2 implementations in C#, NodeJS, C++, Perl, and other languages; the nghttp2 library is on it too. In closing, Ilya Grigorik encourages everyone to optimize their servers for HTTP/2 and to check that TLS is working correctly.
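
One way to check the TLS side is to see which protocol the server selects during the handshake. The sketch below assumes the server negotiates HTTP/2 through the ALPN extension with the identifier `h2`, as the final specification (RFC 7540) requires; deployments from the time of the talk may have relied on NPN instead. The function name and the example hostname are placeholders.

```python
import socket
import ssl

def negotiated_protocol(host, port=443, timeout=5.0):
    """Return the ALPN protocol the server selects, e.g. 'h2', or None if it picks none."""
    context = ssl.create_default_context()
    # Offer HTTP/2 first and fall back to HTTP/1.1.
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()

if __name__ == "__main__":
    # Placeholder hostname: replace with the server you want to check.
    print(negotiated_protocol("example.com"))
```

A result other than `h2` means browsers will fall back to HTTP/1.1 over that connection.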