Developing a new media platform: delivering video content to users

Hello everybody!

With this article we are opening a series on the development of a service that can be classed as new media. The service is a large group of applications that includes tools for distributing and playing video content on different platforms, second-screen applications, and many other interactive products designed to give viewers of online broadcasts more capabilities.

The topic is broad, so we decided to start the story with one of its basic stages: delivering live video content to users. This article describes the overall architecture of the solution.

We should note right away that the solution described below (like the article itself) makes no claim to novelty or brilliance. But the topic is relevant and development is still under way, so an outside view of the problem would be very useful to us.

Task

Any large event covered by several cameras is usually presented to the viewer in the director's cut: the broadcast directors edit the feeds into a single video sequence with no alternatives, and that is what the viewer receives.

Our task is to develop a service that lets the viewer choose which camera to watch the event from during an Internet broadcast, and to replay key moments of the event.

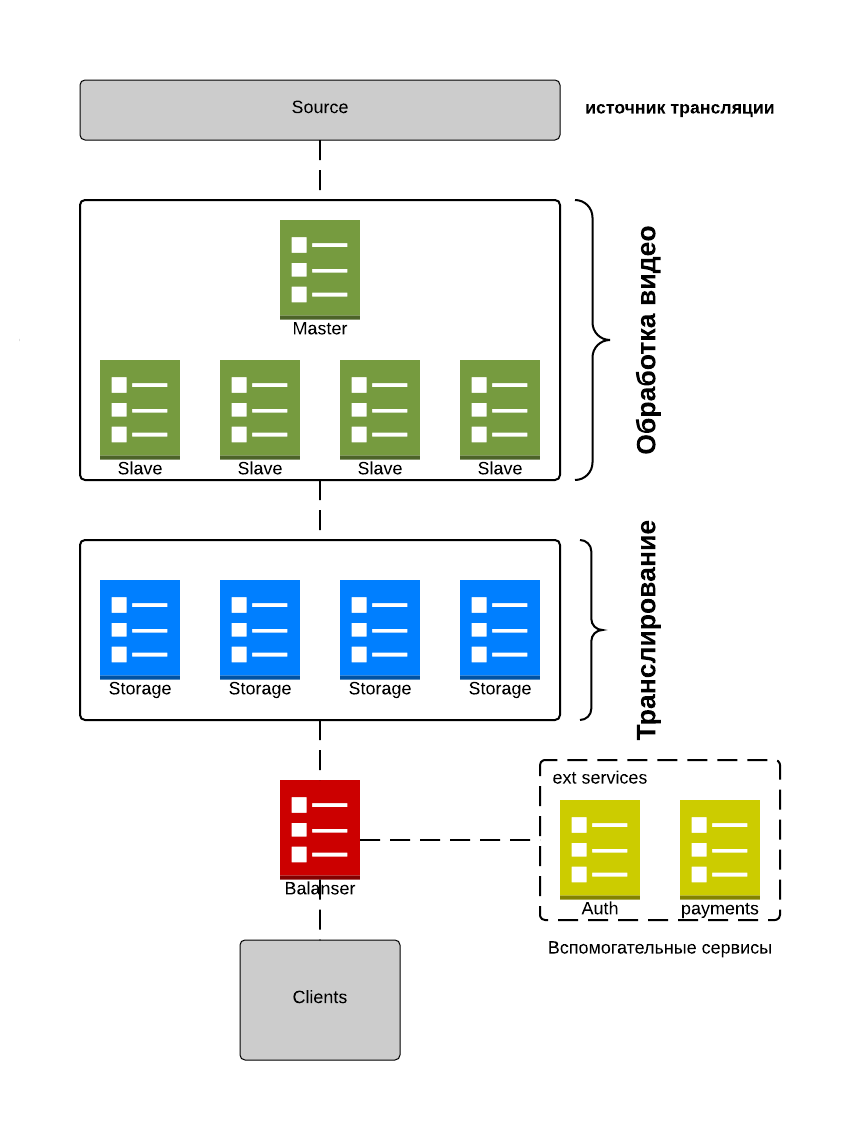

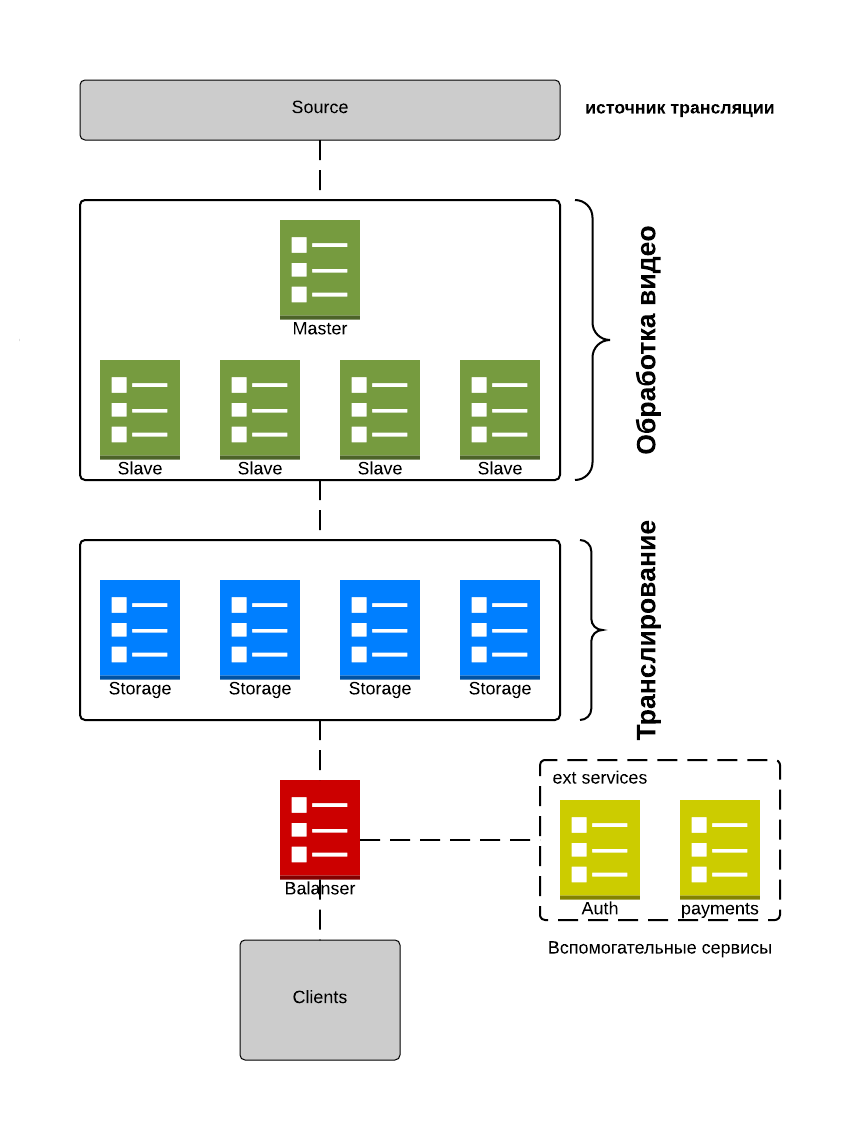

Figure 1. General architecture of the solution

The scheme works as follows:

The master server monitors the status of the broadcast sources and distributes streams among the slave servers. The slave servers, in turn, process these streams and send the result to the Storage specified in the configuration they receive from the master server.
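
To make the distribution step more concrete, here is a minimal sketch of how a master could hand streams to slaves. It assumes a "fewest streams first" policy, which is our illustration only; the names `Slave`, `distribute`, `cam-1` and `storage-a` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Slave:
    """A slave (worker) server; the fields are hypothetical."""
    name: str
    streams: list = field(default_factory=list)


def distribute(streams, slaves, storage):
    """Give each live stream to the slave that currently has the fewest streams.

    Returns the per-stream configuration the master would send out:
    which slave processes the stream and which Storage receives the result.
    """
    if not slaves:
        raise ValueError("no slave servers available")
    config = {}
    for stream in streams:
        # min() returns the first least-loaded slave, so ties go to the
        # slave listed earlier.
        target = min(slaves, key=lambda s: len(s.streams))
        target.streams.append(stream)
        config[stream] = {"slave": target.name, "storage": storage}
    return config


slaves = [Slave("slave-1"), Slave("slave-2")]
plan = distribute(["cam-1", "cam-2", "cam-3"], slaves, storage="storage-a")
```

A real master would also react to sources going offline and to slaves failing; the sketch covers only the initial assignment.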

Storage caches live broadcast data and also saves the stream to the file system. The master server configuration lets you set up Storage servers in various modes: data replication, data type, and so on.
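
As an illustration, a Storage entry in the master configuration might look roughly like the sketch below. The field names, the `live`/`archive` data types and the host names are assumptions made for the example, not the actual configuration format.

```python
from dataclasses import dataclass
from enum import Enum


class DataType(Enum):
    LIVE = "live"        # fragments of the ongoing broadcast (assumed label)
    ARCHIVE = "archive"  # historical fragments (assumed label)


@dataclass(frozen=True)
class StorageConfig:
    host: str
    data_type: DataType
    replicas: tuple = ()  # hosts that receive a copy of each fragment

    def targets(self):
        """All hosts a fragment is written to: the primary plus its replicas."""
        return (self.host, *self.replicas)


cfg = StorageConfig("storage-a", DataType.LIVE, replicas=("storage-b",))
```

Here `cfg.targets()` lists both `storage-a` and `storage-b`, so a slave that receives this entry knows where to send each processed fragment.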

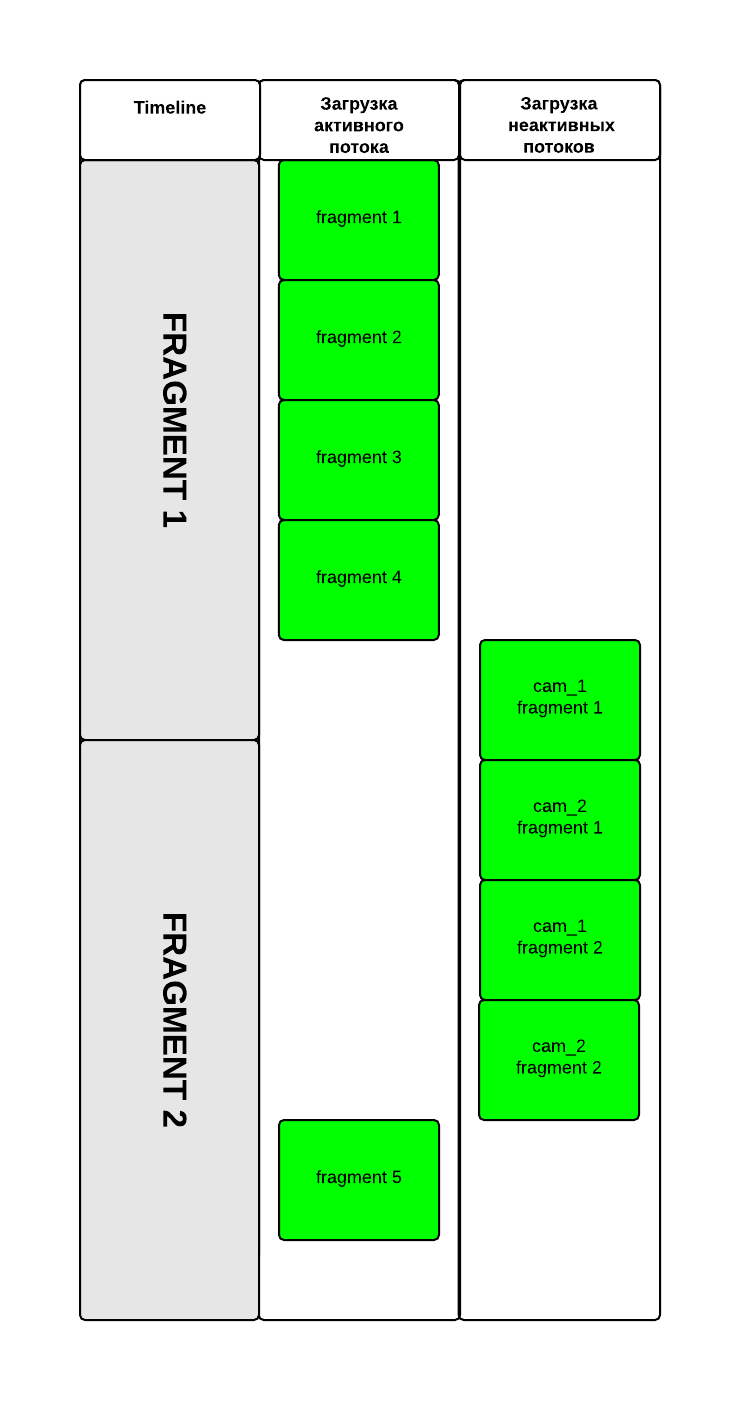

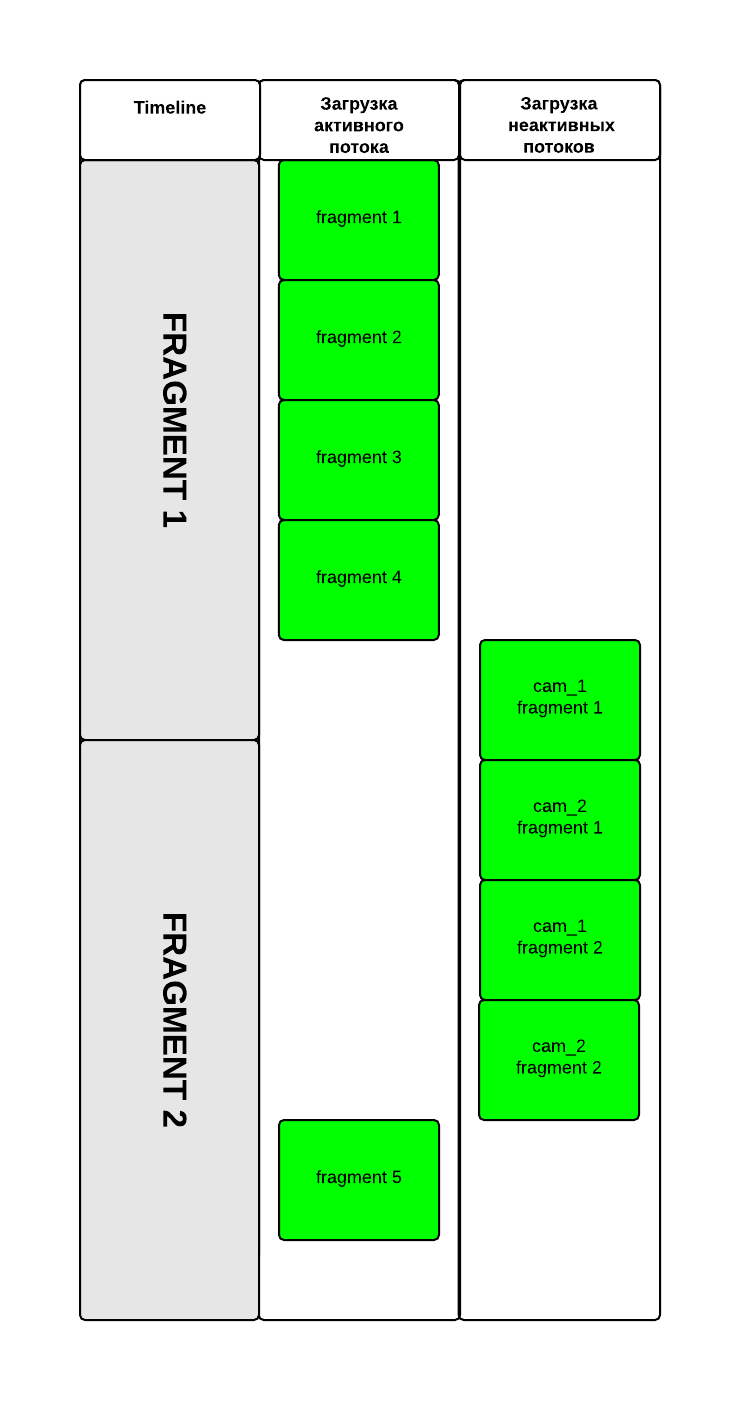

Figure 2. How the broadcast reaches clients

A load balancer distributes the load. The balancer is the clients' entry point into the system: it gives clients access to the servers and filters out unnecessary or expired requests. A client's first request always goes to the balancer. Depending on its settings, the balancer either redirects the client to the authorization server or assigns the client a server for the video broadcast. The number of balancers can be increased as the number of users grows. To spread the load further, we use separate instances for serving historical fragments and fragments of the live broadcast.
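
The routing decision described above can be sketched as follows. This is a simplified model under our own assumptions: requests carry a token and an expiry time, servers are chosen round-robin, and all names (`Request`, `Balancer`, `auth.example`, `live-1`) are hypothetical.

```python
import itertools
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    token: Optional[str]  # None means the client has not authorized yet
    expires_at: float     # Unix time after which the request is stale
    kind: str             # "live" or "archive" (hypothetical labels)


class Balancer:
    def __init__(self, live, archive, auth_url):
        self.auth_url = auth_url
        # Separate round-robin pools for live and historical fragments.
        # Both server lists are assumed to be non-empty.
        self._pools = {
            "live": itertools.cycle(live),
            "archive": itertools.cycle(archive),
        }

    def route(self, req, now=None):
        """Return the address the client is sent to, or None if the request is dropped."""
        now = time.time() if now is None else now
        if req.expires_at <= now or req.kind not in self._pools:
            return None                     # filter out expired or unknown requests
        if req.token is None:
            return self.auth_url            # not authorized: go to the authorization server
        return next(self._pools[req.kind])  # assign a broadcast server


balancer = Balancer(["live-1", "live-2"], ["arch-1"], auth_url="auth.example")
first = balancer.route(Request(token=None, expires_at=100.0, kind="live"), now=50.0)
```

Round-robin is only one possible policy; a production balancer would more likely take server load and health into account.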

Figure 3. Caching

During a live broadcast, the server caches stream fragments before writing them to storage. When clients connect to it, it serves the fragments asynchronously.
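
A minimal sketch of this idea using `asyncio` is shown below. It assumes a small in-memory cache of the newest fragments with a dictionary standing in for the real Storage; the class name, capacity and fragment keys are hypothetical.

```python
import asyncio
from collections import OrderedDict
from typing import Optional


class FragmentCache:
    """Keeps the newest fragments in memory and persists every fragment."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._cache = OrderedDict()  # (camera, index) -> bytes, oldest first
        self.storage = {}            # stand-in for the real Storage

    async def put(self, camera: str, index: int, data: bytes) -> None:
        key = (camera, index)
        self._cache[key] = data              # cache first, so clients see it at once
        if len(self._cache) > self.capacity:
            self._cache.popitem(last=False)  # evict the oldest cached fragment
        await asyncio.sleep(0)               # placeholder for a slow storage write
        self.storage[key] = data

    async def get(self, camera: str, index: int) -> Optional[bytes]:
        key = (camera, index)
        if key in self._cache:
            return self._cache[key]
        return self.storage.get(key)         # fall back to persisted data


async def demo():
    cache = FragmentCache(capacity=2)
    for i in range(3):
        await cache.put("cam-1", i, f"frag-{i}".encode())
    # Serve several client requests concurrently.
    return await asyncio.gather(*(cache.get("cam-1", i) for i in range(3)))


result = asyncio.run(demo())
```

In the demo the oldest fragment has already been evicted from memory, so it is read back from the storage stand-in, while the two newest ones come from the cache.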

The client buffers video fragments using two download queues. Fragments of the active stream are loaded first. Once enough fragments for smooth playback have been loaded, control passes to the second queue, which starts loading fragments from the other cameras for the same section of the timeline, in sync with the active stream. This avoids delays when switching cameras: at the moment of switching, the buffer of the selected camera is moved into the main queue.
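
The two-queue logic can be sketched as below. The sketch makes several assumptions of our own: fragments are identified by integer indices on a shared timeline, `min_ready` stands for "enough fragments for smooth playback", and playback consuming the buffer is left out. All names are hypothetical.

```python
from collections import deque


class ClientBuffer:
    def __init__(self, cameras, active, min_ready):
        self.active = active
        self.min_ready = min_ready  # fragments needed for smooth playback (assumed)
        self.main = deque()         # fragment indices of the active camera
        self.side = {c: deque() for c in cameras if c != active}

    def next_download(self, position):
        """Return (camera, index) of the next fragment to fetch.

        The main queue has priority until it holds `min_ready` fragments;
        after that the side queues are filled for the same timeline section.
        """
        if len(self.main) < self.min_ready:
            return self.active, position + len(self.main)
        for cam, queue in self.side.items():
            if len(queue) < len(self.main):
                return cam, position + len(queue)
        return self.active, position + len(self.main)

    def store(self, camera, index):
        target = self.main if camera == self.active else self.side[camera]
        target.append(index)

    def switch(self, camera):
        """Make `camera` active; its prefetched buffer becomes the main queue."""
        self.side[self.active] = self.main
        self.main = self.side.pop(camera)
        self.active = camera


buf = ClientBuffer(["cam-1", "cam-2"], active="cam-1", min_ready=2)
order = []
for _ in range(4):
    cam, idx = buf.next_download(position=0)
    buf.store(cam, idx)
    order.append((cam, idx))
buf.switch("cam-2")
```

After the loop, the active camera's two fragments were requested first, followed by the same two timeline positions for `cam-2`, so the switch can reuse the already loaded buffer without waiting for a new download.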

Instead of a conclusion

In this article we tried to outline, briefly and schematically, our approach to designing the part of the system that delivers video content in real time to the viewer. In the following articles we will describe what we ended up with and go into more detail on how it interacts with other parts of our large new media service (in particular, its client side).

Thanks for your attention :)
