Back to the future of data centers

What does tomorrow hold for us? What kind of world will we live in 20 to 30 years from now? The future is both exciting and uncertain. Just as in antiquity, the stream of people bringing their questions to oracles, magicians, and seers has not dried up. Often people are interested not so much in tomorrow as in a far more distant future, one that lies well beyond the span of a human life. It may seem like “complete nonsense”, but such is human nature. Starting in the 19th century, a new kind of visionary took the place of the exalted elders: the science fiction writer.

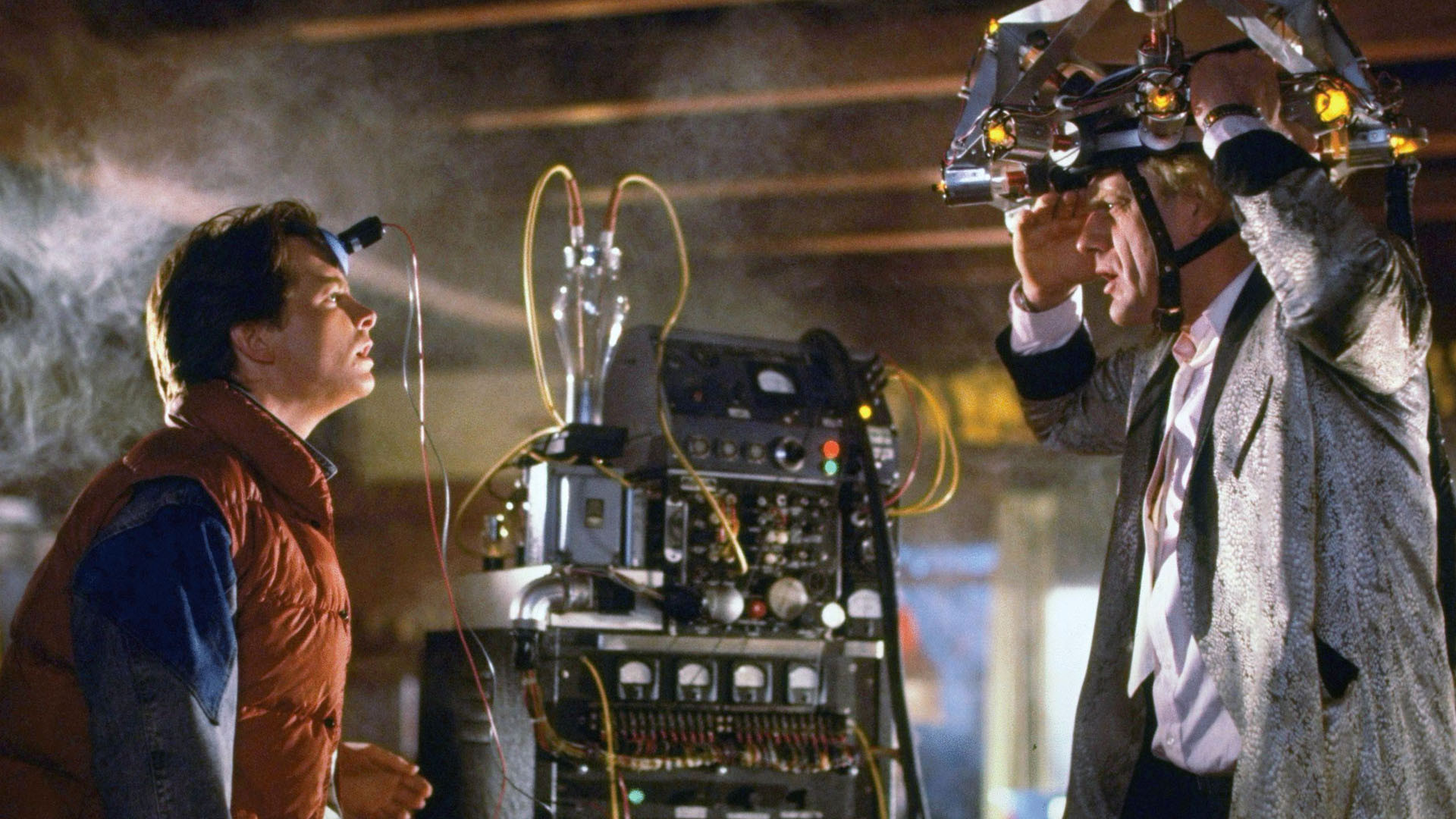

Having recently rewatched the second part of the magnificent “Back to the Future” trilogy, released in 1989, I noted with some bitterness that in the approaching year 2015 the streets of our cities will not be filled with flying cars, home energy reactors that run on garbage remain a utopia, holographic cinemas are a pipe dream, and in the past quarter century nobody has even invented a dust-repellent magazine cover. At the same time, in some areas of our daily lives the predictions turned out to be fairly accurate.

These reflections prompted a closer look at the innovations that have affected data centers. Yes, for many years now Intel has kept refreshing its processor lineups, and software has not stood still either, but does that entitle us to speak of serious changes in how data centers operate? Let's take a closer look at the innovations we have heard so much about over the past 10 years. What stage of implementation have they reached?

Low Power Usage Effectiveness (PUE)

When The Green Grid first introduced its Power Usage Effectiveness (PUE) metric in 2007, Lawrence Berkeley National Laboratory published the results of a study of 20 data centers, which showed an average PUE of 2.2. PUE is the ratio of the total energy a facility consumes to the energy consumed by its IT equipment, so the ideal value is 1.0. Clearly, companies are not always interested in driving PUE down to the lowest possible value: energy efficiency requires large financial investments and brings technical difficulties that complicate the construction of new data centers. IT giants such as Google and Facebook set a good example here. Their data centers have a PUE in the region of 1.2-1.3, which makes these companies leaders in energy efficiency. At the same time, material published in 2013, based on analyses by research organizations around the world, showed that the average PUE of existing data centers worldwide was still a little over 2.0. Improving the energy efficiency of data centers is the primary problem that must be solved in order to reduce the cost of operating IT infrastructure.
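To make the numbers above concrete, here is a minimal sketch of how PUE is computed and what it implies for overhead. The 1,000 kW IT load is a hypothetical figure chosen only for illustration, and the function names are not taken from any tool mentioned in the article.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """PUE = total facility power / power delivered to IT equipment."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_kw(pue_value: float, it_equipment_kw: float) -> float:
    """Power spent on cooling, power distribution, lighting and so on."""
    return (pue_value - 1.0) * it_equipment_kw


# Hypothetical IT load used only for illustration.
IT_LOAD_KW = 1000.0

for label, value in [("2007 study average", 2.2), ("efficient operators", 1.2)]:
    extra = overhead_kw(value, IT_LOAD_KW)
    print(f"{label}: PUE {value} -> {extra:.0f} kW of overhead per {IT_LOAD_KW:.0f} kW of IT load")
```

Under these assumptions, a facility at PUE 2.2 spends more on overhead than on the computing itself, while one at PUE 1.2 spends about a fifth as much.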

Idle servers

For a long time, information system engineers held to the idea that all network equipment should run at maximum performance 24 hours a day. Today, because server platforms are so widely available, we have many isolated clusters that run permanently and exist solely to support specific programs or network features. This in turn leads to a huge number of servers that sit unused most of the time. A report published by the research company McKinsey showed that in the data centers it studied, the share of servers idling to no purpose reached 30%. Although much has been done over the past 10 years in the direction of virtualization, which has helped reduce server idle time, the problem remains acute. Large Internet companies already use virtualization quite effectively, and it is one of the factors that make their business more profitable. According to a 2014 assessment by the research firm Gartner, up to 70% of workloads on x86 platforms are virtualized. Until data center owners trim their existing capacity to a reasonable minimum, servers will continue to devour valuable electricity while sitting idle.

Water cooling

This kind of cooling was, of course, not invented yesterday. Back in the 1960s, liquid cooling was used to keep massive, power-hungry computers running without interruption. Liquid cooling is nothing new for modern supercomputers either, but in data centers it is still rare and mostly limited to experimental facilities. At the same time, theory tells us that liquids can remove excess heat from the equipment in server cabinets thousands of times more efficiently than air. Unfortunately, data center owners, large and small alike, are still reluctant to adopt the technology widely: it requires changes to existing infrastructure and to the working habits of staff who must service equipment immersed in liquid, and there are few examples of large data centers running such a system. One successful example of liquid cooling is the National Renewable Energy Laboratory (Golden, Colorado, USA), where the data center built in 2013 to house the Peregrine supercomputer shows a phenomenal PUE of 1.06! In addition, during the cold months the system can send the heat given off by the equipment to heat the laboratory's campus. It is positive examples like this that should give impetus to the widespread use of liquid cooling.

Peak load distribution

Peak loads are truly the scourge of network infrastructure and the nightmare of engineers. Our daily rhythm of life dictates an uneven demand for data center capacity. So that we have no trouble loading the pages of our favorite social networks between roughly 19:00 and 21:00 local time, network service companies are forced to maintain excess computing power that is largely not in demand the rest of the time. Of course, network engineers find ways to address this problem, including caching and intelligent systems that redistribute load between server clusters. But imagine for a minute what would happen if network latency could be eliminated, or at least minimized. It would radically change the entire telecommunications infrastructure on Earth. In the meantime, we have the facts: even in a vacuum, an electromagnetic wave needs roughly 67 milliseconds to cover a distance equal to half of the Earth's equator. Given indirect routes, the response delays introduced by network infrastructure, and a host of other factors, moving computing load efficiently between different parts of the world is quite problematic at the moment, and companies that try run the risk of ending up with a crowd of furious customers dissatisfied with the service.
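The propagation figure can be checked with a back-of-the-envelope calculation. The sketch below assumes an equatorial circumference of about 40,075 km and the speed of light in a vacuum; the fiber velocity factor of roughly two thirds is an additional assumption that is not in the article, included only to show that real links are slower still. The result lands in the same range as the figure quoted above.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
EQUATOR_KM = 40_075.0              # approximate equatorial circumference of the Earth
FIBER_VELOCITY_FACTOR = 0.67       # assumption: light in optical fiber travels at about 2/3 c


def one_way_delay_ms(distance_km: float, speed_km_s: float = SPEED_OF_LIGHT_KM_S) -> float:
    """Pure propagation delay in milliseconds, ignoring routing and queuing."""
    return distance_km / speed_km_s * 1000.0


half_equator_km = EQUATOR_KM / 2
vacuum_ms = one_way_delay_ms(half_equator_km)
fiber_ms = one_way_delay_ms(half_equator_km, SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR)

print(f"Half the equator in a vacuum: {vacuum_ms:.1f} ms one way")
print(f"Half the equator in fiber:    {fiber_ms:.1f} ms one way")
print(f"Round trip in fiber:          {2 * fiber_ms:.1f} ms")
```

These are lower bounds set by physics alone; switching, queuing, and indirect cable routes only add to them.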

Data Center Infrastructure Management (DCIM)

This is another great initiative that is now in a "precarious" state. The DCIM philosophy is built on creating uniform standards for managing and operating data centers. Standardizing equipment, software, and management approaches could make a huge contribution to reducing the cost of both building and maintaining network infrastructure. The emergence of the unified metrics PUE, CUE, and DCeP can be seen as movement in this direction. A recent joint statement by major IT companies promising to pay more attention to open source software and to run inter-corporate projects based on it is another step toward this goal. But because of competing interests, both governmental and commercial, unification is proceeding very slowly. In the end, we are the ones who pay for the costs of fragmentation.

Modular data centers

Here the progress is obvious. Ever since Google first patented it in 2003, the idea of containers packed with server equipment, which can be delivered anywhere in the world within 24 hours and set up as a virtually full-fledged data node, has been truly amazing. Of course, one should not forget the telecommunication links it needs, the site where the containers will be installed, and the maintenance staff, who play a very important role. Still, this is an obvious breakthrough. By now the container approach to building new data centers has won over the main players in the IT market. Ease of transport, speed of deployment, standardization, and high build quality ensured by highly qualified workers at the manufacturer's factory have all been appreciated by both civilian organizations and the military. Existing deployments of the technology number in the hundreds.

Data centers north of latitude 48

Building data centers in northern climates, like some other exciting ideas, has not found wide support so far. According to some expert estimates, it is most efficient to build data centers north of latitude 48, where conditions are most favorable for low-cost cooling of server rooms with outside air. If we look at where data centers are actually located, only Europe stands out in this respect. The data centers in Sweden and Finland immediately come to mind; take the Facebook data center in Luleå alone. But these are still rather isolated cases, and global providers are more inclined to keep their infrastructure close to the direct consumers of its capacity, preferring the lowest possible network latency.

The bottom line: no risks

Looking at the main trends in operating existing data centers and designing new ones, the conclusion suggests itself. IT companies, so young and fast-growing, revolutionaries of a sort, follow a rather conservative, if not ossified, model of market behavior, which hinders the widespread adoption of new, promising developments. Operators and designers are not ready to take even the smallest risk to make existing network infrastructure more efficient, even when concrete examples have already shown positive results. Perhaps, if one digs into all the nuances, there is some higher logic to this. But the conclusion that suggests itself is a little pessimistic: just as we never got the flying cars that the creators of the Back to the Future trilogy predicted for 2015,