Yujin: fearless sapper robots, smart robotic vacuum cleaners, empathic teacher robots

For two weeks, the cute Korean robot iRobi (Q) stayed at the Hackspace. It is made by Yujin, which manufactures iClebo Arte robotic vacuum cleaners (for everyone), nurse robots (for medical purposes), sapper robots (for the military), and nanny and teacher robots (for kids).

It is noteworthy that the simultaneous localization and mapping method (SLAM, Simultaneous Localization and Mapping) is almost the same across all Yujin robots (so you can buy a vacuum cleaner to understand how a military robot works).

Under the cut: a short description of the Yujin robot line, a few funny adventures of the smiling robot at the Hackspace, and a few photos of what this robot teacher has inside.

SLAM

SLAM (Simultaneous Localization And Mapping) is a method used by robots and autonomous vehicles to build a map of an unknown space, or to update a map of a previously known space, while simultaneously tracking their current location and the distance traveled.

SLAM links two separate processes, map building and localization, into a continuous cycle of sequential calculations, in which the results of each process feed the calculations of the other.

Map building is the problem of integrating information collected from robot sensors. In this process, the robot answers the question: “What does the world look like?”

The main aspects of map building are how the environment is represented and how sensor data is interpreted.
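To make "integrating sensor information" concrete, here is a minimal sketch of one common map representation, an occupancy grid updated in log-odds form. It is an illustration only: the source does not say which map representation Yujin uses, and the 1D corridor, the `update_grid` helper and the 0.7 / 0.3 sensor model values are all assumptions. The robot's position is taken as known here, which is exactly what the localization step has to provide.

```python
import math

def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))

# Assumed inverse sensor model: how strongly one reading shifts the belief.
L_OCC = logit(0.7)   # the cell where the beam ended is probably occupied
L_FREE = logit(0.3)  # cells the beam passed through are probably free

def update_grid(log_odds, robot_cell, range_cells):
    """Integrate one range reading taken from a known cell, looking right."""
    hit = robot_cell + range_cells
    for cell in range(robot_cell + 1, min(hit, len(log_odds))):
        log_odds[cell] += L_FREE
    if hit < len(log_odds):
        log_odds[hit] += L_OCC
    return log_odds

def probabilities(log_odds):
    """Convert log-odds back to occupancy probabilities."""
    return [1.0 - 1.0 / (1.0 + math.exp(l)) for l in log_odds]

grid = [0.0] * 10          # log-odds 0 means "unknown" (p = 0.5)
update_grid(grid, 0, 6)    # wall seen 6 cells away from cell 0
update_grid(grid, 2, 4)    # the same wall seen again from cell 2
probs = probabilities(grid)
```

After two consistent readings, the cell with the wall becomes more likely occupied, the cells in between become more likely free, and cells behind the wall stay unknown.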

Localization, in turn, is the problem of determining the robot's location on a map. Here the robot answers the question: “Where am I?”

Localization can be divided into two types - local and global.

Local localization tracks the robot's position on the map when its initial position is known. Global localization determines the robot's position when the starting point is unknown (for example, after the robot has been picked up and carried elsewhere, the so-called kidnapped robot problem).
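The difference between the two can be sketched with a histogram (grid) Bayes filter. This is a toy under stated assumptions, not Yujin's method: the circular five-cell corridor, the "door"/"wall" sensor, the 0.8 / 0.2 sensor probabilities and the helper names are all made up for illustration. Local localization starts from a belief concentrated in one cell; global localization starts from a uniform belief.

```python
def normalize(belief):
    total = sum(belief)
    return [b / total for b in belief]

def sense(belief, world, reading, p_hit=0.8, p_miss=0.2):
    """Bayes update: weight each cell by how well it explains the reading."""
    weighted = [b * (p_hit if world[i] == reading else p_miss)
                for i, b in enumerate(belief)]
    return normalize(weighted)

def move(belief, step):
    """Shift the belief by an exact step along a circular corridor."""
    n = len(belief)
    return [belief[(i - step) % n] for i in range(n)]

def track(belief, world, readings):
    """Sense, then move one cell to the right, for each reading but the last."""
    for k, reading in enumerate(readings):
        belief = sense(belief, world, reading)
        if k < len(readings) - 1:
            belief = move(belief, 1)
    return belief

WORLD = ["door", "wall", "wall", "door", "wall"]
READINGS = ["door", "wall", "wall"]  # seen while driving from cell 0 to cell 2

# Local: the start cell is known. Global: the robot could be anywhere.
local_belief = track([1.0, 0.0, 0.0, 0.0, 0.0], WORLD, READINGS)
global_belief = track([0.2] * 5, WORLD, READINGS)
```

Both beliefs end up peaking at cell 2, but the global one is less certain because the corridor has two doors and the robot has to rule out the second one from the readings alone.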

The robot is faced with the tasks of: a) building a map and b) localizing the robot on this map. In practice, these two tasks cannot be solved independently of each other. Before the robot can answer the question of what the environment looks like (based on a series of observations), it needs to know where these observations were made. At the same time, it is difficult to assess the current position of the robot without a map.

So it turns out that SLAM is a typical chicken and egg problem: a map is needed for localization, and localization is needed to create a map.
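One standard way out of the chicken-and-egg loop is to estimate the robot position and the map jointly. The sketch below is a toy 1D Kalman filter with a single landmark, written under loud assumptions: the source does not say which estimator Yujin uses, and the noise values `q` and `r`, the 10% wheel slip and the function names are hypothetical. The state is `[robot position, landmark position]`, and each range reading corrects both at once.

```python
def predict(x, P, u, q):
    """Motion step: the robot moves by odometry u; the landmark stays put."""
    x = [x[0] + u, x[1]]
    P = [[P[0][0] + q, P[0][1]], [P[1][0], P[1][1]]]
    return x, P

def update(x, P, z, r):
    """Measurement step for z = landmark - robot, i.e. H = [-1, 1]."""
    innovation = z - (x[1] - x[0])
    S = P[0][0] - P[0][1] - P[1][0] + P[1][1] + r   # H P H^T + r
    k0 = (P[0][1] - P[0][0]) / S                    # Kalman gain, robot
    k1 = (P[1][1] - P[1][0]) / S                    # Kalman gain, landmark
    x = [x[0] + k0 * innovation, x[1] + k1 * innovation]
    a = [[1.0 + k0, -k0], [k1, 1.0 - k1]]           # I - K H
    P = [[a[0][0] * P[0][0] + a[0][1] * P[1][0],
          a[0][0] * P[0][1] + a[0][1] * P[1][1]],
         [a[1][0] * P[0][0] + a[1][1] * P[1][0],
          a[1][0] * P[0][1] + a[1][1] * P[1][1]]]
    return x, P

TRUE_LANDMARK = 10.0
true_x, dead_reckoning = 0.0, 0.0
x = [0.0, 0.0]                    # start is known, landmark is not
P = [[0.0, 0.0], [0.0, 1e6]]      # huge variance = "no idea where it is"

x, P = update(x, P, TRUE_LANDMARK - true_x, r=0.01)   # first look at the wall
for _ in range(5):
    true_x += 0.9                 # wheels slip: 0.9 m per commanded 1.0 m
    dead_reckoning += 1.0         # what odometry alone would believe
    x, P = predict(x, P, u=1.0, q=0.04)
    x, P = update(x, P, TRUE_LANDMARK - true_x, r=0.01)
```

In this run the filter places the landmark from the known start, then uses the landmark to rein in the drifting odometry, so its position estimate stays much closer to the true position than dead reckoning does.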

SLAM is implemented using several technologies: odometry (data from the robot's wheels), 1D and 2D laser rangefinders, 3D high-definition LiDAR, 3D flash LiDAR, 2D and 3D sonars, and one or more 2D video cameras. There are also tactile SLAM systems (which register touch), radar SLAM and WiFi-SLAM. (There is even the exotic FootSLAM.)
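Odometry is the simplest of these sources to illustrate. Below is a minimal dead-reckoning sketch for a differential-drive base, assuming the wheel encoders report the distance traveled by each wheel. The `odometry_step` name and the 0.3 m wheel base are hypothetical and are not taken from any Yujin specification.

```python
import math

def odometry_step(pose, d_left, d_right, wheel_base):
    """Advance (x, y, heading) given the distance each wheel has rolled."""
    x, y, theta = pose
    d_center = (d_left + d_right) / 2.0          # distance moved by the center
    d_theta = (d_right - d_left) / wheel_base    # change in heading, radians
    # Use the mid-step heading for a better approximation of the arc.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    theta += d_theta
    theta = math.atan2(math.sin(theta), math.cos(theta))  # wrap to (-pi, pi]
    return (x, y, theta)

WHEEL_BASE = 0.3  # meters, assumed

# Drive 1 m straight ahead, then turn 90 degrees to the left on the spot.
pose = odometry_step((0.0, 0.0, 0.0), 1.0, 1.0, WHEEL_BASE)
arc = math.pi / 2.0 * WHEEL_BASE / 2.0
pose = odometry_step(pose, -arc, arc, WHEEL_BASE)
```

Every wheel slip adds error that is never corrected, which is why odometry is combined with the other sensors rather than used on its own.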

Articles on Habr on this topic

MIT has developed a real-time map-building system for rescuers;

What does a robot need to build a map?;

Testing robotic vacuum cleaners: iRobot Roomba 780, Moneual MR7700, iClebo Arte and Neato XV-11.

Educational video

Yujin Company

Yujin was founded in 1988 and initially worked on industrial and military projects.

Robot line

Sapper robot (mine clearance)

more pictures of a military robot

Teacher Robot

Interactive pronunciation training + character Cafero

Robotic wheelchairs

Waiter robot

(takes batteries instead of tips)

GoCart porter robot

For hotels, industry and healthcare

iRobi

Specifications

Battery charge 3 hours

7-inch WVGA touchscreen

Voice recognition

1.3 megapixel camera

40 GB hard drive (for karaoke)

Cost: $4,598

2,000 Korean kindergartens were equipped with similar robots

(these days kindergartens are probably equipped with League of Legends instead)

So much love in his eyes, and such a shy blush:

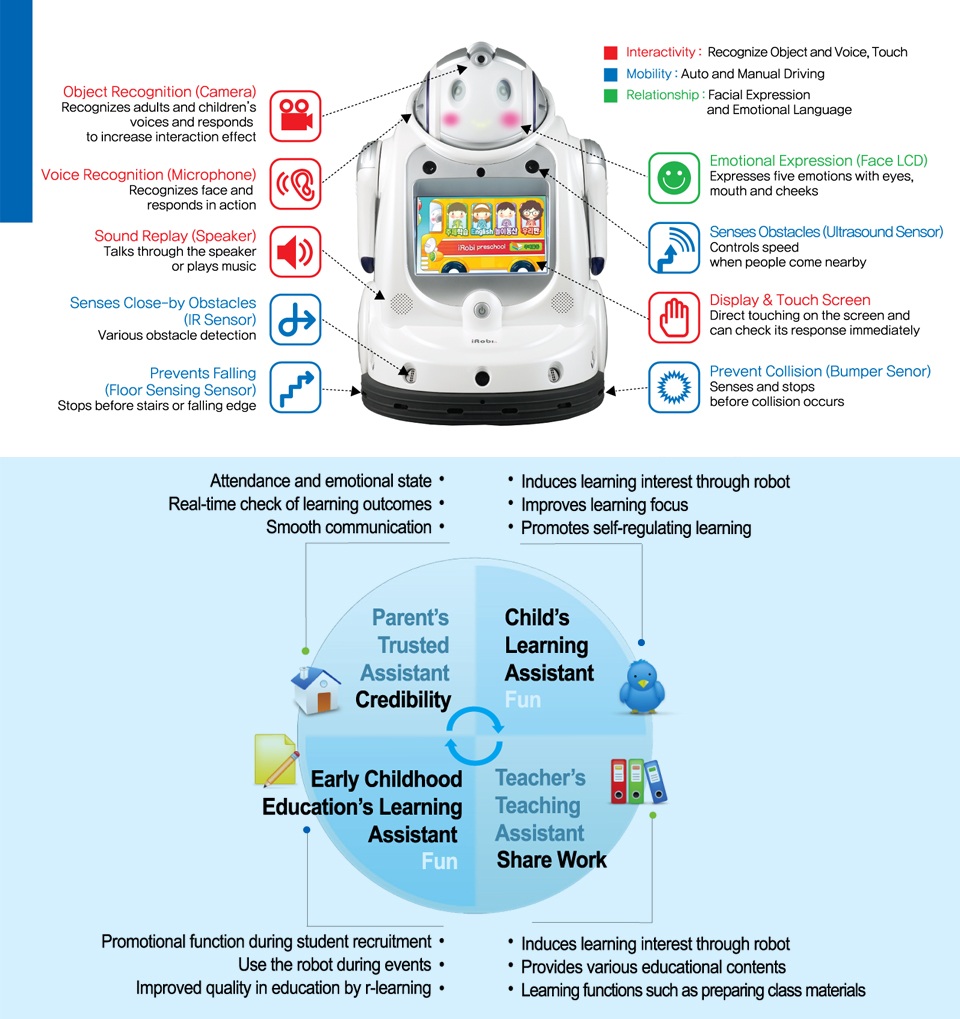

Features

Video recognition (Camera)

The robot can recognize images and respond to a person or to their actions.

Voice recognition (Microphone)

The robot recognizes speech.

Emotion expression (face LED)

The robot can "express" 5 emotions using LEDs (eyes, lips and cheeks)

Obstacle detection (Ultrasound sensor)

Ultrasonic obstacle detection. The robot pauses if it detects a person or obstacle in its path.

Audio playback (Speaker)

The robot speaks and plays music.

Display & touch screen

(Now you won’t surprise anyone, but in 2007 it was cool)

Close obstacle detection (IR sensor)

IR sensor to avoid obstacles

Fall prevention (Floor detection sensor)

Fall avoidance. The robot stops in front of stairs and “cliffs” (in our case, it saved itself from falling off the table)

Collision detection (Bumper sensor)

The bumper is equipped with touch sensors. When they are triggered, the robot stops.
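The safety-related features above can be read as a priority list. Here is a minimal sketch of how such sensor readings might be combined into one drive decision. Everything in it is an assumption for illustration (the `Sensors` fields, the distance thresholds, the priority order and the action names); the robot's real firmware logic is not documented in this article.

```python
from dataclasses import dataclass

@dataclass
class Sensors:
    bumper_pressed: bool           # touch sensors in the bumper
    floor_detected: bool           # floor detection sensor sees a surface
    ir_distance_cm: float          # close-range IR reading
    ultrasound_distance_cm: float  # longer-range ultrasound reading

def drive_command(s, ir_avoid_cm=10.0, ultrasound_pause_cm=50.0):
    """Pick one action; the most urgent condition wins (assumed ordering)."""
    if s.bumper_pressed:
        return "stop"       # collision detected
    if not s.floor_detected:
        return "stop"       # stairs or a table edge ahead
    if s.ir_distance_cm < ir_avoid_cm:
        return "avoid"      # something very close: steer around it
    if s.ultrasound_distance_cm < ultrasound_pause_cm:
        return "pause"      # person or obstacle in the path
    return "drive"

on_table_edge = Sensors(False, False, 100.0, 200.0)
clear_path = Sensors(False, True, 100.0, 200.0)
```

With these assumed readings, `drive_command(on_table_edge)` gives `"stop"` and `drive_command(clear_path)` gives `"drive"`.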

The robot is equipped with a camera for building a map of the room. It uses the camera to scan the ceiling at up to 24 frames per second in order to work out the exact structure of the room, locating the joints between walls and the positions of partitions.

Device:

Areas of application

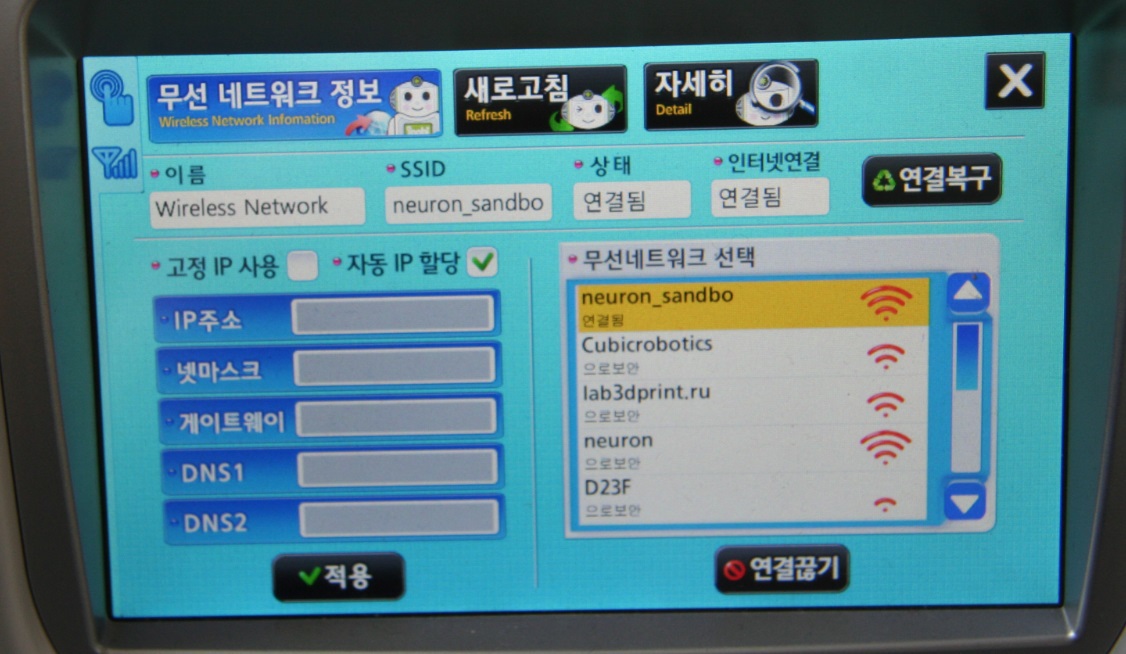

Internet connection menu:

We leveled up our skills in pressing every button in turn and in learning Korean by trial and error.

Main menu:

The upper two rows of actions require an Internet connection; the lower ones work offline. It seems that an account is created for you somewhere on a server (in the cloud?), and there may even have been a human consultant.

It photographs you while you photograph it.

It takes a photo when you touch its hand.

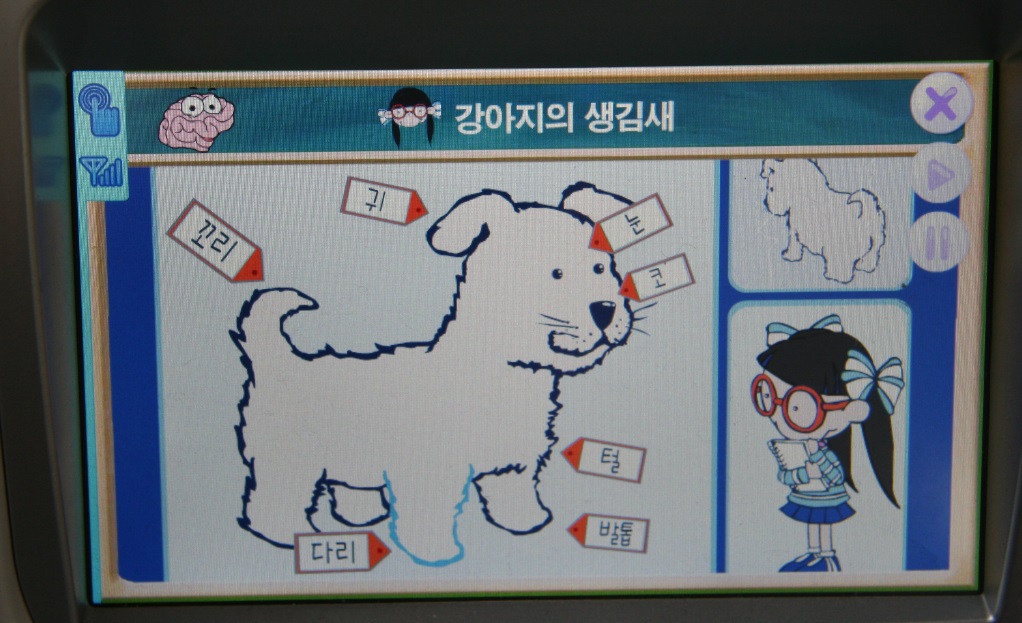

With such a teacher you will quickly learn Korean.

(The big-eyed brain was a delight)

Oh, these Korean cultural codes:

(Memory development game)

Karaoke mode:

(While the Korean song is playing, the robot drives around and waves its arms)

Reflection:

Paul Ekman's Theory of Lies also works for robots:

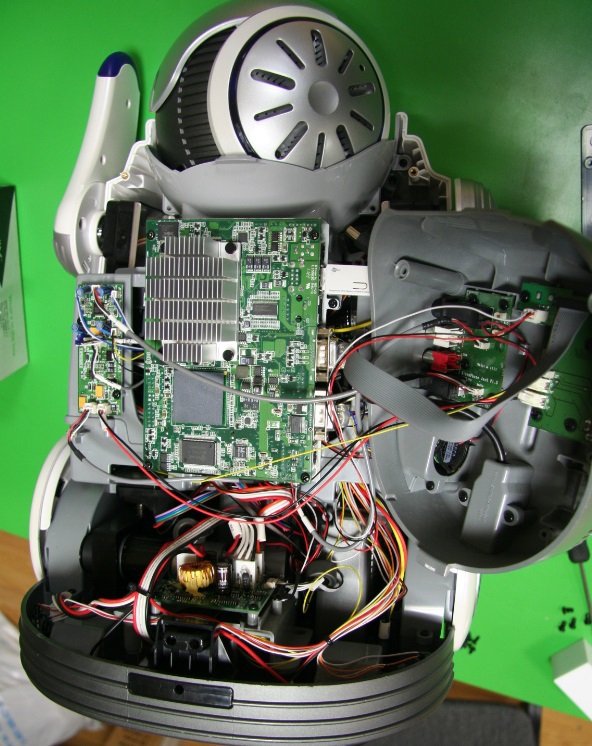

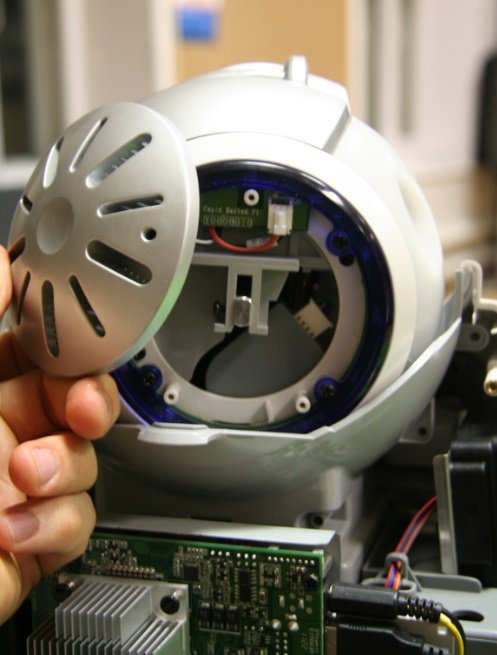

Insides

“Luke, I’m your father”

Where would we be without it

Under the arm is a hard drive

Touchscreen from the back:

Back:

Wi-Fi module:

I didn’t go deep into the head; I removed an ear and looked inside: there are motors and wires from the camera:

IR sensor:

Funny incidents

Once, shouting “I want to eat, I’m going to look for a socket” (it spoke English; this is an approximate translation), the robot set off in search of adventure. Since it was standing on a table, we braced for the worst... But no: it drove to the edge, timidly leaned out a couple of centimeters, said “Oh, come on, you don't care” (not a verbatim translation, but that was the gist) and went into sleep mode.

While putting the robot in its box, I pressed the “On” key without noticing. Since it takes 20-30 seconds to boot, I calmly went on packing, and then it suddenly came to life, moved its arms and began to mumble something. My heart sank: I immediately remembered the time I stuffed a kitten into my bag.

Asimov was right that humanity would have problems with the emotional perception of robots.

Is it worth creating a similar version of a Russian-language robot for teaching children?

- Yes: 60.9% (78 votes)

- No: 22.6% (29 votes)

- Don't know: 13.2% (17 votes)

- I am already creating it: 3.1% (4 votes)