Remote control of UART via the Web

Let's start with the hardware

I once worked at a factory that built all kinds of not-very-complex electronics, some of which fell under the definition of "Internet of Things". Mostly these were sensors for security systems: smoke, noise, intrusion, fire, and so on. The product range was very broad, batches were sometimes smaller than 500 units, and almost every product needed its own Test Fixture. In essence this was just a tin box: the product was placed inside for testing and pressed down by the lid, while contact needles pushed up from below onto the test points of the printed circuit board, something like this:

This gave us physical access to the device. For communication we used a protocol that is very common in the industry, RS232 (a COM port, one kind of UART). The box also housed various simple controllable instruments for testing the finished product, and all of these auxiliary instruments were controlled the same way. The whole construction was very flimsy, and all sorts of problems were part of the daily routine.

The range of problems was very wide: poor contacts, reversed polarity during installation, problems with the product under test, with the measuring instruments, with the contact needles, with the test code... you name it! But testing had to run continuously, and whenever tests started failing somewhere, one of the engineers had to walk over to the production line and start checking everything by hand.

The first step was always to launch Docklight, a good utility for "talking" to devices over COM ports, but one with many limitations. And this brings us to the point.

What was wrong with Docklight?

Well, let's go.

- The first problem: Docklight runs only on Windows. So setting up a "nerve center" such as a Raspberry Pi, with all the devices connected to it, was out of the question. We had to use NUCs, the cheapest option in this situation, yet still heavy, rather bulky, and not exactly cheap. Incidentally, when these Test Fixtures were dragged from place to place, the NUCs broke very, very often (although I admit they are quite sturdily built).

- The second: remote access was possible only via desktop sharing, which was sluggish even on the local network and practically unusable over the Internet.

- Third: each device had its own set of commands, and Docklight could load a list of commands from a file. If you needed to share such a list with someone in the department, you either emailed the file or... sent a link to it in a shared folder! Naturally, every Docklight installation needed these files locally, and all of this had to be done by hand dozens (if not hundreds) of times: every engineer copied his favorite, handy command lists onto every NUC. And this is 2019, let me remind you...

- Fourth: Docklight cannot automatically associate a COM port with a device name. For example, when you connect the Power Supply, Windows may assign it COM12, so to poke at it by hand you have to open COM12 in Docklight. But how do you know that COM12 is the Power Supply and not, say, the SwitchBoard? You can check Device Manager every time and try to remember which device sits on which port. Yet nothing guarantees that if you unplug the device and plug it back in, it will get the same port again. In short, you have to work it out manually every time, and believe me, by the end of the day my head was spinning.

- Fifth: a separate instance of the program was needed for each port, so every operation had to be done separately for each device; and although Docklight supports scripting, separate instances have no way to interact with each other.
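The port-naming problem in the fourth point can be avoided by identifying each device by a stable hardware attribute instead of its COM number. Below is a minimal sketch, assuming each device sits behind a USB-to-serial adapter that reports a unique serial number (not every adapter does). The `KNOWN_DEVICES` table and its serial numbers are hypothetical placeholders; pyserial's `serial.tools.list_ports.comports()` does return objects with `device` and `serial_number` attributes.

```python
from typing import Dict, Iterable

# Hypothetical registry: USB serial number -> human-readable device name.
# The serial numbers are made-up placeholders, not real hardware IDs.
KNOWN_DEVICES: Dict[str, str] = {
    "A1B2C3": "Power Supply",
    "D4E5F6": "SwitchBoard",
}


def map_ports(ports: Iterable, known: Dict[str, str]) -> Dict[str, str]:
    """Return {device name: port path} for every port whose serial number is known.

    `ports` may be any iterable of objects with `.device` and `.serial_number`,
    such as the ListPortInfo objects from serial.tools.list_ports.comports().
    """
    mapping: Dict[str, str] = {}
    for port in ports:
        # Some adapters report no serial number (None); those are skipped.
        name = known.get(port.serial_number or "")
        if name is not None:
            mapping[name] = port.device
    return mapping


def discover(known: Dict[str, str] = KNOWN_DEVICES) -> Dict[str, str]:
    """Scan the ports currently present on this machine (requires pyserial)."""
    # Imported lazily so map_ports() can be used and tested without pyserial.
    from serial.tools import list_ports
    return map_ports(list_ports.comports(), known)
```

Calling `discover()` after every replug would yield something like `{"Power Supply": "COM12"}` regardless of which COM number Windows handed out this time.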

Next: there was no integration with any other product. It sounds like a trifle, but here is a situation that drove me up the wall. A test fails and you need to find out why. First you connect to the devices to see whether they are alive at all. You open Device Manager, look up which port the device is on, open Docklight, try to open the port... Error. Damn, you forgot to stop the service that runs on the NUC and holds all the ports. Exclusively, you know. Okay, you stop the service, open the port, load the file with the device commands, send commands, get (or don't get) responses, and solve the problem. You run the test again, and it fails again... Oh, damn, you forgot to close Docklight and restart the service. Now everything seems to work without errors. But only for the next couple of hours, until something glitches again. And believe me...
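The trap above comes from the fact that a serial port has exactly one owner at a time. One common way around it is to let a single long-running process own every port and give everyone else (the test service and the engineer alike) serialized request/response access through it. A minimal sketch of that idea follows; the `PortBroker` class and the one-command, one-reply-line protocol are assumptions for illustration, and `opener` is any callable returning an object with `write(bytes)` and `readline()`, such as a pyserial `Serial` opened with a read timeout.

```python
import threading
from typing import Callable, Dict


class PortBroker:
    """Single owner of all serial ports; callers borrow access through it."""

    def __init__(self, opener: Callable[[str], object]):
        self._opener = opener
        self._ports: Dict[str, object] = {}
        self._locks: Dict[str, threading.Lock] = {}
        self._guard = threading.Lock()  # protects the two dicts above

    def _get(self, path: str):
        with self._guard:
            if path not in self._ports:
                # Each port is opened exactly once and then kept open.
                self._ports[path] = self._opener(path)
                self._locks[path] = threading.Lock()
            return self._ports[path], self._locks[path]

    def query(self, path: str, command: bytes) -> bytes:
        """Send one command and read one reply line without interleaving callers."""
        port, lock = self._get(path)
        with lock:
            port.write(command)
            return port.readline()
```

The per-port lock keeps each command and its reply together, so a test script and a manual query can share one wire instead of fighting over who gets to open the port.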

And, of course, extensions, extra features and the like were out of the question: the product is closed-source, was written long ago (and does not seem to be actively developed), and offers no customization.

So I decided to build something of my own that would fix (or at least improve) the problems described above.

The result is something like Zabbix, but tailored to this specific use case.

So what's the difference?

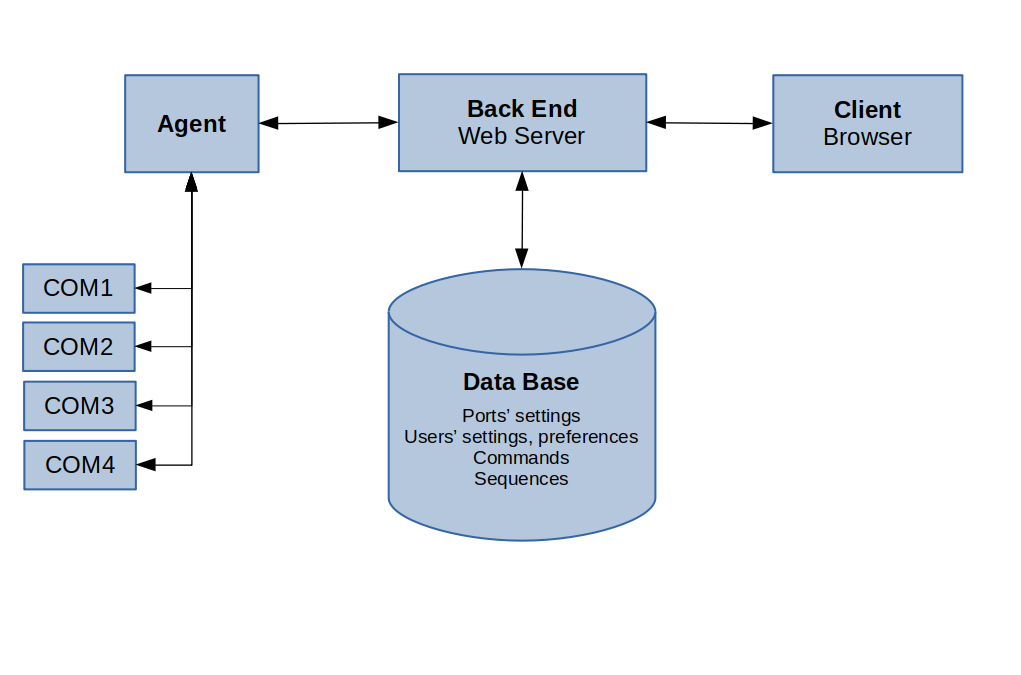

Perhaps it makes sense to start with a general description of the architecture, and then go into details.

The scheme looks like this:

There is an Agent, which runs on the station that our devices are physically connected to. The Agent is written in Python, so it runs without problems on Windows and Linux, and it can easily be adapted for the Raspberry Pi and similar devices. It is very light on resources and very stable. The Agent keeps a constant WebSocket connection to the server (back end) and receives all ports and their settings from there, both at startup and whenever they are updated. The Agent also has its own GUI for configuration and for monitoring in case something goes wrong (say, the connection dropped or the license expired).
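To make the Agent's role more concrete, here is a hedged sketch of the message handling it could perform: apply the port settings pushed by the server and execute commands against the named port. The JSON message format (`type`, `ports`, `port`, `data`, `id`) and the `PortSettings` fields are assumptions, not the project's real protocol; the `baudrate`/`bytesize`/`parity`/`stopbits` names mirror pyserial's `Serial` keyword arguments. The optional `run()` loop assumes the third-party `websockets` package and Python 3.9+ for `asyncio.to_thread`.

```python
import asyncio
import json
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class PortSettings:
    # Hypothetical schema; names after `device` mirror pyserial's Serial kwargs.
    device: str  # e.g. "COM12" or "/dev/ttyUSB0"
    baudrate: int = 9600
    bytesize: int = 8
    parity: str = "N"
    stopbits: int = 1


class Agent:
    """Keeps the port settings pushed by the server and runs commands on ports."""

    def __init__(self, send: Callable[[PortSettings, bytes], bytes]):
        self._send = send  # performs the actual serial I/O, returns the reply
        self.ports: Dict[str, PortSettings] = {}  # device name -> settings

    def handle(self, raw: str) -> Optional[str]:
        """Handle one JSON message from the server; return a JSON reply or None."""
        msg = json.loads(raw)
        kind = msg.get("type")
        if kind == "config":
            # Treat every config message as a full snapshot of this station's ports.
            self.ports = {name: PortSettings(**cfg) for name, cfg in msg["ports"].items()}
            return None
        if kind == "command":
            settings = self.ports.get(msg["port"])
            if settings is None:
                return json.dumps({"type": "error", "id": msg.get("id"), "reason": "unknown port"})
            reply = self._send(settings, msg["data"].encode())
            return json.dumps({"type": "reply", "id": msg.get("id"),
                               "data": reply.decode(errors="replace")})
        return json.dumps({"type": "error", "id": msg.get("id"),
                           "reason": f"unknown message type {kind!r}"})


async def run(agent: Agent, url: str) -> None:
    """Stay connected to the server and answer its messages (needs `websockets`)."""
    import websockets  # third-party; assumed transport
    async with websockets.connect(url) as ws:
        async for raw in ws:
            # Serial I/O blocks, so run it in a worker thread, not on the event loop.
            reply = await asyncio.to_thread(agent.handle, raw)
            if reply is not None:
                await ws.send(reply)
```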

Next, the Server (a.k.a. the back end) runs in Docker, so it is easy to deploy not only on Amazon or Google Cloud but also on any modest Linux machine on the local network. It is written in Django together with Redis (for WebSocket support). It stores all the settings and connects the user GUI (just a page written in ReactJS) with our devices via the Agent. Communication is bidirectional and fully asynchronous. All settings are stored in Postgres and Mongo.
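How the back end routes messages between browser pages and Agents is not spelled out above. As a hedged illustration of bidirectional, asynchronous relaying, here is a minimal in-memory sketch in which asyncio queues stand in for the Redis-backed message layer (in a Django deployment that role is commonly played by Django Channels with a Redis channel layer; whether this project uses Channels is an assumption). The per-station grouping and the `Relay` class are likewise assumptions, not the project's code.

```python
import asyncio
from collections import defaultdict
from typing import Dict, Set


class Relay:
    """In-memory stand-in for a Redis-backed pub/sub layer.

    Commands from any browser watching a station go to that station's Agent;
    replies from the Agent fan out to every browser watching the station.
    """

    def __init__(self) -> None:
        self._browsers: Dict[str, Set[asyncio.Queue]] = defaultdict(set)
        self._agents: Dict[str, asyncio.Queue] = {}

    def register_agent(self, station: str) -> asyncio.Queue:
        q: asyncio.Queue = asyncio.Queue()
        self._agents[station] = q  # a reconnecting Agent replaces its old queue
        return q

    def subscribe_browser(self, station: str) -> asyncio.Queue:
        q: asyncio.Queue = asyncio.Queue()
        self._browsers[station].add(q)
        return q

    async def from_browser(self, station: str, message: dict) -> bool:
        """Forward a command to the station's Agent; False if the station is offline."""
        agent = self._agents.get(station)
        if agent is None:
            return False
        await agent.put(message)
        return True

    async def from_agent(self, station: str, message: dict) -> None:
        """Broadcast an Agent's reply to every browser watching the station."""
        for q in self._browsers[station]:
            await q.put(message)
```

Create the `Relay` inside the running event loop (for example in the coroutine passed to `asyncio.run`), since older Python versions bind `asyncio.Queue` to the loop that exists when it is constructed.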

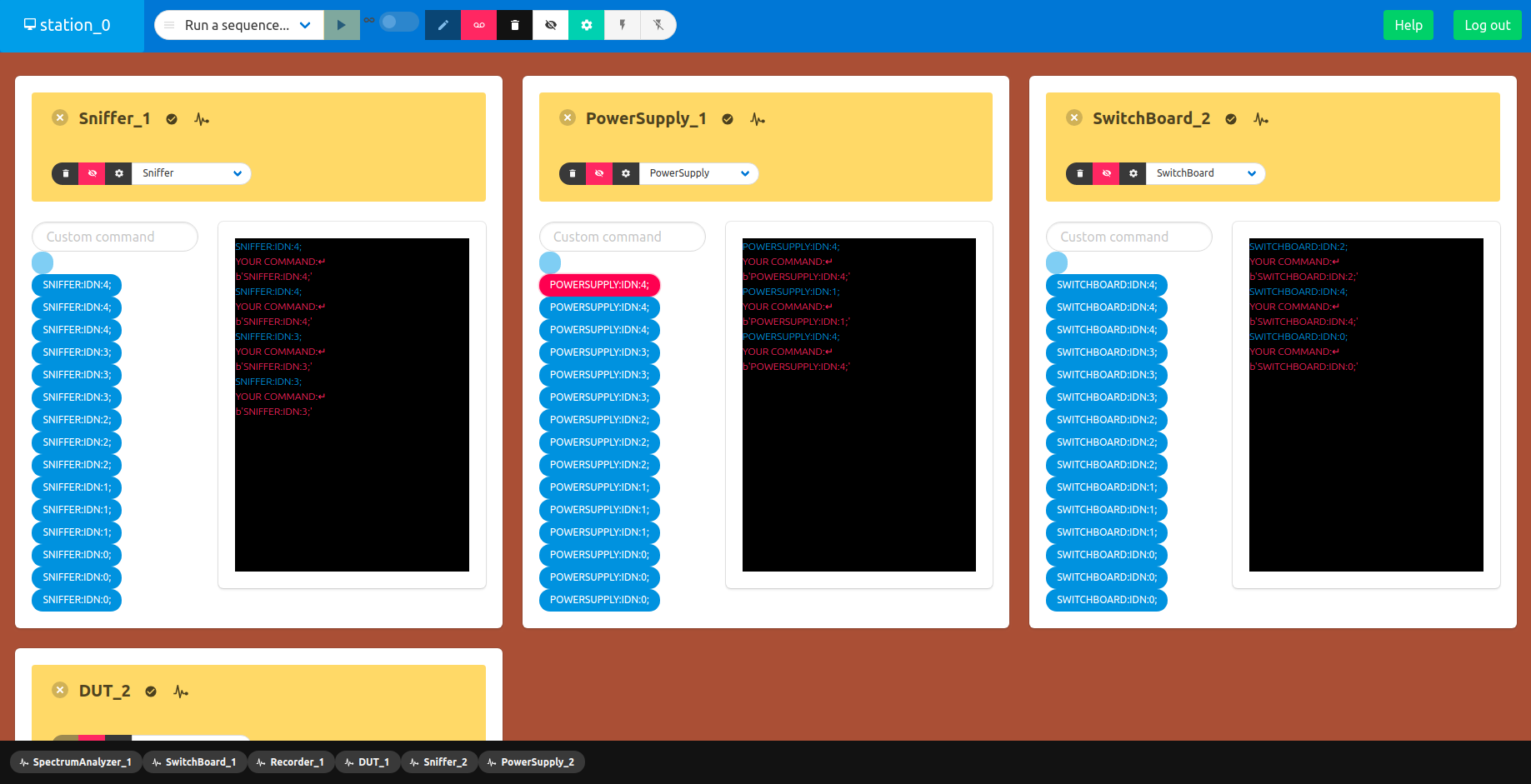

And perhaps the most important part of the system is the client itself: simply a page in the browser, written in ReactJS for a more dynamic interface.

Yes, the visual design is far from perfect, but that can be fixed.

I'll wrap up here, adding just a few words about the project's status and the demo.

- This is a fairly early alpha, intended mainly to demonstrate the potential convenience and gauge the level of interest.

- Play with the demo here

To log in:

username: operator_0

password: 123456789

Select QA_Test and any station. (This is just an attempt to model the structure of an enterprise: ports are connected to stations, stations are grouped into departments, and each office has its own structure.)
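The department/station/port hierarchy described above can be pictured as plain nested data. A minimal sketch with Python dataclasses follows; the class names, fields and the `find_port` helper are hypothetical, since the demo's actual data model isn't published, and treating QA_Test as a department is an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Port:
    name: str    # human-readable device name, e.g. "Power Supply"
    device: str  # OS port path on the station, e.g. "COM12"


@dataclass
class Station:
    name: str
    ports: Dict[str, Port] = field(default_factory=dict)


@dataclass
class Department:
    name: str
    stations: Dict[str, Station] = field(default_factory=dict)


def find_port(departments: Dict[str, Department], dept: str,
              station: str, port: str) -> Optional[Port]:
    """Resolve department/station/port names to a Port, or None if any level is missing."""
    d = departments.get(dept)
    s = d.stations.get(station) if d is not None else None
    return s.ports.get(port) if s is not None else None
```

Addressing a device by name through this hierarchy, rather than by COM number, is what lets several engineers refer to the same "Power Supply" on the same station.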

If there is interest, I will add HTTPS support, build the Agent for different platforms, and finish all the other features.

I'd be glad to hear any feedback and suggestions. Criticism is welcome!