The new science of seeing around corners

Computer vision researchers have discovered a hidden world of visual signals at our disposal, including imperceptible motions that betray what was said and faint images of what lies around the corner.

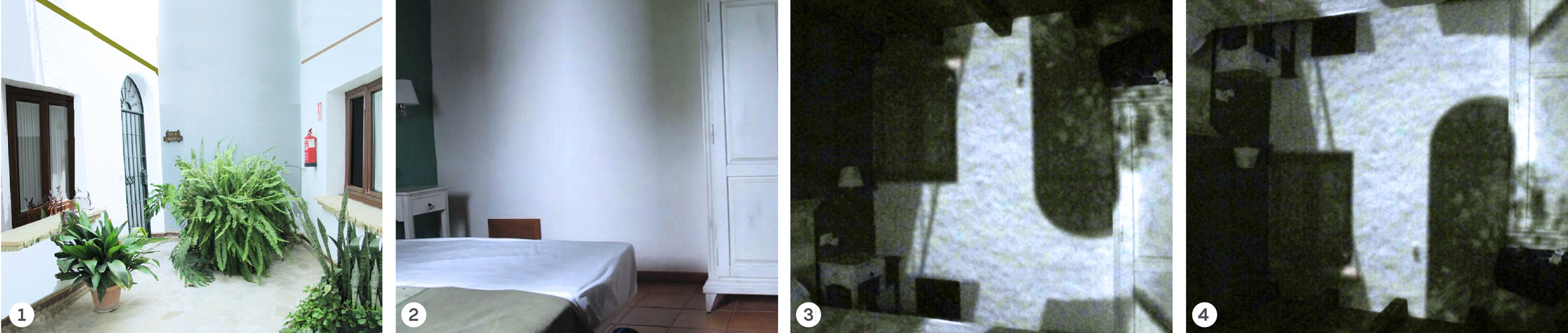

While vacationing on the coast of Spain in 2012, computer vision specialist Antonio Torralba noticed stray shadows on the wall of his hotel room that nothing seemed to be casting. Eventually Torralba realized that the discolored patches on the wall were not shadows at all but faint, inverted images of the patio outside. The window was acting as a pinhole camera, the simplest kind of camera, in which light rays pass through a small opening and form an inverted image on the other side. On the sunlit wall the image was barely discernible. But it made Torralba realize that our world is filled with visual information that our eyes do not perceive.

“These images are hidden from us,” he said, “but they constantly surround us.”

The experience led him and his colleague Bill Freeman, also a professor at the Massachusetts Institute of Technology, to recognize that the world is full of “accidental cameras,” as they call them: windows, corners, houseplants and other ordinary objects that form hidden images of their surroundings. These images are about 1,000 times dimmer than everything around them and are usually invisible to the naked eye. “We have come up with ways to isolate these images and make them visible,” said Freeman.

They have since learned how much visual information is hiding in plain sight. In their first paper, they showed that by filming the changing light on a room's wall with an ordinary iPhone, one can reconstruct the scene outside the window from the resulting video. Last fall, they and their colleagues reported that a person moving around a corner can be detected by filming the ground near that corner. This summer, they demonstrated that they can film a houseplant and then reconstruct a three-dimensional image of the rest of the room from the shadows cast by the plant's leaves. Or they can turn the leaves into a “visual microphone,” amplifying their vibrations to recover speech.

1) The patio outside the hotel room where Antonio Torralba noticed that the window was acting as a pinhole camera. 2) The blurry image of the patio on the wall; 3) it can be sharpened by covering most of the window with cardboard to shrink the opening. 4) Turned upside down, the image shows the scene outside.

“Mary had a little lamb,” says a man in an audio recording reconstructed from the movements of an empty potato chip bag that the scientists filmed through a soundproof window in 2014. (These were the first words Thomas Edison recorded on a phonograph, in 1877.)

Research on seeing around corners and inferring objects that are not directly visible, known as “non-line-of-sight imaging,” began in 2012 with Torralba and Freeman's accidental-camera paper and another pivotal paper by a separate MIT group led by Ramesh Raskar. In 2016, partly on the strength of those results, the U.S. Defense Advanced Research Projects Agency (DARPA) launched the $27 million REVEAL program (Revolutionary Enhancement of Visibility by Exploiting Active Light-fields), which funds nascent laboratories across the country. Since then, a stream of new ideas and mathematical tricks has made non-line-of-sight imaging ever more powerful and practical.

Beyond the obvious military and intelligence uses, researchers are studying applications in self-driving cars, robotic vision, medical imaging, astronomy, space exploration and search-and-rescue missions.

Torralba said that he and Freeman had no practical application in mind when they started. They were simply working out the basics of how images form and what a camera is, which led naturally to a fuller study of how light behaves and interacts with objects and surfaces. They began to see things that no one had thought to look for. Psychological studies, according to Torralba, show that “people are really terrible at interpreting shadows. Perhaps one of the reasons is that many of the things we see are not really shadows. And eventually the eye gave up trying to make sense of them.”

Accidental cameras

Light rays carrying images of the world outside our field of view constantly strike walls and other surfaces, then reflect into our eyes. So why are these visual leftovers so faint? There are simply too many rays traveling in too many directions, and the images wash out.

Forming an image requires severely restricting the rays that fall on a surface, so that only a particular set of them is seen. This is what a pinhole camera does. Torralba and Freeman's original insight in 2012 was that our environment contains many objects and features that naturally restrict light rays and form faint images that a computer can detect.

The smaller the pinhole aperture, the sharper the image, since each point on the imaged object emits only a single ray at the right angle to pass through the hole. The window in Torralba's hotel room was too large to produce a sharp image, and he and Freeman recognized that useful accidental pinhole cameras are, in general, quite rare. They realized, however, that anti-pinholes (“pinspeck” cameras), consisting of any small object that blocks light, form images in abundance.
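The link between aperture size and sharpness can be illustrated with a toy model. The sketch below is an assumption-laden simplification, not the researchers' method: it treats the scene and wall as one-dimensional, and models the aperture as a box blur whose width equals the opening. The function names `wall_image` and `lit_pixels` are invented for this illustration.

```python
import numpy as np

def wall_image(scene, aperture_width):
    """Toy 1D pinhole model: blur the scene by the aperture width, then invert it."""
    # Each scene point lights a patch of wall as wide as the opening,
    # modeled here as convolution with a box of that width.
    kernel = np.ones(aperture_width) / aperture_width
    blurred = np.convolve(scene, kernel, mode="same")
    return blurred[::-1]  # a pinhole flips the image

def lit_pixels(image):
    """Count wall pixels that receive any light."""
    return int(np.count_nonzero(image > 1e-12))

scene = np.zeros(101)
scene[20] = 1.0  # a single bright point in the scene

small_hole = wall_image(scene, aperture_width=1)
big_window = wall_image(scene, aperture_width=21)

print("small hole:", lit_pixels(small_hole), "pixel(s), peak", small_hole.max())
print("big window:", lit_pixels(big_window), "pixel(s), peak", big_window.max())
```

With the small opening the bright point stays a single (inverted) point on the wall; with the wide opening the same light is smeared over many pixels and its peak brightness drops accordingly.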

Bill Freeman

Antonio Torralba

Imagine that you are filming the interior wall of a room through a gap in the blinds. You will not see much. Suddenly, a hand appears in your field of view. Comparing the light intensity on the wall with and without the hand yields useful information about the scene. A set of light rays that strikes the wall in the first frame is briefly blocked by the hand in the next. By subtracting the data of the second frame from the data of the first, Freeman says, “one can calculate what the hand has blocked”: a set of light rays that represents an image of part of the room. “If you study what blocks the light and what lets the light through,” he said, “you can expand the range of places where you can find pinhole cameras.”
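The frame subtraction Freeman describes can be sketched in a few lines. This is a minimal illustration under strong assumptions: a one-dimensional scene, a noise-free wall, and an idealized occluder that blocks exactly one ray per wall pixel. The `transport` matrices and variable names are hypothetical, introduced only for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 64
scene = rng.uniform(0.0, 1.0, size=n)  # hypothetical 1D scene brightness

# transport[i, j] = 1 if light from scene point j reaches wall pixel i.
open_transport = np.ones((n, n))               # frame 1: nothing in the way
pinspeck = np.fliplr(np.eye(n))                # the one ray per pixel the occluder blocks
blocked_transport = open_transport - pinspeck  # frame 2: occluder present

frame_without = open_transport @ scene
frame_with = blocked_transport @ scene

# First frame minus second frame: exactly the light the occluder removed.
blocked_light = frame_without - frame_with
recovered_scene = blocked_light[::-1]  # undo the pinhole-style inversion

# How small the change is compared with the overall wall brightness.
relative_change = blocked_light.max() / frame_without.mean()
print(np.allclose(recovered_scene, scene), relative_change)
```

The difference image is an inverted copy of the scene, even though each individual frame looks like a uniformly lit wall. The `relative_change` value shows why the effect is hard to see by eye: the occluder alters the wall brightness by only a few percent in this toy setup.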

Alongside their study of accidental cameras, which pick up small changes in intensity, Freeman and his colleagues developed algorithms that detect and amplify subtle color changes, such as the change in a person's face as blood flows in and out, as well as tiny motions. This is what made it possible to recover the speech by filming the bag of chips. They can now easily detect a motion of one hundredth of a pixel, which would ordinarily be drowned in noise. Their method mathematically transforms images into configurations of sine waves. In the resulting space, noise does not dominate the signal, because each sine wave represents an average taken over many pixels, so the noise is spread out among them. The researchers can therefore detect the shifts of the sine waves from one video frame to the next, amplify these shifts, and then transform the data back.
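The idea of measuring motion as a shift of sine waves, rather than pixel by pixel, can be demonstrated on a one-dimensional signal. The sketch below is a deliberately simplified stand-in that uses a single global Fourier transform; it is not the group's published algorithm, and the frame size, noise level and gain are assumed values chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 256, 8          # samples per frame, spatial frequency in cycles per frame
true_shift = 0.01      # motion between frames, in pixels
noise_sigma = 0.001    # assumed sensor noise level
x = np.arange(n)

def frame(shift):
    """A sinusoidal pattern displaced by `shift` pixels, plus sensor noise."""
    clean = np.sin(2 * np.pi * k * (x - shift) / n)
    return clean + rng.normal(0.0, noise_sigma, size=n)

f0 = np.fft.rfft(frame(0.0))
f1 = np.fft.rfft(frame(true_shift))

# A displacement of s pixels rotates the phase of frequency bin m by -2*pi*m*s/n.
phase_change = np.angle(f1 * np.conj(f0))
estimated_shift = -phase_change[k] * n / (2 * np.pi * k)

# Amplify the motion by scaling every bin's phase change, then transform back.
gain = 100
magnified = np.fft.irfft(f0 * np.exp(1j * gain * phase_change), n=n)

# Measure how far the magnified frame has moved relative to the first frame.
magnified_phase = np.angle(np.fft.rfft(magnified)[k] * np.conj(f0[k]))
magnified_shift = -magnified_phase * n / (2 * np.pi * k)
print(estimated_shift, magnified_shift)
```

Because each Fourier coefficient averages over all 256 samples, the phase estimate is far less noisy than any single pixel, which is the averaging effect the paragraph describes. The magnified frame moves by roughly `gain` times the original shift, turning a hundredth of a pixel into about one pixel.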

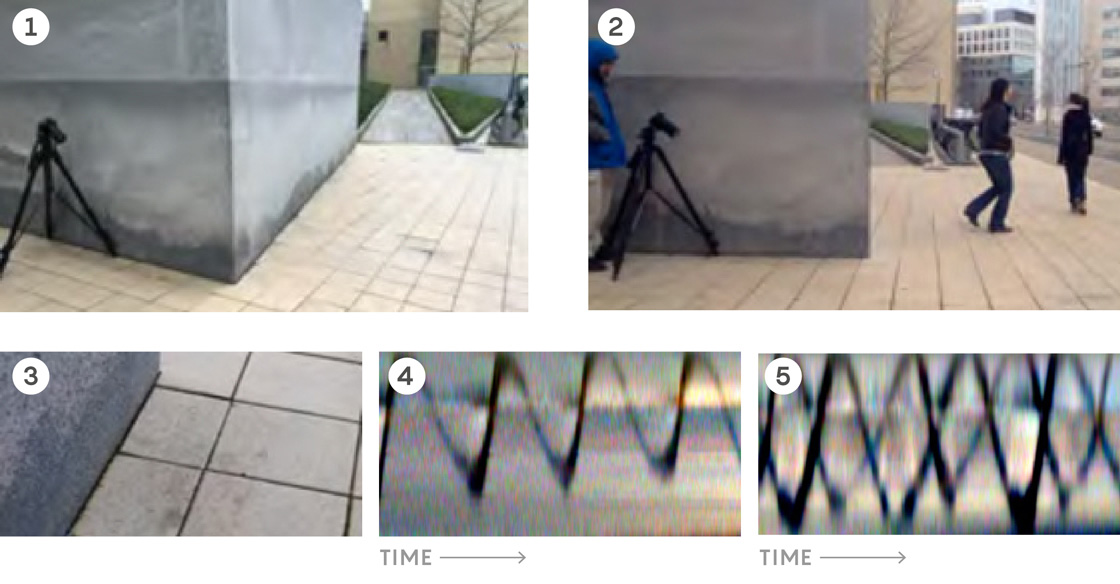

Now they have begun combining all these tricks to extract hidden visual information. A study described last October by Katie Bouman (then a graduate student under Freeman, now a scientist at the Harvard-Smithsonian Center for Astrophysics) showed that the corners of buildings act as cameras that create a rough image of what is around the corner.

By filming the penumbra on the ground near a corner (1), you can obtain information about objects that are around the corner (2). When the hidden objects move, the light and shadows they cast sweep through different angles relative to the wall. These small changes in intensity and color usually cannot be seen with the naked eye (3), but they can be enhanced with algorithms. Crude videos of the light arriving at different angles in the penumbra reveal one person moving around the corner (4) and two people (5).

Edges and corners, like pinholes and pinspecks, restrict the passage of light rays. Using ordinary cameras, including iPhones, in broad daylight, Bouman and her colleagues filmed the penumbra at a building's corner: the shadowy area lit by a subset of the light rays coming from the hidden region around the corner. If, for example, a man in a red shirt walks by there, the shirt will cast a small amount of red light into the penumbra, and that light will sweep across the penumbra as the person walks, invisible to the unaided eye but detectable after processing.
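One way to picture why a corner acts as a camera is the following sketch. It rests on an assumption that the text above does not spell out: a ground point at a given angle past the wall is taken to receive light from the hidden scene only up to that same angle, so the penumbra brightness is a running sum of the hidden scene. All names and numbers are hypothetical.

```python
import numpy as np

n_angles = 90
hidden_scene = np.zeros(n_angles)  # hypothetical brightness per degree around the corner
hidden_scene[30:34] = 1.0          # e.g. a person in a bright shirt

ambient = 500.0                    # light from the directly visible surroundings

# Assumed model: a ground point at angle a sees hidden angles 0..a, so the
# penumbra brightness is the ambient level plus a running sum of the scene.
penumbra = ambient + np.cumsum(hidden_scene)

# Differencing neighboring angles undoes the running sum.
recovered = np.diff(penumbra, prepend=ambient)

# Brightness variation across the penumbra relative to the ambient light.
contrast = (penumbra.max() - penumbra.min()) / ambient
print(np.flatnonzero(recovered), contrast)
```

In this toy setup the hidden person changes the ground brightness by less than one percent, which is consistent with the signal being invisible to the eye, yet a simple difference across angles locates the person. A real scene would add noise and require the kind of amplification described earlier.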

In a groundbreaking paper published in June, Freeman and his colleagues reconstructed the “light field” of a room (a picture of the intensity and direction of the light rays throughout the room) from the shadows cast by a leafy plant standing near a wall. The leaves acted as pinspeck cameras, each blocking its own set of light rays. Comparing each leaf's shadow with the rest of the shadows revealed its missing set of rays and yielded an image of part of the hidden scene. By accounting for parallax, the researchers were then able to piece all these images together.

This approach produces much sharper images than the earlier accidental-camera work, because the algorithm incorporates prior knowledge of the world. Knowing the shape of the plant, assuming that natural images tend to be smooth, and adopting several other assumptions allowed the researchers to draw inferences from noisy signals, which helped sharpen the final image. The light-field technique “requires knowledge of the world to create a reconstruction, but it also gives you a lot of information,” said Torralba.

Diffused light

Meanwhile, as Freeman, Torralba and their protégés uncover images that were hidden all along, elsewhere on the MIT campus Ramesh Raskar, a computer vision specialist and TED speaker who intends to “change the world,” takes an approach called “active imaging.” It uses expensive, specialized camera-laser systems to create high-resolution images of what is around the corner.

Ramesh Raskar

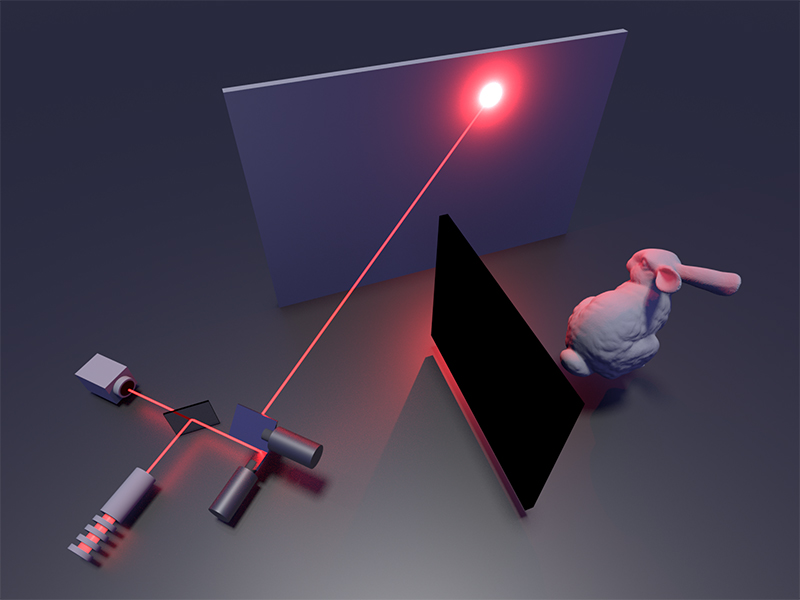

In 2012, realizing an idea he had had five years earlier, Raskar and his team pioneered a technique that involves firing laser pulses at a wall. A small fraction of the scattered light makes it around the obstacle. Shortly after each pulse, they use a “streak camera,” which records individual photons at billions of frames per second, to detect the photons that bounce back from the wall. By measuring how long the photons take to return, the researchers can tell how far they traveled and reconstruct in detail the three-dimensional geometry of the hidden objects that scattered them. One complication is that forming a three-dimensional image requires raster-scanning the wall with the laser. Suppose a person is hiding around the corner. “Then light reflected from a certain point on the head, a certain point on the shoulder and a certain point on the knee can all arrive at the camera at the same time,” said Raskar. “But if you shine the laser at a slightly different spot, the light from those three points will no longer arrive at the camera at the same time.” All the signals must be combined and an “inverse problem” solved to reconstruct the hidden three-dimensional geometry.
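Raskar's point about simultaneous arrivals can be checked with simple geometry. The sketch below is only an illustration: the coordinates are invented, the fixed legs between the equipment and the wall are ignored, and for simplicity the laser spot and the observed wall spot are assumed to coincide, which is not how the 2012 setup was arranged.

```python
import numpy as np

C_M_PER_NS = 0.299792458  # speed of light in meters per nanosecond

def round_trip_ns(wall_spot, hidden_point):
    """Time for light to travel from a wall spot to a hidden point and back."""
    distance = np.linalg.norm(np.subtract(hidden_point, wall_spot))
    return 2.0 * distance / C_M_PER_NS

# Hypothetical positions in meters; the wall is the plane z = 0.
body = {
    "head": (0.0, 0.6, 0.8),
    "shoulder": (0.6, 0.0, 0.8),
    "knee": (0.0, -0.8, 0.6),
}

def arrival_times(wall_spot):
    """Round-trip times, in nanoseconds, for every body point."""
    return np.array([round_trip_ns(wall_spot, p) for p in body.values()])

times_first_spot = arrival_times((0.0, 0.0, 0.0))
times_second_spot = arrival_times((0.3, 0.2, 0.0))
print(times_first_spot)
print(times_second_spot)
```

All three points lie one meter from the first wall spot, so their echoes arrive together and cannot be told apart from that measurement alone. From the second spot the distances differ and the echoes separate by nanoseconds, which is why scanning the laser across the wall supplies the extra information the inverse problem needs.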

Raskar's original algorithm for solving the inverse problem demanded too much computing power, and the apparatus itself cost half a million dollars. But significant progress has been made in simplifying the math and cutting costs. In March, the journal Nature published a paper that set a new standard for efficient, economical three-dimensional imaging of an object around a corner (in this case, a rabbit figurine). The authors, Matthew O'Toole, David Lindell and Gordon Wetzstein of Stanford University, devised a powerful new algorithm for solving the inverse problem and used a relatively inexpensive SPAD camera, a semiconductor device with a lower frame rate than streak cameras. Raskar, who previously supervised two of the paper's authors, called it “very clever” and “one of my favorites.”

Previous algorithms got bogged down in a procedural detail: researchers usually chose to detect returning photons at a spot on the wall other than the one where the laser was pointing, so that the camera would avoid collecting the laser's backscattered light. But by pointing the laser and the camera at almost the same spot, the Stanford researchers could map outgoing and incoming photons onto the same “light cone.” Whenever light scatters off a surface, it forms an expanding sphere of photons, and that sphere traces out a cone as it spreads through space-time. O'Toole (who has since moved from Stanford to Carnegie Mellon University) translated the physics of light cones, developed by Albert Einstein's teacher Hermann Minkowski in the early 20th century, into a concise expression relating photon times of flight to the locations of scattering surfaces. He called this translation the “light-cone transform.”

Self-driving cars already use LIDAR systems for direct imaging, and it is conceivable that they will someday also be equipped with SPADs to see around corners. “In the near future, these sensors will be available in a portable format,” predicts Andreas Velten, the first author of Raskar's original 2012 paper, who now leads an active-imaging group at the University of Wisconsin. The challenge now is to “handle more complex scenes” and realistic scenarios, Velten said, “rather than carefully setting up a scene with a white object against a black backdrop. We need technology that lets us point the device and press a button.”

Where things are

Researchers in Freeman's group have begun to combine the passive and active approaches. A study led by the researcher Christos Thrampoulidis shows that, in active imaging with a laser, a pinspeck camera of known shape located around the corner can be used to reconstruct the hidden scene without using photon time-of-flight information at all. “And we should be able to do it with a conventional CCD sensor,” said Thrampoulidis.

Non-line-of-sight imaging may someday help rescue teams and autonomous robots. Velten is collaborating with NASA's Jet Propulsion Laboratory on a project aimed at remotely imaging objects inside caves on the Moon. And Raskar and company have used their approach to read the first few pages of a closed book and to see through fog.

Besides audio reconstruction, Freeman's motion-magnification algorithm could help in building medical devices and security systems, as well as detectors of tiny astronomical motions. The algorithm is “a very good idea,” said David Hogg, an astronomer and data scientist at New York University and the Flatiron Institute. “I thought: we just have to use it in astronomy.”

As for the privacy concerns raised by the recent discoveries, Freeman draws on his own experience. “I have thought about this issue very, very much throughout my career,” he says. A bespectacled camera tinkerer who has been involved in photography all his life, Freeman said that early in his career he did not want to work on anything with military or espionage potential. But over time, he came to think that “technology is a tool that can be used in different ways. If you try to avoid everything that could have any military use at all, you will never come up with anything useful.” He says that even in military settings, “there is a very wide range of ways things can be used. You can help someone survive. And, in principle, knowing where things are is useful.”

What pleases him most, though, is not the technological possibilities but simply the discovery of a phenomenon that was hidden in plain view. “It seems to me that the world is full of things that have yet to be discovered,” he said.