Monitoring PostgreSQL + php-fpm + nginx + disk using Zabbix


There is a lot of information about Zabbix online and plenty of home-grown templates; here I want to share my own modifications.


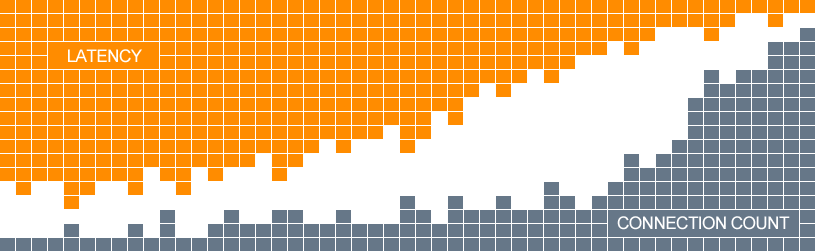


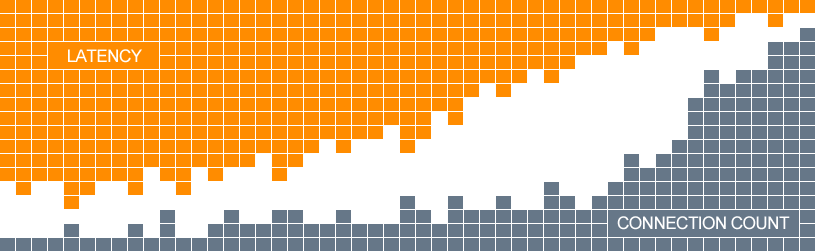


Zabbix is a very convenient and flexible monitoring tool: it will handle a hundred monitors or a thousand stations, or it can watch a single server and skim the cream off every subsystem. I would not mind putting this on GitHub if anyone is collecting similar templates.

It so happened that we decided to host a database with a php-fpm + nginx wrapper. The database is PostgreSQL. We had been thinking about collecting data on how the machine runs even before buying the hosting: it is necessary and useful! The kick that finally got the system built was hard-drive slowdowns on our VDS. At first, a script wrote the time and the measured speed to a file every minute; then we built graphs in Excel, compared before and after, and gathered quantitative statistics. And that was just one parameter! What if the VDS is not to blame, but our own applications running on it? In short, you need to monitor a lot, and you need to monitor conveniently!

I will not dwell on installing the server; there are many options and plenty of documentation on the subject. I used the official guide:

https://www.zabbix.com/documentation/en/2.2/manual/installation/install_from_packages

Operating system: CentOS 6.5.

The files you will need are in the habr-zabbix-mons.zip archive.

On the agent machine, install zabbix-sender in addition to zabbix-agent:

yum install -y http://repo.zabbix.com/zabbix/2.2/rhel/6/x86_64/zabbix-2.2.4-1.el6.x86_64.rpm

yum install -y http://repo.zabbix.com/zabbix/2.2/rhel/6/x86_64/zabbix-agent-2.2.4-1.el6.x86_64.rpm

yum install -y http://repo.zabbix.com/zabbix/2.2/rhel/6/x86_64/zabbix-sender-2.2.4-1.el6.x86_64.rpm

[root@fliber ~]# vi /etc/zabbix/zabbix_agentd.conf

LogFileSize=1

Hostname=Your_agent_addr

Server=123.45.67.89

ServerActive=123.45.67.89

Replace “Your_agent_addr” with the name or IP of the machine exactly as it was entered in the “Host name” field when the agent host was added on the server.

chkconfig --level 345 zabbix-agent on

service zabbix-agent start

Monitor the speed of the hard drive

I use hdparm; you can use another tool if you prefer:

yum install hdparm

Choose the partition to monitor:

[root@fliber ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/vda1 219608668 114505872 104106808 78% /

tmpfs 11489640 0 11489640 0% /dev/shm

Add to /etc/zabbix/zabbix_agentd.d/user.conf

UserParameter=hdparm.rspeed,sudo /sbin/hdparm -t /dev/vda1 | awk 'BEGIN{s=0} /MB\/sec/ {s=$11} /kB\/sec/ {s=$11/1024} END{print s}'
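You can check what the awk filter extracts with this minimal Python sketch of the same logic. It takes field 11 of the `hdparm -t` summary line and divides by 1024 when the line reports kB/sec. The sample output is only an assumption about the usual hdparm format. It is not a real measurement from this server.

```python
def parse_hdparm_speed(output):
    """Return the read speed in MB/s from `hdparm -t` output (0.0 if none).

    Same idea as the awk filter: awk's $11 is index 10 here; a kB/sec
    figure is divided by 1024 to get MB/sec.
    """
    speed = 0.0
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 11:
            continue
        if "MB/sec" in line:
            speed = float(fields[10])
        elif "kB/sec" in line:
            speed = float(fields[10]) / 1024
    return speed


# Illustrative output of `hdparm -t /dev/vda1` (the numbers are made up).
sample = """
/dev/vda1:
 Timing buffered disk reads: 300 MB in  3.01 seconds =  99.67 MB/sec
"""
print(parse_hdparm_speed(sample))
```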

Allow sudo to run without a terminal (disable requiretty) and permit the zabbix user to run the command:

[root@fliber ~]# visudo

#Defaults requiretty

zabbix ALL=(ALL) NOPASSWD: /sbin/hdparm -t /dev/vda1

Increase the item timeout in the agent config. hdparm takes 3-10 seconds or more to measure, apparently depending on how much the speed fluctuates.

[root@fliber ~]# vi /etc/zabbix/zabbix_agentd.conf

Timeout=30

service zabbix-agent restart

On the server, raise the agent response timeout as well:

[root@pentagon ~]# vi /usr/local/etc/zabbix_server.conf

Timeout=30

In the Zabbix web interface, add a new item either to the host (Configuration -> Hosts -> Your_server_agent -> Items) or to a template such as Template OS Linux (Configuration -> Templates -> Template OS Linux -> Items). Click “Create item”:

Name: Hdparm: HDD speed

Key: hdparm.rspeed

Type of information: Numeric (float)

Units: MB/s

Update interval (in sec): 601

Applications: Filesystems

Then create a graph for it (Graphs -> Create graph):

Name: Hdparm: HDD read speed

Y axis MIN value: Fixed 0.0000

Items: Add: “Hdparm: HDD speed”

Done! We now have an item and a graph for it. If you added the item to a template, link the template to the host. Enjoy!

In our case, the period before July 31 looks bad. The average speed was high, but it very often dropped below 1 MB/s. Since we were moved to another node, the speed has been stable and rarely drops, with a minimum so far of 5-6 MB/s. I think the drops on the previous node were not caused by the disk at all. More likely, some other, more important resources were busy. The main thing is that we can see the failures!

This is a useful item, but do not poll it too often: during those 3-10 seconds of measurement the disk is very busy. I recommend polling every 10-60 minutes. Once you have enough statistics, you can turn it off altogether so as not to torment your hoster.
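Here is a quick back-of-the-envelope check of why the interval matters, using the 3-10 second measurement time above. The share of wall-clock time the disk spends benchmarking is the measurement time divided by the polling interval. The 60 s and 3600 s intervals below are only illustrative; 601 s is the value from the item settings.

```python
def disk_busy_share(measure_s, interval_s):
    """Fraction of wall-clock time the disk spends in the hdparm benchmark."""
    return measure_s / interval_s


# 3-10 s per measurement (from the text) at several polling intervals.
for interval in (60, 601, 3600):
    low, high = disk_busy_share(3, interval), disk_busy_share(10, interval)
    print(f"every {interval:>4} s: disk busy {low:.2%} to {high:.2%} of the time")
```

Polling once a minute keeps the disk busy 5-17% of the time. At 601 s this drops to about 0.5-1.7%.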

Monitor nginx logs

Why monitor the logs, you might ask? You can hook up Yandex.Metrica and Google Analytics and watch everything there. But they will not show bots that do not execute JS on the page, nor people who have JS disabled. The proposed solution shows how often robots crawl your pages and helps keep search bots from overloading your server. You can also get any other statistics if you dig into loghttp.sh.
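loghttp.sh itself is in the archive and is not reproduced here. To give a rough idea of the counting involved, here is a minimal Python sketch. It tallies search-bot requests by user agent in an nginx log that uses the default "combined" format. The bot signatures and the log format are assumptions; the real script may match different strings or fields.

```python
import re
from collections import Counter

# Hypothetical bot signatures (substrings of the User-Agent header);
# loghttp.sh may look for different strings.
BOTS = {
    "google": "Googlebot",
    "yandex": "YandexBot",
    "bing": "bingbot",
    "mail": "Mail.RU_Bot",
    "yahoo": "Yahoo! Slurp",
}

# In nginx's default "combined" format the User-Agent is the last quoted field.
USER_AGENT = re.compile(r'"([^"]*)"\s*$')


def count_bot_hits(lines):
    """Count log lines per bot, attributing each line to at most one bot."""
    counts = Counter({name: 0 for name in BOTS})
    for line in lines:
        match = USER_AGENT.search(line)
        if match is None:
            continue
        agent = match.group(1)
        for name, token in BOTS.items():
            if token in agent:
                counts[name] += 1
                break
    return counts


sample = [
    '66.249.66.1 - - [01/Aug/2014:10:00:00 +0400] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]
print(dict(count_bot_hits(sample)))
```

An open log file can be passed straight in, as in `count_bot_hits(open(path))`, because trailing newlines are tolerated by the pattern.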

Add to /etc/zabbix/zabbix_agentd.d/user.conf

UserParameter=log.http.all,/etc/zabbix/scripts/loghttp.sh

Put loghttp.sh into /etc/zabbix/scripts and, in the same folder, run:

chmod o+x loghttp.sh

yum install curl

chown zabbix:zabbix /etc/zabbix/scripts

service zabbix-agent restart

Check the path to access.log in the script:

[root@fliber ~]# vi loghttp.sh

LOG=Path_to_nginx_log

Import loghttp.xml into the Zabbix templates. Go to Configuration -> Templates, find the “Import” button on the right of the “CONFIGURATION OF TEMPLATES” header bar, select the file and import it.

Link the template to the host: open Configuration -> Hosts -> Your_server_agent and go to the “Templates” tab. In the “Link new templates” field, start typing “Logs” and select our template from the drop-down list. Click “Add”, then “Save”.

The template polls the log every 10 minutes, so don't expect charts right away. In the meantime you can check the logs. On the client side, see “/var/log/zabbix/zabbix_agentd.log”. On the server side, see “/tmp/zabbix_server.log” or “/var/log/zabbix/zabbix_server.log”.

If all is well, you will soon see a picture like this:

Google does well and crawls at a steady rate. Mail.Ru shows up occasionally, Bing rarely, and Yahoo not at all. Yandex indexes unevenly but a lot; if only it showed all that in its search results, it would be great :)

Google and Yandex are plotted on the left axis, the rest on the right. The value is the number of visits between two measurements, i.e. per 10 minutes. You could set 1 hour, but then you risk missing many visits when the log is rotated.
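A longer interval loses data at rotation for a simple reason. A script that counts only the lines added since its previous run has to remember where it stopped. Here is a minimal Python sketch of that idea, assuming a hypothetical state file that stores the byte offset. If the log is suddenly smaller than the stored offset, the sketch assumes rotation and starts over. Anything written to the old file after the last read is therefore never counted. A fuller version would also compare inode numbers, because a new log can grow past the old offset before the next run.

```python
import os


def read_new_lines(log_path, state_path):
    """Return complete lines appended to log_path since the previous call.

    state_path (hypothetical) stores the byte offset reached last time.
    If the log is now shorter than that offset, it is assumed to have been
    rotated and is read from the start; lines written to the old file after
    the previous run are never seen.
    """
    offset = 0
    if os.path.exists(state_path):
        with open(state_path) as f:
            offset = int(f.read().strip() or 0)
    if os.path.getsize(log_path) < offset:  # rotated or truncated
        offset = 0
    with open(log_path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n") + 1  # leave a half-written last line for next time
    with open(state_path, "w") as f:
        f.write(str(offset + end))
    return data[:end].decode("utf-8", errors="replace").splitlines()
```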

Monitor nginx

Why monitor nginx? I don't know yet; there have never been problems with it. But let it be, for statistics. I tried the ZTC template set, but I really don't like the Python processes flickering in memory at 10 MB each. I want it native, I want bash! Most importantly, I want to collect all the parameters in a single request. That is exactly what I aimed for with every service: minimum server load, maximum parameters.

You can find plenty of similar scripts. Since I approached web server monitoring as a whole, I am posting my version.

Teach nginx to serve a status page by adding a server block for localhost:

server {

listen localhost;

server_name status.localhost;

keepalive_timeout 0;

allow 127.0.0.1;

deny all;

location /server-status {

stub_status on;

}

access_log off;

}

Remember to apply the changes:

service nginx reload
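The stub_status page is a short plain-text report, so a single HTTP request returns every counter at once. Below is a minimal Python sketch of parsing it. The dictionary keys are my own naming and not necessarily the item keys used in nginx.sh. The sample follows the usual stub_status layout with made-up numbers. A ValueError corresponds to the "nginx responds with rubbish" case described further down.

```python
import re


def parse_stub_status(text):
    """Parse nginx stub_status output into a dict of integers.

    Raises ValueError if the page does not look like stub_status output,
    e.g. when nginx answers with an error page instead.
    """
    active = re.search(r"Active connections:\s*(\d+)", text)
    totals = re.search(r"\n\s*(\d+)\s+(\d+)\s+(\d+)", text)
    states = re.search(r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)", text)
    if not (active and totals and states):
        raise ValueError("unexpected stub_status output")
    accepts, handled, requests = map(int, totals.groups())
    reading, writing, waiting = map(int, states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts, "handled": handled, "requests": requests,
        "reading": reading, "writing": writing, "waiting": waiting,
    }


SAMPLE = (
    "Active connections: 291 \n"
    "server accepts handled requests\n"
    " 16630948 16630948 31070465 \n"
    "Reading: 6 Writing: 179 Waiting: 106 \n"
)
print(parse_stub_status(SAMPLE))
```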

Add to /etc/zabbix/zabbix_agentd.d/user.conf

UserParameter=nginx.ping,/etc/zabbix/scripts/nginx.sh

Put nginx.sh into /etc/zabbix/scripts and, in the same folder, run:

chmod o+x nginx.sh

service zabbix-agent restart

If you did not install curl in the previous step, install it now:

yum install curl

Just in case, check that the SENDER and CURL variables in nginx.sh contain the correct paths.
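The SENDER variable hints at how "one request, all parameters" works. The script probably reads the status page once and pushes the values with zabbix_sender, instead of having the agent poll each value separately. That reading of nginx.sh is my assumption, since the script is only in the archive. Here is a minimal Python sketch of preparing a zabbix_sender input file, which takes one "<host> <key> <value>" line per value. The nginx.* keys are hypothetical and would need matching items of type Zabbix trapper.

```python
def sender_lines(values, host="-", prefix="nginx"):
    """Format values as zabbix_sender input: one "<host> <key> <value>" per line.

    host "-" tells zabbix_sender to take the host name from the config file
    passed with -c. The "nginx.<name>" keys are hypothetical trapper items.
    """
    return "".join(
        f"{host} {prefix}.{name} {value}\n" for name, value in sorted(values.items())
    )


print(sender_lines({"active": 291, "requests": 31070465}), end="")
```

Written to a file, this can be sent with `zabbix_sender -c /etc/zabbix/zabbix_agentd.conf -i <file>`. With "-" as the host, the Hostname set in that config is used.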

Import nginx.xml into the Zabbix templates and link the template to the host.

Well, enjoy the pictures!

This monitor can report that nginx is not working or has started to respond too slowly. By default, a Warning is raised if the nginx response time has not dropped below 10 ms in any of the last 10 measurements. The monitor also reports when the server returns an incorrect status (nginx is in memory but responds with garbage).
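Stated precisely, the trigger warns when even the fastest of the last 10 responses took longer than 10 ms. In a Zabbix 2.2 trigger this is a min(#10) check. Here is a minimal Python sketch of the same evaluation; the template name and item key in the comment are hypothetical.

```python
def nginx_too_slow(response_times, threshold=0.010, window=10):
    """Return True if all of the last `window` response times exceed threshold.

    Times are in seconds. Roughly what a trigger like
    {Template App nginx:nginx.responsetime.min(#10)}>0.01
    checks (template name and item key are hypothetical).
    This sketch only fires once a full window of values exists.
    """
    recent = response_times[-window:]
    return len(recent) == window and min(recent) > threshold


print(nginx_too_slow([0.012] * 10))
print(nginx_too_slow([0.012] * 9 + [0.004]))
```

Using the minimum rather than the average means a few slow spikes do not raise the warning. Only consistently slow responses do.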

Monitor php-fpm

This is useful if you use a dynamic process pool (pm = dynamic in /etc/php-fpm.d/www.conf), whether by default or on purpose. The monitor can warn that the service is unavailable or has slowed down.

I tried to poll the service without nginx, but could not find an installed program that could talk to php-fpm directly. Let me know if you know of any options.

php-fpm may not be serving its status page yet; check:

[root@fliber ~]# vi /etc/php-fpm.d/www.conf

pm.status_path = /status
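With pm.status_path set, php-fpm answers /status with a plain-text page of "name: value" lines: pool, process manager, accepted conn, idle/active/total processes and so on. Here is a minimal Python sketch that turns that page into a dictionary. The sample is illustrative; the exact set of fields depends on the php-fpm version.

```python
def parse_fpm_status(text):
    """Parse php-fpm's plain-text status page ("name:   value" lines) into a dict.

    Only the first colon separates name from value, so values such as the
    start time keep their own colons. Numeric values become ints.
    """
    status = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if not sep:
            continue  # skip blank or malformed lines
        value = value.strip()
        status[name.strip()] = int(value) if value.isdigit() else value
    return status


sample = """pool:                 www
process manager:      dynamic
start time:           01/Aug/2014:10:00:00 +0400
accepted conn:        1234
listen queue:         0
idle processes:       4
active processes:     1
total processes:      5
max children reached: 0
"""
status = parse_fpm_status(sample)
print(status["process manager"], status["active processes"], status["total processes"])
# dynamic 1 5
```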

If you changed anything, reload php-fpm:

service php-fpm reload

Add to /etc/zabbix/zabbix_agentd.d/user.conf

UserParameter=php.fpm.ping,/etc/zabbix/scripts/php-fpm.sh

Put php-fpm.sh into /etc/zabbix/scripts and, in the same folder, run:

chmod o+x php-fpm.sh

service zabbix-agent restart

In php-fpm.sh, set the address of the FastCGI server (the listen parameter in /etc/php-fpm.d/www.conf):

[root@fliber ~]# vi /etc/zabbix/scripts/php-fpm.sh

LISTEN='127.0.0.1:9000'

If cgi-fcgi is not installed, install it (it comes with the fcgi package):

yum install fcgi
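cgi-fcgi lets you talk to php-fpm without nginx (see upd2). It connects to the FastCGI address and passes along the CGI variables from its environment. Here is a minimal Python sketch of that approach. It assumes the LISTEN address above and the commonly used SCRIPT_NAME, SCRIPT_FILENAME and REQUEST_METHOD variables; php-fpm.sh may set them differently. The helper functions work offline, but query_fpm needs cgi-fcgi and a running php-fpm.

```python
import os
import subprocess


def fcgi_env(path):
    """CGI variables commonly passed to cgi-fcgi to fetch php-fpm's /status or /ping."""
    return {
        "SCRIPT_NAME": path,
        "SCRIPT_FILENAME": path,
        "REQUEST_METHOD": "GET",
        "QUERY_STRING": "",
    }


def split_cgi_response(raw):
    """Split a CGI response into (headers dict, body); header names are lower-cased."""
    for separator in ("\r\n\r\n", "\n\n"):
        head, found, body = raw.partition(separator)
        if found:
            break
    else:
        return {}, raw  # no header block at all
    headers = {}
    for line in head.splitlines():
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return headers, body


def query_fpm(listen="127.0.0.1:9000", path="/status"):
    """Fetch a php-fpm page directly over FastCGI, without nginx.

    Requires the cgi-fcgi binary (fcgi package) and a running php-fpm.
    """
    result = subprocess.run(
        ["cgi-fcgi", "-bind", "-connect", listen],
        env={**os.environ, **fcgi_env(path)},
        stdin=subprocess.DEVNULL,
        capture_output=True,
        text=True,
        timeout=10,
        check=True,
    )
    return split_cgi_response(result.stdout)[1]
```

On the monitored host, `print(query_fpm())` should print the same status page that nginx would serve.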

Import php-fpm.xml into the Zabbix templates and link the template to the host.

Monitor PostgreSQL

This is the main course! The chef cooked it the longest :)

pg_monz was chosen as the prototype: it is open source, maintained, covers many parameters and works with the latest PostgreSQL. For my use case, though, it has a fundamental drawback. I collect every parameter of a service, because I never know which one will “jump out” and when.

Once I had figured out pg_monz, I turned on collection of all parameters per database and per table, about 700 items in total. The server load went up 10 times! (With pgbouncer it would probably be less noticeable.) And that was with the parameters collected only once every 300 seconds. The reason is clear: for each parameter, psql is launched and runs a query, often against the same tables, just for different fields. In the end, only the field and table names are left of pg_monz. Give it a try!
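Here is the arithmetic behind the load jump. About 700 items every 300 seconds means roughly 2.3 psql processes started per second, each opening its own connection. Fetching a whole row of pg_stat_database counters in one query and sending them in one batch avoids that. Below is a minimal Python sketch of the batching step. It assumes the rows come from a single query such as `SELECT datname, xact_commit, xact_rollback FROM pg_stat_database`. The psql.* item keys are hypothetical, and this is not necessarily how the archive's scripts do it.

```python
def db_sender_lines(rows, columns, host="-"):
    """Turn one pg_stat_database result set into zabbix_sender input lines.

    rows: tuples (datname, value1, value2, ...) from a single query;
    columns: names of the value columns, in the same order.
    Item keys like psql.xact_commit[dbname] are hypothetical.
    """
    lines = []
    for datname, *values in rows:
        for column, value in zip(columns, values):
            lines.append(f"{host} psql.{column}[{datname}] {value}\n")
    return "".join(lines)


rows = [("shop", 1500, 3), ("postgres", 20, 0)]
print(db_sender_lines(rows, ["xact_commit", "xact_rollback"]), end="")
```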

Add to /etc/zabbix/zabbix_agentd.d/user.conf

UserParameter=psql.ping[*],/etc/zabbix/scripts/psql.sh $1 $2 $3 $4 $5

UserParameter=psql.db.ping[*],/etc/zabbix/scripts/psql_db_stats.sh $1 $2 $3 $4 "$5"

UserParameter=psql.db.discovery[*],psql -h $1 -p $2 -U $3 -d $4 -t -c "select '{\"data\":['||string_agg('{\"{#DBNAME}\":\"'||datname||'\"}',',')||' ]}' from pg_database where not datistemplate and datname~'$5'"

UserParameter=psql.t.discovery[*],/etc/zabbix/scripts/psql_table_list.sh $1 $2 $3 $4 "$5" "$6"
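The psql.db.discovery line builds Zabbix low-level discovery JSON by concatenating strings inside SQL. For comparison, here is a minimal Python sketch that produces the same {"data":[{"{#DBNAME}":...}]} structure with a JSON library. The input list stands in for the non-template rows of pg_database. Python's re stands in for PostgreSQL's ~ operator, which gives the same results for simple patterns.

```python
import json
import re


def db_discovery(datnames, pattern="."):
    """Build Zabbix low-level discovery JSON for database names matching pattern.

    Like the SQL version, the pattern is searched anywhere in the name;
    Python's re is used as a stand-in for PostgreSQL's regex engine.
    """
    regex = re.compile(pattern)
    data = [{"{#DBNAME}": name} for name in datnames if regex.search(name)]
    return json.dumps({"data": data})


# datnames would come from: SELECT datname FROM pg_database WHERE NOT datistemplate;
print(db_discovery(["postgres", "shop", "shop_test"], "^shop$"))
# {"data": [{"{#DBNAME}": "shop"}]}
```

The library version escapes quotes in names and returns {"data": []} when nothing matches. In the SQL version, string_agg over zero rows yields NULL, so the query prints an empty line instead.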

Put psql*.sh into /etc/zabbix/scripts and, in the same folder, run:

chmod o+x psql*.sh

service zabbix-agent restart

Just in case, check that the PSQLC variable in psql.sh and psql_db_stats.sh contains the correct path to psql.

Import psql.xml into the Zabbix templates. If you do not plan to collect data on individual databases and tables, disable the “PSQL DB list” and “PSQL table list” discovery rules on the “Discovery” tab right away. If you do, first set the {$PGTBL_REGEXP} macro on the host's “Macros” tab to the name of the table you want to monitor in detail. Although, most likely, at first you will want to see all the tables :)

Then link the template to the host and watch the data come in...

All template parameters (inherited from pg_monz) and their default values are listed on the template's “Macros” tab. Here is my attempt at describing them:

| Macro | Default | Description |
|---|---|---|
| {$PGDATABASE} | postgres | Name of the database to connect to |
| {$PGHOST} | 127.0.0.1 | PostgreSQL host as seen from the Zabbix agent (127.0.0.1 if on the same machine) |
| {$PGLOGDIR} | /var/lib/pgsql/9.3/data/pg_log | Directory containing the PostgreSQL logs |
| {$PGPORT} | 5432 | PostgreSQL port number |
| {$PGROLE} | postgres | User name for connecting to PostgreSQL |
| {$PGDB_REGEXP} | . (all databases) | Database name for collecting detailed statistics* |
| {$PGTBL_REGEXP} | . (all tables) | Table name for collecting detailed statistics* |
| {$PGCHECKPOINTS_THRESHOLD} | 10 | The trigger fires if the number of checkpoints exceeds this threshold |
| {$PGCONNECTIONS_THRESHOLD} | 2 | The trigger fires if the average number of sessions over the last 10 minutes exceeds this threshold |
| {$PGDBSIZE_THRESHOLD} | 1073741824 | The trigger fires if the database size exceeds this limit, in bytes (the default is 1 GiB) |
| {$PGTEMPBYTES_THRESHOLD} | 1048576 | The trigger fires if the rate of writes to temporary files over the last 10 minutes exceeds this value, in bytes (the default is 1 MiB) |
| {$PGCACHEHIT_THRESHOLD} | 90 | The per-database trigger fires if the average cache hit ratio over the last 10 minutes falls below this threshold |
| {$PGDEADLOCK_THRESHOLD} | 0 | The trigger fires as soon as the number of deadlocks exceeds this limit |
| {$PGSLOWQUERY_SEC} | 1 | A query running longer than this many seconds counts as slow |
| {$PGSLOWQUERY_THRESHOLD} | 1 | The trigger fires if the average number of slow queries over the last 10 minutes exceeds this threshold |

* The value is a regular expression, for example:

`org` - all tables and schemas whose names contain the substring org

`\.(organization|resource|okved)$` - tables named organization, resource or okved in any schema

`^msn\.` - all tables in the msn schema

So {$PGDB_REGEXP} and {$PGTBL_REGEXP} are not plain names. They are patterns that are searched for in the names of all databases and in the schema-qualified table names.

Not every regular expression will work, though. By default, Zabbix accepts only patterns that contain none of the characters \ ' " ` * ? [ ] { } ~ $ ! & ; ( ) < > | # @ and no line feed (0x0a). To lift this restriction, set the following in /etc/zabbix/zabbix_agentd.conf:

UnsafeUserParameters=1

Regular expression syntax (PostgreSQL 9.3 documentation): www.postgresql.org/docs/9.3/static/functions-matching.html#FUNCTIONS-POSIX-REGEXP
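Before putting the example patterns into {$PGTBL_REGEXP}, you can try them and see which ones require UnsafeUserParameters=1. Here is a minimal Python sketch for that. The table names are made up, and Python's re stands in for PostgreSQL's regex engine, which behaves the same for patterns this simple. The forbidden-character set is the one listed above.

```python
import re

# Characters Zabbix rejects in user parameter arguments unless
# UnsafeUserParameters=1 (the list above), plus the line feed.
UNSAFE_CHARS = set("\\'\"`*?[]{}~$!&;()<>|#@\n")


def needs_unsafe(pattern):
    """True if the pattern contains a character Zabbix refuses by default."""
    return any(ch in UNSAFE_CHARS for ch in pattern)


# Made-up schema-qualified table names.
tables = ["public.organization", "public.okved", "public.orgs_log", "msn.users"]
for pattern in ("org", r"\.(organization|resource|okved)$", r"^msn\."):
    matches = [t for t in tables if re.search(pattern, t)]
    print(f"{pattern:35} unsafe={needs_unsafe(pattern)} {matches}")
```

Of the three examples, only `org` (and the default `.`) works without UnsafeUserParameters=1.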

If you do not see the “PSQL error log” item, the {$PGLOGDIR} path is most likely wrong. Find the file “postgresql-Sun.log” on the agent machine and put the directory that contains it into the macro.
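The {$PGCACHEHIT_THRESHOLD} trigger in the macro table is based on the buffer cache hit ratio. Below is a minimal Python sketch of one plausible way to compute that ratio from the cumulative blks_hit and blks_read counters of pg_stat_database, using per-poll deltas, and to check a 10-minute average against the threshold. How psql.xml actually defines the item is not shown here, so treat this as an assumption.

```python
def cache_hit_pct(blks_hit, blks_read):
    """Buffer cache hit ratio in percent from pg_stat_database counter deltas.

    blks_hit: blocks found in shared buffers; blks_read: blocks that had to
    be read (from disk or the OS cache). Returns 100.0 when nothing was read.
    """
    total = blks_hit + blks_read
    return 100.0 if total == 0 else 100.0 * blks_hit / total


def cache_hit_alarm(samples, threshold=90.0):
    """True if the average hit ratio over the samples is below threshold.

    samples: (blks_hit, blks_read) deltas, e.g. one per poll over 10 minutes.
    """
    ratios = [cache_hit_pct(hit, read) for hit, read in samples]
    return bool(ratios) and sum(ratios) / len(ratios) < threshold


print(cache_hit_alarm([(80, 20), (85, 15)]))
```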

Monitor php-opcache

As is customary in the best houses: dessert!

This article covers the entire chain from the user's request to the data, except for one node: PHP itself. To open that box a little, let's collect statistics from the opcache accelerator built into PHP 5 (since 5.5) using the opcache_get_status function.

Add to /etc/zabbix/zabbix_agentd.d/user.conf

UserParameter=php.opc.ping,/etc/zabbix/scripts/php-opc.sh

UserParameter=php.opc.discovery,/etc/zabbix/scripts/php-opc.sh discover

Put php-opc.* into /etc/zabbix/scripts and, in the same folder, run:

chmod o+r php-opc.php

chmod o+x php-opc.sh

service zabbix-agent restart

Set the address of the FastCGI server in php-opc.sh (the listen parameter in /etc/php-fpm.d/www.conf):

[root@fliber ~]# vi /etc/zabbix/scripts/php-opc.sh

LISTEN='127.0.0.1:9000'

If you did not install fcgi for php-fpm monitoring, install it now:

yum install fcgi

Import php-opc.xml into the Zabbix templates and link the template to the host.

While setting up the triggers, I halved the memory allocated to my opcache, so these statistics are worth watching. If the project on this server were not getting new modules, I could easily have cut it by a factor of 4.
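Whether to shrink the cache comes down to how much of the opcache memory is actually used. Here is a minimal Python sketch of that calculation, based on the memory_usage section of opcache_get_status(). It assumes the PHP side emits the status as JSON (for example via json_encode), which may not be what php-opc.php does. If the used share stays low over time, you can lower opcache.memory_consumption in php.ini.

```python
import json


def opcache_memory_report(status_json):
    """Summarise opcache memory from JSON-encoded opcache_get_status() output.

    Assumes the PHP side prints json_encode(opcache_get_status(false));
    used_memory, free_memory and wasted_memory are opcache_get_status() keys.
    """
    memory = json.loads(status_json)["memory_usage"]
    used = memory["used_memory"]
    free = memory["free_memory"]
    wasted = memory["wasted_memory"]
    total = used + free + wasted  # should match opcache.memory_consumption, in bytes
    return {
        "total_mb": total / (1024 * 1024),
        "used_pct": 100.0 * used / total,
        "wasted_pct": 100.0 * wasted / total,
    }


sample = json.dumps({"memory_usage": {
    "used_memory": 16 * 1024 * 1024,
    "free_memory": 48 * 1024 * 1024,
    "wasted_memory": 0,
}})
print(opcache_memory_report(sample))
```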

Conclusion

I don't know yet what other parameters are worth monitoring. These are the main services running on our VDS, and I'll share any new monitors, of course. It would be nice to add a template and script for MySQL, but there is no need for it yet. If you really need one, I'll do it :)

The approach is open to discussion. I have a separate set of add-ons for each service, and that is not bad either: take the script, add the config, import the template, and you're done.

If you bundle these monitors into a single package, you will of course need additional discovery for each service. There may be several instances of nginx, php-fpm or PostgreSQL, listening on ports or on sockets.

Versions

CentOS release 6.5 (Final)

ZabbixServer 2.2.4

ZabbixAgent 2.2.4

hdparm 9.43

nginx 1.6.1

php-fpm 5.3.3

PostgreSQL 9.3.4

php-opcache 5.5.15

Templates and scripts: http://www.uralati.ru/frontend/for_articles/2014-08-habr-zabbix-mons.zip

For monitoring php-fpm and php-opcache, the archive also contains versions of the scripts that work through curl (with the same templates). Their settings are described in comments inside the corresponding *_curl.sh scripts; see upd2.

upd1

You no longer need to set “HOST=Your_agent_addr” and “SERVER=Your_server_addr”.

However, you must set “Hostname=Your_agent_addr” in zabbix_agentd.conf; the “system.hostname” value will not do.

Outdated templates and scripts: download

Thanks to xenozauros for the tip.


upd2

php-fpm monitoring no longer requires adding the following to the nginx config:


server {...

location ~ ^/(status|ping)$ {

include /etc/nginx/fastcgi_params;

fastcgi_pass 127.0.0.1:9000;

fastcgi_param SCRIPT_FILENAME status;

}

...}

service nginx reload

php-opcache monitoring no longer requires adding the following to the nginx config:

server {...

location /opc-status {

include /etc/nginx/fastcgi_params;

fastcgi_pass 127.0.0.1:9000;

fastcgi_param SCRIPT_FILENAME /etc/zabbix/scripts/php-opc.php;

}

...}

service nginx reload

Instead, you need to set LISTEN to the php-fpm address in the scripts and install cgi-fcgi:

yum install fcgi

Switching from curl to fcgi halved the execution time of the monitoring requests.

Copyright notices and checks that the program paths are correct have also been added. When a script is run from the console, it may complain about such problems. When it is not run from the console, the error ends up in the server log as an invalid response.

Outdated templates and scripts: download