Lossless data compression algorithms

- Transfer

Part one is historical .

Existing data compression algorithms can be divided into two large classes - with and without losses. Lossy algorithms are typically used to compress images and audio. These algorithms allow you to achieve large degrees of compression due to selective loss of quality. However, by definition, it is impossible to restore the original data from a compressed result.

Lossless compression algorithms are used to reduce the size of the data, and work in such a way that it is possible to restore the data exactly as it was before compression. They are used in communications, archivers and some algorithms for compressing audio and graphic information. Further we will consider only lossless compression algorithms.

The basic principle of compression algorithms is based on the fact that information is partially repeated in any file containing nonrandom data. Using statistical mathematical models, you can determine the likelihood of a certain combination of characters repeating. After that, you can create codes for the selected phrases and assign the shortest codes to the most frequently repeated phrases. For this, various techniques are used, for example: entropy coding, repetition coding, and compression using a dictionary. With their help, an 8-bit character, or a whole line, can be replaced with just a few bits, thus eliminating unnecessary information.

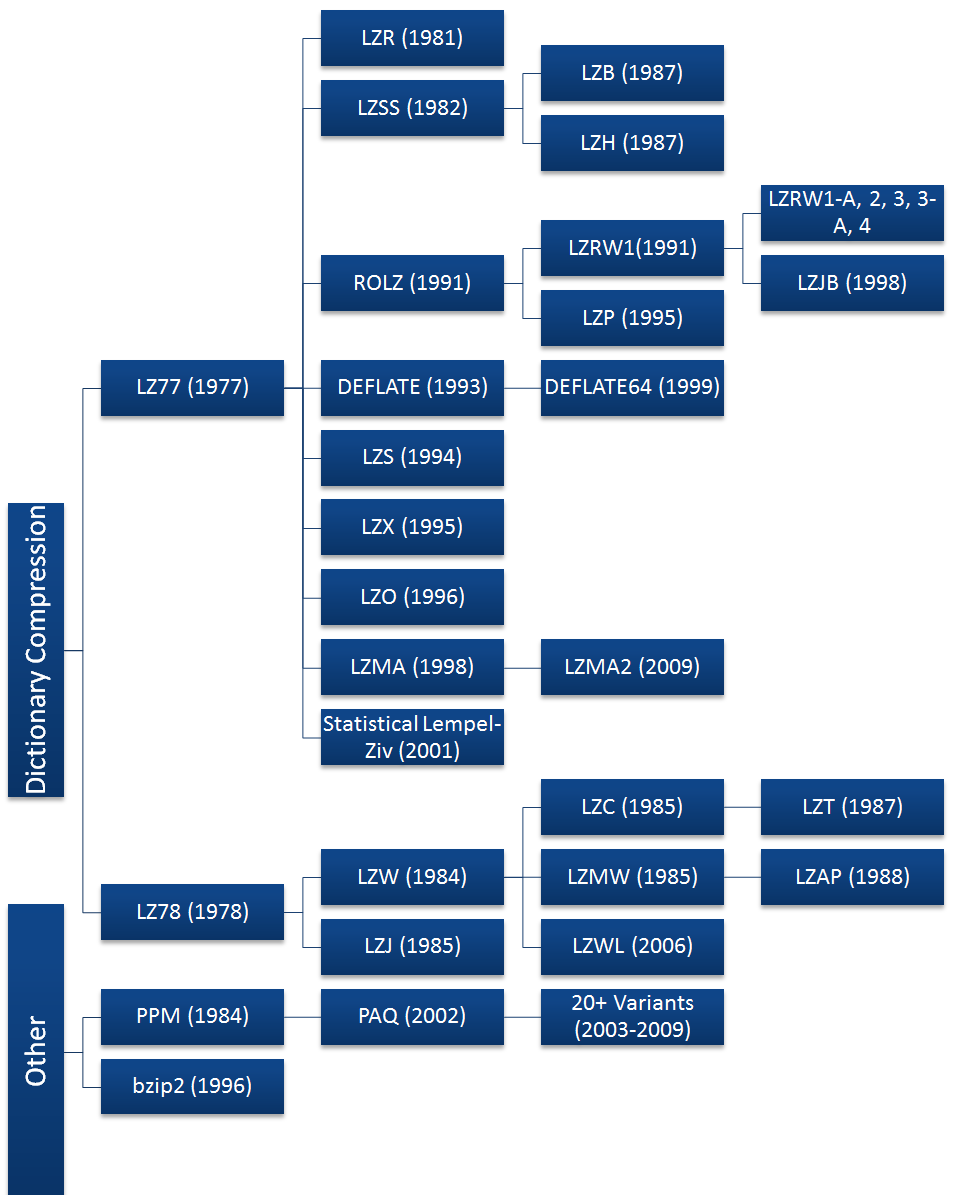

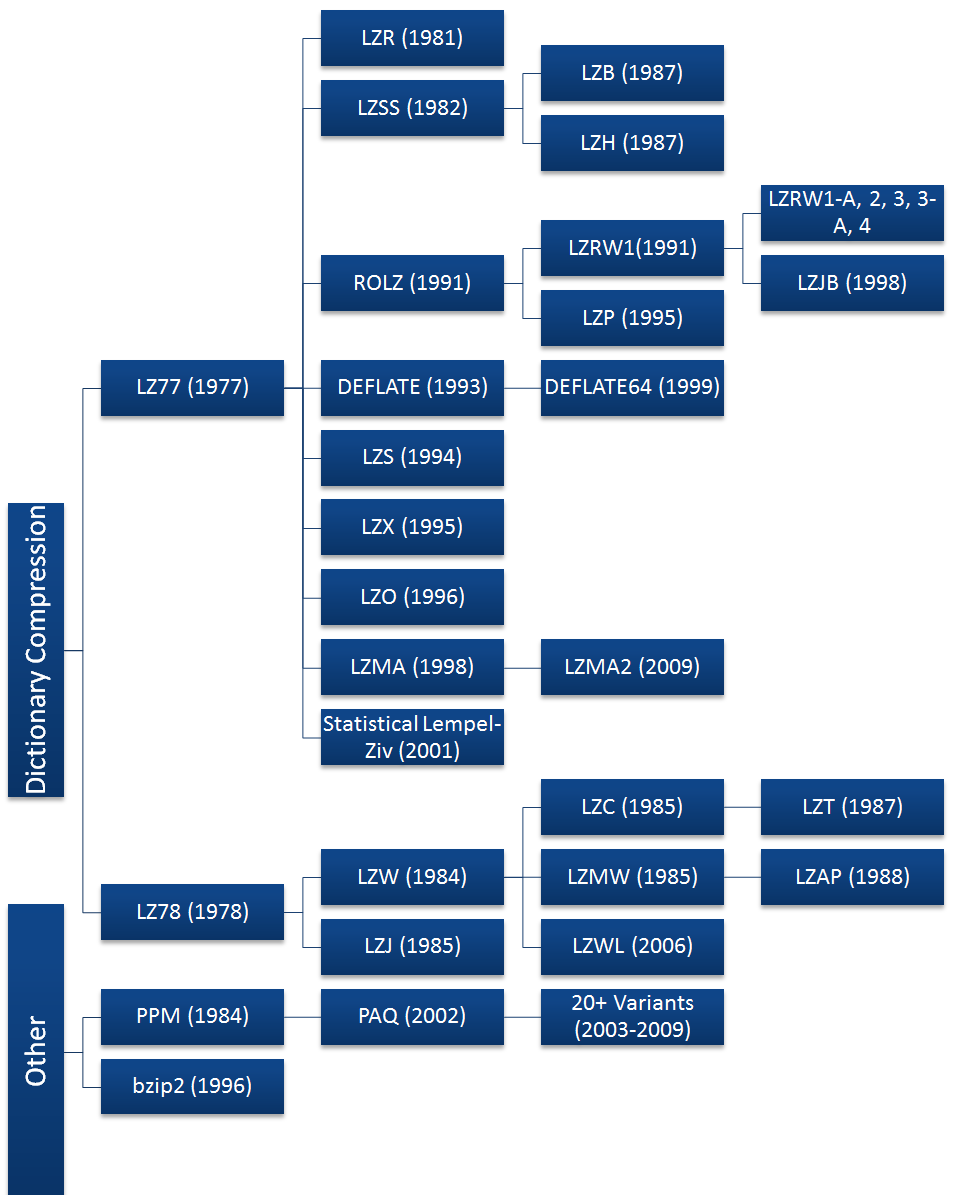

Algorithm hierarchy:

Although data compression has become widespread with the Internet and after the invention of Lempel and Ziv algorithms (LZ algorithms), several earlier compression examples can be cited. Morse, inventing his code in 1838, reasonably assigned the most frequently used letters in the English language, “e” and “t”, the shortest sequences (dot and dash, respectively). Shortly after the appearance of mainframes in 1949, the Shannon-Fano algorithm was invented, which assigned codes to the symbols in the data block based on the probability of their occurrence in the block. The probability of a character appearing in a block was inversely proportional to the length of the code, which made it possible to compress the data representation.

David Huffman was a student in a class with Robert Fano and chose the search for an improved method of binary data encoding as a study paper. As a result, he managed to improve the Shannon-Fano algorithm.

Earlier versions of the Shannon-Fano and Huffman algorithms used predefined codes. Later, for this, they began to use codes created dynamically based on data intended for compression. In 1977, Lempel and Ziv published their LZ77 algorithm, based on the use of a dynamically created dictionary (it is also called a "sliding window"). In 78, they published the LZ78 algorithm, which first parses data and creates a dictionary, instead of creating it dynamically.

The LZ77 and LZ78 algorithms gained great popularity and caused a wave of improvers, of which DEFLATE, LZMA and LZX survived to this day. Most popular algorithms are based on LZ77, because the LZ78 derivative of LZ78 was patented by Unisys in 1984, after which they started trolling everyone and everyone, including even the use of GIF images. At this time, on UNIX, a variation of the LZW algorithm called LZC was used, and because of rights problems, their use had to be minimized. Preference was given to the DEFLATE algorithm (gzip) and the Burroughs-Wheeler transform, BWT (bzip2). Which was good, as these algorithms almost always outperform LZW in compression.

By 2003, the patent expired, but the train had already left and the LZW algorithm was preserved, perhaps, only in GIF files. Dominant are the algorithms based on LZ77.

In 1993, there was another battle of patents - when Stac Electronics discovered that its LZS algorithm was used by Microsoft in a disk compression program shipped with MS-DOS 6.0. Stac Electronics sued and they managed to win the case, as a result of which they received more than $ 100 million.

Large corporations used compression algorithms to store ever-increasing amounts of data, but the true spread of the algorithms occurred with the birth of the Internet in the late 80s. The channel capacity was extremely narrow. To compress data transmitted over the network, ZIP, GIF and PNG formats were invented.

Tom Henderson invented and released the first commercially successful ARC archiver in 1985 (System Enhancement Associates). ARC was popular among BBS users as she was one of the first to be able to compress several files into an archive, in addition, her sources were open. ARC used a modified LZW algorithm.

Phil Katz, inspired by the popularity of ARC, released PKARC in shareware format, which improved compression algorithms by rewriting them in Assembler. However, he was sued by Henderson and convicted. PKARC so openly copied ARC that sometimes typos in comments in the source code were even repeated.

But Phil Katz was not taken aback, and in 1989 he greatly changed the archiver and released PKZIP. After he was attacked already in connection with the patent for the LZW algorithm, he changed the basic algorithm to a new one, called IMPLODE. The format was again replaced in 1993 with the release of PKZIP 2.0, and the replacement was DEFLATE. Among the new features was the function of dividing the archive into volumes. This version is still widely used, despite its venerable age.

Graphics Interchange Format (GIF) was created by CompuServe in 1987. As you know, the format supports lossless image compression and is limited to a palette of 256 colors. Despite all the efforts of Unisys, she was not able to stop the distribution of this format. It is still popular, especially in connection with animation support.

A little worried about patent issues, CompuServe launched the Portable Network Graphics (PNG) format in 1994. Like ZIP, she used the new trendy DEFLATE algorithm. Although DEFLATE was patented by Katz, he did not make any claims.

Now it is the most popular compression algorithm. In addition to PNG and ZIP, it is used in gzip, HTTP, SSL and other data transfer technologies.

Unfortunately, Phil Katz did not live up to the DEFLATE triumph; he died of alcoholism in 2000 at the age of 37. Citizens - excessive drinking is dangerous to your health! You may not live to see your triumph!

ZIP reigned unchallenged until the mid-90s, but in 1993 the simple Russian genius Evgeny Roshal came up with his own format and RAR algorithm. Its latest versions are based on PPM and LZSS algorithms. Now, ZIP is perhaps the most common of the formats, RAR - until recently, it was the standard for distributing various low-legal content over the Internet (due to increased throughput, files are more often distributed without archiving), and 7zip is used as the format with the best compression for an acceptable working time. In the UNIX world, the tar + gzip bundle is used (gzip is an archiver, and tar combines several files into one, because gzip does not know how).

Note perev.Personally, in addition to the above, I came across an ARJ archiver (Archived by Robert Jung), which was popular in the 90s in the BBS era. It supported multi-volume archives, and just like RAR after it, it was used to distribute games and other warez. There was also a HA archiver from Harri Hirvola, which used HSC compression (it didn’t find a clear explanation - only “limited context model and arithmetic coding”), which handled the compression of long text files.

In 1996, a version of the BWT open source bzip2 algorithm appeared, and quickly gained popularity. In 1999, the 7-zip program with the 7z format appeared. In compression it competes with RAR, its advantage is openness, as well as the ability to choose between bzip2, LZMA, LZMA2 and PPMd algorithms.

In 2002, another archiver, PAQ, appeared. Author Matt Mahoney used an improved version of the PPM algorithm using a technique called “contextual mixing”. It allows you to use more than one statistical model to improve prediction of the frequency of occurrence of characters.

Of course, God knows him, but apparently, the PAQ algorithm is gaining popularity due to a very good compression ratio (although it works very slowly). But due to the increase in computer speed, the speed of work becomes less critical.

On the other hand, the Lempel-Ziv – Markov LZMA algorithm is a compromise between the speed and compression ratio and can generate many interesting branches.

Another interesting technology is “substring enumeration” or CSE, which is still little used in programs.

In the next part, we will consider the technical side of the mentioned algorithms and the principles of their work.

Introduction

Existing data compression algorithms can be divided into two large classes - with and without losses. Lossy algorithms are typically used to compress images and audio. These algorithms allow you to achieve large degrees of compression due to selective loss of quality. However, by definition, it is impossible to restore the original data from a compressed result.

Lossless compression algorithms are used to reduce the size of the data, and work in such a way that it is possible to restore the data exactly as it was before compression. They are used in communications, archivers and some algorithms for compressing audio and graphic information. Further we will consider only lossless compression algorithms.

The basic principle of compression algorithms is based on the fact that information is partially repeated in any file containing nonrandom data. Using statistical mathematical models, you can determine the likelihood of a certain combination of characters repeating. After that, you can create codes for the selected phrases and assign the shortest codes to the most frequently repeated phrases. For this, various techniques are used, for example: entropy coding, repetition coding, and compression using a dictionary. With their help, an 8-bit character, or a whole line, can be replaced with just a few bits, thus eliminating unnecessary information.

History

Algorithm hierarchy:

Although data compression has become widespread with the Internet and after the invention of Lempel and Ziv algorithms (LZ algorithms), several earlier compression examples can be cited. Morse, inventing his code in 1838, reasonably assigned the most frequently used letters in the English language, “e” and “t”, the shortest sequences (dot and dash, respectively). Shortly after the appearance of mainframes in 1949, the Shannon-Fano algorithm was invented, which assigned codes to the symbols in the data block based on the probability of their occurrence in the block. The probability of a character appearing in a block was inversely proportional to the length of the code, which made it possible to compress the data representation.

David Huffman was a student in a class with Robert Fano and chose the search for an improved method of binary data encoding as a study paper. As a result, he managed to improve the Shannon-Fano algorithm.

Earlier versions of the Shannon-Fano and Huffman algorithms used predefined codes. Later, for this, they began to use codes created dynamically based on data intended for compression. In 1977, Lempel and Ziv published their LZ77 algorithm, based on the use of a dynamically created dictionary (it is also called a "sliding window"). In 78, they published the LZ78 algorithm, which first parses data and creates a dictionary, instead of creating it dynamically.

Rights Issues

The LZ77 and LZ78 algorithms gained great popularity and caused a wave of improvers, of which DEFLATE, LZMA and LZX survived to this day. Most popular algorithms are based on LZ77, because the LZ78 derivative of LZ78 was patented by Unisys in 1984, after which they started trolling everyone and everyone, including even the use of GIF images. At this time, on UNIX, a variation of the LZW algorithm called LZC was used, and because of rights problems, their use had to be minimized. Preference was given to the DEFLATE algorithm (gzip) and the Burroughs-Wheeler transform, BWT (bzip2). Which was good, as these algorithms almost always outperform LZW in compression.

By 2003, the patent expired, but the train had already left and the LZW algorithm was preserved, perhaps, only in GIF files. Dominant are the algorithms based on LZ77.

In 1993, there was another battle of patents - when Stac Electronics discovered that its LZS algorithm was used by Microsoft in a disk compression program shipped with MS-DOS 6.0. Stac Electronics sued and they managed to win the case, as a result of which they received more than $ 100 million.

Deflate's growing popularity

Large corporations used compression algorithms to store ever-increasing amounts of data, but the true spread of the algorithms occurred with the birth of the Internet in the late 80s. The channel capacity was extremely narrow. To compress data transmitted over the network, ZIP, GIF and PNG formats were invented.

Tom Henderson invented and released the first commercially successful ARC archiver in 1985 (System Enhancement Associates). ARC was popular among BBS users as she was one of the first to be able to compress several files into an archive, in addition, her sources were open. ARC used a modified LZW algorithm.

Phil Katz, inspired by the popularity of ARC, released PKARC in shareware format, which improved compression algorithms by rewriting them in Assembler. However, he was sued by Henderson and convicted. PKARC so openly copied ARC that sometimes typos in comments in the source code were even repeated.

But Phil Katz was not taken aback, and in 1989 he greatly changed the archiver and released PKZIP. After he was attacked already in connection with the patent for the LZW algorithm, he changed the basic algorithm to a new one, called IMPLODE. The format was again replaced in 1993 with the release of PKZIP 2.0, and the replacement was DEFLATE. Among the new features was the function of dividing the archive into volumes. This version is still widely used, despite its venerable age.

Graphics Interchange Format (GIF) was created by CompuServe in 1987. As you know, the format supports lossless image compression and is limited to a palette of 256 colors. Despite all the efforts of Unisys, she was not able to stop the distribution of this format. It is still popular, especially in connection with animation support.

A little worried about patent issues, CompuServe launched the Portable Network Graphics (PNG) format in 1994. Like ZIP, she used the new trendy DEFLATE algorithm. Although DEFLATE was patented by Katz, he did not make any claims.

Now it is the most popular compression algorithm. In addition to PNG and ZIP, it is used in gzip, HTTP, SSL and other data transfer technologies.

Unfortunately, Phil Katz did not live up to the DEFLATE triumph; he died of alcoholism in 2000 at the age of 37. Citizens - excessive drinking is dangerous to your health! You may not live to see your triumph!

Modern archivers

ZIP reigned unchallenged until the mid-90s, but in 1993 the simple Russian genius Evgeny Roshal came up with his own format and RAR algorithm. Its latest versions are based on PPM and LZSS algorithms. Now, ZIP is perhaps the most common of the formats, RAR - until recently, it was the standard for distributing various low-legal content over the Internet (due to increased throughput, files are more often distributed without archiving), and 7zip is used as the format with the best compression for an acceptable working time. In the UNIX world, the tar + gzip bundle is used (gzip is an archiver, and tar combines several files into one, because gzip does not know how).

Note perev.Personally, in addition to the above, I came across an ARJ archiver (Archived by Robert Jung), which was popular in the 90s in the BBS era. It supported multi-volume archives, and just like RAR after it, it was used to distribute games and other warez. There was also a HA archiver from Harri Hirvola, which used HSC compression (it didn’t find a clear explanation - only “limited context model and arithmetic coding”), which handled the compression of long text files.

In 1996, a version of the BWT open source bzip2 algorithm appeared, and quickly gained popularity. In 1999, the 7-zip program with the 7z format appeared. In compression it competes with RAR, its advantage is openness, as well as the ability to choose between bzip2, LZMA, LZMA2 and PPMd algorithms.

In 2002, another archiver, PAQ, appeared. Author Matt Mahoney used an improved version of the PPM algorithm using a technique called “contextual mixing”. It allows you to use more than one statistical model to improve prediction of the frequency of occurrence of characters.

The future of compression algorithms

Of course, God knows him, but apparently, the PAQ algorithm is gaining popularity due to a very good compression ratio (although it works very slowly). But due to the increase in computer speed, the speed of work becomes less critical.

On the other hand, the Lempel-Ziv – Markov LZMA algorithm is a compromise between the speed and compression ratio and can generate many interesting branches.

Another interesting technology is “substring enumeration” or CSE, which is still little used in programs.

In the next part, we will consider the technical side of the mentioned algorithms and the principles of their work.