NetApp SnapManager for Oracle & SAN Network

SnapManager is a set of NetApp utilities that automates taking Application-Consistent Backups (ACB) and Crash-Consistent Snapshots (CCS) on NetApp FAS series storage systems without stopping applications. It also covers archiving, backup, verification of copies and archives, cloning and attaching the cloned data to other hosts, restore, and other functions. All of this is done through a GUI by the application operator alone, without involving server, network, or storage specialists.

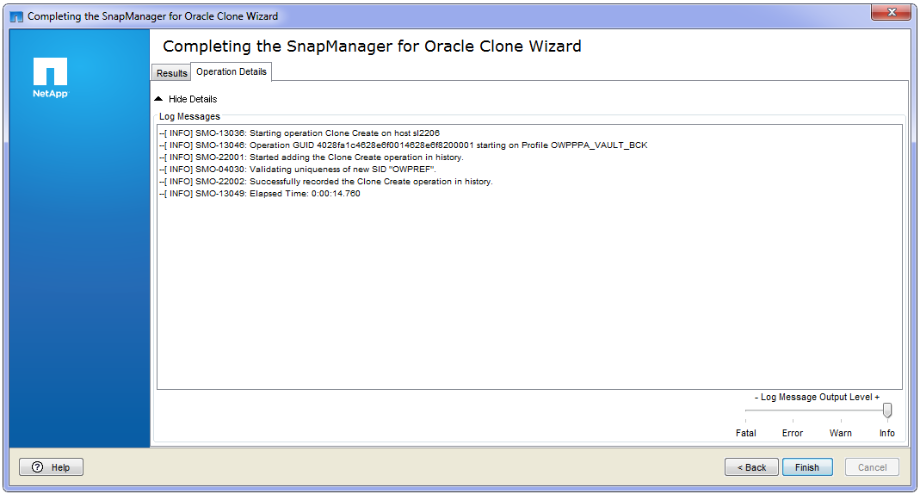

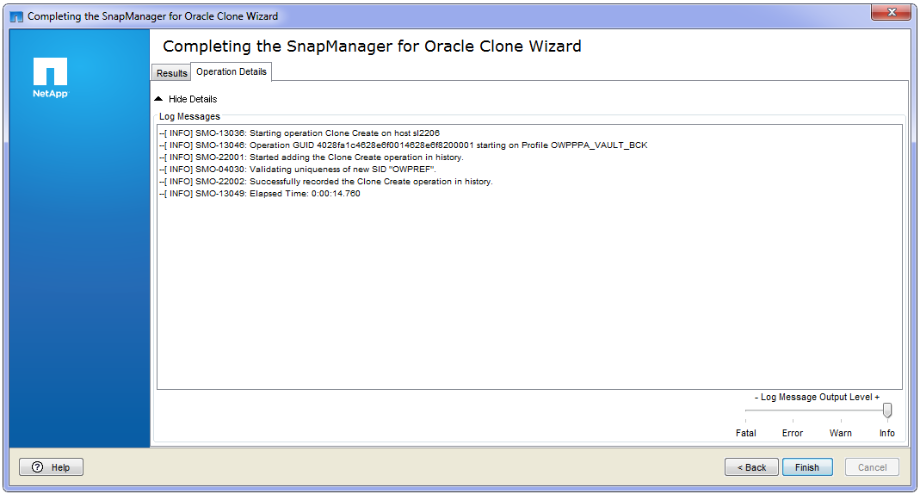

SnapManager for Oracle on Windows, Cloning Operation


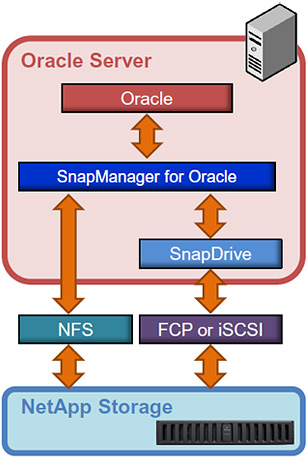

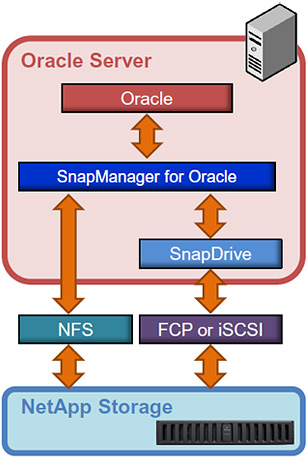


SMO integration with Oracle


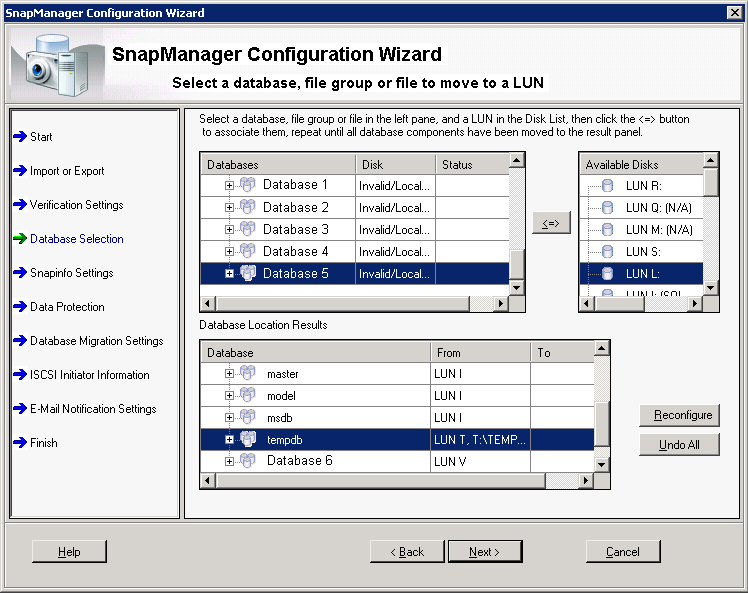

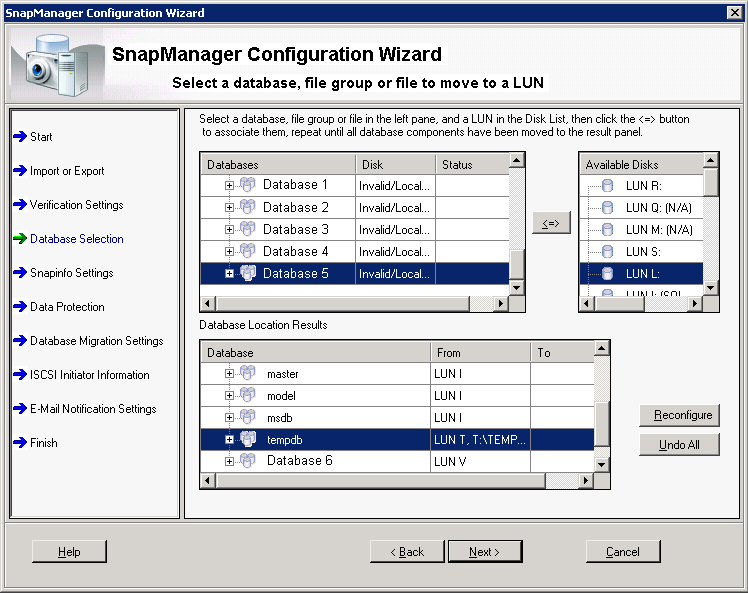

SnapManager for MS SQL, Moving Operation


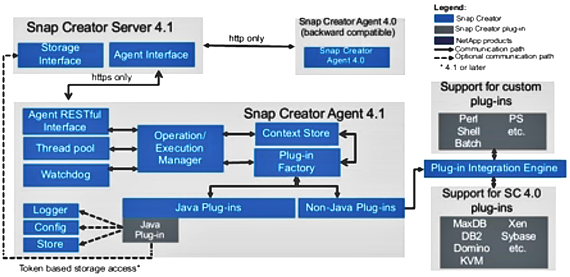

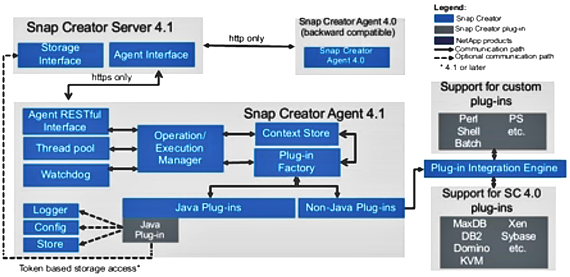


SnapCreator component interaction diagram


SnapManager for Oracle on Linux, Backing-up Operation


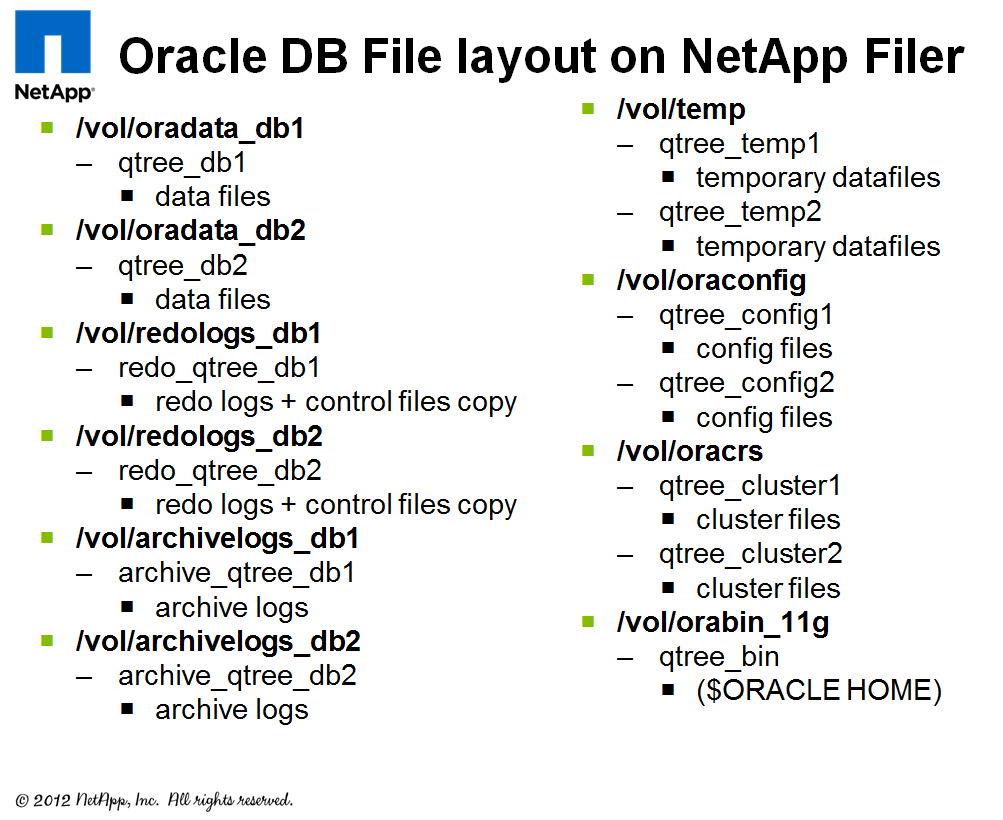

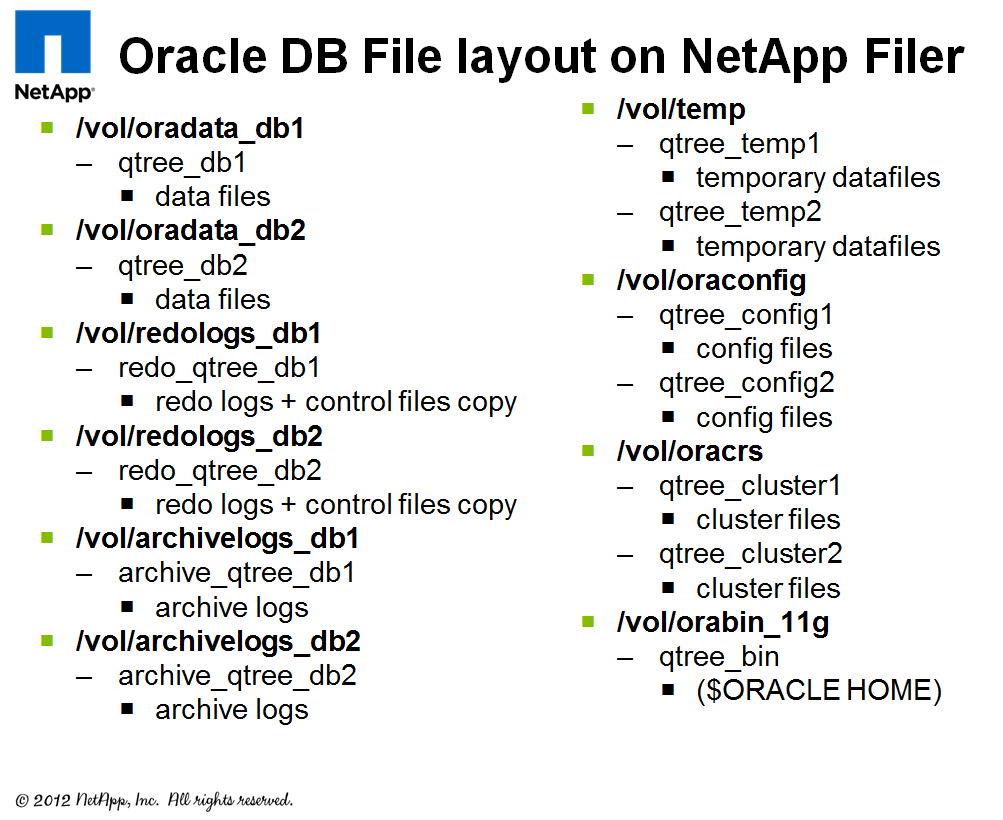


Why back up data with snapshots, and specifically with storage-level snapshots? Most conventional backup methods take a long time and consume resources: they load the host, saturate the links, and occupy space, which degrades services. The same applies to cloning large volumes of data for Dev/Test. A long backup process also widens the "time gap" between the live data and the backup copy, which increases the likelihood that the backup turns out to be unrecoverable. "Hardware" NetApp snapshots avoid these costs: they do not affect performance, they store only the "difference" rather than a full 100% copy (a kind of incremental backup that costs no time to take or assemble), and data can be transferred for backup and archiving in the form of snapshots.

SMO Components

The utility consists of several components: a server on a dedicated host or virtual machine, and agents installed on the database hosts. For full functionality SnapManager needs two features that are also licensed per controller: FlexClone and SnapRestore. In addition to the SnapManager agents, an instance of the SnapDrive utility (its license is included with SnapManager) must be installed in the OS of each database host. SnapDrive creates CCS by interacting with the OS, while SMO interacts with the application to create ACB, so the two complement each other. See also: the difference between ACB and CCS. Without purchased SnapRestore and FlexClone licenses, instant recovery and storage-side instant cloning and cataloging, respectively, will not be available.


SnapManager is licensed per controller, and the license also includes the other SnapManager products: for MS SQL, MS Exchange, MS SharePoint, MS Hyper-V, VMware vSphere, SAP, Lotus Domino, and Citrix XenServer.


SnapCreator

If you go down the path of "cost optimization" and decline to buy a SnapManager license, bear in mind that management will cost additional time and will require DBAs to coordinate with server admins, network administrators, and storage admins before they can perform the desired operation on the database. With SnapManager the same thing takes a couple of button clicks in the GUI, without involving other specialists or losing time.

Many SnapManager functions can be performed with the free SnapCreator utility, which also integrates with databases (as well as a large number of other applications) to take consistent ACB snapshots using the storage system. However, this utility lacks many other convenient database management functions, such as database cloning, in-place recovery, mapping a database clone to another host, automatic verification of operability and recovery from the archive, and so on.


Scripting

Most of the functionality missing in SnapCreator can be compensated for with scripting, which is now available through PowerShell cmdlets in the DataONTAP Toolkit, SnapCreator, OnCommand Unified Manager (OCUM), and many other useful utilities. Debugging such scripts to fit your business processes will, of course, take time.

A brief educational program on NetApp ideology

Integration

SMO integrates with Oracle 10g R2, 11g R1/R2, and 12c R1 (12.1.0.1), including RAC, RMAN, ASM, and Direct NFS. Everything written below generally also applies to SnapManager for MS SQL (SMSQL) and SnapCreator.


1) What does SMO back up?

SMO backs up only the following data:

- Data files

- Control files

- Archived redo logs (Archive Logs)

(See TR-3761, NetApp SnapManager 3.3.1 for Oracle, page 12, table 1.)

Redo Logs are not backed up by SMO; they can be backed up using SnapMirror (see below).

1.1) How Archive Logs are backed up, overwritten and restored:

SMO only backs up Archive Logs; it does not overwrite or restore them. RMAN is used to manage Redo Logs and Archive Logs. See also: how SMO works with Archive Logs.

2) Recommendations for preparation:

Recommendations for laying out FlexVols and LUNs for SMO generally coincide with the recommendations in "Oracle DB on NetApp".

First of all, turn off automatic snapshots on the FlexVols; snapshots will now be initiated by the SMO server. Temp Files must be placed in a dedicated FlexVol. RAW devices with LVM are not supported (TR-3633, Best Practices for Oracle Databases on NetApp Storage, August 2011, page 11).

Files of the same type from different databases can be grouped and stored in one FlexVol, while files of different types must be separated:

- Each LUN should be placed in a separate Qtree*. This is convenient for SnapMirror QSM, SnapVault, or NDMPCopy replication, because data is archived by Qtree* and, importantly, is always restored into a Qtree*. Consequently, even data that was backed up from outside a Qtree* (non-qtree data) can only be restored into a Qtree*. It is therefore convenient to always store data in a Qtree*, so that after a recovery you do not have to reconfigure access to the data at a new location.

- With each LUN in a separate Qtree*, you can assign a quota on its size and generate alerts for an individual Qtree*, rather than for the whole FlexVol, when it reaches a fill threshold. This is convenient for tracking LUN status by email without any additional utilities.

- Temp Files must be separated from all other data, because they change heavily during database operation and snapshots of such data occupy valuable storage space.

- Snapshots of Temp Files must be turned off.

- Temp Files from all databases can be placed in one FlexVol.

- With SAN, Archive Logs, Redo Logs, and Data Files must each be kept on a dedicated LUN. Do not mix database files of different types on one LUN; for example, do not keep Archive Logs and Redo Logs on the same LUN.

- The LUNs containing Archive Logs, Redo Logs, and Data Files must each be kept in a dedicated FlexVol. That is, for such LUNs the rule is: one FlexVol, one LUN (and do not forget about the Qtree*).

- If, for example, a database generates two Archive Log files and each of them lies on a separate LUN, then as an exception to the rule those LUNs can be stored in one FlexVol, i.e. several LUNs in one FlexVol. The same goes for other database files, including Data Files and Redo Logs.

- It is advisable to store a copy of the Control Files of each database along with the corresponding Redo Logs.

- Redo Logs from all instances must be placed in a separate FlexVol.

- Archive Logs from all database instances must be placed in a separate FlexVol.

- Oracle Cluster Registry (OCR) files and Voting Disk files from all instances can be placed in one FlexVol, each in a separate Qtree* on that FlexVol. Oracle strongly recommends storing OCR and Voting Disks on disk groups that do not store database files.

- Undo Tablespace must be stored together with Data Files.
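The separation rules in the list above can be checked mechanically before a database is handed over to SMO. The following is a minimal sketch, not a NetApp tool: the `Placement` record, the `check_layout` function, and all volume, LUN, and database names are hypothetical, and only three of the rules are covered (no mixed file types on one LUN, Temp Files in their own FlexVol, Undo Tablespace on a Data Files LUN).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    """Where one kind of database file lives (all names are hypothetical)."""
    database: str
    file_type: str  # one of: "data", "undo", "redo", "archive", "temp"
    flexvol: str
    lun: str


# Undo Tablespace is stored with Data Files, so both map to the same group.
GROUP = {"data": "data", "undo": "data", "redo": "redo",
         "archive": "archive", "temp": "temp"}


def check_layout(placements):
    """Return a list of human-readable rule violations (empty if none)."""
    groups_on_lun = defaultdict(set)
    groups_in_vol = defaultdict(set)
    data_luns = defaultdict(set)
    undo_luns = defaultdict(set)

    for p in placements:
        group = GROUP[p.file_type]
        groups_on_lun[(p.flexvol, p.lun)].add(group)
        groups_in_vol[p.flexvol].add(group)
        if p.file_type == "data":
            data_luns[p.database].add((p.flexvol, p.lun))
        elif p.file_type == "undo":
            undo_luns[p.database].add((p.flexvol, p.lun))

    problems = []
    # Rule: do not mix file types (redo, archive, data) on one LUN.
    for (vol, lun), groups in sorted(groups_on_lun.items()):
        if len(groups) > 1:
            problems.append(f"LUN {vol}/{lun} mixes file types: {sorted(groups)}")
    # Rule: Temp Files live in a FlexVol of their own.
    for vol, groups in sorted(groups_in_vol.items()):
        if "temp" in groups and len(groups) > 1:
            problems.append(f"FlexVol {vol} holds Temp Files together with other data")
    # Rule: Undo Tablespace sits on the same LUN as Data Files.
    for db, luns in sorted(undo_luns.items()):
        if not luns <= data_luns[db]:
            problems.append(f"{db}: Undo Tablespace is not on a Data Files LUN")
    return problems


layout = [
    Placement("ERP", "data", "vol_erp_data", "lun0"),
    Placement("ERP", "undo", "vol_erp_data", "lun0"),
    Placement("ERP", "temp", "vol_temp", "lun_erp_temp"),
    Placement("ERP", "redo", "vol_redo", "lun_erp_redo"),
    Placement("ERP", "archive", "vol_redo", "lun_erp_redo"),  # violates the rules
]
problems = check_layout(layout)
for line in problems:
    print(line)
```

The sample layout deliberately puts Archive Logs and Redo Logs on the same LUN, so the checker reports exactly that one LUN. The Qtree and "one FlexVol, one LUN" rules are left out to keep the sketch short.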

All of these requirements for separating Redo Logs, Archive Logs, Data Files, and Temp Files stem from the following:

- Temp Files are not needed for backup and recovery, but because they change heavily, snapshots of them will mercilessly consume space on the storage system, filling it with useless data.

- If Temp Files are stored together with other database file types that are backed up with snapshots, they eat up space (see the previous point), and once all the space is consumed, the LUNs in the FlexVol that ran out of space can go Offline.

- SnapMirror VSM replication uses snapshots, so if the database data is "mixed in one heap", the two previous points about Temp Files apply. On top of that, unnecessary data will constantly be sent to the remote system, loading the communication link.

- With SnapMirror QSM or SnapVault replication, the link-load issue can be worked around by placing the data that needs to be replicated in separate Qtree*s within one FlexVol and replicating only those. The snapshot issue (see the first two points) still cannot be avoided, because snapshots are taken of the entire FlexVol.

- On the other hand, the "one LUN, one FlexVol" logic comes from the possible need to restore not all databases but only one or a few. For recovery you can use SnapRestore with one of two approaches: SFSR or VBSR. VBSR is faster because it does not need to walk the WAFL structure; it works at the block level on the whole FlexVol, so all data inside it is restored. Thus, when several databases or their parts are stored in one FlexVol, you can "accidentally" roll another database back to an older version at the same time. Hence the recommendation: one FlexVol, one LUN, for all data that may need to be restored.

- With file access such as NFS or Direct NFS instead of block access, everything said above about separating database files into different FlexVols still holds; the only difference is that NFS is exported at the Qtree* or volume level instead of a LUN. File access also allows more granular recovery of database files using SFSR.
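The side effect of a volume-level restore described in the list above can be illustrated with a toy model. This is only a sketch: `collateral_of_volume_restore` and the volume and database names are hypothetical, and the function merely lists which other databases share the FlexVol that would be reverted.

```python
def collateral_of_volume_restore(volume_contents, volume, target_db):
    """List the databases, other than the target, that a block-level
    restore of the whole FlexVol would roll back as well."""
    return sorted(db for db in volume_contents[volume] if db != target_db)


# Hypothetical layout: which databases keep files in which FlexVol.
volume_contents = {
    "vol_shared": {"ERP", "CRM"},  # two databases share one FlexVol
    "vol_hr": {"HR"},              # one FlexVol per database
}

shared = collateral_of_volume_restore(volume_contents, "vol_shared", "ERP")
dedicated = collateral_of_volume_restore(volume_contents, "vol_hr", "HR")
print(shared)     # databases rolled back together with ERP
print(dedicated)  # nothing else is affected
```

With a dedicated FlexVol the list is empty, which is the situation the "one FlexVol, one LUN" recommendation aims for.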

2.1) Can one database span several controllers (FlexVols) when using SnapManager?

At the snapshot creation level this is handled by Consistency Groups, which are supported by DataONTAP 7.2 or higher. They are only required with ASM. Without ASM, Oracle itself takes care of the consistency of a backup of a database that is distributed across different controllers (FlexVols).

Since our case uses SAN, I want to draw your attention to the following nuances:

2.2) Thin Provisioning:

When Thin Provisioning is used with several LUNs in one FlexVol, the FlexVol can run out of space for all of its LUNs, and as a result every LUN in it drops off. DataONTAP takes them Offline so that they are not corrupted. To avoid this, it is recommended to use RedHat Enterprise Linux 6.2 or higher (or another modern OS) with support for Logical Block Provisioning as defined in the SCSI SBC-3 standard (often called SCSI Thin Provisioning), which "explains" to the OS that the LUN is actually thin and that its space has really run out, prohibiting write operations. The OS should then stop writing to such a LUN, and the LUN will not be taken Offline but will remain read-only for the OS (how the application responds to this is another question). This functionality also makes Space Reclamation possible. Modern operating systems therefore behave more sensibly with thin-provisioned LUNs.
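How exposed a thin-provisioned FlexVol is can be estimated with simple arithmetic. The sketch below is illustrative only: the function name `thin_overcommit` and the sample sizes are assumptions, and snapshots are ignored.

```python
def thin_overcommit(flexvol_gb, lun_sizes_gb, used_gb):
    """Compare what the thin LUNs promise to hosts with what the FlexVol holds."""
    provisioned_gb = sum(lun_sizes_gb)
    return {
        # Above 1.0 means the LUNs cannot all fill up at the same time.
        "overcommit_ratio": provisioned_gb / flexvol_gb,
        # Space still free in the FlexVol right now.
        "free_gb": flexvol_gb - used_gb,
        # Space missing if every LUN were written to its full size.
        "worst_case_shortfall_gb": max(0, provisioned_gb - flexvol_gb),
    }


# Hypothetical volume: 1000 GB FlexVol, three 400 GB thin LUNs, 650 GB written.
report = thin_overcommit(1000, [400, 400, 400], used_gb=650)
print(report)
```

A nonzero worst-case shortfall is the situation in which all LUNs in the FlexVol are at risk of going Offline together.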

2.3) SAN & SnapShots:

When snapshots are used (and with SMO they will be) and several LUNs are stored in one FlexVol, we can again run into the space problem, even if the LUNs are "thick". Namely: if snapshots do not have enough space in the allocated reserve, they begin to occupy space in the active file system as the LUNs change. In other words, you need to allocate the right amount of free space for the snapshots of the LUN(s). If too little is allocated, the LUN snapshots will consume the snapshot reserve and then the active file system, with the outcome described in the previous section. The situation is partially solved by an empirically chosen snapshot reserve, by settings for deleting older snapshots (snap autodelete), and by automatically growing the FlexVol (volume autogrow). Note, however, that the space will be released only after the LUNs in the FlexVol that actually ran out of space have gone Offline.

A large number of LUNs in one FlexVol also increases the likelihood that one day one of them starts to grow by leaps and bounds, not as planned, consuming all the space not only in the snapshot reserve but also in the active file system of the FlexVol. Hence the recommendation: either have one LUN per FlexVol, or store several LUNs in one FlexVol but make sure that all of them are of the same type and grow at the same rate. The second option requires setting up OCUM monitoring (it is free software and genuinely useful; monitoring never hurts) and watching what happens. OCUM can monitor essentially every performance indicator that the DataONTAP storage OS can provide. It can also send email alerts, which someone must actually read. A clear explanation of snapshots, LUNs, and Fractional reserve, and of why LUNs usually only grow, can be found here.
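The reserve arithmetic behind this can be sketched as follows. This is a deliberately pessimistic model built on assumptions that are not from the source: every day overwrites a different set of blocks, the LUNs are fully space-reserved, and Fractional reserve, autodelete, and autogrow are ignored. The function name and the numbers are hypothetical.

```python
def snapshot_headroom(vol_gb, reserve_pct, lun_gb, daily_change_pct, retention_days):
    """Estimate whether retained snapshots fit in the reserve or spill
    into the active file system of the FlexVol."""
    reserve_gb = vol_gb * reserve_pct / 100
    # Worst case: each retained day pins a distinct set of changed blocks.
    snapshot_gb = lun_gb * daily_change_pct / 100 * retention_days
    spill_gb = max(0.0, snapshot_gb - reserve_gb)
    active_free_gb = vol_gb - reserve_gb - lun_gb
    return {
        "reserve_gb": reserve_gb,
        "snapshot_gb": snapshot_gb,
        "spill_gb": spill_gb,
        # Negative headroom means the FlexVol runs out of space.
        "headroom_gb": active_free_gb - spill_gb,
    }


# Hypothetical FlexVol: 1000 GB, 20% reserve, 700 GB of LUNs, 5% daily change.
week = snapshot_headroom(1000, 20, 700, 5, retention_days=7)
fortnight = snapshot_headroom(1000, 20, 700, 5, retention_days=14)
print(week["headroom_gb"], fortnight["headroom_gb"])
```

In this example one week of retention still fits, while two weeks push the headroom below zero, which is why the reserve and retention have to be chosen together and then monitored.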

2.4) Space Reclamation:

With SAN and snapshots, OS support for Space Reclamation greatly improves the situation described in the previous section. Space Reclamation lets thin LUNs shrink on the storage side as the host deletes data on them, solving the "problem of constant growth of thin LUNs". Without Space Reclamation, thin LUNs only ever grow, and even for "thick" LUNs its absence results in "overgrown" snapshots that hold on to data blocks nobody has needed for a long time. For this reason, Space Reclamation is a must-have.
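The effect can be shown with a toy block model. It is a sketch under simplifying assumptions, not a description of how DataONTAP tracks blocks: the `ThinLun` class is hypothetical, and reclamation is modeled as the host immediately telling the storage which blocks it freed.

```python
class ThinLun:
    """Toy model: block numbers held by the host, the storage, and a snapshot."""

    def __init__(self):
        self.host_blocks = set()      # blocks the host file system uses
        self.storage_blocks = set()   # blocks allocated on the storage side
        self.snapshot_blocks = set()  # blocks pinned by the latest snapshot

    def write(self, blocks):
        self.host_blocks |= set(blocks)
        self.storage_blocks |= set(blocks)

    def delete(self, blocks, reclaim):
        self.host_blocks -= set(blocks)
        if reclaim:
            # With Space Reclamation the storage learns the blocks are free.
            self.storage_blocks -= set(blocks)

    def snapshot(self):
        # A snapshot pins whatever the storage believes is allocated.
        self.snapshot_blocks = set(self.storage_blocks)


def scenario(reclaim):
    lun = ThinLun()
    lun.write(range(100))
    lun.delete(range(50), reclaim=reclaim)
    lun.snapshot()
    return len(lun.host_blocks), len(lun.storage_blocks), len(lun.snapshot_blocks)


without_reclaim = scenario(reclaim=False)
with_reclaim = scenario(reclaim=True)
print(without_reclaim, with_reclaim)
```

In both runs the host ends up using 50 blocks, but without reclamation the storage still holds 100 blocks and the snapshot pins all 100, including the 50 that the host no longer needs.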

3) Are backups displayed in RMAN?

Backups created by SMO can be cataloged in RMAN; this configuration is optional. Cataloging makes block-level recovery (see the example in Appendix E) and tablespace point-in-time recovery (see the example in Appendix F) available. When SMO backups are cataloged in RMAN, the catalog must be located in a database other than the one being backed up. To register SMO backups in RMAN, you must use RMAN-enabled profiles (TR-3761, NetApp SnapManager 3.3.1 for Oracle, page 11). It is recommended to use one or the other: either RMAN or SMO.

4) Are there plans to back up Redo Logs with SMO in the future?

Redo Logs and Archive Logs are backed up using SnapMirror replication in synchronous, semi-synchronous, or asynchronous mode. SnapMirror relies on snapshots (without interacting with SMO). All other data is typically replicated asynchronously. SnapMirror is licensed per controller on both sides: the NetApp FAS that stores the backup copy (Secondary) and the main controller (Primary) that holds the data to be protected. See TR-3455, Database Recovery Using SnapMirror Async and Sync, chapter 12, page 17.

5) Can Undo Tablespace and Temp Files be kept together?

Undo Tablespace must be stored with Data Files so that it is backed up together with them. Undo Tablespace needs to be stored on the same LUN as the Data Files.

See also: what is the difference between Archive Logs, Redo Logs, and Undo Tablespace.

You may need a NetApp NOW ID to access some pages. If you take NetApp storage for testing, your distributor or integrator will help you download them.

* Qtree: for replication on NetApp FAS systems with DataONTAP Cluster-Mode (Clustered ONTAP), Qtrees are no longer required, since SnapVault and SnapMirror QSM are now able to replicate and restore data at the volume level.

I express my deep gratitude to shane54 for help in advising on the work of the Oracle database and for constructive criticism.

Please report errors in the text by private message.

Remarks and additions, on the other hand, are welcome in the comments.