Making your site work on touch devices

Touch screens on mobile phones, tablets, laptops, and desktops have opened up a whole new range of interactions for web developers. In this translated guide, Patrick Lauke covers the basics of working with touch events in JavaScript. All the examples discussed below are included in the accompanying archive.

Do we need to worry about touches?

With the advent of touch devices, a common question from developers is: “What do I need to do to make sure my site or application works on them?” Surprisingly, the answer is: nothing. By default, mobile browsers cope well with most sites that were not designed for touch. They handle not only static pages but also interactive, JavaScript-driven sites whose scripts are tied to mouse events such as hover.

To do this, browsers emulate mouse events on the device's touch screen. A simple test page (example1.html in the attached files) shows that even on a touch device, tapping a button triggers the following sequence of events: mouseover > mousemove > mousedown > mouseup > click.

These events fire in rapid succession, with virtually no delay between them. Note the single mousemove event: it ensures that any script triggered by mouse movement runs at least once.
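A test like the one described above can be reproduced by registering a listener for each mouse event and recording the order in which they fire. This is a sketch, not the code of example1.html: `recordEventOrder` is a hypothetical helper, and the `<button id="test">` it looks for in the browser is an assumption. Because it only relies on the standard EventTarget interface, it also runs outside a browser (for example in Node.js 15 or later) with manually dispatched events.

```javascript
// Hypothetical helper: record the order in which the given events fire on a target.
// Works with any EventTarget: a DOM element in the browser, or `new EventTarget()` elsewhere.
function recordEventOrder(target, eventNames) {
  const log = [];
  for (const name of eventNames) {
    target.addEventListener(name, (e) => log.push(e.type));
  }
  return log; // filled in as the events arrive
}

const MOUSE_EVENTS = ['mouseover', 'mousemove', 'mousedown', 'mouseup', 'click'];

// Browser usage (assumes a <button id="test"> exists on the page).
if (typeof document !== 'undefined' && document.getElementById('test')) {
  const button = document.getElementById('test');
  const log = recordEventOrder(button, MOUSE_EVENTS);
  // Registered after the recorder, so 'click' is already in the log when this runs.
  button.addEventListener('click', () => console.log(log.join(' > ')));
}
```

On a touch device, a single tap on the button should then print the emulated sequence listed above.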

If your site responds to mouse actions, in most cases its functionality will keep working on touch devices without any further changes.

Mouse Event Simulation Issues

Click delay

On touch screens, browsers deliberately introduce an artificial delay of about 300 ms between a touch action (for example, tapping a button or link) and the click actually firing. This delay lets users double-tap (for example, to zoom in and out) without accidentally activating other page elements.

This becomes a problem if you want your site to respond to user input as quickly as a native application. A noticeable lag after every tap is not what users expect.

Finger tracking

As noted above, the synthetic events dispatched by the browser include a mousemove event, but always only one. If the user moves a finger too far across the screen, no synthetic events are generated at all: the browser interprets the movement as a gesture such as scrolling.

This is a problem if your site relies on mouse movement, for example a drawing application.

Let's take a simple canvas application (example3.html). Rather than going through the full implementation, let's look at how the script responds to mouse movement.

```javascript
var posX, posY;

...

function positionHandler(e) {
  posX = e.clientX;
  posY = e.clientY;
}

...

canvas.addEventListener('mousemove', positionHandler, false);
```

If you test the example with a mouse, you can see that the pointer position is tracked continuously as the cursor moves. On a touch device, the application does not follow the movement of your finger; it only reacts to a tap, which triggers the single synthetic mousemove event.

Going deeper

To solve these problems, we need to work at a lower level of abstraction. Touch events were introduced in Safari for iOS 2.0 and, after being implemented in almost all browsers, were standardized in the W3C Touch Events specification. The new events defined in the standard are touchstart, touchmove, touchend, and touchcancel. The first three are the touch equivalents of mousedown, mousemove, and mouseup.

touchcancel fires when a touch interaction is interrupted, for example when the user moves a finger outside the current document. Logging the order in which touch and synthetic mouse events fire for a tap (example4.html) gives:

touchstart > [touchmove]+ > touchend > mouseover > (a single) mousemove > mousedown > mouseup > click

All the touch events take part: touchstart, possibly one or more touchmove events (depending on how cleanly the user manages to tap without moving their finger across the screen), and touchend. After that, the synthetic mouse events fire, followed by the final click.
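The tap sequence can also be written as a pattern and checked against a recorded list of event types. In the sketch below, `isEmulatedTapSequence` is a hypothetical name, and touchmove is treated as optional and repeatable (zero or more times), since a perfectly still tap may produce none.

```javascript
// Hypothetical checker: does a recorded list of event types match the emulated-tap order
// touchstart > [touchmove]+ > touchend > mouseover > mousemove > mousedown > mouseup > click?
// touchmove may appear zero or more times; every other event exactly once, in this order.
const TAP_PATTERN =
  /^touchstart( touchmove)* touchend mouseover mousemove mousedown mouseup click$/;

function isEmulatedTapSequence(eventTypes) {
  return TAP_PATTERN.test(eventTypes.join(' '));
}

// Example: a tap during which the finger moved slightly twice.
console.log(isEmulatedTapSequence([
  'touchstart', 'touchmove', 'touchmove', 'touchend',
  'mouseover', 'mousemove', 'mousedown', 'mouseup', 'click',
])); // true
```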

Detecting touch event support

A simple check determines whether the browser supports touch events:

```javascript
if ('ontouchstart' in window) {
  /* browser with Touch Events support */
}
```

This snippet works well in modern browsers. Older browsers have quirks and inconsistencies that you can only detect by jumping through hoops. If your application targets older browsers, try Modernizr and its feature tests, which help identify most of these inconsistencies.

When detecting touch event support, we need to be clear about what we are actually testing.

The snippet above only checks whether the browser understands touch events; it does not tell you that the page is being viewed on a touch screen.
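This distinction can be made explicit in code by reporting separate facts instead of a single "is touch" flag. The sketch below is an assumption, not part of the original examples: `describeTouchSupport` is a hypothetical helper, and besides the `'ontouchstart' in window` check it reads `navigator.maxTouchPoints`, a property from the Pointer Events specification that this article does not discuss. Even together, the two values say nothing about whether a mouse is also present, so neither should be used to switch mouse handling off. The window object is passed in so the logic can be tried with plain objects.

```javascript
// Hypothetical helper: report what feature tests can tell us, as separate facts.
// `win` is the global window object in a browser; a plain object can be passed for testing.
function describeTouchSupport(win) {
  // Does the browser expose the Touch Events API? (Same check as the snippet above.)
  const hasTouchEventsApi = 'ontouchstart' in win;
  // Pointer Events property: how many simultaneous touch points the hardware reports.
  // Missing or 0 means no touch points are reported.
  const maxTouchPoints = (win.navigator && win.navigator.maxTouchPoints) || 0;
  return { hasTouchEventsApi, maxTouchPoints };
}

if (typeof window !== 'undefined') {
  console.log(describeTouchSupport(window));
}
```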

Working around the click delay

If we log the sequence of events fired on a touch device together with timing information (example5.html), we see a delay of about 300 ms after the touchend event:

touchstart > [touchmove]+ > touchend > [300ms delay] > mouseover > (a single) mousemove > mousedown > mouseup > click
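To observe the delay yourself, you can timestamp touchend and the click that follows it. This is only a sketch in the spirit of example5.html, not its code: `measureClickDelay` is a hypothetical helper, the `<button id="test">` is an assumption, and timing uses `performance.now()`. On a browser that applies the delay, the reported value should be close to 300 ms; some newer browsers skip the delay in certain cases (for instance on pages with a `width=device-width` viewport), so the value may be much smaller there.

```javascript
// Hypothetical helper: report the time between touchend and the following click.
// `now` defaults to performance.now() and can be replaced, for example in tests.
function measureClickDelay(target, onMeasure, now = () => performance.now()) {
  let touchEndTime = null;
  target.addEventListener('touchend', () => {
    touchEndTime = now();
  });
  target.addEventListener('click', () => {
    if (touchEndTime !== null) {
      onMeasure(now() - touchEndTime); // delay in milliseconds
      touchEndTime = null; // pair each click with at most one touchend
    }
  });
}

// Browser usage (assumes a <button id="test"> exists on the page).
if (typeof document !== 'undefined' && document.getElementById('test')) {
  measureClickDelay(document.getElementById('test'), (ms) => {
    console.log('click fired ' + Math.round(ms) + ' ms after touchend');
  });
}
```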

So, if our scripts react to clicks, we can avoid the browser's default delay by reacting to touchend or touchstart instead. touchstart suits interface elements that should respond the moment the screen is touched, such as the controls in HTML games.

Again, we should not make false assumptions: support for touch events does not mean the page is being viewed on a touch device. Here is a common trick often mentioned in articles about mobile optimization:

```javascript
/* if touch is supported, listen to 'touchend', otherwise 'click' */
var clickEvent = ('ontouchstart' in window ? 'touchend' : 'click');
blah.addEventListener(clickEvent, function () { ... });
```

Although well-intentioned, this trick treats touch and mouse input as mutually exclusive. Listening for either clicks or touches, depending on browser support, causes problems on hybrid devices: a laptop with a touch screen supports touch events, so the script listens only for touchend, and any interaction with the mouse or trackpad stops working.

A more robust approach takes into account both types of events:

```javascript
blah.addEventListener('click', someFunction, false);
blah.addEventListener('touchend', someFunction, false);
```

The problem is that the function now runs twice on a touch device: once on touchend, and a second time when the synthetic mouse events and click fire. One way around this is to suppress the default synthetic mouse events with preventDefault(). We can also avoid repeating code by having the touchend handler simply trigger the click event:

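```javascript
blah.addEventListener('touchend', function (e) {
  // Cancel the synthetic mouse events and the delayed click for this touch
  e.preventDefault();
  // Fire the click (and therefore someFunction) immediately instead
  e.target.click();
}, false);

blah.addEventListener('click', someFunction, false);
```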

preventDefault() has a catch: it suppresses all of the browser's other default behavior as well. If we call it on the initial touch events, other behavior such as scrolling, long-press, or zooming is blocked too. Sometimes that is exactly what we want, but the method should be used with caution.

The code above is a simplified example. For a robust implementation, see FTLabs' FastClick.
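If calling preventDefault() is too blunt, a variation (not the approach described above) is to let both listeners call the same function and simply ignore a click that arrives shortly after a touchend. This is an assumption-laden sketch: `addTapOrClickListener` is a hypothetical helper, and the 500 ms window is an arbitrary choice that must be longer than the click delay (about 300 ms) yet short enough that a genuine mouse click soon after a tap is not swallowed. Like the original example, it does not distinguish a tap from a scroll that happens to end on the element.

```javascript
// Hypothetical helper: call `handler` once per tap or mouse click.
// A click arriving within `ghostWindowMs` of a touchend is treated as the browser's
// synthetic click for that tap and ignored. `now` can be replaced for testing.
function addTapOrClickListener(target, handler, ghostWindowMs = 500, now = () => Date.now()) {
  let lastTouchEnd = -Infinity; // no touch seen yet
  target.addEventListener('touchend', (e) => {
    lastTouchEnd = now();
    handler(e); // react immediately, without waiting for the delayed click
  });
  target.addEventListener('click', (e) => {
    if (now() - lastTouchEnd < ghostWindowMs) {
      return; // synthetic click following a touch: already handled
    }
    handler(e); // genuine mouse or trackpad click
  });
}
```

With the names from the example above, this would be called as `addTapOrClickListener(blah, someFunction)`.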

Motion tracking with touchmove

Armed with knowledge of touch events, let's return to the tracking example (example3.html) and see how it can be changed to track finger movements on the touch screen.

Before we see the specific changes in this script, we first need to understand how touch events differ from mouse events.

Anatomy of a touch event

According to the DOM Level 2 Events specification, handlers for mouse events receive a MouseEvent object as their parameter. Among other properties, this object includes the clientX and clientY coordinates, which our script uses to determine the current mouse position.

The interface is defined as follows:

```
interface MouseEvent : UIEvent {
  readonly attribute long screenX;
  readonly attribute long screenY;
  readonly attribute long clientX;
  readonly attribute long clientY;
  readonly attribute boolean ctrlKey;
  readonly attribute boolean shiftKey;
  readonly attribute boolean altKey;
  readonly attribute boolean metaKey;
  readonly attribute unsigned short button;
  readonly attribute EventTarget relatedTarget;
  void initMouseEvent(...);
};
```

A TouchEvent, by contrast, contains three different lists of touch points:

- touches: all touch points currently on the screen, regardless of which element they started on.
- targetTouches: only the touch points that started on the current target element, even if the user has since moved their fingers outside it.
- changedTouches: the touch points that have changed since the previous touch event.

Each of these lists is a TouchList, an array-like collection of individual Touch objects. This is where we find coordinate pairs similar to clientX and clientY:

```
interface Touch {
  readonly attribute long identifier;
  readonly attribute EventTarget target;
  readonly attribute long screenX;
  readonly attribute long screenY;
  readonly attribute long clientX;
  readonly attribute long clientY;
  readonly attribute long pageX;
  readonly attribute long pageY;
};
```
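The `identifier` property is what lets a script follow individual fingers from one event to the next, and `changedTouches` tells it which fingers a given event is about. The sketch below (a hypothetical `updateActiveTouches` function, not part of the original examples) keeps a Map from identifier to the latest coordinates: touchstart and touchmove add or update the changed points, while touchend and touchcancel remove them. It accepts plain event-like objects, so it can be tried outside a browser; the `canvas` element in the browser usage is an assumption.

```javascript
// Hypothetical helper: track every active finger by its Touch.identifier.
// `active` is a Map of identifier -> { x, y } that persists between events.
function updateActiveTouches(active, e) {
  // changedTouches holds only the touch points this particular event is about.
  for (let i = 0; i < e.changedTouches.length; i++) {
    const t = e.changedTouches[i];
    if (e.type === 'touchend' || e.type === 'touchcancel') {
      active.delete(t.identifier); // finger lifted, or touch interrupted
    } else {
      active.set(t.identifier, { x: t.clientX, y: t.clientY }); // new or moved finger
    }
  }
  return active;
}

// Browser usage (assumes a <canvas> element on the page).
if (typeof document !== 'undefined') {
  const canvas = document.querySelector('canvas');
  if (canvas) {
    const active = new Map();
    ['touchstart', 'touchmove', 'touchend', 'touchcancel'].forEach((type) => {
      canvas.addEventListener(type, (e) => updateActiveTouches(active, e), false);
    });
  }
}
```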

Using touch events to track your fingers

Back to the canvas example. We need to change the handler so that it responds to touch events as well as to mouse actions. Since we only want to track the movement of a single touch point, we simply take the clientX and clientY coordinates of the first entry in the targetTouches list.

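```javascript
var posX, posY;

function positionHandler(e) {
  if (e.targetTouches && e.targetTouches.length > 0) {
    // Touch event: follow the first finger that started on the canvas
    posX = e.targetTouches[0].clientX;
    posY = e.targetTouches[0].clientY;
    // Stop the browser from scrolling or zooming while the user draws
    e.preventDefault();
  } else if (typeof e.clientX === 'number') {
    // Mouse event (a coordinate of 0 is still a valid position)
    posX = e.clientX;
    posY = e.clientY;
  }
}

canvas.addEventListener('mousemove', positionHandler, false);
canvas.addEventListener('touchstart', positionHandler, false);
canvas.addEventListener('touchmove', positionHandler, false);
```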

Testing the modified script on a touch device (example6.html) shows that single-finger tracking now works reliably.

To extend the example to multitouch, we need to change the approach slightly. Instead of a single pair of coordinates, we keep a whole list of points and loop over it. This lets us track both a single mouse pointer and multiple touch points (example7.html).

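```javascript
var points = [];

function positionHandler(e) {
  if (e.targetTouches && e.targetTouches.length > 0) {
    // Touch event: keep every touch point that started on the canvas
    points = e.targetTouches;
    e.preventDefault();
  } else if (typeof e.clientX === 'number') {
    // Mouse event: a single point (points may be a read-only TouchList, so replace it)
    points = [e];
  }
}

function loop() {
  ...
  for (var i = 0; i < points.length; i++) {
    // ... use points[i].clientX and points[i].clientY ...
  }
}
```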

Roughly like this:

Performance considerations

Like mousemove, touchmove can fire at a high rate while fingers are moving. It is best to avoid running heavy code, such as complex calculations or full redraws, on every movement. This matters especially on older touch devices, which are less powerful than modern ones.

In our example we do the absolute minimum in the event handler: we store the latest mouse or touch point coordinates. The rest of the application code runs independently in a separate loop driven by setInterval.
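The split between a cheap event handler and an independent drawing loop can be sketched as follows. This is one possible organization, not the code of the examples: `createPointTracker` and its `draw` callback are hypothetical names, and the 16 ms interval (roughly 60 updates per second) is an arbitrary choice. The handler only overwrites the latest coordinates; the drawing work runs once per interval, however many events arrived in between.

```javascript
// Hypothetical sketch: the event handler only stores coordinates,
// while a separate timer does the (potentially expensive) drawing.
function createPointTracker(draw, intervalMs = 16) {
  let latest = []; // most recent coordinates, overwritten by every event
  let timer = null;

  function handleEvent(e) {
    if (e.targetTouches && e.targetTouches.length > 0) {
      // Touch: copy every point that started on the element
      latest = Array.from(e.targetTouches, (t) => ({ x: t.clientX, y: t.clientY }));
      if (typeof e.preventDefault === 'function') {
        e.preventDefault(); // keep the page from scrolling while drawing
      }
    } else {
      // Mouse: a single point
      latest = [{ x: e.clientX, y: e.clientY }];
    }
  }

  function tick() {
    draw(latest); // one draw per tick, however many events arrived since the last one
  }

  return {
    handleEvent,
    tick,
    start() { if (timer === null) timer = setInterval(tick, intervalMs); },
    stop() { clearInterval(timer); timer = null; },
  };
}

// Browser usage (assumes a <canvas> element on the page).
if (typeof document !== 'undefined') {
  const canvas = document.querySelector('canvas');
  if (canvas) {
    const tracker = createPointTracker((pts) => {
      // hypothetical drawing code for each { x, y } in pts goes here
    });
    ['mousemove', 'touchstart', 'touchmove'].forEach((type) => {
      canvas.addEventListener(type, tracker.handleEvent, false);
    });
    tracker.start();
  }
}
```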

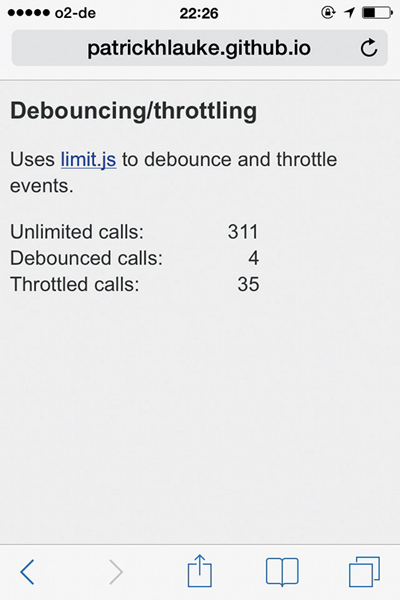

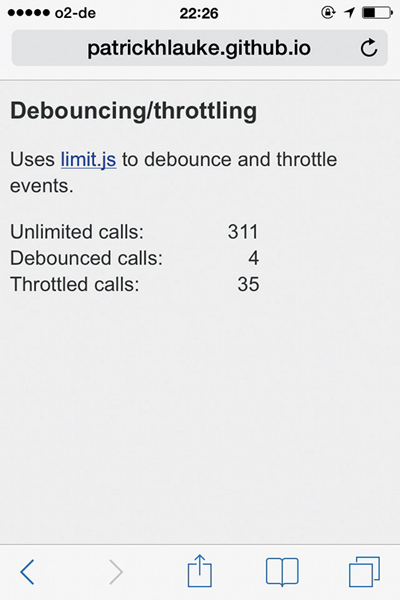

If the number of events your script has to handle is too high, it may be worth using a dedicated solution such as limit.js.
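The API of limit.js is not covered here. As a rough idea of what such a solution does, the sketch below is a generic leading-edge throttle written for this example; the name `throttle`, its parameters, and the placeholder `expensiveUpdate` are hypothetical and not limit.js's interface. The wrapped function runs at most once per `waitMs` milliseconds and calls in between are dropped. A handler that calls preventDefault() should not be throttled as a whole, because dropped calls would no longer block scrolling, so the usage below throttles only the costly part.

```javascript
// Generic leading-edge throttle (illustrative, not limit.js's API).
// Runs `fn` at most once per `waitMs` ms; `now` defaults to Date.now and can be replaced for testing.
function throttle(fn, waitMs, now = () => Date.now()) {
  let last = -Infinity;
  return function (...args) {
    const t = now();
    if (t - last >= waitMs) {
      last = t;
      return fn.apply(this, args);
    }
    // calls within waitMs of the last accepted call are dropped
  };
}

// Browser usage (assumes a <canvas> element on the page).
if (typeof document !== 'undefined') {
  const canvas = document.querySelector('canvas');
  // Hypothetical placeholder for costly work such as layout or physics updates.
  const expensiveUpdate = throttle((x, y) => { /* ... */ }, 50);
  if (canvas) {
    canvas.addEventListener('touchmove', (e) => {
      e.preventDefault(); // runs on every event, so scrolling stays blocked
      expensiveUpdate(e.targetTouches[0].clientX, e.targetTouches[0].clientY);
    }, false);
  }
}
```

A production version would usually also schedule one trailing call, so that the final position is not lost when the last event falls inside the dropped window.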

By default, browsers on touch devices handle scripts written for the mouse, but there are situations where you need to optimize your code for touch interaction. In this tutorial we have covered the basics of working with touch events in JavaScript. We hope you find it useful.