Creating reports on testing Android applications using Spoon and Emma

Testing is one of the most important steps in developing an application, and Android apps are no exception. Ideally, while writing code you already think about how it will be tested later. Now imagine that you need to test a project that is already completely written. More often than not, that is not so easy: the code was most likely not written with testability in mind. So you have to change it to make it testable without breaking any functionality (this is what is called refactoring nowadays). But after making such changes, can you quickly and confidently say that you did not break anything in the working code if it had no comprehensive tests? Unlikely. I believe that tests, namely UNIT tests,

As for Android, Google provides decent testing tools, but they cannot do everything. Testing is built on the JUnit framework, which of course has its pros and cons.

JUnit is a class library for unit testing, which checks the correctness of individual modules of the program source code. The advantage of this approach is that a single module is isolated from the others, so the programmer can make sure that the module works correctly on its own. But anyone who has written tests with this framework has probably found it quite inconvenient for testing the GUI. It is good practice to have code covered by tests and a report that shows the percentage of code they cover. Recently my projects have required me to write all kinds of tests, from load tests to GUI tests, and I would like to talk about the quirks I ran into and about how to create these reports. But first, about the main frameworks:

An approximate picture of how the main frameworks are used. In practice, everyone makes their own choice: some pick Robotium because they do not want to dig into the source code, others pick Espresso because of its intuitive interface.

Spoon

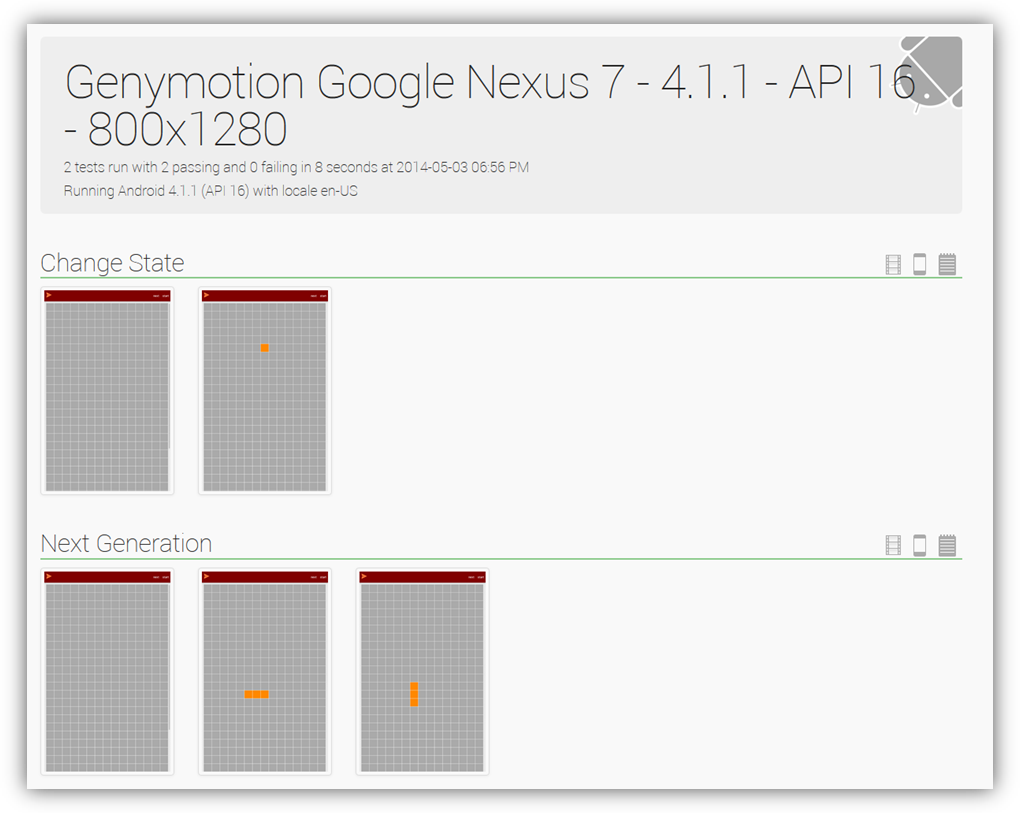

Spoon is a framework that takes screenshots of a device or emulator screen while tests are running and builds a report from them. In addition to screenshots, it attaches the test runner log to the report, and if a test fails, it shows the full stack trace, which is very convenient. To get a report, do the following:

- download Spoon-client and Spoon-runner;

- copy Spoon-client to the libs folder of the project with tests;

- in the same project, create a folder (for example, spoon);

- copy Spoon-runner to the created folder;

- create a bat-file with the following script:

call java -jar spoon-runner-1.1.1-jar-with-dependencies.jar --apk Path\to\your\project\bin\project.apk --test-apk Path\to\your\test-project\bin\tests.apk

With additional options you can filter which tests are run. For example, adding --size medium to the script runs only the tests with the “Medium” annotation.

Now the script will look like this:

call java -jar spoon-runner-1.1.1-jar-with-dependencies.jar --apk Path\to\your\project\bin\project.apk --test-apk Path\to\your\test-project\bin\tests.apk --size medium
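If the batch file is not convenient (for example, on a build server that is not running Windows), the same command line can be assembled from Java. The sketch below is only an illustration: the class name SpoonCommand and its build method are hypothetical, and the only options used are the ones shown in the script above.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: assembles the spoon-runner command line from the batch file. */
final class SpoonCommand {
    private SpoonCommand() {}

    /**
     * @param runnerJar path to the spoon-runner jar
     * @param apk       path to the application APK
     * @param testApk   path to the test APK
     * @param size      test size filter such as "medium", or null to run all tests
     */
    static List<String> build(String runnerJar, String apk, String testApk, String size) {
        List<String> cmd = new ArrayList<>();
        cmd.add("java");
        cmd.add("-jar");
        cmd.add(runnerJar);
        cmd.add("--apk");
        cmd.add(apk);
        cmd.add("--test-apk");
        cmd.add(testApk);
        if (size != null) {
            // Optional filter, same as adding "--size medium" to the script.
            cmd.add("--size");
            cmd.add(size);
        }
        return cmd;
    }

    public static void main(String[] args) {
        List<String> cmd = build(
                "spoon-runner-1.1.1-jar-with-dependencies.jar",
                "Path\\to\\your\\project\\bin\\project.apk",
                "Path\\to\\your\\test-project\\bin\\tests.apk",
                "medium");
        // Only prints the command. To run it, the list could be handed to
        // new ProcessBuilder(cmd).inheritIO().start().
        System.out.println(String.join(" ", cmd));
    }
}
```

Keeping the arguments in a list rather than one string avoids quoting problems when a path contains spaces.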

A full list of options can be found on the official Spoon page on GitHub. That's it. Now, when writing tests, just insert the following wherever you need a screenshot:

Spoon.screenshot(activity, "state_changed"),

where the second argument is the label shown above the screenshot. One more quirk: internally the label is checked with a regex, and a label that contains spaces throws an exception. Start the emulator or connect a phone, run the batch file, and if everything is done correctly you will see the report in the same folder:

The official example of the report from the developer is here.

The report from my project can be viewed here.

Not bad, is it? Another advantage of the “spoon” is that it runs tests simultaneously on all connected devices and collects the results from all of them in one report. The only, and probably significant, minus is that it does not capture dialogs in its screenshots, so you cannot see what a dialog showed during the test. And it still does not create a report on code coverage! Well, let's fix that.
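One practical note before moving on. Since a screenshot label with spaces throws an exception, it can help to normalize labels before passing them to Spoon.screenshot. The helper below is a hypothetical sketch, not part of Spoon; it assumes that only letters, digits, underscores and hyphens are safe in a label, which should be checked against the Spoon version you use.

```java
import java.util.regex.Pattern;

/** Hypothetical helper that turns a free-form label into a tag without spaces. */
final class ScreenshotTags {
    // Assumption: only letters, digits, '_' and '-' are accepted in a tag.
    private static final Pattern NOT_ALLOWED = Pattern.compile("[^a-zA-Z0-9_-]+");

    private ScreenshotTags() {}

    static String toTag(String label) {
        // Each run of disallowed characters (spaces included) becomes one underscore.
        String tag = NOT_ALLOWED.matcher(label.trim()).replaceAll("_");
        if (tag.isEmpty()) {
            throw new IllegalArgumentException("Label is blank");
        }
        return tag;
    }

    public static void main(String[] args) {
        System.out.println(toTag("state changed")); // prints state_changed
    }
}
```

In a test this would be used as Spoon.screenshot(activity, ScreenshotTags.toTag("state changed")).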

Emma

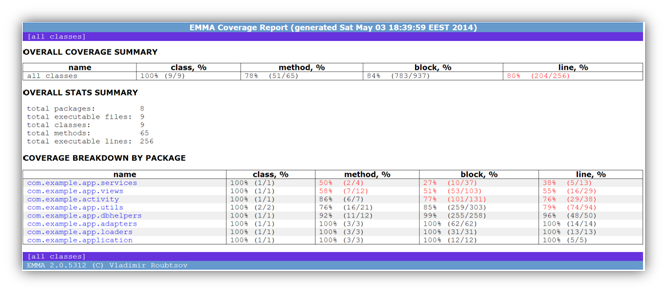

You must agree, the report looks decent at the very least.

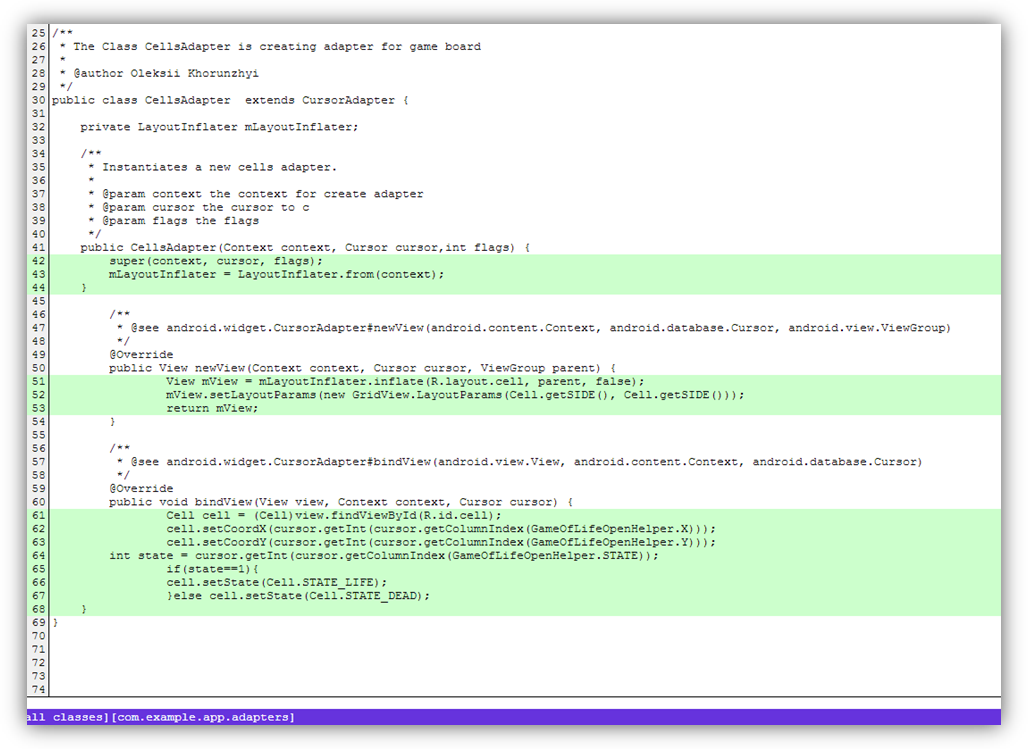

Example of code covered by tests:

and partially covered, respectively:

Full report in all formats.

EMMA is an open source toolkit for measuring and reporting test coverage in Java. The tool is built into the Android SDK, so developers can generate a report “out of the box”. Key features:

- classes can be instrumented offline (before they are loaded) or on the fly;

- supported coverage types: package, class, method, line, and basic block. It can also detect when a single line of source code is only partially covered;

- output report types: plain text, HTML, XML.
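To make the idea of a partially covered line concrete, here is a minimal sketch. The class InputCheck is hypothetical and exists only for illustration. The return statement holds two conditions on one source line, and the second one runs only when the first is true, so a test that passes only null executes just part of that line.

```java
/** Hypothetical class used only to illustrate partial line coverage. */
final class InputCheck {
    private InputCheck() {}

    static boolean isUsable(String text) {
        // One source line, two conditions: the right-hand side is evaluated
        // only when text is not null.
        return text != null && !text.isEmpty();
    }

    public static void main(String[] args) {
        // This "test" passes only null, so the !text.isEmpty() part never runs.
        // A block-level coverage tool would be expected to report the return
        // line as partially covered.
        System.out.println(isUsable(null)); // prints false
    }
}
```

Adding calls with an empty and a non-empty string, such as isUsable("") and isUsable("x"), exercises the rest of the line. How exactly a tool marks the line depends on how the compiler splits it into blocks.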

Building a project using Ant

Apache Ant is a build tool that turns a project's development layout into a deployable application. It is declarative: all the command line instructions used to build and deploy the application are expressed as simple XML elements. More details can be found here.

To walk through the build procedure we need a working project, MyProject, and a project with tests for it, MyProjectTests. You can read about the rules that must be followed when creating tests here.

First of all, when building a project with Ant, you need to prepare the projects that the application uses as libraries. If there are none, you can skip this step. For example, if the project uses a library such as "google_play_service_lib", you need to do the following:

- on the command line, go to the tools folder of the installed SDK (for example, D:\android\adt-bundle\sdk\tools) and execute:

android update lib-project -p MyLibProject

where MyLibProject is the path to the library used in the project. As a result, build.xml should appear in the project root and a message like this will appear in the console:

Updated local.properties

Updated file D:\Workspace\MyProject\build.xml

Updated file D:\Workspace\MyProjectTests\proguard-project.txt

After preparing all the libraries, you need to prepare the working project itself. To do this, run the following in the same folder:

android update project -p MyProject

where MyProject is the path to the working project. Naturally, AndroidManifest.xml should be in this folder. The command generates build.xml again, this time for the working project. You can set the project name with the -n option so that it is convenient to use later:

android update project -p MyProject -n NameForProject

What about the project with tests? Everything is similar and just as convenient. The command for the project with tests:

android update test-project -m ..\MyProject -p \MyProjectTests

where MyProject is the path to the working project, and MyProjectTests is the path to the project with tests.

Everything is ready! Note that at this step the libraries can cause problems. For example, the project may include jar libraries that are also bundled inside other libraries it depends on. Ant does not know what to do with the duplicates, and the build fails with an error. In other words, the same library included more than once in a project can already lead to an error.

To measure code coverage, add the emma target to the Ant command. Before starting, run the emulator or connect a device. The following command is executed on the command line from the root folder of the project with tests:
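One way to spot such duplicates before running Ant is to compare the jar files in the libs folders of the project and its library projects. The sketch below is a hypothetical helper written for this article, not part of the SDK. It assumes that duplicates carry the same file name, so the same library under differently named jars will not be found.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper: finds jar names that occur in more than one libs folder. */
final class DuplicateJars {
    private DuplicateJars() {}

    /** Maps each duplicated jar file name to the folders that contain it. */
    static Map<String, List<Path>> find(List<Path> libsDirs) throws IOException {
        Map<String, List<Path>> byName = new TreeMap<>();
        for (Path dir : libsDirs) {
            if (!Files.isDirectory(dir)) {
                continue; // a project without a libs folder has nothing to report
            }
            try (DirectoryStream<Path> jars = Files.newDirectoryStream(dir, "*.jar")) {
                for (Path jar : jars) {
                    String name = jar.getFileName().toString();
                    byName.computeIfAbsent(name, k -> new ArrayList<>()).add(dir);
                }
            }
        }
        // Keep only the names seen in at least two folders.
        byName.values().removeIf(dirs -> dirs.size() < 2);
        return byName;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java DuplicateJars MyProject\libs MyLibProject\libs
        List<Path> dirs = new ArrayList<>();
        for (String arg : args) {
            dirs.add(Paths.get(arg));
        }
        find(dirs).forEach((jar, where) -> System.out.println(jar + " found in " + where));
    }
}
```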

ant clean emma debug install test

During the tests, Emma generates the coverage.em file (the metadata) in the bin folder of the main project. After all the tests have run, it sets the necessary permissions, creates the coverage.ec file in the folder of the installed application, copies both files to the bin folder of the test project, and generates a report from them in the same folder.

The source code of my project with sample reports can be viewed on GitHub.
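If the report does not appear, a quick check is whether both coverage files actually reached the bin folder of the test project. The sketch below is a hypothetical helper that relies only on the two file names mentioned above. The default folder name bin used in main is an assumption about where the command is run from.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical check: which of the two EMMA files are absent from a folder. */
final class CoverageFiles {
    // File names as described in the text: metadata (.em) and runtime data (.ec).
    private static final String[] EXPECTED = {"coverage.em", "coverage.ec"};

    private CoverageFiles() {}

    static List<String> missing(Path binDir) {
        List<String> result = new ArrayList<>();
        for (String name : EXPECTED) {
            if (!Files.isRegularFile(binDir.resolve(name))) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Assumption: run from the test project root, so "bin" is its output folder.
        Path binDir = Paths.get(args.length > 0 ? args[0] : "bin");
        List<String> missing = missing(binDir);
        System.out.println(missing.isEmpty()
                ? "Both coverage files are present in " + binDir
                : "Missing in " + binDir + ": " + missing);
    }
}
```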

To summarize

Take your tests, even very well written ones. Can you tell just by looking at them how much of your code they cover? Is a green bar showing the overall result of your tests enough for you as a visual report? I think not. Delivering reports together with the work shows a specialist's level of competence, and all the more so when it comes to test automation. Writing the scripts will certainly take you some time, but believe me, it's worth it!