The logic of thinking. Part 12. Traces of memory

This series of articles describes a wave model of the brain that differs substantially from traditional models. Readers who have just joined are strongly encouraged to start from the first part.

An engram is the set of changes that occur in the brain at the moment of memorization. In other words, an engram is a memory trace. It is only natural that all researchers regard understanding the nature of engrams as a key task in studying the nature of thinking.

What makes this task difficult? Both an ordinary book and an external computer drive can be called memory: both store information. But storage alone is not enough. For information to be useful, one must be able to read it and know how to work with it. And it turns out that the form in which information is stored is closely tied to the principles by which it is processed; each largely determines the other.

Human memory is not just the ability to store a wide range of diverse images but also a tool for quickly finding and reproducing a relevant memory. Moreover, besides associative access to arbitrary fragments of our memory, we can also link memories into chronological chains, reproducing not a single image but a sequence of events.

Wilder Graves Penfield earned well-deserved recognition for his contribution to the study of cortical function. While treating epilepsy, he developed a technique for open-brain surgery that used electrical stimulation to locate the epileptic focus. By stimulating various parts of the brain with an electrode and recording the responses of conscious patients, Penfield built a detailed picture of the functional organization of the cerebral cortex (Penfield, 1950). Stimulation of some areas, mainly in the temporal lobes, evoked vivid memories in which past events surfaced in great detail. Moreover, repeated stimulation of the same spot evoked the same memory.

The clear localization of many functions that Penfield revealed in the cortex encouraged researchers to look for equally clearly localized memory traces. In addition, the advent of computers, and with it an understanding of how physical media store computer information, prompted the search for something similar in brain structures.

In 1969, Jerry Lettvin said: “If the human brain consists of specialized neurons, and they encode the unique properties of various objects, then, in principle, somewhere in the brain there must be a neuron with which we recognize and remember our grandmother.” The phrase “grandmother neuron” stuck and often comes up in discussions of how memory works. Moreover, direct experimental evidence appeared: neurons were found that respond to particular images, for example, selectively recognizing a specific person or a specific phenomenon. However, more detailed studies showed that these “specialized” neurons respond not to one thing only but to groups of images that are similar in some sense. For example, the neuron that responded to Jennifer Aniston also responded to Lisa Kudrow, her co-star in Friends.

In the first half of the twentieth century, Karl Lashley conducted very interesting experiments on the localization of memory. He first trained rats to find their way out of a maze, then removed various parts of their brains and ran them through the maze again, trying to find the part of the brain responsible for the memory of the acquired skill. But it turned out that the memory was always preserved in one form or another, despite sometimes severe motor impairments. These experiments inspired Karl Pribram to formulate the well-known and popular theory of holographic memory (Pribram, 1971).

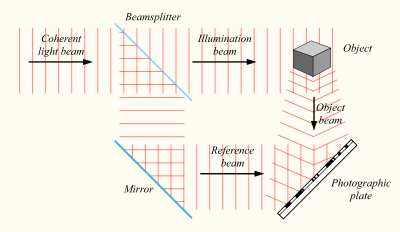

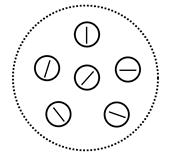

The principles of holography, like the term itself, were invented in 1947 by Dennis Gabor, who received the 1971 Nobel Prize in Physics for this work. The essence of holography is as follows. If we take a light source with a stable frequency and split its beam in two with a semi-transparent mirror, we get two coherent light beams. One beam can be directed at the object and the other at the photographic plate.

Creating a hologram

As a result, when the light reflected from the object reaches the photographic plate, it creates an interference pattern with the beam that illuminates the plate directly.

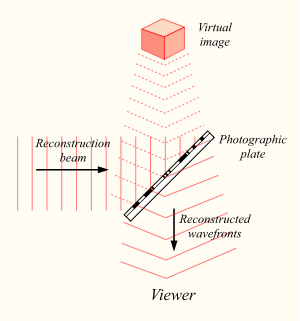

The interference pattern imprinted on the photographic plate stores information not only about the amplitude but also about the phase of the light field reflected by the object. If the developed plate is then illuminated with the reference beam, the original light field is restored, and we see the remembered object in its full volume.
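Why the plate records phase at all follows from the standard two-beam interference formula: for an object wave of amplitude A_o and a reference wave of amplitude A_r with relative phase φ, the exposure is I = A_o^2 + A_r^2 + 2 A_o A_r cos φ. The short sketch below evaluates this with complex amplitudes; the function name `recorded_intensity` and the sample values are our own illustration, not part of the original text.

```python
import cmath
import math


def recorded_intensity(a_obj: float, a_ref: float, phase: float) -> float:
    """Intensity |E_obj + E_ref|^2 at one point of the plate.

    a_obj, a_ref: real amplitudes of the object and reference waves.
    phase: phase of the object wave relative to the reference wave (radians).
    """
    field = a_obj * cmath.exp(1j * phase) + a_ref  # complex superposition
    return abs(field) ** 2


# Same amplitudes, different phases: the plate is exposed differently.
for phi in (0.0, math.pi / 2, math.pi):
    print(f"phase={phi:.2f}  intensity={recorded_intensity(1.0, 1.0, phi):.3f}")

# Without the reference beam, the phase leaves no trace in the intensity.
print(recorded_intensity(1.0, 0.0, 0.0), recorded_intensity(1.0, 0.0, math.pi))
```

With equal amplitudes the exposure goes from 4 to 2 to 0 as the phase goes from 0 to π, whereas a plate lit by the object beam alone cannot tell the phases apart.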

Hologram playback

A hologram has some remarkable properties. First, the reconstructed light field preserves volume: looking at the phantom object from different angles, you see it from different sides. Second, every part of the hologram contains information about the entire light field. So if we cut a hologram in half, at first we see only half of the object; but if we tilt our head, we can see the second, “cropped” part beyond the edge of the remaining piece. Of course, the smaller the fragment of the hologram, the lower its resolution, but even through a small area you can view the whole image, as if through a keyhole. Interestingly, if a magnifying glass was among the recorded objects, looking through it will show the other objects captured in the hologram magnified.

In relation to memory, Pribram formulated: “The essence of the holographic concept is that images are restored when their representations in the form of systems with distributed information are accordingly brought into an active state” (Pribram, 1971).

Holographic properties of memory are mentioned in two contexts. On the one hand, calling memory holographic emphasizes its distributed nature and its ability to restore images from only some of the neurons, much as happens with fragments of a hologram. On the other hand, it is assumed that a memory with hologram-like properties rests on the same physical principles. Since holography is based on recording the interference pattern of light beams, memory would then presumably use, in some way, the interference pattern that arises from the pulse coding of information. Brain rhythms are well known, and where there are oscillations and waves, their interference is inevitable. And that means

But interference is a delicate thing: small changes in the frequency or phase of the signals should completely change its pattern. Yet the brain keeps working successfully despite considerable variation in its rhythms. In addition, attempts to disrupt the spread of electrical activity, such as cutting through areas of cortex and inserting mica into the cuts, applying strips of gold foil to short-circuit it, or creating epileptic foci by injecting aluminum paste, do not disturb brain activity in any severely pathological way (Pribram, 1971).
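The fragility argument can be made concrete with two unit-amplitude waves observed at a single point. When their frequencies match, the interference term is frozen in time; when they differ by Δf, the combined intensity 2 + 2 cos(2πΔf t) drifts, so a stable pattern exists only while frequency and phase stay locked. The frequencies, the sampling grid and the function name below are illustrative assumptions of ours.

```python
import cmath
import math


def intensity_over_time(f1: float, f2: float, phase: float, times: list[float]) -> list[float]:
    """Intensity of two unit waves exp(i*2*pi*f1*t) and exp(i*(2*pi*f2*t + phase)) at one point."""
    out = []
    for t in times:
        field = cmath.exp(2j * math.pi * f1 * t) + cmath.exp(1j * (2 * math.pi * f2 * t + phase))
        out.append(abs(field) ** 2)
    return out


times = [k * 0.01 for k in range(101)]  # one second sampled every 10 ms

locked = intensity_over_time(10.0, 10.0, 0.0, times)   # identical frequencies
detuned = intensity_over_time(10.0, 10.5, 0.0, times)  # 5 % detuning

print("locked:  min %.3f max %.3f" % (min(locked), max(locked)))
print("detuned: min %.3f max %.3f" % (min(detuned), max(detuned)))
```

In the locked case the point stays at the same brightness for the whole second; with a 0.5 Hz mismatch it sweeps from fully bright to nearly dark, which is exactly the instability the paragraph points to.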

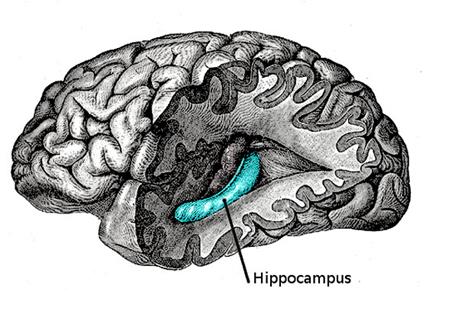

Any discussion of memory has to address the known facts about the relationship between memory and the hippocampus. In 1953, a surgeon removed the hippocampus of a patient known as HM (Henry Molaison) (W. Scoville, B. Milner, 1957). It was a risky attempt to cure severe epilepsy. It was known that removing the hippocampus in one hemisphere does help with this disease. Because HM's epilepsy was exceptionally severe, the surgeon removed the hippocampus on both sides. As a result, HM completely lost the ability to form new memories. He remembered what had happened to him before the operation, but anything new vanished from his mind as soon as his attention shifted.

Henry Molaison

HM was studied for a long time, and countless experiments were carried out with him. One of them was especially interesting. The patient was asked to trace a five-pointed star while looking at it in a mirror. This is not an easy task and is difficult without practice. HM was given the task repeatedly, and each time he perceived it as if seeing it for the first time. Yet the task became easier for him each time. In later sessions he himself remarked that he had expected it to be much harder.

The hippocampus of one of the hemispheres

In addition, it turned out that HM still retained some memory of events. For example, he knew about the Kennedy assassination, although it happened after his hippocampus had been removed.

From these facts, it was concluded that there are at least two different types of memory. One type is responsible for recording specific memories, and the other for acquiring generalized experience, which shows up as knowledge of general facts or the acquisition of certain skills.

HM's case is unique. In other cases of hippocampal removal, where the damage was not as complete and bilateral as in HM, memory impairment was either less pronounced or absent altogether (W. Scoville, B. Milner, 1957).

Now let us compare all this with our model. We have shown that persistent, recurring phenomena give rise to patterns of detector neurons. These patterns can recognize the combination of features characteristic of them and add new identifiers to the wave pattern. We have also shown how features can be reproduced in reverse from a concept identifier. This can be compared to the memory of generalized experience.

But such generalized memory does not allow specific events to be recreated. If the same phenomenon recurs in different situations, our neural network simply acquires associative links between the concept corresponding to the phenomenon and the concepts describing those circumstances. Using this associativity, we can build an abstract description made of concepts that occur together. The task of event memory, however, is not to reproduce some abstract picture but to recreate a previously remembered situation describing a specific event with all its unique features.
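The limitation of purely associative memory can be illustrated with a toy co-occurrence counter. In this sketch each hypothetical "situation" is just a set of concept labels, and associations are counts of how often two concepts were active together; this is our own simplification, not the wave-network mechanism from the earlier parts. Asking for the description of `grandmother` returns a blend of every situation she appeared in, and nothing in the counts says which features belonged to which occasion.

```python
from collections import Counter
from itertools import combinations

# Hypothetical situations: each is a set of concepts active at the same time.
situations = [
    {"grandmother", "kitchen", "pie", "winter"},
    {"grandmother", "garden", "apples", "summer"},
    {"grandmother", "kitchen", "tea", "summer"},
]

# Generalized associativity modelled as symmetric co-occurrence counts.
co_occurrence = Counter()
for situation in situations:
    for a, b in combinations(sorted(situation), 2):
        co_occurrence[(a, b)] += 1
        co_occurrence[(b, a)] += 1


def abstract_description(concept: str, min_count: int = 1) -> dict[str, int]:
    """Concepts that co-occurred with `concept`, with how often they did."""
    return {other: n for (c, other), n in co_occurrence.items() if c == concept and n >= min_count}


description = abstract_description("grandmother")
print(sorted(description.items(), key=lambda kv: (-kv[1], kv[0])))
```

The result mixes "winter" and "summer", "pie" and "apples": a plausible generalized picture of grandmother, but not any one of the three situations that produced it.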

The real difficulty is that our model has no place where a complete and comprehensive description of what is happening is localized. The full description consists of many descriptions active in separate zones of the cortex, and each zone has a wave description in terms specific to that particular area of the brain. Even if we somehow remember what happens in each zone separately, these descriptions still have to be linked together so that a holistic image emerges.

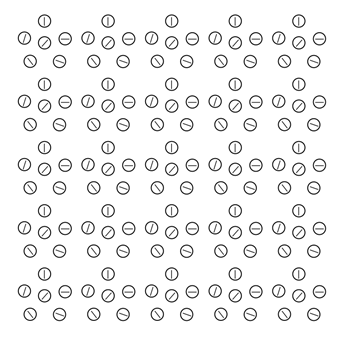

A similar situation arises when we have a topographic projection and neurons with local receptive fields. Suppose we have a neural network consisting of two flat layers (figure below). Suppose that the state of the neurons of the first layer forms a certain picture. This picture is transmitted through the projection fibers to the second layer. Neurons of the second layer have synaptic connections with those fibers that fall within the boundaries of their receptive fields. Thus, each of the neurons of the second layer sees only a small fragment of the original image of the first layer.

Topographic projection of the image on local receptive fields

There is an obvious way to memorize the presented picture on the second layer. Choose a set of neurons whose receptive fields completely cover the projected image, and let each neuron remember its own fragment of the image. To tie the memory together, mark all these neurons with a common marker indicating that they belong to one set.

Such memorization is very simple, but extremely wasteful in the number of neurons involved. Each new picture will require a new distributed set of memory elements.

Savings are possible if different images share common fragments: instead of forcing a new neuron to memorize such a fragment, we can use an existing neuron and simply add another marker to it, this time from the new image.
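The difference between the wasteful scheme and the shared one is easy to count. In the sketch below an "image" is a small binary grid cut into non-overlapping 2×2 receptive fields (grid size, tiling and function names are our illustrative assumptions). In the naive scheme every image recruits a fresh neuron for each fragment; in the shared scheme a neuron is identified by its position and the fragment it stores, so a repeated fragment at the same place only receives another marker.

```python
Image = list[list[int]]
Fragment = tuple[int, ...]


def fragments(image: Image, size: int = 2) -> list[tuple[tuple[int, int], Fragment]]:
    """Cut an image into non-overlapping size x size tiles: ((row, col), pixels)."""
    tiles = []
    for r in range(0, len(image), size):
        for c in range(0, len(image[0]), size):
            pixels = tuple(image[r + i][c + j] for i in range(size) for j in range(size))
            tiles.append(((r, c), pixels))
    return tiles


def naive_memory(images: list[Image]) -> list[tuple[Fragment, str]]:
    """One new neuron per fragment per image, each tagged with that image's marker."""
    neurons = []
    for k, image in enumerate(images):
        for _, pixels in fragments(image):
            neurons.append((pixels, f"img{k}"))
    return neurons


def shared_memory(images: list[Image]) -> dict[tuple[tuple[int, int], Fragment], set[str]]:
    """One neuron per (position, fragment); a repeated fragment just gains a marker."""
    neurons = {}
    for k, image in enumerate(images):
        for pos, pixels in fragments(image):
            neurons.setdefault((pos, pixels), set()).add(f"img{k}")
    return neurons


square = [[1, 1, 1, 1], [1, 0, 0, 1], [1, 0, 0, 1], [1, 1, 1, 1]]
u_shape = [[1, 0, 0, 1], [1, 0, 0, 1], [1, 0, 0, 1], [1, 1, 1, 1]]
images = [square, u_shape]

print("naive: ", len(naive_memory(images)), "neurons")
print("shared:", len(shared_memory(images)), "neurons")
```

The square and the U-shape have identical lower halves, so the shared scheme needs 6 neurons instead of 8, and the two lower neurons carry both markers. The gain grows with the number of images, because the repertoire of small local fragments is limited.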

Thus, we come to the basic idea of distributed memorization. We will describe it first for a picture and topographic projection.

We will present various images to the first zone and project them onto the second zone. If the receptive fields of the neurons are small enough, the number of unique pictures within each local area will not be very large. We can choose the size of the receptive field so that all unique variants of local images fit into a region roughly the size of a neuron's receptive field.

Let us create spatial regions containing detector neurons, making sure that each region contains detectors for all possible unique images and that such regions cover the entire space of the second zone. For this we can use the previously described principles for identifying sets of factors.

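The task of the detectors is to compare the images presented to their receptive fields with their own characteristic images. For such a comparison, one can use the convolution over the receptive field R, where x_i is the input signal at point i of the receptive field and w_i is the corresponding synaptic weight of the detector:

$$y = \sum_{i \in R} x_i w_i$$

The neuron's response is higher the more fully the new image covers the remembered one. If we are interested not in the degree of coverage but in the degree of coincidence of the images, we can use their correlation, which is nothing other than the normalized convolution:

$$r = \frac{\sum_{i \in R} x_i w_i}{\sqrt{\sum_{i \in R} x_i^2}\,\sqrt{\sum_{i \in R} w_i^2}}$$

Incidentally, the same quantity is the cosine of the angle between the image vector x and the weight vector w:

$$\cos\varphi = \frac{\mathbf{x}\cdot\mathbf{w}}{\lVert\mathbf{x}\rVert\,\lVert\mathbf{w}\rVert}$$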
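The practical difference between the plain convolution (degree of coverage) and the normalized convolution (degree of coincidence, the cosine between image and weights) shows up as soon as a stimulus is stronger or larger than the stored fragment. A minimal sketch, treating the receptive field as a flat list of pixel values; the function names and sample vectors are ours, and returning 0 for an all-zero vector is our assumption, since the cosine is undefined there.

```python
import math


def convolution(x: list[float], w: list[float]) -> float:
    """Sum over the receptive field of input times weight."""
    return sum(xi * wi for xi, wi in zip(x, w))


def correlation(x: list[float], w: list[float]) -> float:
    """Normalized convolution, i.e. the cosine of the angle between x and w."""
    norm = math.sqrt(convolution(x, x)) * math.sqrt(convolution(w, w))
    return convolution(x, w) / norm if norm else 0.0


weights = [1, 1, 0, 0]   # remembered fragment: a short horizontal line
exact = [1, 1, 0, 0]     # the same line
bright = [2, 2, 0, 0]    # the same line, twice as intense
blob = [1, 1, 1, 1]      # a filled patch that also covers the line

for name, x in [("exact", exact), ("bright", bright), ("blob", blob)]:
    print(f"{name:6s} convolution={convolution(x, weights):.1f} correlation={correlation(x, weights):.3f}")
```

The convolution rewards the brighter line most and cannot tell the exact line from the filled patch; the correlation rates the exact and bright lines equally (1.0) and the patch lower (about 0.707), which is what "level of coincidence" means here.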

As a result, when a new picture is presented, in each local group the detector neurons that most accurately describe their local fragment will fire.

Now let us do the following: for each new image we generate a unique identifier label and mark the active detector neurons with it. Each presentation of an image is accompanied by a pattern of activity in the second zone of the cortex, which is a description of this image in terms of the features available to the second zone. Creating a unique identifier and marking the active detector neurons with it is what memorizing a specific event amounts to.

If we then take one of the markers, find the detector neurons that carry it, and restore their characteristic local images, we obtain a reconstruction of the original image.

To memorize and reproduce many different images, detector neurons must keep their synaptic weights unchanged and be able to store as many markers as needed.
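The whole procedure (detectors with fixed weights, the best match firing in each region, a unique marker per memory, recall by marker) fits in a short toy program. The per-region feature dictionary (straight segments and corners on a 2×2 field), the 0.8 firing threshold, and the use of `uuid.uuid4()` as the "unique identifier" are all assumptions of this sketch, not details from the article.

```python
import math
import uuid

# Hypothetical 2x2 local features, flattened row-major: straight segments, then corners.
FEATURES = [
    (1, 1, 0, 0), (0, 0, 1, 1), (1, 0, 1, 0), (0, 1, 0, 1),
    (1, 1, 1, 0), (1, 1, 0, 1), (1, 0, 1, 1), (0, 1, 1, 1),
]


class Detector:
    """A detector neuron: fixed weights over one 2x2 receptive field plus a set of markers."""

    def __init__(self, region: tuple[int, int], weights: tuple[int, ...]):
        self.region = region      # top-left pixel of the receptive field
        self.weights = weights    # synaptic weights, never modified by memorization
        self.markers = set()      # identifiers of the events this neuron took part in


def cosine(x, w) -> float:
    dot = sum(a * b for a, b in zip(x, w))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in w))
    return dot / norm if norm else 0.0


def build_zone(size: int = 4) -> list[Detector]:
    """Every 2x2 region gets a full set of detectors, one per feature."""
    return [Detector((r, c), f) for r in range(0, size, 2) for c in range(0, size, 2) for f in FEATURES]


def active_detectors(zone, image, threshold: float = 0.8) -> list[Detector]:
    """In each region, the best-matching detector fires if its match is good enough."""
    by_region = {}
    for d in zone:
        by_region.setdefault(d.region, []).append(d)
    active = []
    for (r, c), group in by_region.items():
        tile = (image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
        best = max(group, key=lambda d: cosine(tile, d.weights))
        if cosine(tile, best.weights) >= threshold:
            active.append(best)
    return active


def memorize(zone, image) -> str:
    """Generate a unique identifier and attach it to every active detector."""
    memory_id = uuid.uuid4().hex
    for d in active_detectors(zone, image):
        d.markers.add(memory_id)
    return memory_id


def recall(zone, memory_id: str, size: int = 4) -> list[list[int]]:
    """Reactivate the detectors carrying memory_id and redraw their preferred fragments."""
    image = [[0] * size for _ in range(size)]
    for d in zone:
        if memory_id in d.markers:
            r, c = d.region
            image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1] = d.weights
    return image


square = [[1, 1, 1, 1], [1, 0, 0, 1], [1, 0, 0, 1], [1, 1, 1, 1]]
bar = [[1, 1, 1, 1], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]

zone = build_zone()
id_square = memorize(zone, square)
id_bar = memorize(zone, bar)
print(recall(zone, id_square) == square, recall(zone, id_bar) == bar)
```

Note that memorization only ever touches the `markers` sets: the weights of every detector stay as they were, and each detector can accumulate any number of identifiers, which are the two requirements stated above.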

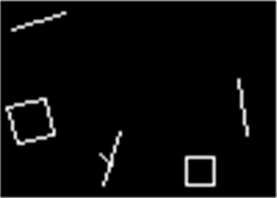

Let us illustrate distributed memorization with a simple example. Suppose that the upper zone generates contour images of various geometric shapes (figure below).

Image file

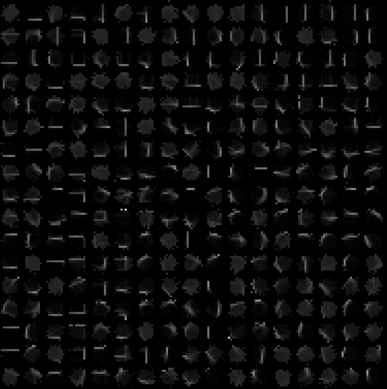

We will train the lower zone to extract factors using the decorrelation method. The main images appearing in each small receptive field are lines at different angles. There will be other images as well, for example, the intersections and corners of geometric shapes, but lines will dominate, that is, occur more often. This means that they are the first to emerge as factors. The actual result of such training is shown in the figure below.

Fragment of a field of factors extracted from contour images

The figure shows many vertical and horizontal lines that differ only in their position within the receptive field. This is not surprising, since even a small shift creates a new factor that does not overlap with its parallel “twins”. Suppose that we somehow made our network more complex so that adjacent parallel “twins” merged into one factor. Suppose further that in small areas factors have emerged, as shown in the figure below, that describe all possible directions with a certain angular step.

Factors in a small area corresponding to different directions, with a step of one clock hour (30°).
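Why a one-pixel shift yields an unrelated factor: two vertical lines in adjacent columns share no active pixels, so their normalized overlap is exactly zero. One way to "merge the twins", which is our own assumption here and not the mechanism the article has in mind, is to let a detector take the maximum of its response over small horizontal shifts of its template, similar in spirit to the pooling used in convolutional networks. The grid size and function names are illustrative.

```python
import math

Grid = list[list[int]]


def cosine(a: Grid, b: Grid) -> float:
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    dot = sum(x * y for x, y in zip(xs, ys))
    norm = math.sqrt(sum(x * x for x in xs)) * math.sqrt(sum(y * y for y in ys))
    return dot / norm if norm else 0.0


def vertical_line(col: int, size: int = 5) -> Grid:
    return [[1 if c == col else 0 for c in range(size)] for _ in range(size)]


def shift_columns(grid: Grid, dx: int) -> Grid:
    """Shift a grid horizontally by dx columns, filling vacated cells with zeros."""
    size = len(grid[0])
    return [[row[c - dx] if 0 <= c - dx < size else 0 for c in range(size)] for row in grid]


def pooled_response(template: Grid, stimulus: Grid, max_shift: int = 1) -> float:
    """Best match over the template shifted by -max_shift..max_shift columns."""
    return max(cosine(shift_columns(template, dx), stimulus) for dx in range(-max_shift, max_shift + 1))


template = vertical_line(2)
for col in range(5):
    stim = vertical_line(col)
    print(col, round(cosine(template, stim), 3), round(pooled_response(template, stim), 3))
```

The plain detector responds only to column 2; the pooled one also responds fully to columns 1 and 3, i.e. the three parallel "twins" now behave as a single factor, while lines further away are still ignored.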

Then the result of training the entire cortical zone can be conditionally depicted as follows:

Conditional result of learning the cortical zone. For clarity, neurons are not placed on a regular grid.

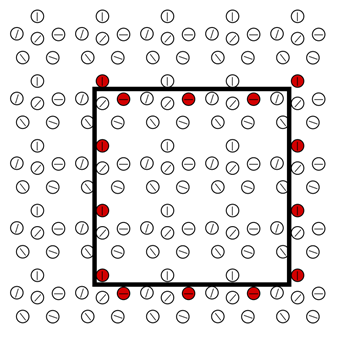

Now let us present an image of a square to the trained zone of the cortex. The neurons that see their characteristic stimulus within their receptive fields are activated (figure below).

Reaction of the trained cortical zone to the image of the square

Now we generate a random unique number: the identifier of the memory. For simplicity, we will not use our wave networks for now and will simply assume that each neuron can store, in addition to its synaptic weights, a set of identifiers, that is, a large unordered array of numbers. Let all active neurons add the newly generated identifier to their sets. This action is what records the memory of the square.

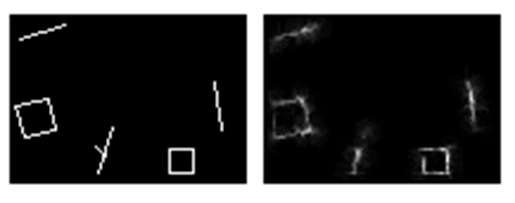

Presenting new images, we generate a unique identifier for each of them and add it to the neurons that responded to the current image. Now, to recall something, it is enough to take the corresponding identifier, activate all the neurons that carry it, and then restore the image patterns characteristic of these neurons. Naturally, the richer and sharper the description system, the more closely the restored image will match the original. But even very crude models, such as the network above, can give quite plausible reconstructions (figure below).

The original image and memory reconstructed from the factors of the “rough” model.
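The claim that a richer description system gives a more faithful reconstruction can be checked on a one-dimensional toy. A binary "image" is split into 2-pixel receptive fields; in each field the detector with the highest cosine match describes it, and on recall that detector redraws its own preferred fragment (marker bookkeeping is omitted here for brevity). Both dictionaries are hypothetical: the coarse one knows only single dots, the rich one also knows a two-pixel segment. Ties are broken by taking the first detector in the list, which is our arbitrary choice.

```python
import math


def cosine(x, w):
    dot = sum(a * b for a, b in zip(x, w))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in w))
    return dot / norm if norm else 0.0


def remember_and_recall(image: list[int], dictionary: list[tuple[int, int]]) -> list[int]:
    """Describe each 2-pixel field by its best detector, then redraw from that description."""
    restored = []
    for i in range(0, len(image), 2):
        field = tuple(image[i:i + 2])
        if not any(field):
            restored.extend([0, 0])  # no detector fires on an empty field
            continue
        best = max(dictionary, key=lambda w: cosine(field, w))  # first one wins on ties
        restored.extend(best)
    return restored


def errors(a: list[int], b: list[int]) -> int:
    return sum(x != y for x, y in zip(a, b))


image = [1, 1, 0, 1, 1, 1, 1, 0]
coarse = [(1, 0), (0, 1)]
rich = [(1, 0), (0, 1), (1, 1)]

for name, dictionary in [("coarse", coarse), ("rich", rich)]:
    restored = remember_and_recall(image, dictionary)
    print(name, restored, "errors:", errors(image, restored))
```

The coarse dictionary can only approximate each two-pixel segment by one of its dots, so the reconstruction has 2 wrong pixels; adding the segment detector makes it exact. The reconstruction is "plausible" in both cases, just sharper with the richer vocabulary.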

Now we can formulate our assumptions about how the event memory of the real brain is arranged.

Training of the various cortical zones leads to the formation of patterns of detector neurons capable of responding to images characteristic of these zones. This training is based on synaptic plasticity. It does not capture specific events; it only extracts generalized concepts. When a “grandmother neuron” arises, its synaptic weights tell us nothing about memories that have anything to do with grandmother. The synaptic weights describe not specific events but the pattern of features characteristic of recognizing grandmother.

The description that arises in each cortical zone is dual. It is both a pattern of evoked activity of detector neurons and a wave identifier, formed both by the cortex's own emitting patterns and by waves that arrived through the projection system.

The role of the hippocampus is to create a unique identifier for each memory and add it to the overall wave picture. As a result, besides the list of features describing the current event, the wave identifier in each cortical zone will also contain a unique component from the hippocampus, which makes it possible to distinguish the wave descriptions of similar events.

Detector neurons in a state of evoked activity record the current wave identifier on their metabotropic clusters. We already saw something similar when describing the system of generalized associativity of concepts; only now a unique component from the hippocampus has been added to the identifier. This creates an engram that makes it possible to find all the detector neurons related to one memory.
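Why the unique component matters can be shown with a deliberately crude model: here the wave identifier of an event is a `frozenset` of the active features plus, optionally, a random tag standing in for the hippocampal contribution, and each active detector stores that identifier. The frozenset representation, the `hippocampus_intact` flag and the feature names are our simplifications, not the wave mechanism itself. Two events with identical features produce identical identifiers unless the tag is present; switching the flag off very loosely mimics a patient like HM.

```python
import secrets


def wave_identifier(features: set[str], hippocampus_intact: bool = True) -> frozenset[str]:
    """Features describing the event plus, if available, a unique hippocampal tag."""
    parts = set(features)
    if hippocampus_intact:
        parts.add("hpc:" + secrets.token_hex(8))  # unique component for this event
    return frozenset(parts)


def store(detectors: dict[str, set[frozenset[str]]], features: set[str],
          hippocampus_intact: bool = True) -> frozenset[str]:
    """Active detectors (one per feature in this toy) record the current wave identifier."""
    ident = wave_identifier(features, hippocampus_intact)
    for feature in features:
        detectors.setdefault(feature, set()).add(ident)
    return ident


breakfast = {"kitchen", "grandmother", "tea"}

healthy = {}
monday = store(healthy, breakfast)
tuesday = store(healthy, breakfast)
print("healthy:", monday != tuesday, len(healthy["tea"]))

amnesic = {}
monday_hm = store(amnesic, breakfast, hippocampus_intact=False)
tuesday_hm = store(amnesic, breakfast, hippocampus_intact=False)
print("no hippocampus:", monday_hm != tuesday_hm, len(amnesic["tea"]))
```

With the tag, the "tea" detector holds two distinct engrams for two otherwise identical breakfasts; without it, both breakfasts collapse into one feature-only identifier, which is generalized associativity rather than memory of a particular event.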

It should be noted that this design works the same way regardless of how information is transmitted to the cortical zone. For both topographic and wave projection, the principles of memorization remain unchanged.

This memory design has all the holographic properties required from memory. Detector neurons of any detector pattern store information about the entire wave pattern that was on the cortex at the time of memorization, which corresponds exactly to how a fragment of the hologram stores information about the wave pattern of the surrounding space.

The engram is recorded across all parts of the brain that were active in recognizing what was happening. This means that a memory is not tied to one or even several neurons and has no specific localization. Removing any part of the cortex, as in Lashley's experiments, does not destroy the whole engram; it only impoverishes its description by the terms that belonged to the removed part.
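The lesion argument can be mimicked with the same marker bookkeeping. In this sketch an event is described by detectors in several hypothetical zones (zone names and features are invented for illustration), and every active detector stores the event's identifier. Deleting a whole zone removes its terms from the recollection, yet the identifier still retrieves everything held by the surviving zones.

```python
import uuid

# zone -> detector feature -> set of memory identifiers (hypothetical layout)
cortex = {
    "visual": {"maze_wall": set(), "light_corner": set()},
    "auditory": {"click": set()},
    "motor": {"turn_left": set(), "run": set()},
}


def memorize(active: dict[str, list[str]]) -> str:
    """Attach a fresh identifier to every active detector in every zone."""
    memory_id = uuid.uuid4().hex
    for zone, features in active.items():
        for feature in features:
            cortex[zone][feature].add(memory_id)
    return memory_id


def recall(memory_id: str) -> dict[str, list[str]]:
    """Collect, zone by zone, the features whose detectors carry memory_id."""
    result = {}
    for zone, detectors in cortex.items():
        hits = sorted(f for f, ids in detectors.items() if memory_id in ids)
        if hits:
            result[zone] = hits
    return result


run_maze = memorize({"visual": ["maze_wall", "light_corner"], "motor": ["turn_left", "run"]})
print("intact:  ", recall(run_maze))

del cortex["motor"]  # lesion: the whole motor zone is removed
print("lesioned:", recall(run_maze))
```

There is no single place whose removal erases `run_maze`: the engram simply loses the "motor" terms and keeps the visual ones.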

This also explains the nature of the neurons that respond to Jennifer Aniston or Master Yoda. They are not memory neurons; they are just detector neurons associated with concepts related to the corresponding films.

The vivid visions produced by stimulating the brain with an electrode can also be explained. The electrode excites a random pattern of the neurons it touches. If this pattern happens to resemble a fragment of the wave of some identifier known to the cortex, it launches the corresponding wave, which then builds the rest of the brain's information picture. A repeated electrical impulse through an electrode that is already in place evokes the same vision, since it creates the same pattern of activity. At the same time, the content of the vision is in no way connected with where the electrode happened to land. What gets activated is not concepts that really do have a localization but a wave that could, in principle, have arisen anywhere; it is just that at the point where the electrode was inserted, the pattern of this wave matched the shape of the needle. For the wave model of the cortex, all this is quite natural.
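The electrode explanation rests on pattern completion: a small fragment that matches part of a known identifier is enough to bring back the whole one. In the sketch below, stored identifiers are sets of active cell indices, the "electrode" excites a fixed subset of cells, and the stored identifier with the largest overlap is completed if the overlap passes a threshold. The set representation, the overlap rule, the threshold of 5 cells and all sizes are our assumptions, not the wave dynamics described in the series.

```python
import random
from typing import Optional

N_CELLS = 1000
rng = random.Random(42)  # fixed seed so the example is reproducible

# Hypothetical stored wave identifiers: three disjoint random sets of 50 cells.
cells = rng.sample(range(N_CELLS), 150)
memories = {
    "beach": frozenset(cells[:50]),
    "concert": frozenset(cells[50:100]),
    "kitchen": frozenset(cells[100:]),
}
unused = sorted(set(range(N_CELLS)) - set(cells))  # cells that belong to no stored wave


def complete(fragment: set[int], min_overlap: int = 5) -> Optional[str]:
    """Name of the stored identifier overlapping the fragment most, if the overlap is large enough."""
    name, ident = max(memories.items(), key=lambda kv: len(kv[1] & fragment))
    return name if len(ident & fragment) >= min_overlap else None


# The electrode touches 8 cells of the "concert" pattern plus 12 unrelated cells.
contact = set(rng.sample(sorted(memories["concert"]), 8)) | set(rng.sample(unused, 12))

print(complete(contact), complete(contact))   # same stimulus, same recollection
print(complete(set(rng.sample(unused, 20))))  # a fragment of no known wave evokes nothing
```

Repeating the same stimulus returns the same memory, and which memory it is depends only on which stored pattern the contact happens to overlap, not on any "meaning" of the stimulated spot.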

Our concept of memory accounts well for the peculiarities of patient HM. Since the hippocampus is needed to create a unique identifier, it is not surprising that without it new memories could not be formed while existing memories remained intact: where an identifier has already been assigned, the hippocampus is no longer needed for subsequent information processing. And since the formation of detector neurons and detector patterns does not depend on the hippocampus, the preserved ability to learn and to form generalized memory is also explained.

Once again, note that in describing event memory we did not use synaptic plasticity as a means of memorization. In our model, synaptic plasticity is the mechanism that forms patterns of detector neurons. That is, traces of specific events cannot be found directly on the synapses of neurons, although the images described by synaptic weights always reflect earlier experience. We have arrived at the need to separate learning mechanisms from event memory. Accordingly, our model has two types of engrams. The first is the modification of synaptic weights, which makes it possible to extract the features underlying all subsequent descriptions. The second is the formation of extrasynaptic metabotropic receptive clusters that unite the many neurons involved in describing a particular event. Moreover, the second type of engram is impossible without the first: to form a memory of any event in its entirety, there must be factors with which to describe it.

The brain describes information hierarchically, extracting more and more abstract features from level to level. When we speak of memories of an event, we usually do not mean low-level photographic memory but the recording of a more abstract description, which during recall can lead to a reconstruction of the original photographic picture. But for such a description to be possible, the appropriate factors must already have formed. This seems to be why we have no early childhood memories: at the age our memory gap covers, we simply do not yet have the concepts needed for a full description of events.

References

Continuation

Previous parts:

Part 1. Neuron

Part 2. Factors

Part 3. Perceptron, convolutional networks

Part 4. Background activity

Part 5. Brain waves

Part 6. Projection system

Part 7. Human-computer interface

Part 8. Isolation of factors in wave networks

Part 9. Patterns of neuron detectors. Rear projection

Part 10. Spatial self-organization

Part 11. Dynamic neural networks. Associativity