Scientists have found the part of the human brain responsible for the pitch of our speech

In June, a team of scientists from the University of California, San Francisco published a study that sheds light on how people change the pitch of their speech.

The results of this study may be useful for building synthesizers of natural-sounding speech, with emotion and varied intonation.

Today's article looks at the research.

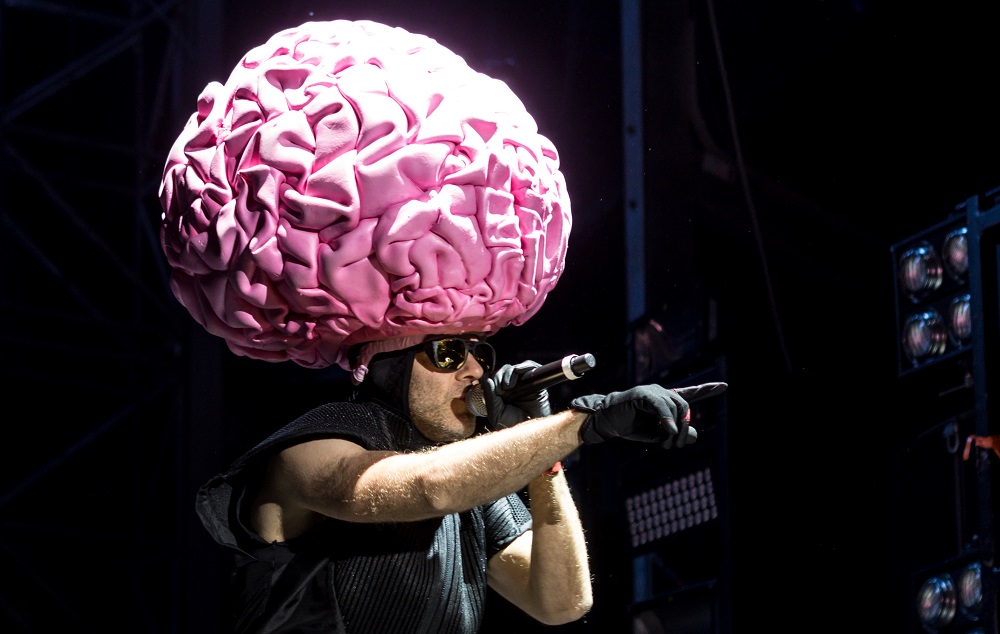

Photo by Florian Koppe / CC

How did the study go?

A team of scientists at the University of California recently conducted a series of experiments. The team studies the relationship between various parts of the brain and the organs of speech. The researchers are trying to find out what happens in the brain during a conversation.

The work discussed in this article focuses on the area that controls the larynx, including at the moment when pitch changes.

The study was led by neurosurgeon Edward Chang. He works with patients who have epilepsy and performs operations that prevent seizures. Chang monitors the brain activity of some of his patients using special equipment.

The team recruited volunteers for the research from this group of patients. Sensors attached to the patients make it possible to monitor their neural activity during experiments. This method, known as electrocorticography, helped the scientists find the area of the brain responsible for changes in pitch.

Study participants were asked to repeat the same sentence out loud but to emphasize a different word each time. This changed the meaning of the phrase. At the same time, the fundamental frequency also changed, that is, the frequency at which the vocal cords vibrate.
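To make the idea of fundamental frequency more concrete, here is a minimal Python sketch that estimates it from a short signal by finding the autocorrelation peak. This is not the method used in the study; the sample rate, the 75-400 Hz search range, the pure sine tones standing in for voiced speech, and the function names `make_tone` and `estimate_f0` are all assumptions made for illustration.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed for this sketch)


def make_tone(freq_hz, n_samples=400, sample_rate=SAMPLE_RATE):
    """Generate a pure sine tone as a stand-in for a voiced speech frame."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]


def estimate_f0(samples, sample_rate=SAMPLE_RATE, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency from the autocorrelation peak."""
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = int(sample_rate / fmin)  # longest period considered
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Correlate the signal with a copy of itself shifted by `lag` samples.
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    # The best-matching shift is one period of the signal.
    return sample_rate / best_lag


neutral = estimate_f0(make_tone(200.0))     # stand-in for an unstressed word
emphasized = estimate_f0(make_tone(250.0))  # stand-in for a stressed word
print(f"neutral: {neutral:.0f} Hz, emphasized: {emphasized:.0f} Hz")
```

Real speech is far noisier than a sine tone, so practical pitch trackers add windowing, normalization, and voicing detection on top of this basic idea.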

The team found that neurons in one area of the brain were activated when a patient raised their pitch. This area, in the motor cortex, is responsible for the muscles of the larynx. When the researchers stimulated neurons in this area with electricity, the muscles of the larynx tensed in response, and some patients involuntarily made sounds.

Participants were also played recordings of their own voices. This triggered a response from the neurons. From this, the team concluded that this area of the brain is involved not only in changing the fundamental frequency but also in speech perception. This may shed light on how the brain takes part in imitating someone else's speech: it makes it possible to change pitch and other characteristics in order to mimic the other speaker.

Useful in the development of voice synthesizers

Journalist Robbie Gonzalez from Wired believes that the results of the study could be useful in laryngeal prosthetics and could let patients who have lost their voice “talk” more realistically. The scientists themselves confirm this.

Speech synthesizers, for example the one Stephen Hawking used, can already reproduce words. However, they cannot place stress the way a person with a healthy speech apparatus would. Because of this, the speech sounds unnatural, and it is not always clear whether the speaker is asking a question or making a statement.

Scientists continue to study the area of the brain responsible for changing the fundamental frequency. The expectation is that future speech synthesizers will be able to analyze neural activity in this area and, based on that data, build sentences in a natural way: emphasize the right words with pitch and give questions and statements the appropriate intonation, depending on what the person wants to say.

Other studies of speech patterns

Not long ago, Edward Chang's laboratory conducted another study that may help in the development of speech-generating devices. Participants read aloud hundreds of sentences that together contained almost all possible phonetic constructions of American English, while the scientists monitored their neural activity.

Photo by PxHere / PD

This time, the subject of interest was coarticulation: how the organs of the vocal tract (for example, the lips and tongue) work when producing different sounds. Particular attention was paid to words in which different vowels follow the same hard consonant. When such words are pronounced, the lips and tongue often move differently, and as a result we perceive the corresponding sounds differently.

The scientists not only identified groups of neurons responsible for specific movements of the vocal tract organs, but also found that the speech centers of the brain coordinate the movements of the muscles of the tongue, larynx, and other organs of the vocal tract based on the context of speech, that is, the order in which the sounds are made. We know that the tongue takes different positions depending on what the next sound in a word will be, and there are a huge number of such sound combinations. This is another factor that makes human speech sound natural.

Studying all the variants of coarticulation controlled by neural activity will also play a role in developing speech synthesis technologies for people who have lost the ability to speak but whose neural functions are intact.

Systems that work on the opposite principle are also used to help people with disabilities: AI-based tools that convert speech into text. Intonation and accents are a difficulty for this technology too, because they make it harder for artificial intelligence algorithms to recognize individual words.

Employees of Cisco, the Moscow Institute of Physics and Technology, and the Higher School of Economics recently presented a possible solution to this problem for converting American English speech to text. Their system uses the CMUdict pronunciation database and a recurrent neural network. Their method automatically “cleans” speech of “superfluous” overtones beforehand. As a result, the speech sounds closer to standard spoken American English, without pronounced regional or ethnic “traces.”
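For readers unfamiliar with CMUdict, it is a plain-text pronunciation dictionary that maps English words to ARPAbet phonemes, with a digit on each vowel marking stress. The Python sketch below parses a few lines written in that format and extracts the stress pattern. It is only an illustration of the data format, not the system described above: the sample lines are written for this example and may differ from the actual dictionary file, and the helper names `parse_entries` and `stress_pattern` are hypothetical.

```python
import re

# Illustrative lines in the CMUdict text format (word, then ARPAbet phonemes).
# Digits on vowels mark lexical stress: 0 = none, 1 = primary, 2 = secondary.
SAMPLE_ENTRIES = """\
;;; comment lines start with three semicolons
HELLO  HH AH0 L OW1
RECORD  R AH0 K AO1 R D
RECORD(1)  R EH1 K ER0 D
"""


def parse_entries(text):
    """Map each word to a list of pronunciations (each a list of phonemes)."""
    pronunciations = {}
    for line in text.splitlines():
        if not line.strip() or line.startswith(";;;"):
            continue  # skip blank lines and comments
        word, *phonemes = line.split()
        word = re.sub(r"\(\d+\)$", "", word)  # drop a variant marker like "(1)"
        pronunciations.setdefault(word, []).append(phonemes)
    return pronunciations


def stress_pattern(phonemes):
    """Return the stress digits carried by the vowels, in order."""
    return [p[-1] for p in phonemes if p[-1].isdigit()]


entries = parse_entries(SAMPLE_ENTRIES)
for variant in entries["RECORD"]:
    print(" ".join(variant), "->", "".join(stress_pattern(variant)))
```

In this sample, the two variants of the same word differ in where the stress falls, which is the kind of information a recognizer can use to tell the pronunciations apart.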

The future of speech research

In the future, Professor Chang also wants to study how the brain works in people who speak dialects of Chinese. In these dialects, variations in the fundamental frequency can significantly change the meaning of a word. The scientists want to know how people perceive different phonetic constructions in this case.

Benjamin Dichter, one of Chang’s colleagues, believes that the next step is to go further in understanding the brain-larynx relationship. The team now has to learn to predict which pitch a speaker will choose by analyzing their neural activity. This is the key to creating a synthesizer of natural-sounding speech.

The scientists believe that such a device will not be released in the near future, but the study by Dichter and the team will bring science closer to the moment when an artificial speech apparatus learns to interpret not only individual words but also intonation, and can therefore add emotion to speech.

More on sound in our Telegram channel:

How Star Wars sounded

Unusual audio gadgets

Sounds from the world of nightmares

Cinema on records

Music at work