How traffic optimization is done

The “efficiency” of a standard WAN is only about 10%.

If you look at almost any communication channel between a company branch and a data center, the picture is far from optimal:

- First, much of the traffic (up to 60–70% of the channel) is redundant data that has already been requested and transmitted before.

- Second, the channel is loaded with “chatty” applications designed for local networks: they exchange many short messages, and over a long-distance channel every exchange costs a full round trip, which hurts their performance.

- Third, TCP itself was originally designed for local networks and works best with a small round-trip time (RTT) and no packet loss. On real channels, packet loss makes the speed drop sharply, and a large RTT makes it recover slowly.

I head the engineering team of the telecommunications department at CROC and regularly optimize the communication channels of data centers, both our own and those of energy companies, banks and other organizations. Below I cover the basics and describe what I consider the most interesting solution.

Compression and Deduplication

The first problem has already been described: a lot of redundant, duplicate data is transmitted over the channel. The most striking example is a Citrix farm used by bank branches: in a single office, 20-30 different machines can request the same data. Accordingly, the channel load could safely be reduced by 60–70% through deduplication.

Citrix itself can, of course, compress data, but the compression ratio is several times lower than on specialized traffic optimizers, mainly because optimizers not only compress data but also deduplicate it. The traffic of the entire branch passes through the optimizer, so the more users there are in the branch, the more requests repeat and the greater the effect of deduplication. For a single user, standard compression such as Lempel-Ziv can be even more effective than deduplication, but as the number of devices grows, deduplication takes the lead.
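
A rough way to see why deduplication overtakes compression as the branch grows is to count the bytes that cross the channel in each case. The sketch below is a toy model, not a measurement: the 4 KiB block size, the 32-byte link size, the synthetic payload and all function names are assumptions made for illustration.

```python
import random
import zlib

BLOCK_SIZE = 4096  # assumed deduplication block size, in bytes
LINK_SIZE = 32     # assumed size of one block link, in bytes


def make_payload(size: int, seed: int = 0) -> bytes:
    """Build moderately compressible test data from a 16-symbol alphabet."""
    rng = random.Random(seed)
    return bytes(rng.choice(b"0123456789abcdef") for _ in range(size))


def wan_bytes_compression_only(payload: bytes, users: int) -> int:
    """Every user's copy is compressed independently and sent in full."""
    return users * len(zlib.compress(payload))


def wan_bytes_dedup_only(payload: bytes, users: int) -> int:
    """The first copy is sent raw; later copies are sent as links only."""
    blocks = -(-len(payload) // BLOCK_SIZE)  # ceiling division
    return len(payload) + (users - 1) * blocks * LINK_SIZE


payload = make_payload(64 * 1024)
for users in (1, 30):
    print(
        f"{users:>2} user(s): "
        f"compression {wan_bytes_compression_only(payload, users)} bytes, "
        f"deduplication {wan_bytes_dedup_only(payload, users)} bytes"
    )
```

With one user, compression alone sends fewer bytes; with 30 users requesting the same data, the deduplicated total is far smaller, because only short links cross the channel after the first transfer.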

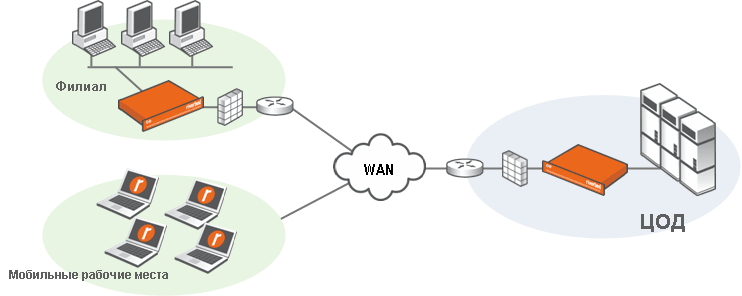

As a rule, optimizers are hardware appliances, but they can also be deployed as virtual machines. To optimize traffic on a communication channel, optimizers must be installed at both sites. They are placed before the VPN gateways (on the LAN side), since deduplicating encrypted traffic is useless.

The deduplication algorithm is as follows:

- The branch makes a request to the data center;

- The server sends data to the office;

- Before getting into the communication channel, the data passes through the optimizer on the side of the data center;

- The optimizer segments data and deduplicates it. Data is divided into blocks, each of which receives a short name - a link to the block;

- Links and data blocks are stored in local storage - the so-called dictionary;

- Links and data blocks are sent to the optimizer in the branch. Before sending, the data center optimizer also compresses the data with a compression algorithm, so the traffic does not grow even during the first (cold) transfer;

- The branch optimizer decompresses the data received from the data center, builds its own local dictionary that mirrors the data center's (data block - link), strips the links from the data, and sends the original data to the client;

- From now on, all data passing through the branch or data center optimizer is checked for duplicates. If a block matches one in the dictionary, the raw block is replaced by its short link, so a known (already transmitted) block is never sent again.
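
The steps above can be sketched in a few lines. This is a minimal illustration, not the vendor's implementation: fixed 4 KiB blocks, SHA-256 digests as links, zlib as the compressor, and the names `Optimizer`, `encode`, `decode` and `wire_size` are all assumptions.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # assumption: fixed-size blocks


class Optimizer:
    """One end of the channel; both ends keep a symmetric dictionary."""

    def __init__(self) -> None:
        self.dictionary = {}  # link (SHA-256 digest) -> raw block

    def encode(self, data: bytes) -> list:
        """Split data into blocks; send known blocks as links only."""
        message = []
        for i in range(0, len(data), BLOCK_SIZE):
            block = data[i:i + BLOCK_SIZE]
            link = hashlib.sha256(block).digest()
            if link in self.dictionary:
                message.append(("ref", link, b""))
            else:
                self.dictionary[link] = block
                message.append(("new", link, zlib.compress(block)))
        return message

    def decode(self, message: list) -> bytes:
        """Rebuild the original data and learn any new blocks."""
        out = []
        for kind, link, body in message:
            if kind == "new":
                self.dictionary[link] = zlib.decompress(body)
            out.append(self.dictionary[link])
        return b"".join(out)


def wire_size(message: list) -> int:
    """Bytes that actually cross the channel for one message."""
    return sum(len(link) + len(body) for _, link, body in message)


data_center, branch = Optimizer(), Optimizer()
data = b"".join(b"record %d: status=ok\n" % i for i in range(5000))

cold = data_center.encode(data)   # first transfer: compressed blocks + links
restored = branch.decode(cold)
warm = data_center.encode(data)   # repeat transfer: links only

print(len(data), wire_size(cold), wire_size(warm))
```

The cold transfer is already smaller than the raw data because new blocks are compressed, and the warm transfer consists of links alone.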

It remains to add that the dictionary is constantly updated, and a special algorithm keeps the most frequently requested data blocks in it.
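
The retention algorithm itself is not described here, so the following is only one plausible policy, shown to make the idea concrete: a dictionary of limited size that evicts the least-requested block when it is full. The class name `BoundedDictionary` and its methods are hypothetical.

```python
class BoundedDictionary:
    """Holds at most `capacity` blocks; evicts the least-requested block."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.blocks = {}    # link -> block
        self.requests = {}  # link -> number of successful lookups

    def get(self, link):
        """Return the block for a link, counting the request, or None."""
        if link in self.blocks:
            self.requests[link] += 1
            return self.blocks[link]
        return None

    def put(self, link, block) -> None:
        """Store a block, evicting the coldest entry if the dictionary is full."""
        if link not in self.blocks and len(self.blocks) >= self.capacity:
            coldest = min(self.blocks, key=self.requests.__getitem__)
            del self.blocks[coldest]
            del self.requests[coldest]
        self.blocks[link] = block
        self.requests.setdefault(link, 0)


d = BoundedDictionary(capacity=2)
d.put("a", b"A")
d.put("b", b"B")
for _ in range(3):
    d.get("a")        # "a" becomes the most requested block
d.put("c", b"C")      # dictionary is full, so the never-requested "b" is evicted
print(sorted(d.blocks))
```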

This is a fundamental difference from traditional caching devices. Caching devices operate at the file level: if a file has undergone any change, even a minor one, it must be transferred again in full. Optimizers work at the level of data blocks, so when a previously transferred file changes, only the changed blocks are sent over the channel, and the rest are replaced by links.
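
To make the file-level versus block-level difference concrete, here is a small sketch that edits 10 bytes in the middle of a file and counts what has to be resent. The fixed 4 KiB blocks and SHA-256 links are assumptions of the sketch; with fixed blocks an insertion would shift every later block, which is why content-defined (variable) block boundaries are a common alternative.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed fixed block size, in bytes
LINK_SIZE = 32     # length of a SHA-256 digest, in bytes


def block_links(data: bytes) -> list:
    """Return one link (SHA-256 digest) per block of the data."""
    return [
        hashlib.sha256(data[i:i + BLOCK_SIZE]).digest()
        for i in range(0, len(data), BLOCK_SIZE)
    ]


# A 102,400-byte "file" made of 25 distinct blocks.
original = b"".join(i.to_bytes(4, "big") for i in range(25_600))

# Overwrite 10 bytes in place; this touches a single block.
buffer = bytearray(original)
buffer[50_000:50_010] = b"X" * 10
edited = bytes(buffer)

known = set(block_links(original))      # blocks already in the dictionary
links = block_links(edited)
new_blocks = [link for link in links if link not in known]

file_level_bytes = len(edited)          # a file cache resends everything
block_level_bytes = len(new_blocks) * BLOCK_SIZE + len(links) * LINK_SIZE

print(f"file-level resend:  {file_level_bytes} bytes")
print(f"block-level resend: {block_level_bytes} bytes "
      f"({len(new_blocks)} changed block of {len(links)})")
```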

Another problem is that TCP speed is limited by the window size (TCP Window Size): the amount of data the sender may transmit before it receives an acknowledgment from the recipient. Compressed traffic needs fewer windows' worth of data to be transmitted, so the same payload takes fewer round trips and the effective transfer speed goes up.
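
The window limit is simple arithmetic: at most one window of data can be in flight per round trip, so throughput cannot exceed window / RTT. The sketch below works this out for a 65,535-byte window (the largest possible without TCP window scaling); the two RTT values and the 3:1 compression ratio are hypothetical figures chosen for illustration.

```python
def max_tcp_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on throughput: one full window per round trip."""
    return window_bytes * 8 / rtt_seconds


WINDOW = 65_535  # bytes, the maximum window without window scaling

lan = max_tcp_throughput_bps(WINDOW, 0.001)  # hypothetical LAN RTT: 1 ms
wan = max_tcp_throughput_bps(WINDOW, 0.100)  # hypothetical WAN RTT: 100 ms

print(f"LAN, RTT 1 ms:   {lan / 1e6:.1f} Mbit/s")
print(f"WAN, RTT 100 ms: {wan / 1e6:.1f} Mbit/s")

# If each window carries data compressed 3:1 (hypothetical ratio),
# the application sees three times as much payload per round trip.
COMPRESSION_RATIO = 3.0
print(f"WAN with 3:1 compression: {wan * COMPRESSION_RATIO / 1e6:.1f} Mbit/s of payload")
```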

To recap, it works like this:

- Device A deduplicates traffic.

- Device B reassembles the “complete picture” from its local storage.

- Both devices work symmetrically.

- Neither device affects the infrastructure or configuration of anything behind it: they are simply installed in-line, for example at the exit from the data center and at the entrance to the company's regional office.

- The devices in no way limit communication with nodes that have no such device.

Deduplication for encrypted channels

An encrypted channel is poorly suited to compression and deduplication: there is almost no practical benefit in processing traffic that is already encrypted. Therefore optimizers are installed in-line before the encryption device: the data center sends data to the optimizer, the optimizer passes it on for encryption (for example, into a secure VPN channel), on the other side the traffic is decrypted and handed to the local optimizer, which then sends it into the network. This is a standard feature of “optimizer boxes”, and none of it increases the risk of traffic compromise.
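
Why the order matters can be shown with a toy experiment: the same highly repetitive request compresses very well in the clear, but once encrypted it looks like random bytes, and two encryptions of identical data share nothing that could be deduplicated. The `toy_encrypt` function below is a stand-in written for this sketch (a SHA-256 counter-mode keystream), not a real or secure cipher, and the key, nonces and sample request are made up.

```python
import hashlib
import zlib


def toy_encrypt(key: bytes, nonce: bytes, plaintext: bytes) -> bytes:
    """XOR with a SHA-256 counter-mode keystream. Illustration only, NOT secure."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(plaintext):
        stream += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(p ^ s for p, s in zip(plaintext, stream))


plaintext = b"GET /reports/q3.xlsx HTTP/1.1\r\n" * 2000
key = b"k" * 32

# The same data sent twice, each time with a fresh nonce, as a VPN would do.
first = toy_encrypt(key, b"nonce-1", plaintext)
second = toy_encrypt(key, b"nonce-2", plaintext)

print("raw size:               ", len(plaintext))
print("compressed plaintext:   ", len(zlib.compress(plaintext)))
print("compressed ciphertext:  ", len(zlib.compress(first)))
print("ciphertexts identical?  ", first == second)
```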

Mobile Deduplication

In recent years, more and more people work with data centers directly from laptops and tablets, and they also need a lot of data (the same virtual machine images or database extracts). For them, instead of “optimizer boxes”, special software is used that takes part of the processor resources and part of the hard drive for the same purpose. In effect, we trade a slight drop in laptop performance and some disk space for cache in exchange for a faster channel. Users usually notice nothing except faster network services.

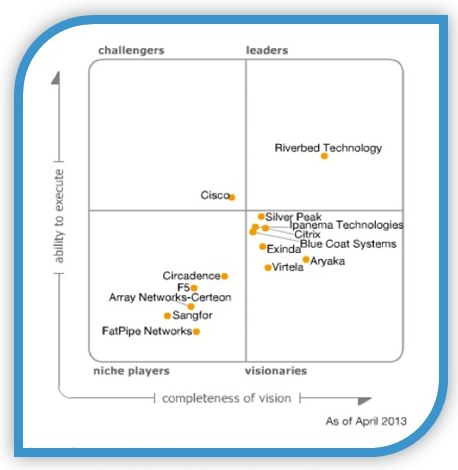

Who makes these optimizers?

We use Riverbed solutions. The company was founded in 2002 and introduced its first model of communication channel optimizers in 2004. Riverbed products and solutions, including WAN optimization, performance management, application delivery, and data warehouse acceleration, enable IT professionals to increase and manage productivity. Optimizers are very easy to integrate into the network. The easiest way is to install them in-line between the LAN and the router or VPN gateway.

Competing solutions. In 2013, Riverbed held 50% of the WAN optimization market.

From the point of view of the customer’s commercial director, these are a few boxes that, once simply plugged into the network, make slow channels 2–3 times faster and halve the channel load. That is why almost everyone loves them!

Optimizer Connection

The easiest and most reliable way is to install the optimizer in-line between the edge router and the LAN switch. If the optimizer fails, it physically bridges its LAN and WAN interfaces (bypass), and the traffic simply passes through it as through an ordinary crossover cable. The optimizer on the other side, seeing non-optimized traffic, also passes it through without processing.

Accordingly:

- Branch with an optimizer, data center with an optimizer - traffic is optimized.

- Branch without an optimizer, data center with an optimizer - the data center optimizer transparently passes the traffic unchanged.

- Branch and data center both with optimizers, but one of them has failed - the traffic is not compressed and goes “as is”.
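
The three cases reduce to one rule: traffic is optimized only when a working optimizer is present at both ends, and in every other case it passes through untouched. A minimal sketch of that rule follows; the function name `path_mode` and its parameters are hypothetical.

```python
def path_mode(branch_has_optimizer: bool, dc_has_optimizer: bool,
              branch_healthy: bool = True, dc_healthy: bool = True) -> str:
    """Return how traffic between a branch and the data center is handled."""
    branch_active = branch_has_optimizer and branch_healthy
    dc_active = dc_has_optimizer and dc_healthy
    if branch_active and dc_active:
        return "optimized"
    # A missing or failed optimizer acts like a plain cable (bypass).
    return "pass-through"


print(path_mode(True, True))                        # both ends working
print(path_mode(False, True))                       # branch has no optimizer
print(path_mode(True, True, branch_healthy=False))  # branch optimizer failed
```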

Naturally, in data centers optimizers are clustered for fault tolerance or extra capacity and are complemented by Interceptor load balancers. More on this below, when we get to specific equipment.

TCP Acceleration

TCP speed is limited by window size. A window is the amount of information that a server can send to a client before receiving confirmation of receipt.

Standard TCP behavior looks like this:

- slow ramp-up as the TCP window size grows;

- on packet loss, a sharp drop in speed (the window is halved);

- then another slow increase (the window grows again);

- another packet loss, another drop, and so on.

The orange sawtooth on the graph is standard TCP behavior.

On channels with high bandwidth but with some packet loss and a high RTT, the available bandwidth is used inefficiently: the channel is never fully loaded.
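
How much of the channel is lost this way can be estimated with the well-known Mathis approximation for standard TCP, throughput ≈ (MSS / RTT) · sqrt(3/2) / sqrt(p), where p is the packet loss rate. The sketch below applies it to a hypothetical 100 Mbit/s channel with a 100 ms RTT and 0.1% loss; those three figures are assumptions picked for illustration, and the formula is a steady-state approximation rather than an exact prediction.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_seconds: float, loss_rate: float) -> float:
    """Approximate steady-state throughput of one standard TCP connection."""
    return (mss_bytes * 8 / rtt_seconds) * math.sqrt(1.5) / math.sqrt(loss_rate)


LINK_BPS = 100e6   # hypothetical channel capacity: 100 Mbit/s
MSS = 1460         # typical TCP segment payload on Ethernet, in bytes

estimate = mathis_throughput_bps(MSS, rtt_seconds=0.100, loss_rate=0.001)
same_loss_on_lan = mathis_throughput_bps(MSS, rtt_seconds=0.001, loss_rate=0.001)

print(f"RTT 100 ms, 0.1% loss: {estimate / 1e6:.1f} Mbit/s "
      f"({100 * estimate / LINK_BPS:.1f}% of the channel)")
print(f"RTT 1 ms, 0.1% loss:   {same_loss_on_lan / 1e6:.0f} Mbit/s (not the bottleneck)")
```

With the same loss rate, the long-RTT connection is limited to a few percent of the channel, while on a LAN the limit is far above the link speed.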

Riverbed thought about the same thing. Since optimizer boxes already sit at both ends of the channel, it would be foolish not to use them to modify TCP behavior and avoid these standard problems. So optimizers can optimize traffic not only at the data level (deduplication / compression) but also at the transport level.

Here are a number of modes available to speed up TCP:

- HighSpeed TCP mode - the speed reaches its maximum much faster than with standard TCP, and on packet loss it drops less sharply than standard TCP does;

- MX-TCP (MaxTCP) mode - uses 100% of the allotted bandwidth without slowing down; when a packet is lost, no deceleration occurs. However, this mode requires QoS rules that define how much of the available bandwidth MX-TCP traffic may occupy;

- SCPS mode - designed specifically for satellite channels. Here the bandwidth is not capped the way it is for MX-TCP, and SCPS adapts well to the fluctuating characteristics of satellite links.
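
The effect of a faster increase and a gentler decrease can be illustrated with a toy window simulation. It is a deliberately simplified model: loss occurs at a fixed interval, slow start is ignored, and the three parameter sets are illustrative stand-ins chosen for this sketch, not the actual HighSpeed TCP or MX-TCP parameters. The function name `average_utilization` is hypothetical.

```python
def average_utilization(capacity: int, rounds: int, loss_every: int,
                        increase: float, decrease: float) -> float:
    """Average share of the channel used over `rounds` round trips.

    Each round trip the window grows by `increase` segments (capped at
    `capacity`); every `loss_every` round trips a loss multiplies the
    window by `decrease`.
    """
    window = 1.0
    sent = 0.0
    for rtt in range(1, rounds + 1):
        sent += min(window, capacity)
        if rtt % loss_every == 0:
            window = max(1.0, window * decrease)
        else:
            window = min(float(capacity), window + increase)
    return sent / (capacity * rounds)


# A channel that holds 1000 segments in flight, one loss every 200 round trips.
standard = average_utilization(1000, 10_000, 200, increase=1.0, decrease=0.5)
gentler = average_utilization(1000, 10_000, 200, increase=8.0, decrease=0.8)
no_backoff = average_utilization(1000, 10_000, 200, increase=8.0, decrease=1.0)

print(f"standard sawtooth (+1, x0.5):    {standard:.0%}")
print(f"faster and gentler (+8, x0.8):   {gentler:.0%}")
print(f"no backoff on loss (+8, x1.0):   {no_backoff:.0%}")
```

In this model the standard sawtooth never gets close to filling the channel, while the gentler response stays near full utilization. The no-backoff variant shows why such a mode needs an external bandwidth cap: nothing in the sender itself slows it down.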

Application optimization

Many applications are chatty, that is, they can send up to 50 packets where one would be enough. As I said above, this is a consequence of designing for local networks rather than for long-distance channels. With optimizers, the number of round trips over the channel can be reduced by a factor of 50 or more.

Here's what it looks like:

Optimizers act as transparent layer 7 (application layer) proxies for a number of the most common application protocols.

The data center optimizer acts as a client in relation to the server. The branch optimizer acts as a server in relation to clients. Thus, inefficient, “chatty” communication of applications remains on the local network. Between optimizers, application messaging takes place in a more suitable form for communication channels - the number of messages is reduced.
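
The gain from keeping the chatter local is easy to estimate, because each request-response exchange costs one round trip. The sketch below compares 50 exchanges sent directly over the channel with the same 50 exchanges handled locally at each end plus a single round trip between the optimizers. The RTT values and the idea that the whole conversation collapses into exactly one WAN round trip are assumptions made for illustration.

```python
def elapsed_seconds(round_trips: int, rtt_seconds: float) -> float:
    """Time spent waiting on the network for a series of exchanges."""
    return round_trips * rtt_seconds


LAN_RTT = 0.001   # hypothetical local network RTT: 1 ms
WAN_RTT = 0.100   # hypothetical channel RTT: 100 ms
EXCHANGES = 50    # a chatty application: 50 request-response pairs

# Without optimizers every exchange crosses the channel.
direct = elapsed_seconds(EXCHANGES, WAN_RTT)

# With optimizers the chatter stays on the LAN at both ends,
# and (by assumption) one bulk exchange crosses the channel.
proxied = (elapsed_seconds(EXCHANGES, LAN_RTT)      # client <-> branch optimizer
           + elapsed_seconds(1, WAN_RTT)            # optimizer <-> optimizer
           + elapsed_seconds(EXCHANGES, LAN_RTT))   # data center optimizer <-> server

print(f"direct over the channel: {direct:.2f} s")
print(f"through optimizers:      {proxied:.2f} s")
```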

Riverbed optimization devices can accelerate the following application protocols at layer 7:

Interestingly, the list includes encrypted applications, among them encrypted Citrix and MAPI. Optimizing encrypted traffic does not reduce security.

Examples of application acceleration. In a real network the gain depends on the communication channel: the worse the channel, the greater the acceleration that can be achieved.

Typical wiring diagram

Steelhead optimizers are placed in front of the data channel, but before the encryption devices. Data centers with special requirements also use clustering for higher reliability, plus Interceptor load balancers.

Result (example)

Green - WAN traffic. Blue - LAN traffic. Without the Riverbed optimizer, they would be the same.

The highlighted column shows the compression percentage per TCP port.

Hardware Lineup

Capacity may be expanded by license. In some cases, hardware upgrade is required to improve performance. Upgrade capabilities within the platform are indicated by green arrows.

The entry-level model suits even a small online store: it starts at 1 megabit per second and 20 channels. The flagship supports up to 150,000 simultaneously open connections on channels of 1.5 gigabits per second. If that is not enough, the Interceptor balancer is used: clusters of balancers and optimizers can handle a channel of up to 40 gigabits per second with 1 million simultaneously open connections.

How much does it cost?

The entry-level model starts at about 100 thousand rubles, a device for medium data centers costs 1.1 million rubles, and one for large data centers starts at 5.5 million rubles. The price varies considerably with the specific usage pattern, and discounts are possible, so these figures are purely approximate; it is better to check by email (the address is at the end of the post). The payback for medium and large businesses is fairly easy to estimate: you free up 30 to 60% of the channel (again, by email I can give a figure accurate to within 10%, depending on how the channel is used), and users stop complaining about sluggish applications.

More Riverbed Items:

After the channel has been optimized in the way described, we often go on to monitor and troubleshoot specific services and equipment. In practice these turn into whole detective stories, which I will cover a little later. If you are interested, subscribe to the corporate CROC blog on Habr.

Who I have deployed this for:

I am not allowed to name all the customers, but I can say that the Riverbed traffic optimization solution has been deployed at:

- the five largest representatives of the banking sector;

- a major gold mining company;

- a large logistics company;

- smaller companies.

Questions

If you are interested in something specific, ask in the comments or email AVrublevsky@croc.ru. At the same address I can send price calculations, deployment diagrams and an estimate of the optimization achievable on your channel after discussing your specific situation. An accurate figure is of course possible only after a test, but on average the error of an estimate made after such a discussion is about 10%.