Processing digital images in remote sensing of the Earth

Habr has published many articles on various image processing methods, including data classification and filtering. Many of these approaches are also used in remote sensing to process digital images of the Earth.

Between the moment an image is received from the satellite and the moment it can be analyzed, a whole cycle of procedures must be carried out to bring it into a form convenient for extracting and then analyzing visual information.


Before getting to the core, I would like to introduce a few elementary concepts. Many readers are probably already familiar with them, but for completeness we will not skip them. Those confident in their knowledge of the topic can go straight to the most interesting part.



The digital image itself and the process of obtaining it

As everyone knows, a digital image is a matrix of pixels, and the value of each pixel is obtained by averaging over four components: spatial coordinates (x and y), wavelength and time.

The matrix itself is formed as follows: solar radiation is reflected from the imaged objects, and the energy reaching the sensor’s surface is recorded and integrated, which gives the integral pixel value. The integer pixel value is obtained after this integral value is converted into an electrical signal and digitized. Each pixel stores information in binary form. The more bits (memory) allocated per pixel, the more distinct values a pixel can take, the more accurately the original continuous signal is approximated, and the more information the image can store.
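To make the bit-depth argument concrete, here is a minimal NumPy sketch (an illustration, not any sensor's actual processing chain). A synthetic continuous signal normalized to [0, 1] is quantized with b bits, which gives 2^b levels, and the worst-case error is half a quantization step. The function name `quantize` and its `full_scale` parameter are hypothetical.

```python
import numpy as np


def quantize(signal, bits, full_scale=1.0):
    """Map a continuous signal in [0, full_scale] to integer pixel values (DNs)."""
    levels = 2 ** bits                        # number of distinct values a pixel can hold
    step = full_scale / (levels - 1)          # signal width of one quantization level
    dn = np.round(np.clip(signal, 0.0, full_scale) / step).astype(np.int64)
    return dn, step


# Synthetic "analog" brightness along one scan line (not real sensor data).
x = np.linspace(0.0, 1.0, 1000)
analog = 0.5 + 0.4 * np.sin(2 * np.pi * 3 * x)

for bits in (1, 8, 11, 16):
    dn, step = quantize(analog, bits)
    max_err = np.max(np.abs(dn * step - analog))   # never more than half a step
    print(f"{bits:2d} bits: {2 ** bits:6d} levels, max error {max_err:.2e}")
```

Each extra bit doubles the number of levels and halves the step, so the approximation error shrinks accordingly.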

In CCD scanners, the detectors scan the Earth's surface and divide the continuous stream of data into pixels.

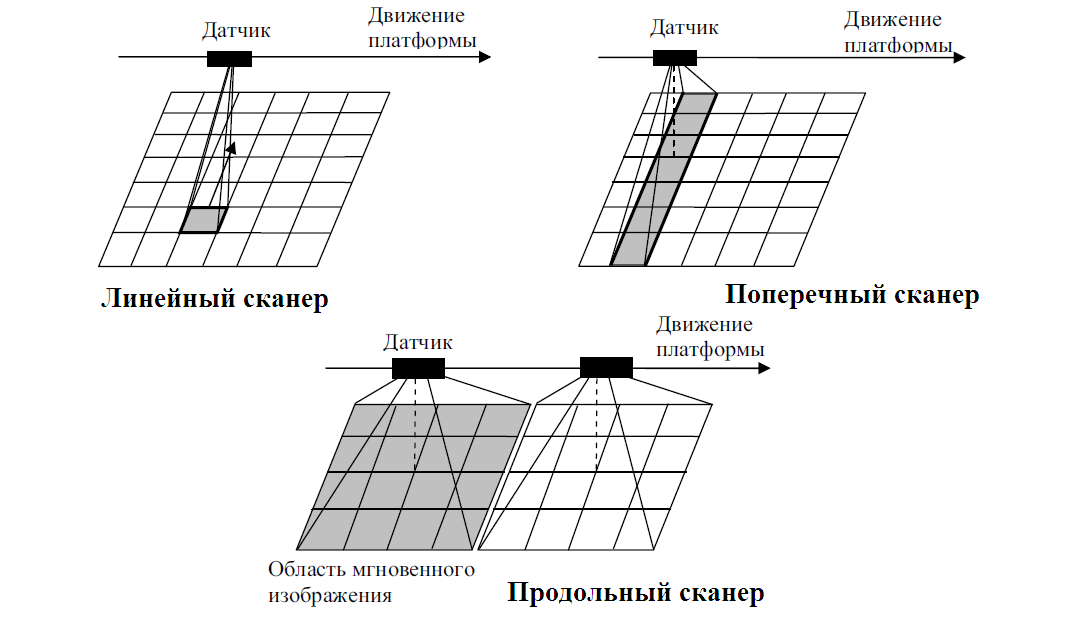

Much depends on the type of scanner, since it determines how images are acquired. There are three main types of scanners:

- A line scanner (for example, AVHRR) is the simplest type; it has only one detector element.

- A cross-track scanner (GOES, MODIS, TM) uses a row of detectors arranged along the flight path. With each cycle of the scanning mirror's motion, several parallel lines of the Earth's surface are scanned.

- Along-track scanners (IKONOS, QuickBird, SPOT) have thousands of detectors in a CCD line, so successive lines are acquired simply by the platform's motion along its orbit.

Digital images are displayed either in 8-bit grayscale or in 24-bit color, which mixes different shades of R, G and B. The original range of pixel values is converted into this limited range, and any three channels of a multispectral image can be combined as the red, green and blue components. With 8 bits per component, a pixel is displayed as one of 256^3 RGB vectors, each vector being one color. Other radiometric resolutions are also used: for example, QuickBird has 11 bits/pixel and Landsat-8 has 16 bits/pixel.
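A minimal NumPy sketch of this conversion follows. It assumes a simple linear rescaling; real software often applies one of the contrast stretches described later instead. Three 11-bit bands are scaled to 0..255 and stacked as R, G and B. The function names, band names and random data are hypothetical.

```python
import numpy as np


def to_8bit(band, in_bits):
    """Linearly rescale an integer band with in_bits of radiometric resolution to 0..255."""
    in_max = 2 ** in_bits - 1
    scaled = band.astype(np.float64) * 255.0 / in_max
    return np.clip(np.round(scaled), 0, 255).astype(np.uint8)


def rgb_composite(red_band, green_band, blue_band, in_bits):
    """Stack any three channels as R, G, B of a 24-bit (3 x 8-bit) display image."""
    return np.dstack([to_8bit(b, in_bits) for b in (red_band, green_band, blue_band)])


# Synthetic 11-bit bands (QuickBird-like bit depth); random values, not real data.
rng = np.random.default_rng(0)
nir, red, green = (rng.integers(0, 2 ** 11, size=(4, 4)) for _ in range(3))

# A false-color composite: near infrared shown as red, red as green, green as blue.
composite = rgb_composite(nir, red, green, in_bits=11)
print(composite.shape, composite.dtype)   # (4, 4, 3) uint8
print(256 ** 3)                            # 16777216 displayable RGB colors
```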

How spectral channels are formed in optical sensors:

The light beam received by the sensor is split into several beams. Each beam travels its own optical path and passes through a spectral filter. Prisms and diffraction gratings can also be used to separate the spectral ranges.

Preliminary image processing procedures

The cycle of preliminary image processing includes the following procedures:

- Radiometric correction - eliminates variations in pixel brightness caused by faulty detector operation and by the influence of terrain and atmosphere.

- Atmospheric correction - compensates for the influence of the atmosphere, which, through its transparency windows, also determines where the imaging spectral bands can be placed.

- Geometric correction - includes correcting image distortions such as striping and dropped lines, as well as geocoding: referencing the image so that each image point is assigned the coordinates of the corresponding point on the ground. Mathematically, georeferencing is usually done with power polynomials. Its accuracy improves when ground control points are available; the image is then, as it were, “pinned” to them. After geocoding, the brightness values of the transformed image are determined by resampling methods: nearest neighbor, bilinear interpolation or cubic convolution.

- Orthorectification - eliminates image errors caused by differences in terrain elevation; as a result, many distortions of the central projection are removed from the resulting image.
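The text does not say how detector-related brightness variation is removed. One common technique is moment matching, shown here as an assumption rather than the author's method. In a scanner where each detector records every k-th line, the lines of each detector are rescaled so that their mean and standard deviation match those of the whole image. The function name, detector count and simulated gains and offsets are hypothetical.

```python
import numpy as np


def destripe(image, n_detectors):
    """Moment-matching destriping (one common technique, used here as an example).

    Assumes line i of the image was recorded by detector i % n_detectors.
    Each detector's lines are linearly rescaled so that their mean and standard
    deviation match those of the whole image.
    """
    img = image.astype(np.float64)
    target_mean, target_std = img.mean(), img.std()
    out = np.empty_like(img)
    for d in range(n_detectors):
        lines = img[d::n_detectors]
        gain = target_std / lines.std()          # assumes the detector's lines are not constant
        out[d::n_detectors] = (lines - lines.mean()) * gain + target_mean
    return out


# Synthetic scene and hypothetical per-detector gain/offset errors (not real calibration data).
rng = np.random.default_rng(1)
scene = rng.normal(100.0, 10.0, size=(64, 32))
gains = np.array([1.0, 1.1, 0.9, 1.05])
offsets = np.array([0.0, 5.0, -4.0, 2.0])
detector = np.arange(scene.shape[0]) % 4                 # which detector recorded each line
striped = scene * gains[detector][:, None] + offsets[detector][:, None]
fixed = destripe(striped, n_detectors=4)

print([round(float(striped[d::4].mean()), 1) for d in range(4)])   # per-detector means differ
print([round(float(fixed[d::4].mean()), 1) for d in range(4)])     # now (numerically) equal
```

This only equalizes statistics between detectors. It assumes every detector sees statistically similar terrain, which holds for large homogeneous scenes but not always in practice.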
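The geocoding step can be sketched as follows. The sketch assumes a first-order polynomial, i.e. an affine transform and the simplest polynomial of this kind, fitted to ground control points by least squares (`np.linalg.lstsq`). Nearest-neighbor or bilinear resampling follows; cubic convolution is omitted for brevity. The control-point coordinates and the function names are made up for illustration.

```python
import numpy as np


def fit_polynomial(src_xy, dst_xy):
    """Least-squares first-order polynomial: u = a0 + a1*x + a2*y (same form for v).

    src_xy, dst_xy: (n, 2) arrays of matching control-point coordinates, n >= 3.
    Returns a (3, 2) coefficient array.
    """
    x, y = src_xy[:, 0], src_xy[:, 1]
    design = np.column_stack([np.ones_like(x), x, y])          # terms 1, x, y
    coeffs, *_ = np.linalg.lstsq(design, dst_xy, rcond=None)
    return coeffs


def apply_polynomial(coeffs, xy):
    """Evaluate the fitted polynomial at points xy of shape (..., 2)."""
    x, y = xy[..., 0:1], xy[..., 1:2]
    return coeffs[0] + x * coeffs[1] + y * coeffs[2]


def resample(image, cols, rows, method="nearest"):
    """Read image values at fractional (col, row) positions; positions outside give 0."""
    if method not in ("nearest", "bilinear"):
        raise ValueError(f"unknown method: {method}")
    h, w = image.shape
    out = np.zeros(cols.shape, dtype=np.float64)
    if method == "nearest":
        c, r = np.rint(cols).astype(int), np.rint(rows).astype(int)
        ok = (c >= 0) & (c < w) & (r >= 0) & (r < h)
        out[ok] = image[r[ok], c[ok]]
        return out
    # Bilinear: distance-weighted mean of the 2x2 neighborhood around each position.
    c0, r0 = np.floor(cols).astype(int), np.floor(rows).astype(int)
    ok = (c0 >= 0) & (c0 < w - 1) & (r0 >= 0) & (r0 < h - 1)
    c0, r0 = c0[ok], r0[ok]
    dc, dr = cols[ok] - c0, rows[ok] - r0
    out[ok] = (image[r0, c0] * (1 - dc) * (1 - dr) + image[r0, c0 + 1] * dc * (1 - dr)
               + image[r0 + 1, c0] * (1 - dc) * dr + image[r0 + 1, c0 + 1] * dc * dr)
    return out


# Hypothetical ground control points: map coordinates and the matching image (col, row).
map_xy = np.array([[500.0, 900.0], [700.0, 900.0], [500.0, 700.0], [700.0, 700.0]])
img_cr = np.array([[1.0, 1.0], [9.0, 1.5], [0.5, 9.0], [8.5, 9.5]])
map_to_image = fit_polynomial(map_xy, img_cr)    # inverse mapping, as used for resampling

image = np.arange(100, dtype=float).reshape(10, 10)                        # synthetic band
gx, gy = np.meshgrid(np.linspace(500, 700, 8), np.linspace(900, 700, 8))   # output map grid
src = apply_polynomial(map_to_image, np.dstack([gx, gy]))   # source position of each output pixel
nearest = resample(image, src[..., 0], src[..., 1], method="nearest")
bilinear = resample(image, src[..., 0], src[..., 1], method="bilinear")
```

The mapping is fitted from map coordinates back to image coordinates. Every output pixel then looks up its source position, which avoids holes in the geocoded image.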

Next comes image enhancement, which includes:

- Spectral transformations, which work with the histogram: a graph showing how many pixels of the image have each spectral brightness value. Spectral transformations change the image contrast. There are a number of methods to increase it, for example:

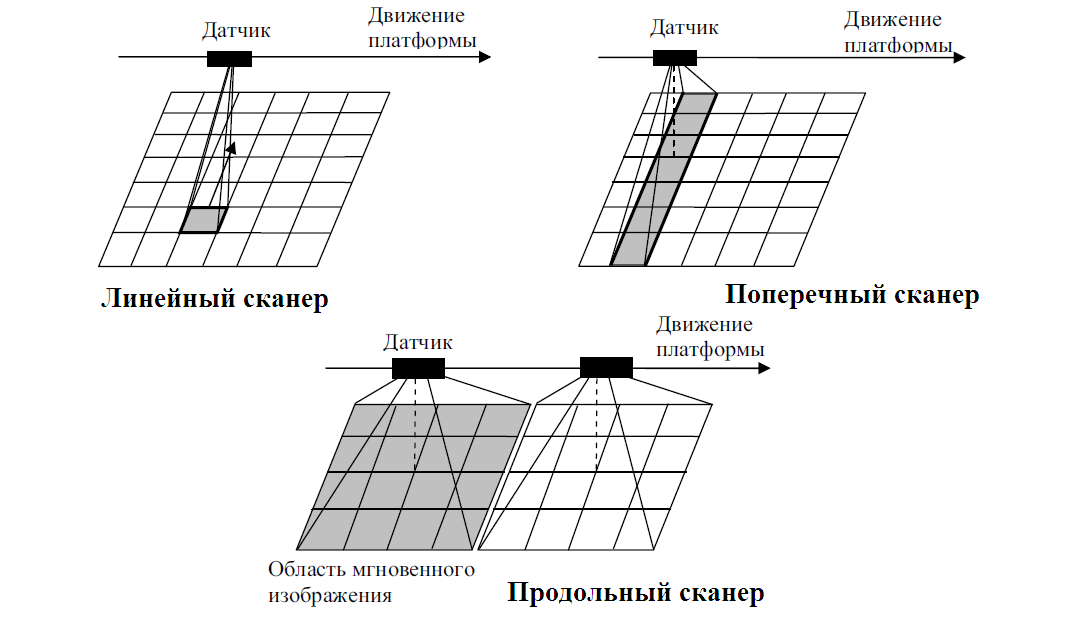

- Linear histogram stretching - all brightness values are assigned new values so that they cover the entire possible range from 0 to 255:

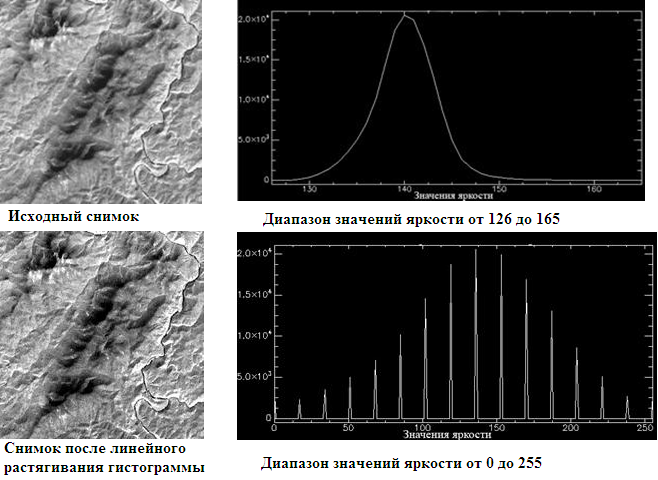

- Histogram normalization - only the most intense (most informative) section of the histogram is stretched over the entire range of brightness values:

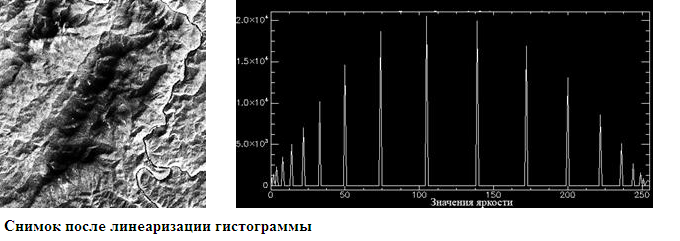

- Histogram linearization (equalization) - each pixel is assigned a new brightness value so that each brightness level has approximately the same number of pixels.


- Filtering - enhances the rendering of objects, removes noise, emphasizes structural lines, smooths the image and much more, depending on the task. Filtering is based on the concept of a sliding window: a square matrix of weight coefficients (usually 3×3 or 5×5). Each pixel's brightness value is recalculated as follows: the window moves from pixel to pixel, and the pixel at its center is assigned a new value computed as a function of the values of the surrounding pixels. The window thus “slides” across all pixels of the image, changing their values. The properties of the resulting image depend on the chosen weights. Habr user UnickSoft described filtering in more detail in his post.

- The Fourier transform improves image quality by decomposing the image into spatial-frequency components. The spatial distribution of brightness is represented as a linear combination of periodic sine and cosine functions with given characteristics in the frequency domain. For example, to remove noise, it is enough to identify the frequencies at which it occurs and suppress them.
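The three contrast methods above can be sketched in NumPy as follows. This is an illustration with assumptions. "Normalization" is implemented as a percentile clip, by default from the 2nd to the 98th percentile, which is an arbitrary choice. Equalization uses the cumulative histogram as a lookup table for an 8-bit band. All function names are hypothetical.

```python
import numpy as np


def linear_stretch(band, low=None, high=None):
    """Map [low, high] linearly onto 0..255 (defaults: band minimum and maximum)."""
    b = band.astype(np.float64)
    low = b.min() if low is None else low
    high = b.max() if high is None else high
    out = (b - low) * 255.0 / (high - low)
    return np.clip(np.round(out), 0, 255).astype(np.uint8)


def percentile_stretch(band, lower_pct=2.0, upper_pct=98.0):
    """Stretch only the most populated part of the histogram over 0..255."""
    low, high = np.percentile(band, [lower_pct, upper_pct])
    return linear_stretch(band, low, high)


def equalize(band, levels=256):
    """Histogram equalization of an integer band with values in 0..levels-1."""
    hist = np.bincount(band.ravel(), minlength=levels)
    cdf = np.cumsum(hist) / band.size                      # share of pixels at or below each value
    lut = np.round(cdf * (levels - 1)).astype(np.uint8)    # new value for each old value
    return lut[band]


rng = np.random.default_rng(2)
dull = rng.integers(90, 131, size=(50, 50))                # synthetic low-contrast 8-bit band
stretched = linear_stretch(dull)
normalized = percentile_stretch(dull)
equalized = equalize(dull)
for name, img in [("original", dull), ("linear", stretched),
                  ("percentile", normalized), ("equalized", equalized)]:
    print(f"{name:10s} min={int(img.min()):3d} max={int(img.max()):3d}")
```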
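The sliding-window idea from the filtering item can be sketched as follows: each output pixel is the weighted sum of its neighborhood under a 3×3 window. Border pixels are handled by replicating the edge, which is one of several possible choices. The two kernels are standard textbook examples, not taken from the source: a mean filter for smoothing and a Laplacian for emphasizing lines. The function name `filter_window` is hypothetical.

```python
import numpy as np


def filter_window(image, kernel):
    """Slide a square weight window over the image.

    Each pixel gets the weighted sum of the pixels under the window centered on it
    (correlation; identical to convolution for the symmetric kernels used here).
    Borders are handled by replicating the edge pixels.
    """
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(image.astype(np.float64), pad, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dr in range(k):
        for dc in range(k):
            out += kernel[dr, dc] * padded[dr:dr + h, dc:dc + w]
    return out


smooth = np.full((3, 3), 1.0 / 9.0)                  # mean filter: suppresses noise
laplacian = np.array([[0.0, -1.0, 0.0],
                      [-1.0, 4.0, -1.0],
                      [0.0, -1.0, 0.0]])             # responds to edges and lines

rng = np.random.default_rng(4)
image = np.zeros((20, 20))
image[:, 10:] = 100.0                                # vertical boundary between two areas
noisy = image + rng.normal(0.0, 10.0, image.shape)   # synthetic noise
smoothed = filter_window(noisy, smooth)
edges = filter_window(image, laplacian)
print(np.nonzero(np.abs(edges).sum(axis=0))[0])     # columns where the boundary is detected
```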
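The noise-removal idea from the Fourier item can be sketched with NumPy's FFT. The sketch assumes the noise is periodic (for example, striping) and therefore shows up as a pair of isolated peaks in the spectrum. This simple notch filter zeroes the strongest peaks outside a protected low-frequency zone around the center. `notch_filter`, `n_peaks` and `protect` are hypothetical names whose values would need tuning on real data.

```python
import numpy as np


def notch_filter(image, n_peaks=2, protect=3):
    """Remove periodic noise by zeroing the strongest peaks of the 2-D spectrum.

    The (2*protect+1)^2 block around the zero frequency is never touched, so the
    mean brightness and large-scale structure survive. A real sinusoidal pattern
    gives two symmetric peaks, hence n_peaks=2 by default.
    """
    spectrum = np.fft.fftshift(np.fft.fft2(image))        # zero frequency at the center
    magnitude = np.abs(spectrum)
    cy, cx = image.shape[0] // 2, image.shape[1] // 2
    magnitude[cy - protect:cy + protect + 1, cx - protect:cx + protect + 1] = 0.0
    for _ in range(n_peaks):
        py, px = np.unravel_index(np.argmax(magnitude), magnitude.shape)
        spectrum[py, px] = 0.0                           # notch out this frequency
        magnitude[py, px] = 0.0
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum)))


# Synthetic scene: smooth brightness variation plus horizontal stripes (8 cycles per 64 lines).
y, x = np.mgrid[0:64, 0:64]
background = 100.0 + 10.0 * np.cos(2 * np.pi * x / 64)
noisy = background + 20.0 * np.sin(2 * np.pi * 8 * y / 64)
cleaned = notch_filter(noisy)
print(np.abs(noisy - background).max(), np.abs(cleaned - background).max())
```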

The final stage of working with images - interpretation

Image interpretation is the process of detecting and recognizing objects and terrain phenomena in an image. It can be either manual, that is, based on a visual (human) assessment of the image, or machine-based (automatic). The latter, I suspect, is of great interest to many Habr readers. Machine interpretation essentially comes down to various classification mechanisms. First, all pixels (their spectral brightness values) are represented as vectors in the space of spectral features. By analyzing the quantitative relationships between the spectral brightnesses of different objects, the pixels are divided into classes. Image classification is divided into supervised classification (with training) and unsupervised classification (without training).
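In practice, representing pixels as vectors usually amounts to reshaping a multiband image of shape (rows, cols, bands) into a matrix with one row per pixel and one column per band. Classification results are then reshaped back into a map. A minimal NumPy sketch with a hypothetical 4-band image; the "classification" here is a dummy placeholder:

```python
import numpy as np

rows, cols, bands = 3, 4, 4
cube = np.arange(rows * cols * bands, dtype=float).reshape(rows, cols, bands)  # synthetic image

# One row per pixel, one column per spectral band: points in spectral feature space.
pixels = cube.reshape(-1, bands)
print(pixels.shape)      # (12, 4)

# Any per-pixel result (here a dummy "class" = index of the brightest band) maps back to the grid.
labels = pixels.argmax(axis=1)
class_map = labels.reshape(rows, cols)
print(class_map.shape)   # (3, 4)
```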

Supervised classification

Supervised classification (classification with training) assumes that a reference exists against which the brightness of each pixel is compared. With several predefined references, the objects in the image end up divided into classes. This classification works only if the objects shown in the image are known in advance, the classes are clearly distinguishable, and their number is small.

Here are just a few of the methods that can be used in supervised classification:

- Minimum distance method - pixel brightness values are treated as vectors in the space of spectral features. The spectral distance between a pixel vector and each reference vector is calculated as the square root of the sum of squared differences between their components (in other words, the Euclidean distance between them). Pixels are assigned to classes depending on whether this distance exceeds a set threshold: if the distance is below the threshold, the pixel can be assigned to the class of that reference:

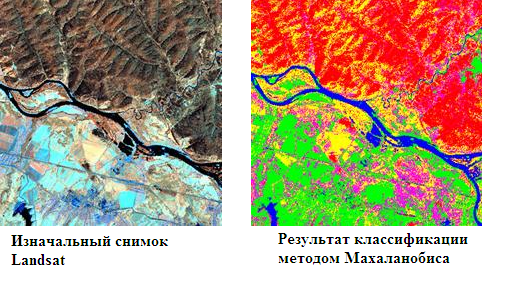

- Mahalanobis distance method - very similar to the first method, except that classification uses the Mahalanobis distance instead of the Euclidean distance. The Mahalanobis distance takes into account the variance of the reference's brightness values (more precisely, its covariance matrix). As a result, if a pixel is at equal Euclidean distance from two references, the class whose reference sample has the larger variance wins:

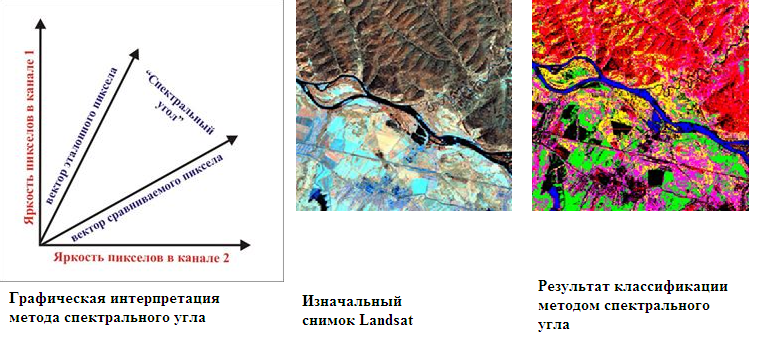

- Spectral angle method - first, a maximum value of the spectral angle (the angle between the reference vector and the pixel vector) is set. The spectral angle is then computed, and, as with the Euclidean distance, if it is smaller than the specified maximum, the pixel is assigned to the class of the reference it is compared with.
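The three supervised methods can be sketched in NumPy as below. This is an illustration, not the implementation used by any particular software. The two-band references and covariance matrices are hypothetical values chosen to reproduce the tie case described for the Mahalanobis method. In practice they would be computed from training areas as `train.mean(axis=0)` and `np.cov(train, rowvar=False)`. The label -1 marks a pixel that is left unclassified because it exceeds the distance or angle threshold.

```python
import numpy as np


def min_distance(pixels, refs, max_dist=np.inf):
    """Minimum-distance classifier: nearest reference by Euclidean distance.

    pixels: (n, bands); refs: (k, bands) mean vectors of the reference areas.
    Returns class indices; -1 means no reference is closer than max_dist.
    """
    d = np.linalg.norm(pixels[:, None, :] - refs[None, :, :], axis=2)   # (n, k)
    labels = d.argmin(axis=1)
    labels[d.min(axis=1) > max_dist] = -1
    return labels


def mahalanobis(pixels, refs, covs):
    """Nearest reference by Mahalanobis distance; covs: (k, bands, bands)."""
    d = np.empty((len(pixels), len(refs)))
    for k, (m, c) in enumerate(zip(refs, covs)):
        diff = pixels - m
        d[:, k] = np.sqrt(np.einsum("ij,jk,ik->i", diff, np.linalg.inv(c), diff))
    return d.argmin(axis=1)


def spectral_angle(pixels, refs, max_angle):
    """Spectral angle mapper: smallest angle (radians) between pixel and reference vectors."""
    cos = pixels @ refs.T / (np.linalg.norm(pixels, axis=1)[:, None] * np.linalg.norm(refs, axis=1))
    angles = np.arccos(np.clip(cos, -1.0, 1.0))
    labels = angles.argmin(axis=1)
    labels[angles.min(axis=1) > max_angle] = -1
    return labels


refs = np.array([[20.0, 30.0], [60.0, 30.0]])        # hypothetical reference mean vectors
covs = np.array([np.eye(2), 4.0 * np.eye(2)])        # the second class is more variable
# The third pixel is 20 units (Euclidean) from both references.
pixels = np.array([[22.0, 31.0], [58.0, 29.0], [40.0, 30.0], [200.0, 200.0]])

print(min_distance(pixels, refs, max_dist=10.0))
print(mahalanobis(pixels, refs, covs))           # the tie goes to the higher-variance class
print(spectral_angle(pixels, refs, max_angle=0.1))
```

The spectral angle ignores overall brightness: multiplying a pixel vector by a constant does not change its angle to the references.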

Unsupervised classification

Unsupervised classification (classification without training) distributes pixels into classes fully automatically, based on statistics of the pixel brightness distribution. It is used when it is not known in advance how many objects are present in the image, or when the number of objects is large: the machine outputs the resulting classes, and we then decide which objects they correspond to.

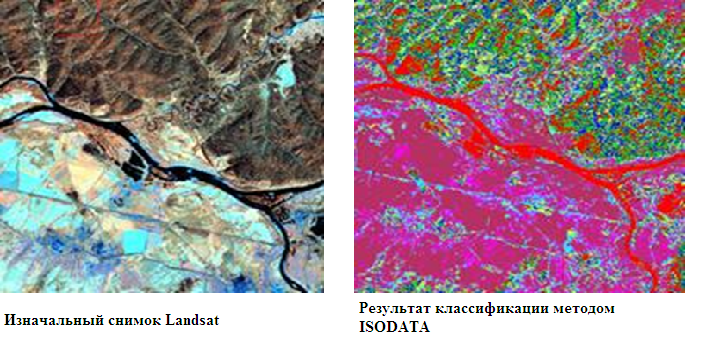

- ISODATA method (Iterative Self-Organizing Data Analysis Technique Algorithm) - based on cluster analysis by successive approximations. Pixel brightness values are treated as vectors in the space of spectral features, and the closest vectors are assigned to the same class. For each spectral band, the statistical parameters of the brightness distribution are calculated. The pixels are divided into some number n of equal ranges, each with its own mean value. For each pixel, the spectral distance to these mean values is calculated, and the pixel is assigned to the cluster whose mean is closest. This is the first iteration. From the second iteration on, the actual mean of each cluster is calculated. Each new iteration refines the boundaries of the future classes.

- The K-means method is similar to the previous one, except that the initial means are specified in advance (which is possible only if the objects in the image are easy to interpret).
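The K-means variant can be sketched in NumPy as below. The analyst supplies the initial means, pixels are assigned to the nearest mean, and the means are recomputed until they stop changing. This is plain K-means only: a full ISODATA implementation additionally splits and merges clusters between iterations, which is omitted here. The two-band synthetic clusters, the starting means and the function names are hypothetical.

```python
import numpy as np


def assign(pixels, means):
    """Index of the nearest mean (Euclidean distance in spectral feature space)."""
    d = np.linalg.norm(pixels[:, None, :] - means[None, :, :], axis=2)
    return d.argmin(axis=1)


def kmeans(pixels, initial_means, max_iter=50):
    """Plain K-means with analyst-supplied starting means.

    pixels: (n, bands); initial_means: (k, bands). Returns (labels, means).
    """
    means = np.asarray(initial_means, dtype=np.float64).copy()
    for _ in range(max_iter):
        labels = assign(pixels, means)
        # Recompute each cluster mean; an empty cluster keeps its previous mean.
        new_means = np.array([pixels[labels == k].mean(axis=0) if np.any(labels == k) else means[k]
                              for k in range(len(means))])
        converged = np.allclose(new_means, means)
        means = new_means
        if converged:
            break
    return assign(pixels, means), means


rng = np.random.default_rng(3)
dark = rng.normal([20.0, 10.0], 2.0, size=(100, 2))      # synthetic dark cluster (2 bands)
bright = rng.normal([80.0, 120.0], 5.0, size=(100, 2))   # synthetic bright cluster
pixels = np.vstack([dark, bright])
labels, means = kmeans(pixels, initial_means=np.array([[0.0, 0.0], [100.0, 100.0]]))
print(means)
```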

Preprocessing, image enhancement and interpretation are each a huge field for discussion, and each could be the subject of an entire article (or several). If you are interested in a specific topic, please leave your suggestions in the comments. A follow-up post is planned on the use of various indices, such as the NDVI vegetation index, to improve the interpretation and identification of objects.

The article uses information from the site , as well as from the following sources: 1 and 2.

P.S. Digital data can be downloaded for free from the US Geological Survey website.

For your own image processing experiments, there is free demo software (with limited features compared to the full version, but enough to get started) and one more software package.