Testers - a supporting role?

In the countries of the former USSR, testers have come to be seen as a supporting role:

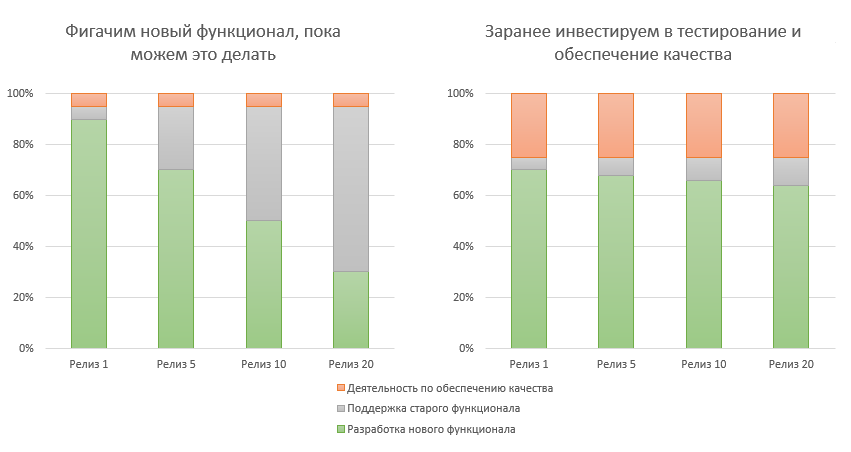

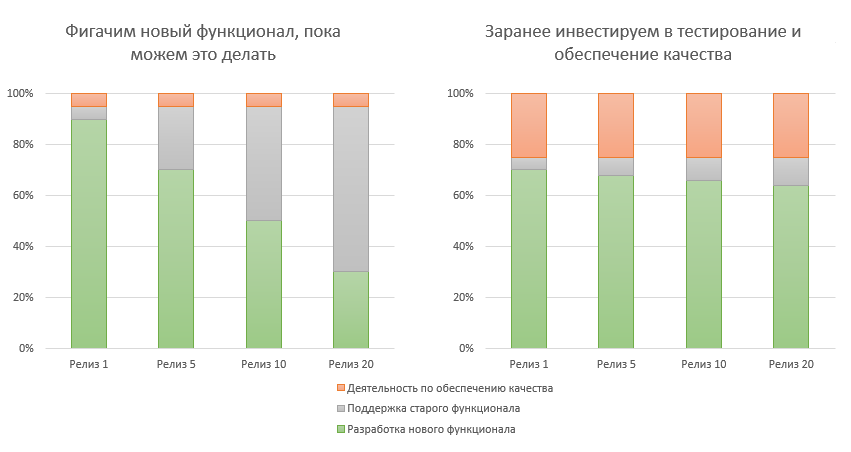

- Employers are ready to hire as a tester anyone who can confidently press buttons.

- Testers rarely have a say in the fate of the project or in decisions on requirements and deadlines.

- Testers are brought in as late as possible, when it is time to “click through” the product and “look for errors”.

- With the exception of a small number of product companies, most employers offer testers salaries 1.5-2 times lower than those of developers.

Why this happens is quite understandable: few people have met a qualified tester in person, testers do not produce a tangible result (they don’t write the product), and in general it is customary to save on everything we can. A more interesting question is what this approach leads to. Let's look at some examples.

Let's save on salary

Everything is clear here. For a project to bring in more profit, it must sell better and cost less to produce.

OK, let's hire a low-cost testing specialist (most often a student or a fresh graduate) who will poke buttons and log errors in the tracking system. When the workload grows and he can no longer cope, we will hire a second one, and gradually we will build a whole testing department. At first glance everything looks fine, if not for a few little things:

- Unskilled testers file bugs without detailed analysis. Such bugs are hard to localize and fix. Suppose that for each of the 50 bugs filed per week, developers spend an average of 15 extra minutes on analysis. Barely noticeable trifles! Yet that adds up to some 600 developer hours per year, or 3.5 person-months!

- Over time, as the code you support grows, you need regular regression testing to verify that nothing has broken. Your student testers purposefully and methodically run the same tests from release to release, only occasionally finding errors (hello, pesticide paradox!). When someone (most often the PM) comes up with the idea of automation, these guys will write you several hundred copy-pasted scripts, and then, instead of testing manually, they will spend each release updating autotests. At some point, maintaining all this happiness will require more testers: somewhere between a lot and a whole lot. And a lot is always expensive.

- In the end, despite the growing number of testers, you will still get errors in production: angry users, lost trust, wasted time. In short, you will keep spending excess resources.
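The arithmetic behind the first point can be checked with a short sketch. The 50 bugs per week and the 15 extra minutes come from the text; the 48 working weeks per year and the 168 working hours per person-month are assumptions made here for illustration, and with them the totals land close to the figures quoted above.

```python
# Rough yearly cost of poorly analyzed bug reports.
BUGS_PER_WEEK = 50            # from the text
EXTRA_MINUTES_PER_BUG = 15    # from the text
WORKING_WEEKS_PER_YEAR = 48   # assumption: 52 weeks minus vacation and holidays
HOURS_PER_PERSON_MONTH = 168  # assumption: 21 working days of 8 hours


def extra_analysis_cost(bugs_per_week, minutes_per_bug, weeks, hours_per_month):
    """Return (hours per year, person-months per year) lost to extra analysis."""
    hours_per_year = bugs_per_week * minutes_per_bug * weeks / 60
    return hours_per_year, hours_per_year / hours_per_month


hours, person_months = extra_analysis_cost(
    BUGS_PER_WEEK,
    EXTRA_MINUTES_PER_BUG,
    WORKING_WEEKS_PER_YEAR,
    HOURS_PER_PERSON_MONTH,
)
print(f"{hours:.0f} developer hours per year, "
      f"about {person_months:.1f} person-months")
```

Changing the assumed working weeks or hours per month shifts the result slightly, but the order of magnitude stays the same: several person-months a year spent on nothing but re-analysis.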

Sooner or later, every PM comes to understand that testers need to be properly qualified, and goes looking for stronger people (who, however, cannot always catch up and untangle the mess that has piled up before them). Eventually capable testers do appear: they design tests properly and build a suitable automation framework. All is well, but testers are still just testers. Therefore,

Testers should not be allowed to access the product too early.

“First we will write a version worthy of testing, and then they will only have to check that everything is fine and log the little things they find!”

Here, for some reason, the problems begin again:

- Brought into the process late, testers do not have time to get to know the domain. They lack an understanding of the business scenarios, the user's work environment, and the reasons behind many project, architectural and design decisions. Around this stage friction begins over differing views of how and what should be implemented, because the testers either lack knowledge or see things differently, yet took no part in working out the design decisions. Congratulations, a lot has changed: once again you have low-quality testing, mutual dissatisfaction, and no time left for serious changes to the product.

- After spending a long time on your project (which many capable testers simply will not do under such conditions), testers begin to understand the domain much better. The chances of success grow if they are involved in developing requirements and talking to customers. But you keep bringing testing in at the last stages, because that is how it is done? Most likely one of two things awaits you: if there is not enough time for testing, errors will be missed; if you can move the deadlines, you will simply have to make too many major changes. Of course, it is just bug fixing, that's fine ... But ask yourself a simple question: how could so many changes have been avoided, especially in the last stages of the release?

Okay, smart people learn from mistakes, at least from their own. Looking at all this happiness, you realize that something is wrong. So you bring testers into the project early: they prepare unit tests while the integrated solution is not yet ready, they discuss decisions with the team, and in the hot pre-release season you no longer waste time on discussions and belated changes to the product.

And everything could be fine if it were not for the next “but”:

Testers cannot affect product quality.

Given enough skill and motivation, testers can evaluate quality. With good communication skills, they can also justify the importance of certain changes. But affect the result?

Medicine knows of no case in which an ultrasound machine cured anything, and testing is just diagnostics. At this stage, if your testing team still has competent employees whose spirit is not yet broken, new friction begins:

- For more competent automation, the product needs easily maintainable locators. Pffff! “More extra time spent on testing again!” the PM will say, and will postpone the task until that never-arriving time when the team has nothing else to do.

- To evaluate test coverage and its weak areas more accurately, we need detailed, decomposed requirements. Pffff! “Enough bureaucracy, we are agile!”

- We need to understand users better, involve the target audience while business scenarios are being worked out, and evaluate usability. Pffff! “That will push the release back by 2 weeks, it’s better to redo things after user feedback!”
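The first request in this list, maintainable locators, can be illustrated with a small sketch. It assumes the developers agree to put a dedicated attribute on the elements that tests care about; the attribute name `data-testid`, the markup, and the helper names below are all hypothetical, and only the Python standard library is used so that the example runs on its own.

```python
from html.parser import HTMLParser


class StableLocatorIndex(HTMLParser):
    """Index elements by a dedicated test attribute (hypothetical: data-testid)."""

    def __init__(self):
        super().__init__()
        self.by_test_id = {}

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        test_id = attributes.get("data-testid")
        if test_id is not None:
            self.by_test_id[test_id] = (tag, attributes)


def find_by_test_id(html, test_id):
    """Return (tag, attributes) for the element with this test id, or None."""
    index = StableLocatorIndex()
    index.feed(html)
    return index.by_test_id.get(test_id)


# Two versions of the same page: the layout, CSS class and label all changed.
OLD_LAYOUT = (
    '<form><div><button data-testid="login-submit">Log in</button></div></form>'
)
NEW_LAYOUT = (
    '<form><section><p>Welcome</p><span>'
    '<button class="primary" data-testid="login-submit">Sign in</button>'
    '</span></section></form>'
)

for layout in (OLD_LAYOUT, NEW_LAYOUT):
    tag, _ = find_by_test_id(layout, "login-submit")
    print("found:", tag)
```

A locator tied to position in the markup (for example, "the first button inside the first div of the form") would have to be rewritten after the redesign, while the lookup by test id keeps working. The cost is a few attributes added by developers, which is exactly the kind of investment in testability the PM in the example refuses to make.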

Gradually, all the good intentions go up the chimney, and instead of ensuring quality, the most that testers can do is promptly report the errors they find. PMs declare the need to improve quality, but in practice they are not ready to invest in it, and things slowly collapse. Supporting old functionality costs more and more. I tried to depict the difference in how team resources are distributed over time under the two approaches in the diagram:

And the sooner you start investing resources in quality and efficient processes, the more resources you ultimately have left for developing new functionality! No matter how unexpected that may sound.

Conclusions

To summarize:

- An autotest developer is a developer. A test analyst is an analyst. If you hire people for testing with lower qualification requirements than you set for developers and analysts, you are gradually digging a hole for your whole team.

- Testing is part of development, not the stage that follows it.

- Testing and quality assurance cannot be left to testers alone. The product and the process must support testability and improvement, and if you do not invest in this from the very beginning, you will pay much more later: in difficulties with support, with making changes, with testing, and with communicating with customers.

It is still rare, but sensible quality assurance processes are beginning to appear in the ex-USSR. Are you ready to change something for the better?