Scaling is easy. Part Three - Strategies

In the previous parts (here and here) we talked about the basic architectural principles for building scalable portals. Today we continue with the optimization of a well-built portal. So: scaling strategies.

The potential for local optimization is very limited.

Caches are a good way to increase the throughput of a single component or service. But every optimization eventually reaches its limit. That is, at the latest, the moment to think about how to run multiple instances of your services, in other words, how to scale your architecture. Different types of nodes scale differently. The general rule is this: the closer a component is to the user, the easier it is to scale.

Performance is not the only reason to work on scaling. A system's availability also depends to a large extent on whether we can run several instances of each component in parallel and thereby survive the loss of any part of the system. The pinnacle of scaling is being able to administer the system elastically, that is, to adjust resource usage to the traffic.

Scaling the presentation layer is usually easy. This is the layer that contains the web applications running in a web server or servlet container (for example, Tomcat or Jetty) and that is responsible for generating markup, i.e. HTML, XML or JSON.

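You can simply add and remove servers as needed, as long as each web server:

- is stateless, or

- has state that can be recovered, for example because it consists entirely of caches, or

- holds cache state that belongs to specific users, and the same user is guaranteed to always reach the same server (session stickiness).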

Scaling the application tier, the level where services run, is more difficult. But before moving on to the application tier, let's look at the tier behind it: the database.

The sad truth about scaling through the database is that it does not work. Representatives of various database vendors try from time to time to convince us that this time they really can scale, but at the most crucial moment they will let us down. A small disclaimer: I'm not saying that you don't need database clusters or master/slave replication. There are many reasons to use clusters and replicas, but performance is not among them.

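This is the main reason that applications scale so poorly through databases: the main job of a database is to store data safely (ACID and all that). Reading data is much harder for it (before shouting "how?" and "why?", think: why else would we need so many indexers like Lucene, Solr or Elasticsearch?). Since we cannot scale through the database, we have to scale through the application tier. There are many reasons why this works well; I will name two:

- This is the tier where most of the knowledge about the application and its data is concentrated. We can scale knowing what the application does, how it does it, and how it is used.

- Here we can work with the tools of a programming language, which are much more powerful than the tools available at the database level.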

There are different strategies for scaling services.

Scaling strategies.

First you need to determine what the state of a service is. The state of a service instance is the information that is known only to that instance and that therefore distinguishes it from the other instances.

A service instance is typically a Java VM in which one copy of the service runs. The information that makes up its state is usually the data held in its cache. If a service has no data of its own at all, it is stateless. To reduce the load on a service, you can run several instances of it. Strategies for distributing traffic across these instances are, broadly speaking, scaling strategies.

The simplest strategy is Round-Robin. Here each client "talks" to every instance of the service; the instances are used in turn, one after another, in a circle. This strategy works well as long as the services are stateless and perform simple tasks, for example sending emails through an external interface.

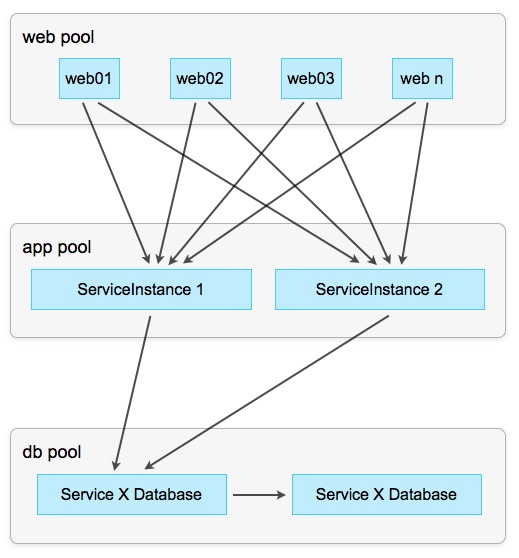

Scheme 5: We distribute calls to services according to the Round-Robin principle
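Round-Robin selection can be sketched in a few lines of Python. This is only an illustration: the `RoundRobinRouter` class and the instance names are hypothetical, and a real setup would usually leave this to the middleware or a load balancer.

```python
from itertools import cycle


class RoundRobinRouter:
    """Hands out service instances one after another, in a circle."""

    def __init__(self, instances):
        instances = list(instances)
        if not instances:
            raise ValueError("at least one instance is required")
        self._instances = cycle(instances)

    def next_instance(self):
        # Every call advances to the next instance, no matter who is calling.
        return next(self._instances)


# Hypothetical instance names, for illustration only.
router = RoundRobinRouter(["mail-1", "mail-2", "mail-3"])
picked = [router.next_instance() for _ in range(5)]
print(picked)  # ['mail-1', 'mail-2', 'mail-3', 'mail-1', 'mail-2']
```

Because the router ignores both the caller and the request, any instance may receive any call, which is why this only suits stateless services.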

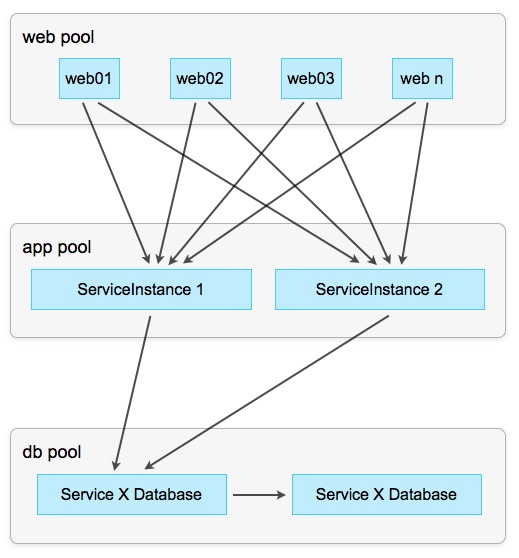

When service instances have state, they need to be synchronized with each other, for example by announcing state changes through an EventChannel or another publish/subscribe mechanism:

Scheme 6: Round-Robin with state synchronization

In this case, each instance informs the other instances of every change it makes to a stored object. The other instances, in turn, repeat the same operation locally, changing their own private state and thereby keeping the state of the service consistent across all instances.
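A minimal in-process sketch of this synchronization might look as follows. The `EventChannel` here is a hypothetical stand-in written for this example, not the API of any particular middleware, and delivery is synchronous, so it does not model the concurrent-update problem.

```python
class EventChannel:
    """In-process stand-in for a publish/subscribe channel (hypothetical)."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, instance):
        self._subscribers.append(instance)

    def publish(self, sender, key, value):
        # Deliver the change to every instance except the one that made it.
        for instance in self._subscribers:
            if instance is not sender:
                instance.apply_remote_change(key, value)


class ServiceInstance:
    def __init__(self, name, channel):
        self.name = name
        self.state = {}  # private state, e.g. a cache
        self._channel = channel
        channel.subscribe(self)

    def update(self, key, value):
        # Change the local state, then announce the change to the others.
        self.state[key] = value
        self._channel.publish(self, key, value)

    def apply_remote_change(self, key, value):
        # Repeat the same operation locally without publishing it again.
        self.state[key] = value


channel = EventChannel()
a = ServiceInstance("a", channel)
b = ServiceInstance("b", channel)
c = ServiceInstance("c", channel)

a.update("user:42:name", "Alice")
b.update("user:7:name", "Bob")
print(a.state == b.state == c.state)  # True
```

Every change costs one message per additional instance, so the synchronization traffic grows with both the write rate and the number of instances.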

This strategy works well with little traffic. As traffic grows, however, problems arise because several instances can change the same object at the same time.

Routing is used to combat this. Here routing means that the service instance is selected depending on the context. The context may be the client, the operation, or the data. Routing by data (sharding) is the most powerful routing tool: it is a routing algorithm that selects the target service instance based on the parameters of the operation (i.e. the data).

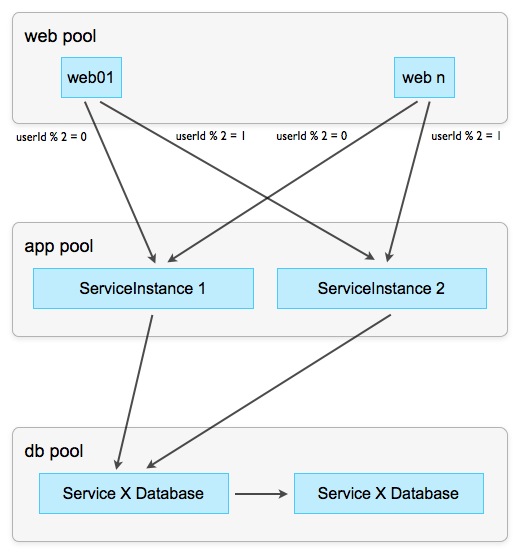

Ideally, we have a unique parameter, for example a UserId, that is easily converted to a number and divided with remainder. When it is divided by the number of running instances, the remainder indicates the target instance for the request: all requests with a remainder of 0 go to the first instance, those with a remainder of 1 to the second, and so on.

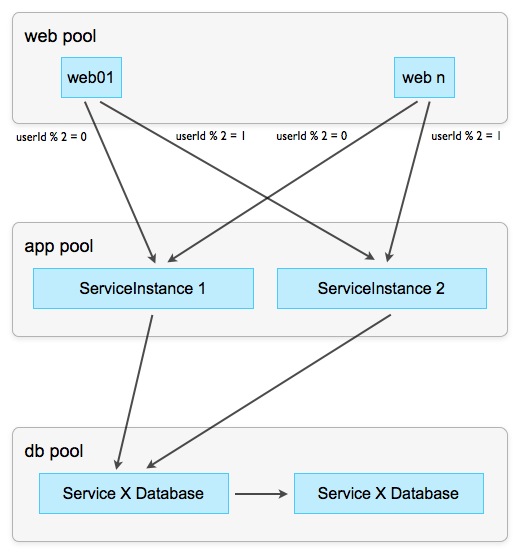

Figure 7: Sharding by remainder
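The remainder rule itself is a one-liner. The sketch below assumes numeric user IDs; the function name `shard_for` and the instance names are made up for the example.

```python
def shard_for(user_id: int, instance_count: int) -> int:
    """Return the index of the instance responsible for this user."""
    if instance_count <= 0:
        raise ValueError("instance_count must be positive")
    return user_id % instance_count


# Hypothetical instance names, for illustration only.
instances = ["accounts-0", "accounts-1", "accounts-2"]

for user_id in (9, 10, 11, 12):
    target = instances[shard_for(user_id, len(instances))]
    print(user_id, "->", target)
# 9 -> accounts-0
# 10 -> accounts-1
# 11 -> accounts-2
# 12 -> accounts-0
```

Note that with plain modulo routing, changing the number of instances changes the remainder for most users, so their data moves to a different instance.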

This strategy has a useful side effect: because all requests concerning the same user's data always land on the same instance, the data is partitioned. This means smaller caches, optionally partitioned databases (that is, each instance or group of instances has its own database), and other optimization goodies.

If you have the appropriate middleware, you can go a step further and combine strategies. For example, you can arrange instances into groups, distribute requests across the groups by sharding, and use Round-Robin within each group for elasticity. The number of such combined strategies (another example) is too large to describe them all in one post, and the right one depends on the specific problem.
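One such combination, sharding across groups and Round-Robin inside each group, could be sketched like this. The class and instance names are hypothetical, and the sketch assumes that the instances within a group are interchangeable (stateless, or synchronized with each other).

```python
from itertools import cycle


class ShardedRoundRobinRouter:
    """Picks a group by sharding, then an instance inside it by Round-Robin."""

    def __init__(self, groups):
        # groups: one list of instance names per shard
        self._groups = [cycle(list(group)) for group in groups]

    def route(self, user_id: int) -> str:
        group = self._groups[user_id % len(self._groups)]
        return next(group)


combined_router = ShardedRoundRobinRouter([
    ["shard0-a", "shard0-b"],
    ["shard1-a", "shard1-b"],
])

routes = [combined_router.route(uid) for uid in (4, 4, 5, 4)]
print(routes)  # ['shard0-a', 'shard0-b', 'shard1-a', 'shard0-a']
```

Requests for user 4 always stay inside the first group but rotate between its two instances, so a group can grow or shrink without touching the shard assignment.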

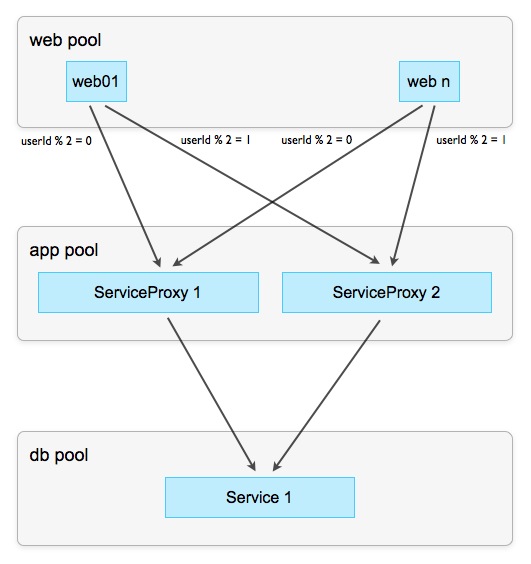

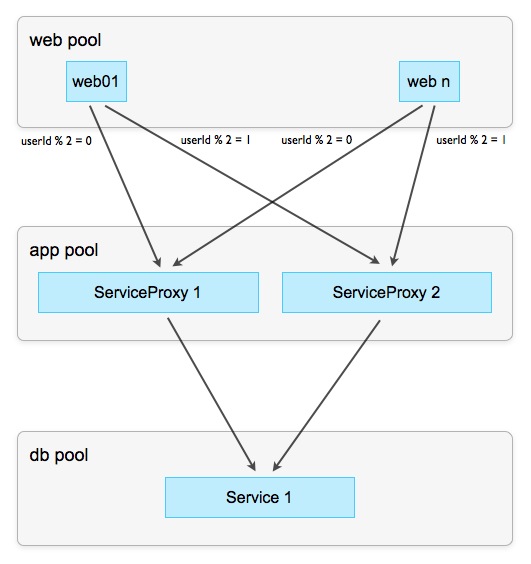

Not all data can be partitioned, especially when one operation changes two data sets in different contexts at once. A classic example is delivering a message from user A to user B, which changes both mailboxes at the same time. No algorithm for distributing data across instances (sharding) can guarantee that the mailboxes of users A and B are on the same service instance. But nothing is hopeless, and there are solutions for this situation too. The simplest is to split the service implicitly (ideally through middleware) so that the client knows nothing about it. For example:

Figure 8: Proxy Services

The job of the proxy service instances is to handle and answer read requests without passing them on to the master service. Since we have far more reads than writes (recall our initial premise), taking the reads off the master service makes its life much easier. Read operations usually take place in the context of a single (active) user and can therefore be sharded as described above. The remaining write operations go through the proxy to the master, but because most of the load stays on the proxies, they cause far less pain.
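The mailbox example can be sketched with one master and two sharded proxies. All names here are hypothetical, and the way the master tells the proxies to drop stale entries (`invalidate`) is an assumption of this sketch; the article does not prescribe a particular mechanism.

```python
class MasterService:
    """Owns all mailboxes and handles every write."""

    def __init__(self):
        self._mailboxes = {}
        self._proxies = []
        self.read_count = 0

    def register(self, proxy):
        self._proxies.append(proxy)

    def read_mailbox(self, user_id):
        self.read_count += 1
        return list(self._mailboxes.get(user_id, []))

    def deliver(self, sender_id, recipient_id, text):
        # One operation changes two mailboxes; only the master does this.
        self._mailboxes.setdefault(sender_id, []).append(("sent", text))
        self._mailboxes.setdefault(recipient_id, []).append(("received", text))
        # Assumption: the master tells every proxy to drop stale entries.
        for proxy in self._proxies:
            proxy.invalidate(sender_id, recipient_id)


class ProxyService:
    """Answers reads from a local cache and forwards writes to the master."""

    def __init__(self, master):
        self._master = master
        self._cache = {}
        master.register(self)

    def read_mailbox(self, user_id):
        if user_id not in self._cache:
            self._cache[user_id] = self._master.read_mailbox(user_id)
        return self._cache[user_id]

    def deliver(self, sender_id, recipient_id, text):
        self._master.deliver(sender_id, recipient_id, text)

    def invalidate(self, *user_ids):
        for user_id in user_ids:
            self._cache.pop(user_id, None)


master = MasterService()
proxies = [ProxyService(master) for _ in range(2)]


def proxy_for(user_id):
    # Reads are sharded by user, as in the remainder scheme.
    return proxies[user_id % len(proxies)]


proxy_for(1).deliver(1, 2, "hello")
for _ in range(3):
    inbox = proxy_for(2).read_mailbox(2)

print(inbox)              # [('received', 'hello')]
print(master.read_count)  # 1
```

Three reads of the same mailbox reach the master only once; the write goes through the proxy to the master unchanged.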

Epilogue

In this series (here, here and, in fact, here) we talked about how to find the right architecture and which tools to use to scale it. I hope I have convinced the reader that the time spent finding and following architectural paradigms is an investment that pays off many times over when things get difficult. Slave-Proxies, Round-Robin routing, or zero-caches are not the first things we think of when we start work on a new portal. Nor should you build them in "just in case": what matters is knowing how to use them and having an architecture that allows such tools to be used.

Not every portal has to scale, but those that must have to be able to do so quickly. Relying solely on technology (NoSQL, etc.) means handing the wheel to the wrong hands, and if the technology choice turns out to be wrong, you will be sweeping up the fragments of your system with a broom.

If you choose the right architecture from the very beginning, find your principles and paradigms, and stick to them, you will always be able to find an answer to the difficulties and challenges that arise.

Good luck
