Adaptive Broadcasting Technologies

In a previous article, I gave a brief overview of the general principles of adaptive broadcasting. In this article, I will look at each of the adaptive broadcasting technologies in turn and also describe the problems Telebreeze faced when using adaptive broadcasting.

There are not that many of these technologies, and you can probably guess which one comes first: Apple HTTP Live Streaming (HLS), of course.

1. Apple has done its best here: it has used this technology on all of its devices (iOS and Mac operating systems) for a relatively long time, and the technology is also supported by recent versions of Android and by most TV set-top boxes.

Apple's HLS is one of the most widely used protocols for delivering video over HTTP; it has already proven its reliability and stood the test of time.

Nothing in our world is perfect, of course, but Apple, as always, is on top. Don't get me wrong: I am not an Apple fan, I just try to judge objectively.

So, a few words about how the video and audio signals are transmitted. The signal is packaged in an MPEG-2 TS container, using the very common H.264 (video) and AAC (audio) codecs. The video is encoded at several different bitrates, and the resulting streams are described by a playlist in the m3u8 format. To protect content from unauthorized access, content transmitted over HLS can be encrypted with the AES-128 algorithm.
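To make the playlist idea more concrete, here is a minimal sketch of how a master m3u8 playlist listing several bitrates might be generated. The `#EXTM3U` and `#EXT-X-STREAM-INF` tags come from the HLS format itself; the bitrates, resolutions, file names, and the `Variant` and `build_master_playlist` helpers are my own assumptions for illustration, not anything Apple or Telebreeze prescribes.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """One encoded rendition of the same video (all values are hypothetical)."""
    bandwidth_bps: int  # bitrate advertised to the player, in bits per second
    width: int
    height: int
    uri: str            # media playlist that lists this rendition's segments


def build_master_playlist(variants):
    """Return the text of a master playlist, lowest bitrate first."""
    lines = ["#EXTM3U"]
    for v in sorted(variants, key=lambda v: v.bandwidth_bps):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={v.bandwidth_bps},"
            f"RESOLUTION={v.width}x{v.height}"
        )
        lines.append(v.uri)
    return "\n".join(lines) + "\n"


# Hypothetical three-step bitrate ladder.
LADDER = [
    Variant(800_000, 640, 360, "low/index.m3u8"),
    Variant(2_500_000, 1280, 720, "mid/index.m3u8"),
    Variant(5_000_000, 1920, 1080, "high/index.m3u8"),
]

if __name__ == "__main__":
    print(build_master_playlist(LADDER))
```

The player reads this file once, then picks the media playlist whose advertised bandwidth best fits the current connection.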

That, in brief, is Apple HTTP Live Streaming (HLS).

2. Now consider the equally popular adaptive broadcasting technology from Microsoft: Smooth Streaming.

Smooth Streaming is a protocol supported by the Silverlight video player and, naturally, by the Windows and Windows Phone operating systems.

What does Microsoft have that Apple does not, or, put differently, what advantages does Smooth Streaming have over HLS? This is debatable, since the two take rather different approaches to adaptive broadcasting, but let's compare them along the same axis. The biggest advantage is the more versatile and extensible XML manifest format. A lesser advantage is that all video segments are packaged in MP4, currently the most common container.

The video and audio signal is first segmented, then packaged into the MP4 container mentioned above and distributed over HTTP through a web server and a CDN. The H.264 (video) and AAC (audio) codecs are used.
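As a rough illustration of why an XML manifest is convenient to work with, the sketch below reads the available video bitrates out of a manifest with the standard library XML parser. The manifest here is hand-written and heavily abbreviated: it is modeled on the Smooth Streaming client manifest layout, but every value in it is hypothetical and a real manifest carries many more attributes. The `video_bitrates` helper is likewise my own name.

```python
import xml.etree.ElementTree as ET

# Hand-written, abbreviated manifest for illustration only; values are hypothetical.
MANIFEST = """\
<SmoothStreamingMedia MajorVersion="2" MinorVersion="0" Duration="40000000">
  <StreamIndex Type="video" Chunks="2"
               Url="QualityLevels({bitrate})/Fragments(video={start time})">
    <QualityLevel Index="0" Bitrate="800000" FourCC="H264"/>
    <QualityLevel Index="1" Bitrate="2500000" FourCC="H264"/>
    <c d="20000000"/>
    <c d="20000000"/>
  </StreamIndex>
</SmoothStreamingMedia>
"""


def video_bitrates(manifest_xml):
    """Return the video bitrates listed in the manifest, lowest first."""
    root = ET.fromstring(manifest_xml)
    bitrates = []
    for stream in root.findall("StreamIndex"):
        if stream.get("Type") == "video":
            for level in stream.findall("QualityLevel"):
                bitrates.append(int(level.get("Bitrate")))
    return sorted(bitrates)


if __name__ == "__main__":
    print(video_bitrates(MANIFEST))
```

Because the manifest is plain XML, extra elements or attributes can be added without breaking a parser written this way, which is what makes the format extensible.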

In general, Microsoft is promoting its concept very successfully, which points to healthy competition with Apple.

3. That brings us to Adobe HTTP Dynamic Streaming (HDS), another option for adaptive broadcasting.

As the name implies, this technology was created by Adobe. Because it plays through Adobe Flash Player, it is supported on almost any personal computer in the world.

It works on the same principle as the other HTTP-based adaptive technologies, so it is hard to single out any particular advantages. One point worth noting is that HDS supports only one DRM system, Flash Access.

4. Finally, I would like to touch on the idea of a common standard for adaptive broadcasting.

The big problem broadcasters face at the moment is having to support as many solutions as possible across their customers' devices, using different technologies. This is expensive and not always convenient.

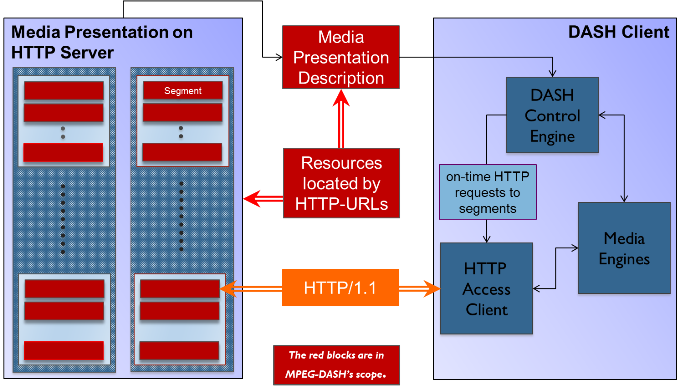

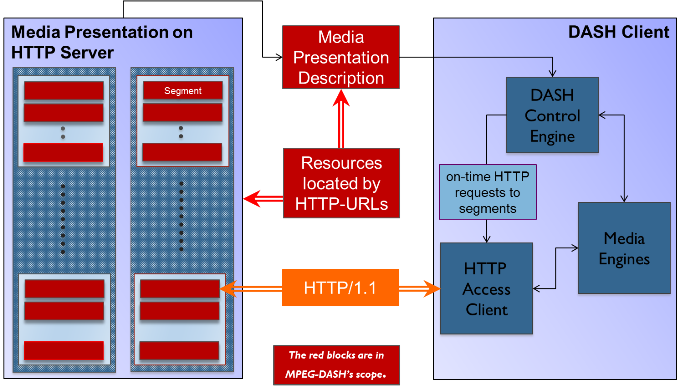

Hence the need for a single, widely adopted standard for video broadcasting. MPEG-DASH (Dynamic Adaptive Streaming over HTTP), developed by MPEG and published as an ISO standard last year, is intended to become that standard.

It can be called the most universal and advanced broadcast protocol. It borrows the idea of XML manifest files from Smooth Streaming, supports most container formats, and integrates with UltraViolet, a DRM system that works on the principle of “buy once, play anywhere”. UltraViolet is meant to provide a single encoding environment for all devices.
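For comparison with the manifests of the other technologies, here is a small sketch that builds the skeleton of an MPEG-DASH manifest (an MPD file) with the standard library. The element names (`MPD`, `Period`, `AdaptationSet`, `Representation`) and the namespace come from the DASH schema; the representation ids, bitrates, durations, and the `build_mpd` helper are assumptions of mine, and the result omits segment information, so it is not a complete playable manifest.

```python
import xml.etree.ElementTree as ET

MPD_NS = "urn:mpeg:dash:schema:mpd:2011"


def build_mpd(representations, duration="PT60S"):
    """Build a skeletal MPD with one video AdaptationSet.

    `representations` is a list of (id, bandwidth_bps) pairs; the values
    used below are hypothetical.
    """
    mpd = ET.Element("MPD", {
        "xmlns": MPD_NS,
        "type": "static",
        "profiles": "urn:mpeg:dash:profile:isoff-on-demand:2011",
        "minBufferTime": "PT2S",
        "mediaPresentationDuration": duration,
    })
    period = ET.SubElement(mpd, "Period")
    adaptation_set = ET.SubElement(period, "AdaptationSet", {"mimeType": "video/mp4"})
    for rep_id, bandwidth_bps in representations:
        ET.SubElement(adaptation_set, "Representation", {
            "id": rep_id,
            "bandwidth": str(bandwidth_bps),
        })
    return ET.tostring(mpd, encoding="unicode")


if __name__ == "__main__":
    print(build_mpd([("low", 800_000), ("high", 5_000_000)]))
```

Each `Representation` plays the same role as a variant in an m3u8 playlist or a quality level in a Smooth Streaming manifest: one bitrate of the same content.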

However universal this technology is, and however hard the international standards bodies promote it, widespread implementation is still expected to be a long way off.

What problems can a developer face when implementing adaptive broadcasting?

One of them is deciding how long each video segment transmitted in the stream should be, and how many stream variants there should be.

If you make the video segments unnecessarily short, wasted traffic increases. This happens because each segment carries, in addition to the video itself, a certain amount of overhead. The more segments there are, the more overhead there is, and that overhead is exactly the traffic that is wasted.
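The effect of segment length on overhead is easy to estimate with a little arithmetic. The sketch below assumes a fixed per-segment overhead (request and response headers, container headers and so on); the figure of 1000 bytes and the 1 Mbit/s bitrate are made-up numbers chosen only to show the trend, and `overhead_fraction` is a hypothetical helper.

```python
def overhead_fraction(segment_seconds, bitrate_bps, overhead_bytes=1000):
    """Share of transferred bytes that is overhead rather than video.

    Assumes every segment costs the same fixed `overhead_bytes`,
    which is a simplification.
    """
    payload_bytes = bitrate_bps * segment_seconds / 8
    return overhead_bytes / (payload_bytes + overhead_bytes)


if __name__ == "__main__":
    for seconds in (1, 2, 5, 10):
        share = overhead_fraction(seconds, bitrate_bps=1_000_000)
        print(f"{seconds:>2} s segments: {share:.2%} overhead")
```

Under this model the overhead share falls steadily as segments get longer, because the same fixed cost is spread over more video data.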

But there is a flip side to the coin: if you make the video segments too long, the quality of service drops noticeably, because the player can change quality only at a segment boundary and the user has to wait longer for the change.
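The waiting time can be estimated just as roughly. The sketch below assumes a simplified player model: quality can change only at a segment boundary, and segments already buffered at the old quality are played out before the new quality appears on screen. Both the model and the `worst_case_switch_delay` helper are my own simplifications, not a description of any particular player.

```python
def worst_case_switch_delay(segment_seconds, buffered_segments=0):
    """Longest wait, in seconds, before a quality change becomes visible.

    Worst case: conditions change just after a segment has started, so
    that whole segment plus every buffered segment plays at the old quality.
    """
    return segment_seconds * (buffered_segments + 1)


if __name__ == "__main__":
    for seconds in (2, 5, 10):
        delay = worst_case_switch_delay(seconds, buffered_segments=2)
        print(f"{seconds:>2} s segments: up to {delay} s before the quality changes")
```

Under these assumptions the delay grows in direct proportion to the segment length, which is the opposite pull to the overhead argument for longer segments.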

There is no universal solution to this problem; the right balance is found only by trial and error.

Encoding profile parameters depend on the adaptive broadcasting technology as well as on the country and region, so the segment length and the number of streams should be chosen for each specific case.

We at Telebreeze Corporation use HTTP Live Streaming (HLS). This system has a number of strengths as well as a number of problems. I’ll talk about all of this in the next article.

To sum up briefly: each of the technologies presented has its own advantages, and they compete with one another, which should benefit the development of a field as young as adaptive broadcasting. After all, everyone knows that competition is the engine of progress.
