Models of actors of 40 years

Highly loaded systems, built on the model of actors - this is the trend of today. Here is an incomplete list of articles on the hub, in which, to one degree or another, this model or one of its implementations is mentioned, for example, 1 , 2 , 3 , 3 , 4 , 5 , 6 , 7 . There is a good wikipedia article about actors. Unfortunately, after reading it, I still have many questions, the answers to which I could only find in the original sources. I want to present the results of this review to your attention.

Are actors a set of practices, a development methodology, an architectural pattern, a marketing move?

In my university course, like many, the concept of computability was defined through Turing and Post machines. The machine has a state - the set of values of all cells in the tape. The process of computing is a sequence of machine steps, each of which changes its state. Each step of the machine is the performance of one atomic indivisible action (operation). I will call this a traditional model of computation.

Such an interpretation of the calculations leads to the concept of global time, when at one and the same moment in time one and only one atomic operation can be performed. This property is essentially used to prove the properties of various synchronization primitives in the case of multi-threaded programming on single-processor machines, for example, in the book Gregory R. Andrews Fundamentals of multi-threaded, parallel and distributed programming .

For multiprocessor machines or distributed systems, the requirement of global time is generally unacceptable. Therefore, it is necessary to generalize the concept of computability to the case of parallel computing. One of these generalizations is the actor model.

The appearance of this model in the distant 70s was due to a strong belief that next-generation machines will be multiprocessor, and programs have artificial intelligence. In 1973, Carl Hewitt , Peter Bishop, and Richard Steiger published an article called A Universal Modular Actor Formalism For Artificial Intelligent . In this article, they introduced the concept of an actor and explained that many application classes are a special case of the actor model. By the way, this year was the 40th anniversary!

An actor is a universal abstraction of a computational entity, which in response to a received message

The difference between them is the same as between the phone and sending the message by mail. In the first case (these are calculations based on global time), if several people try to reach one phone number, then they begin to compete for access to a shared shared resource - the recipient. Office PBXs, multi-line telephones, call-center software - all this (a la synchronization primitives) is necessary in order to ensure efficient processing of incoming calls. In the case of mail, the sender of the letter (actor) simply sends the letter to the addressee without any delay due to the need to coordinate their actions with other senders. But! However, we do not know when the recipient will read our letter.

The two largest specialists are Carl Hewitt and his apprentice Gul A. Agha . Hewitt mainly deals with the rigorous justification that other computational approaches are a special case of the actor model, and Agha with various applications for distributed systems.

Each of the results in itself is a topic for a separate large article, so I will provide only a summary and a link to the source.

Events in the actor model form a partially ordered set. The axiomatics of this set, called Ordering Laws, was described by Carl Hewitt and Henry Baker in Actors and Continous Functionals (1977). There are quite a few axioms, but their point is to justify that the actor model is suitable for practical use, for example, that at any given time the number of messages addressed to one recipient, of course.

In 1985, Gul A. Agha, under the direction of Hewitt, defended his dissertation Actors: A Model Of Concurrent Computations in Distributed Systems. Agha's dissertation describes the syntax of a minimal programming language that supports actors, as well as a set of typical tasks and methods for solving them (chapter 4).

Another important contribution to the practical approaches to the implementation of the actor model is the article by Phillip Haller and Martin Odersky Event-Based Programming without Inversion Control . It proposed a new approach to the development of actor systems, which was used in Scala. Its essence is that the resulting parallel recording program is very similar to "ordinary sequential" programming.

The same path, for example, was chosen for the development of C #. This is what the actors in C # 5 look like:

Here, the await keyword implies calling the corresponding method asynchronously and returning to the calculation when the response is received.

In 2011, Gul A. Aga and Karmani summarized many years of experience in implementing actor systems, describing the most common implementation method. They called him Fog Cutter . True, Hewitt has repeatedly criticized such an architecture, for example, here and here. The essence of criticism boils down to the fact that this is just one of the possible implementations of a particular case of a model that does not fully implement the entire actor model.

Indeed, in the phd dissertation Foundations of Actor SemanticsWilliam Duglas Clinger (a student of Karl Hewitt) shows that the actor model has unlimited non-determinism, while the Turing machine is limited non-deterministic. From this, he and Karl Hewitt conclude that there are algorithms that can be implemented in the actor model, but cannot be implemented on a Turing machine. Therefore, any attempt to implement the actor model on "ordinary" computers that implement Turing computability will lead to the fact that only a particular case of the actor model will be implemented.

In 1988, Robert Kowalski , the creator of the Prolog language, put forward the thesis that "calculations can be grouped by logical conclusions." This is true for sequential calculations and for some parallel models. But in 1988, Hewitt and Agha published an article Guarded Horn clause languages: are they deductive and Logical? , in which they showed that for the actor model this is not true in the following sense: the current state of the program may not deductively follow from the previous one. What does this mean in practice: debugging a program based on the actor model is not as effective as in the case of sequential programs.

Why do functional languages arise wherever it comes to parallel programming? To answer this question, consider a small C ++ example:

Since this piece of code does not contain I / O operations, in order to enable another thread to execute its code on the same processor core, it is necessary to wait for the thread scheduler of the operating system to force the thread switching. This can happen either when a system function is called in another thread, or by an event from a timer. By default, a timer event fires once every 15.6 ms , this explains why you should not reduce this time to 1 ms. It turns out that the imperative style allows you to write a "greedy" program that is trying to single-handedly use all the resources of the processor, and we are limited in the means to influence this.

We rewrite this program through tail recursion.

Forgive me admirers of functional programming languages for such a free interpretation, but my goal is only to demonstrate the idea.

The loop iteration has been replaced by a function call. Suppose that at the call point of each function, the compiler embeds code to calculate the time taken to execute the current thread and switch to another thread if necessary. Now we have the opportunity to switch from one thread to another within nanoseconds. The same idea allows you to implement lightweight threads, for example, as in Erlang.

For these properties, functional languages are convenient to use where parallelism and real-time reaction are needed.

If you need a very fast response, then functional languages are beyond competition, but what if during the processing of the request we have to go to the database, and the response time from it is about 50-100 ms, or we have to perform many calculations, that is, make many function calls . The overhead associated with calculating the execution time and switching threads to each call makes itself felt, and imperative languages are more effective in this case. To see this, just look at benchmarksgame.alioth.debian.org . The site offers to measure the performance of programs written in different languages, comparing solutions to the same problem. Here are some examples of comparisons: reverse-complement , mandelbrot , regex-dna, pidigits .

First, I’ll make a reservation, firstly, these are just tests - you don’t need to take them very seriously. On the other hand, anyone who is unsatisfied with the situation of their favorite programming language can propose their own solution and change the balance of power. Secondly, I cited only those links where imperative languages win by a clear advantage, because my goal is only to illustrate the idea expressed several paragraphs earlier on the overhead associated with organizing parallelism.

Another interesting observation is the implementation of the actor model in imperative languages, usually in tests above the functional Erlang. Again, I’ll make a reservation, everyone knows that Erlang is not good at computing, namely, where you need an instant response, but this only once again confirms the thought of overhead.

Let me give you one metaphor: there are long-distance runners — marathon runners, and there are — short-distance runners — sprinters. Some require endurance at a low average speed (relative to sprinters), and the second require maximum performance in a very short time (relative to marathon runners). Alas, it is simply physiologically impossible to show equally high results at sprint and marathon distances, simply because completely different properties are required from the muscles. These runners are even distinguished by their figures: marathon runners are dry and sinewy, and sprinters are athletic and muscular.

Another confirmation that parallel programming is not so simple is the project to create the 5th generation of computers.

In the 80s of the last century, an attempt was made in Japan to create computers of the 5th generation . The project was again initiated by the belief that the future is in parallel computing, large databases and the logical processing of large databases.

It was spent $ 500 million in 80s prices. The project lasted 10 years and ended in complete failure! Parallel programs did not give significant performance advantages over single-threaded ones. And for decades, Intel has taken the palm with its single-threaded processors. And only when the manufacturers of processors came up against technological limitations on increasing clock speeds, the idea of parallelism again began to gain popularity.

The performance issues discussed above, as well as the fact that Hewitt insistently emphasizes that neither threads, nor message queues, nor any well-known constructions are part of the actor model, have led to a variety of ideas and implementation methods.

Three areas stand out:

You read articles of the 70s: the future lies with multiprocessor systems, artificial intelligence; 80s: the future belongs to multiprocessor systems, large databases, logical data processing, now: the future belongs to processors with a large number of cores, big data, cloud computing, data mining. It is not difficult to draw analogies between the current situation and forecasts 40 years ago. All this resembles an example from trainings on motivation about a donkey and carrots. We take a step to get closer to the cherished goal, and the goal moves away from us as much as we get closer to it, but it is it that makes us develop and move forward.

When I first came across a model of actors, and that was relatively recently. I had a question - why didn’t I find out about her before. Specially looked at books by Tanenbaum, Gregory Andrews, Enterprise Application Integration Patternsand others - all of them devote quite a lot of space to the concept of messaging, but none of them speaks a word about the model of actors and Hewitt. But when you read Hewitt or Agha, a lot of time is devoted to criticizing the traditional model of calculations. It seems that the actors are simply ignoring. The same thing happens in programming: in the world of imperative programming, practically nothing is known about functional - it seems to be gone, at least until recently, and in the world of functional programming imperative is criticized. Maybe someone has thoughts why this happened?

If this article is of interest, then I plan to write a series of articles, for example:

If there are suggestions on the articles, I will be glad to hear.

This article is based on one of my report

The story begins with actors about the fifth minute.

What is an actor model?

Are actors a set of practices, a development methodology, an architectural pattern, a marketing move?

In my university course, like many, the concept of computability was defined through Turing and Post machines. The machine has a state - the set of values of all cells in the tape. The process of computing is a sequence of machine steps, each of which changes its state. Each step of the machine is the performance of one atomic indivisible action (operation). I will call this a traditional model of computation.

Such an interpretation of the calculations leads to the concept of global time, when at one and the same moment in time one and only one atomic operation can be performed. This property is essentially used to prove the properties of various synchronization primitives in the case of multi-threaded programming on single-processor machines, for example, in the book Gregory R. Andrews Fundamentals of multi-threaded, parallel and distributed programming .

For multiprocessor machines or distributed systems, the requirement of global time is generally unacceptable. Therefore, it is necessary to generalize the concept of computability to the case of parallel computing. One of these generalizations is the actor model.

How did it all start?

The appearance of this model in the distant 70s was due to a strong belief that next-generation machines will be multiprocessor, and programs have artificial intelligence. In 1973, Carl Hewitt , Peter Bishop, and Richard Steiger published an article called A Universal Modular Actor Formalism For Artificial Intelligent . In this article, they introduced the concept of an actor and explained that many application classes are a special case of the actor model. By the way, this year was the 40th anniversary!

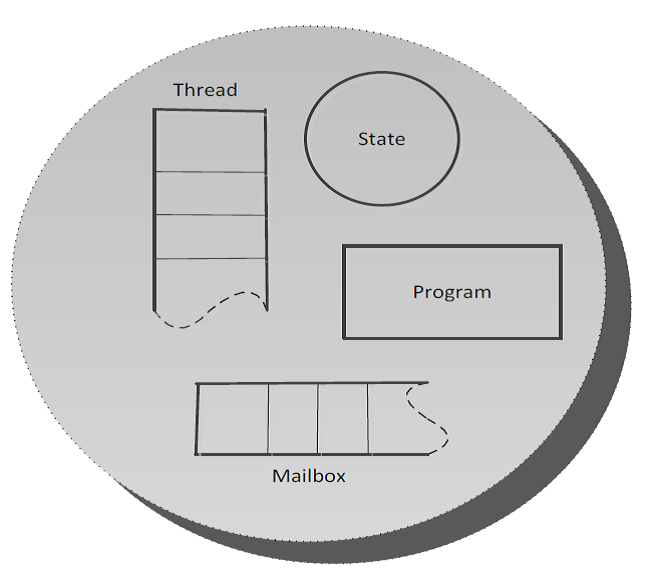

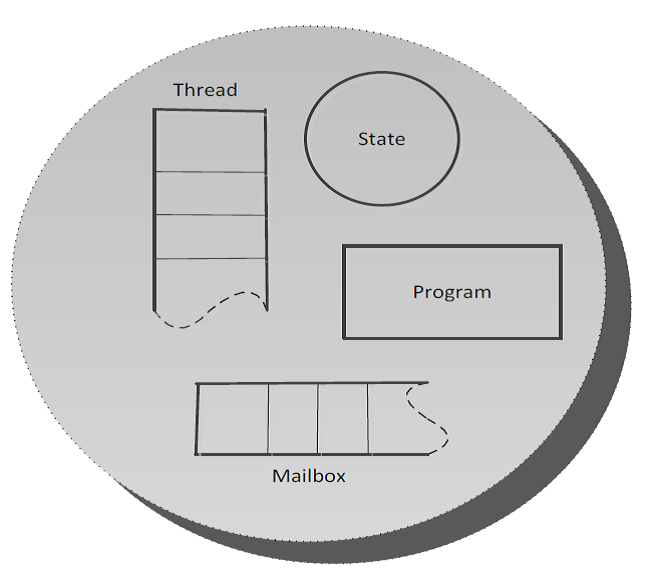

An actor is a universal abstraction of a computational entity, which in response to a received message

- Can send a finite number of messages to other actors,

- Create a finite number of actors,

- Select a behavior to receive the next message.

How fundamentally do the actors differ from the traditional computational model?

The difference between them is the same as between the phone and sending the message by mail. In the first case (these are calculations based on global time), if several people try to reach one phone number, then they begin to compete for access to a shared shared resource - the recipient. Office PBXs, multi-line telephones, call-center software - all this (a la synchronization primitives) is necessary in order to ensure efficient processing of incoming calls. In the case of mail, the sender of the letter (actor) simply sends the letter to the addressee without any delay due to the need to coordinate their actions with other senders. But! However, we do not know when the recipient will read our letter.

Who is involved in the development of actor theory?

The two largest specialists are Carl Hewitt and his apprentice Gul A. Agha . Hewitt mainly deals with the rigorous justification that other computational approaches are a special case of the actor model, and Agha with various applications for distributed systems.

Key Results

Each of the results in itself is a topic for a separate large article, so I will provide only a summary and a link to the source.

Events in the actor model form a partially ordered set. The axiomatics of this set, called Ordering Laws, was described by Carl Hewitt and Henry Baker in Actors and Continous Functionals (1977). There are quite a few axioms, but their point is to justify that the actor model is suitable for practical use, for example, that at any given time the number of messages addressed to one recipient, of course.

In 1985, Gul A. Agha, under the direction of Hewitt, defended his dissertation Actors: A Model Of Concurrent Computations in Distributed Systems. Agha's dissertation describes the syntax of a minimal programming language that supports actors, as well as a set of typical tasks and methods for solving them (chapter 4).

Another important contribution to the practical approaches to the implementation of the actor model is the article by Phillip Haller and Martin Odersky Event-Based Programming without Inversion Control . It proposed a new approach to the development of actor systems, which was used in Scala. Its essence is that the resulting parallel recording program is very similar to "ordinary sequential" programming.

The same path, for example, was chosen for the development of C #. This is what the actors in C # 5 look like:

static async Task SavePage(string file, string a)

{

using (var stream = File.AppendText(file))

{

var html = await new WebClient().DownloadStringTaskAsync(a);

await stream.WriteAsync(html);

}

}

Here, the await keyword implies calling the corresponding method asynchronously and returning to the calculation when the response is received.

In 2011, Gul A. Aga and Karmani summarized many years of experience in implementing actor systems, describing the most common implementation method. They called him Fog Cutter . True, Hewitt has repeatedly criticized such an architecture, for example, here and here. The essence of criticism boils down to the fact that this is just one of the possible implementations of a particular case of a model that does not fully implement the entire actor model.

Indeed, in the phd dissertation Foundations of Actor SemanticsWilliam Duglas Clinger (a student of Karl Hewitt) shows that the actor model has unlimited non-determinism, while the Turing machine is limited non-deterministic. From this, he and Karl Hewitt conclude that there are algorithms that can be implemented in the actor model, but cannot be implemented on a Turing machine. Therefore, any attempt to implement the actor model on "ordinary" computers that implement Turing computability will lead to the fact that only a particular case of the actor model will be implemented.

A spoonful ...

In 1988, Robert Kowalski , the creator of the Prolog language, put forward the thesis that "calculations can be grouped by logical conclusions." This is true for sequential calculations and for some parallel models. But in 1988, Hewitt and Agha published an article Guarded Horn clause languages: are they deductive and Logical? , in which they showed that for the actor model this is not true in the following sense: the current state of the program may not deductively follow from the previous one. What does this mean in practice: debugging a program based on the actor model is not as effective as in the case of sequential programs.

Why functional languages?

Why do functional languages arise wherever it comes to parallel programming? To answer this question, consider a small C ++ example:

int j = 0;

for(int i =0; ; ++i)

{

++j;

}

Since this piece of code does not contain I / O operations, in order to enable another thread to execute its code on the same processor core, it is necessary to wait for the thread scheduler of the operating system to force the thread switching. This can happen either when a system function is called in another thread, or by an event from a timer. By default, a timer event fires once every 15.6 ms , this explains why you should not reduce this time to 1 ms. It turns out that the imperative style allows you to write a "greedy" program that is trying to single-handedly use all the resources of the processor, and we are limited in the means to influence this.

We rewrite this program through tail recursion.

void cycle(int i, int& j)

{

++j;

cycle(i+1, j);

}

Forgive me admirers of functional programming languages for such a free interpretation, but my goal is only to demonstrate the idea.

The loop iteration has been replaced by a function call. Suppose that at the call point of each function, the compiler embeds code to calculate the time taken to execute the current thread and switch to another thread if necessary. Now we have the opportunity to switch from one thread to another within nanoseconds. The same idea allows you to implement lightweight threads, for example, as in Erlang.

For these properties, functional languages are convenient to use where parallelism and real-time reaction are needed.

Rehabilitation of imperative languages

If you need a very fast response, then functional languages are beyond competition, but what if during the processing of the request we have to go to the database, and the response time from it is about 50-100 ms, or we have to perform many calculations, that is, make many function calls . The overhead associated with calculating the execution time and switching threads to each call makes itself felt, and imperative languages are more effective in this case. To see this, just look at benchmarksgame.alioth.debian.org . The site offers to measure the performance of programs written in different languages, comparing solutions to the same problem. Here are some examples of comparisons: reverse-complement , mandelbrot , regex-dna, pidigits .

First, I’ll make a reservation, firstly, these are just tests - you don’t need to take them very seriously. On the other hand, anyone who is unsatisfied with the situation of their favorite programming language can propose their own solution and change the balance of power. Secondly, I cited only those links where imperative languages win by a clear advantage, because my goal is only to illustrate the idea expressed several paragraphs earlier on the overhead associated with organizing parallelism.

Another interesting observation is the implementation of the actor model in imperative languages, usually in tests above the functional Erlang. Again, I’ll make a reservation, everyone knows that Erlang is not good at computing, namely, where you need an instant response, but this only once again confirms the thought of overhead.

Let me give you one metaphor: there are long-distance runners — marathon runners, and there are — short-distance runners — sprinters. Some require endurance at a low average speed (relative to sprinters), and the second require maximum performance in a very short time (relative to marathon runners). Alas, it is simply physiologically impossible to show equally high results at sprint and marathon distances, simply because completely different properties are required from the muscles. These runners are even distinguished by their figures: marathon runners are dry and sinewy, and sprinters are athletic and muscular.

5th generation of computers

Another confirmation that parallel programming is not so simple is the project to create the 5th generation of computers.

In the 80s of the last century, an attempt was made in Japan to create computers of the 5th generation . The project was again initiated by the belief that the future is in parallel computing, large databases and the logical processing of large databases.

It was spent $ 500 million in 80s prices. The project lasted 10 years and ended in complete failure! Parallel programs did not give significant performance advantages over single-threaded ones. And for decades, Intel has taken the palm with its single-threaded processors. And only when the manufacturers of processors came up against technological limitations on increasing clock speeds, the idea of parallelism again began to gain popularity.

Approaches to the implementation of the actor model

The performance issues discussed above, as well as the fact that Hewitt insistently emphasizes that neither threads, nor message queues, nor any well-known constructions are part of the actor model, have led to a variety of ideas and implementation methods.

Three areas stand out:

- New programming language. This niche is occupied by functional languages. A prime example: Erlang.

- Extensions of existing languages. For example, Scala, C #. The idea of Odersky and Haller is used, which allows you to write parallel programs in the "familiar style".

- Libraries for an existing language.

In conclusion, two observations

Belief in parallel computing.

You read articles of the 70s: the future lies with multiprocessor systems, artificial intelligence; 80s: the future belongs to multiprocessor systems, large databases, logical data processing, now: the future belongs to processors with a large number of cores, big data, cloud computing, data mining. It is not difficult to draw analogies between the current situation and forecasts 40 years ago. All this resembles an example from trainings on motivation about a donkey and carrots. We take a step to get closer to the cherished goal, and the goal moves away from us as much as we get closer to it, but it is it that makes us develop and move forward.

Ignoring on the one hand and criticism on the other.

When I first came across a model of actors, and that was relatively recently. I had a question - why didn’t I find out about her before. Specially looked at books by Tanenbaum, Gregory Andrews, Enterprise Application Integration Patternsand others - all of them devote quite a lot of space to the concept of messaging, but none of them speaks a word about the model of actors and Hewitt. But when you read Hewitt or Agha, a lot of time is devoted to criticizing the traditional model of calculations. It seems that the actors are simply ignoring. The same thing happens in programming: in the world of imperative programming, practically nothing is known about functional - it seems to be gone, at least until recently, and in the world of functional programming imperative is criticized. Maybe someone has thoughts why this happened?

What's next?

If this article is of interest, then I plan to write a series of articles, for example:

- Actors sequencing by Actors and Continous Functionals .

- Summary of ideas presented in Actors: A Model Of Concurrent Computations in Distributed Systems Gul A. Agha dissertation .

- Pros and cons of the Odersky Event-Based Programming with Inversion Control approach .

- Coming back to a spoonful ... - a couple of my own ideas, already implemented and tested in practice, how to increase the reliability and fault tolerance of systems implemented according to the actor model.

If there are suggestions on the articles, I will be glad to hear.

PS

This article is based on one of my report

The story begins with actors about the fifth minute.