MongoDB vs PostgreSQL performance comparison. Part II: Index

Continued, start here .

For this experiment, we created indexes on the id and floatvalue fields (we omitted text fields, we won’t touch on the topic of the full-text index, since this is material for a separate article). As queries we used samples from the ranges:

But first, you need to evaluate how much the insertion speed has fallen after adding indexes. To do this, add another 250,000 entries in MongoDB and POstgreSQL.

Mongodb

PostgreSQL

After simple calculations, we can understand that MongoDB remained the undisputed leader in terms of insertion speed: after adding indexes, its insertion rate fell by only ~ 10% and amounted to 3600 objects per second . Whereas PostgreSQL's insertion speed dropped by ~ 30% to about 536 records per second .

I would like the situation with the sample to develop in a similar way. We execute the following queries:

MongoDB

PostgreSQL

However, after comparing the speed of operations, the sampling situation changed in favor of PostgreSQL:

It is also worth noting that when sampling not from the range, but with specific digits (n.p.

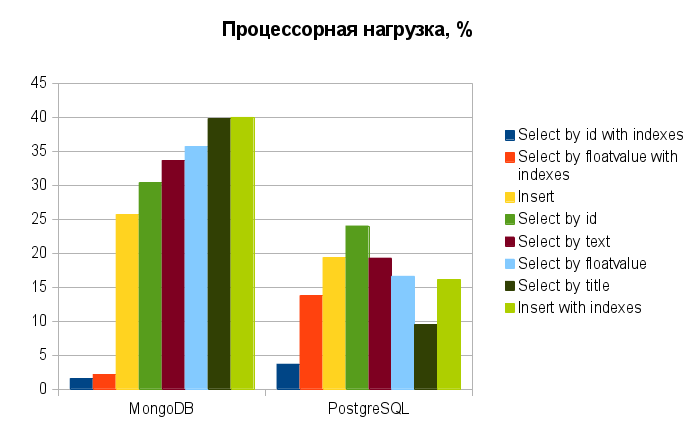

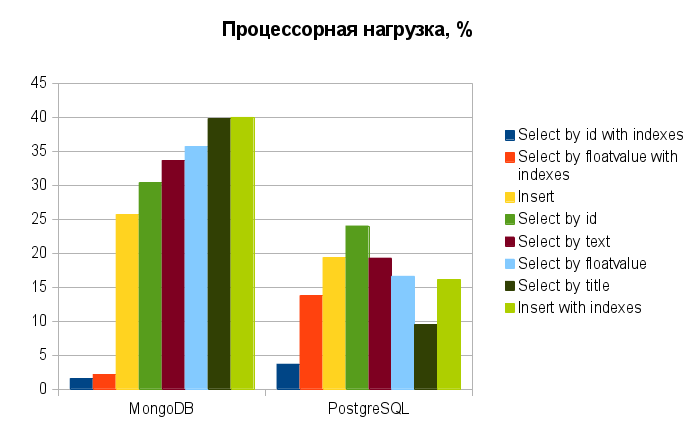

Another revelation was that when using indexes, MongoDB so drastically reduces CPU consumption (up to 1-2% versus 30-40% when searching without indexing) that it even overtakes PostgreSQL in this (decrease to 4-14% versus 5-25 % ).

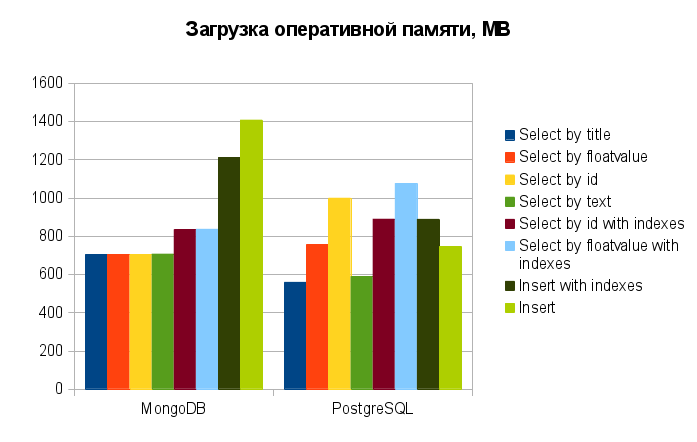

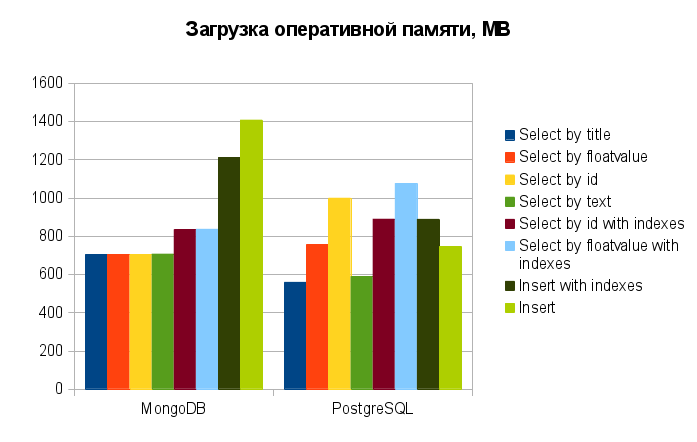

Before summing up something, I share, as promised, with the resulting plate and resource consumption diagrams for requests:

And now about the results.

With a naked eye, you can immediately notice one advantage of MongoDB over PostgreSQL: insertion speed . It is almost an order of magnitude higher both with the use of indices and without them. Moreover, the use of indexes does not significantly reduce it (by only ~ 10% against a 30% decrease in PostgreSQL). This is really an excellent result! But ... how often do you use the insert relative to the selection (under various conditions)?

When fetching from a collection without indexes, MongoDB also leads, although not so significantly. Not bad! But...how often do you work with tables without indexes?

Do not think that with my questions I am trying to turn you away from noSQL DBMS. Tables without indexes (I do not mean primary) have a place to be in these or those decisions. The priority of insertion speed for some tasks is also very real and, moreover, is sometimes very much in demand. The question is, do you specifically need this? Concrete for your current task? This (very superficial) testing is not intended to give an answer to, I will not hide, the rather popular question “What is better than SQL or noSQL?”. It is designed to lead you to thoughts, assess the needs and opportunities when choosing a solution for a particular task.

In the end, I’ll say that we, for example, use both types of DBMS, depending on the data structure, goals and options for working with them. An integrated approach is much better and allows you to optimally work with any data.

Experiment II: Index

For this experiment, we created indexes on the id and floatvalue fields (we omitted text fields, we won’t touch on the topic of the full-text index, since this is material for a separate article). As queries we used samples from the ranges:

- 10,000 < id <100,000

- 200,000 < floatvalue <300,000

But first, you need to evaluate how much the insertion speed has fallen after adding indexes. To do this, add another 250,000 entries in MongoDB and POstgreSQL.

Mongodb

Insert 250000 records complete! Total time: 69.453 sec

PostgreSQL

psql -d prefTest -f 250k.p5.sql (Total time: 466.153 sec)

After simple calculations, we can understand that MongoDB remained the undisputed leader in terms of insertion speed: after adding indexes, its insertion rate fell by only ~ 10% and amounted to 3600 objects per second . Whereas PostgreSQL's insertion speed dropped by ~ 30% to about 536 records per second .

I would like the situation with the sample to develop in a similar way. We execute the following queries:

MongoDB

db.tmp.find({$and:[{id:{$gt:10000}},{id:{$lt:100000}}]})db.tmp.find({$and:[{floatvalue: {$lt:300000}},{floatvalue: {$gt:200000}}]})

PostgreSQL

select * from tmp where id>10000 and id<100000select * from tmp where floatvalue<300000 and floatvalue>200000

However, after comparing the speed of operations, the sampling situation changed in favor of PostgreSQL:

It is also worth noting that when sampling not from the range, but with specific digits (n.p.

floatvalue=1234567.76545) both DBMSs showed a result of 0 milliseconds. Therefore, such operations are not even considered here. This is about the wise use of indexes in accordance with the planned sampling conditions. Here, indexes and queries are used only for the purpose of load testing. Another revelation was that when using indexes, MongoDB so drastically reduces CPU consumption (up to 1-2% versus 30-40% when searching without indexing) that it even overtakes PostgreSQL in this (decrease to 4-14% versus 5-25 % ).

Summary

Before summing up something, I share, as promised, with the resulting plate and resource consumption diagrams for requests:

And now about the results.

With a naked eye, you can immediately notice one advantage of MongoDB over PostgreSQL: insertion speed . It is almost an order of magnitude higher both with the use of indices and without them. Moreover, the use of indexes does not significantly reduce it (by only ~ 10% against a 30% decrease in PostgreSQL). This is really an excellent result! But ... how often do you use the insert relative to the selection (under various conditions)?

When fetching from a collection without indexes, MongoDB also leads, although not so significantly. Not bad! But...how often do you work with tables without indexes?

Do not think that with my questions I am trying to turn you away from noSQL DBMS. Tables without indexes (I do not mean primary) have a place to be in these or those decisions. The priority of insertion speed for some tasks is also very real and, moreover, is sometimes very much in demand. The question is, do you specifically need this? Concrete for your current task? This (very superficial) testing is not intended to give an answer to, I will not hide, the rather popular question “What is better than SQL or noSQL?”. It is designed to lead you to thoughts, assess the needs and opportunities when choosing a solution for a particular task.

In the end, I’ll say that we, for example, use both types of DBMS, depending on the data structure, goals and options for working with them. An integrated approach is much better and allows you to optimally work with any data.