Fault tolerance: how to provide reliable service in the event of equipment failure

Ensuring fault tolerance is not a trivial task, and there is no standard solution for it. There are some common patterns and components, but even within a single organization different solutions are used to make different nodes fault tolerant, to say nothing of how much the approaches differ between organizations.

Some leave the problem to chance; some put an ad banner on the 500 error page and try to make money from failures. Some use standard solutions from database or network equipment vendors. And some move to the now-fashionable “clouds”.

One thing is clear: as the business grows, resilience to failures (and not merely recovery procedures after failures) becomes an increasingly acute problem. The company’s reputation begins to depend on the number of incidents per year, long downtime makes the service inconvenient to use, and so on. There are many reasons.

In this article, we will look at one of our ways to ensure fault tolerance. By fault tolerance we mean the system’s ability to keep working when as many of its nodes as possible have failed.

Typically, a web application architecture is structured as follows (and our architecture is no exception):

The web server handles the initial processing and dispatching of requests and performs most of the domain logic: it knows where to get data, what to do with it, and where to put new data. It makes little difference which particular web server processes a user request. If the software is written more or less correctly, any web server will do the work required of it (unless it is overloaded, of course). Therefore the failure of one server does not cause serious problems: the load simply shifts to the surviving servers and the web application continues to work (ideally, all pending transactions are rolled back and the user request can be processed again on another server).

The main difficulties begin at the data storage level. The main task of this subsystem is to preserve and accumulate the information the whole system needs to function. Some of this data can occasionally be lost; the rest is irreplaceable, and losing it effectively means the death of the project. In any case, if data is partially lost, damaged, or temporarily unavailable, the system’s ability to function drops dramatically.

In reality, of course, everything is much more complicated. When a web server fails, the system usually does not return to its previous state. The reason is that a single business transaction has to change several different storages, while a distributed transaction mechanism in the storage layer is usually not implemented because it is too expensive to build and operate. Other possible causes of data discrepancies are bugs in the code, unaccounted-for scenarios, carelessness of developers and administrators (the “human factor”), tight development deadlines, and a bunch of other important and unimportant reasons why programmers write less-than-perfect code. But in most situations such strict guarantees are not necessary (not every application is written for the banking and financial sectors).

Given all of the above, a good storage and data access system noticeably improves the system’s ability to survive failures. That is why we will concentrate on fault tolerance in the data access subsystem.

In recent years we have been using Tarantool as our database (it is our open source project: a very fast, convenient database that can easily be extended with stored procedures; see http://tarantool.org).

So, our goal is maximum data availability. Tarantool as software usually causes no problems: it is stable, the load on a server is predictable, and a server will not go down unexpectedly because of increased load. What we face is hardware reliability. Sometimes servers burn out, disks die, racks lose power, routers fail, links to data centers disappear... But we still need to serve our users.

To avoid losing data, you need to duplicate the database server, place the copy in a different rack, behind a different router, or even in a different data center, and configure replication from the main server to the backup. Regular, mandatory backups must not be forgotten either. In the event of a hardware failure the data stays alive and accessible. But there is one caveat: the data is now available at a different address, while applications keep knocking on the broken server out of habit.

The simplest and most common option is to wake up one of the system administrators so that they can figure out the problem and reconfigure all our applications to use the new data server. It is not the best solution: switching takes a long time, it is easy to forget something, and irregular working hours are bad for people.

There is a less reliable but more automated option: specialized software that determines whether the server is alive and whether it is time to switch to the backup. The admins may correct me, but it seems to me there is a non-zero probability of false positives, which lead to data discrepancies between storages. True, the number of false positives can be reduced by increasing the time the system takes to react to a failure (which, in turn, increases downtime).
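
The trade-off between false positives and reaction time can be illustrated with a small sketch. It assumes a detector that declares a server dead only after several consecutive failed probes; the class `FailureDetector` and its parameters `threshold` and `probe_interval` are hypothetical names chosen for illustration and do not describe any particular monitoring tool.

```python
class FailureDetector:
    """Declares a server dead only after `threshold` consecutive failed probes."""

    def __init__(self, threshold: int, probe_interval: float):
        self.threshold = threshold            # hypothetical: failed probes needed to switch
        self.probe_interval = probe_interval  # hypothetical: seconds between probes
        self.consecutive_failures = 0

    def record(self, probe_ok: bool) -> bool:
        """Feed one probe result; return True if the server is now considered dead."""
        self.consecutive_failures = 0 if probe_ok else self.consecutive_failures + 1
        return self.consecutive_failures >= self.threshold

    def approx_detection_time(self) -> float:
        """Rough delay between a real failure and the switch (probe timeouts ignored)."""
        return self.threshold * self.probe_interval
```

A higher `threshold` means a single lost probe no longer triggers a switch, but a real failure is noticed proportionally later: with a threshold of 3 and probes every 2 seconds, roughly 6 seconds pass before the switch.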

Alternatively, you can keep a master copy of the data in each data center and write the software so that everything works correctly in this configuration. But for most tasks such a solution is pure fantasy.

We would also like to handle network partitions, when the master copy of the data remains reachable for only part of the cluster.

In the end we chose a compromise: when there are problems reaching the database, automatically switch to a replica (but in read-only mode). Moreover, each server decides to switch to the replica and back to the master independently, based on its own information about the availability of the master and replicas. If the server holding the master copy really has broken down, system administrators take the necessary steps to fix it, and meanwhile the system works with slightly reduced functionality (for example, some users will be unable to edit contacts). Depending on how critical the service is, administrators can respond in different ways, from immediately bringing in a replacement to dealing with it after getting up in the morning. The main thing is that the web application keeps working, albeit in a slightly reduced form. Automatically.

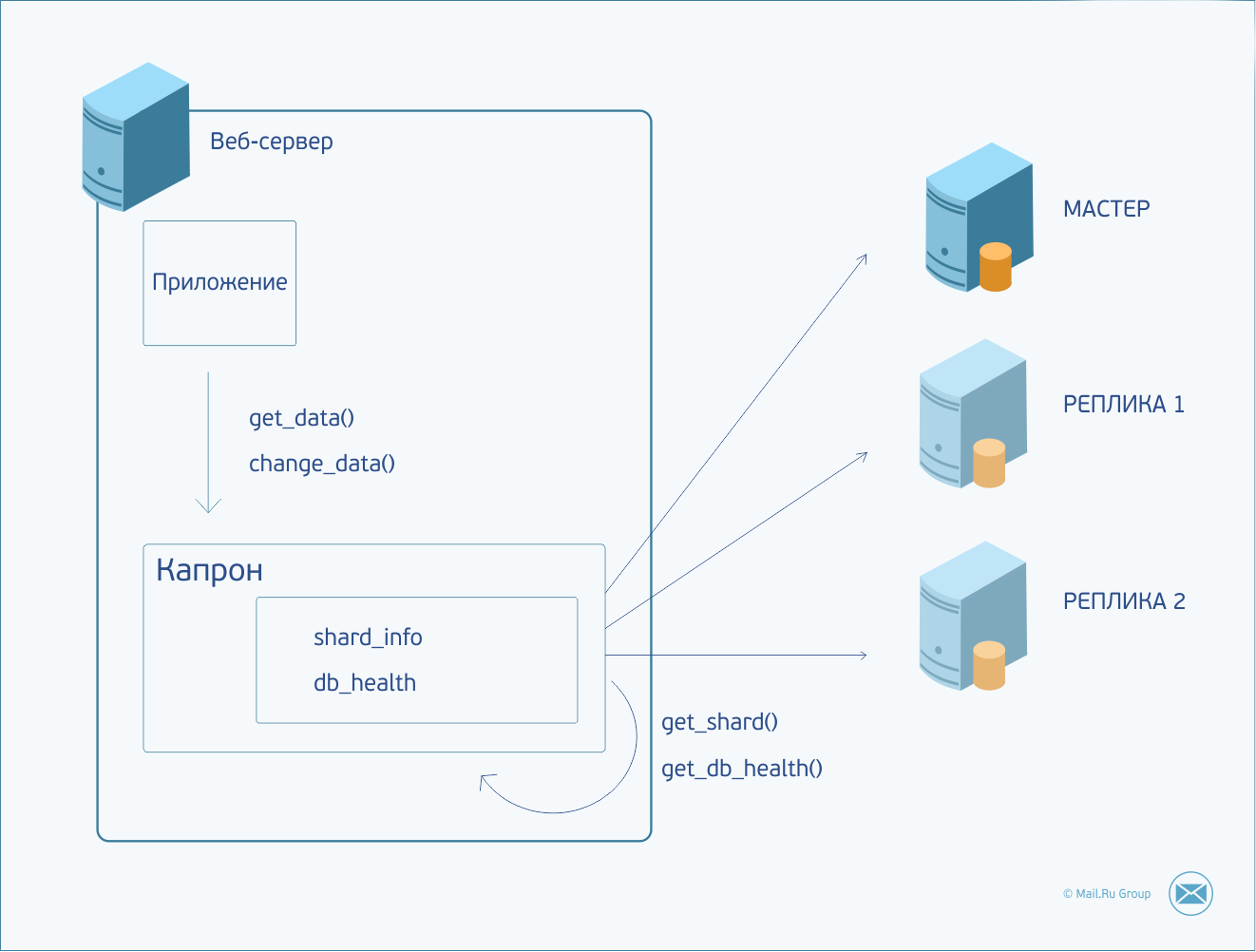

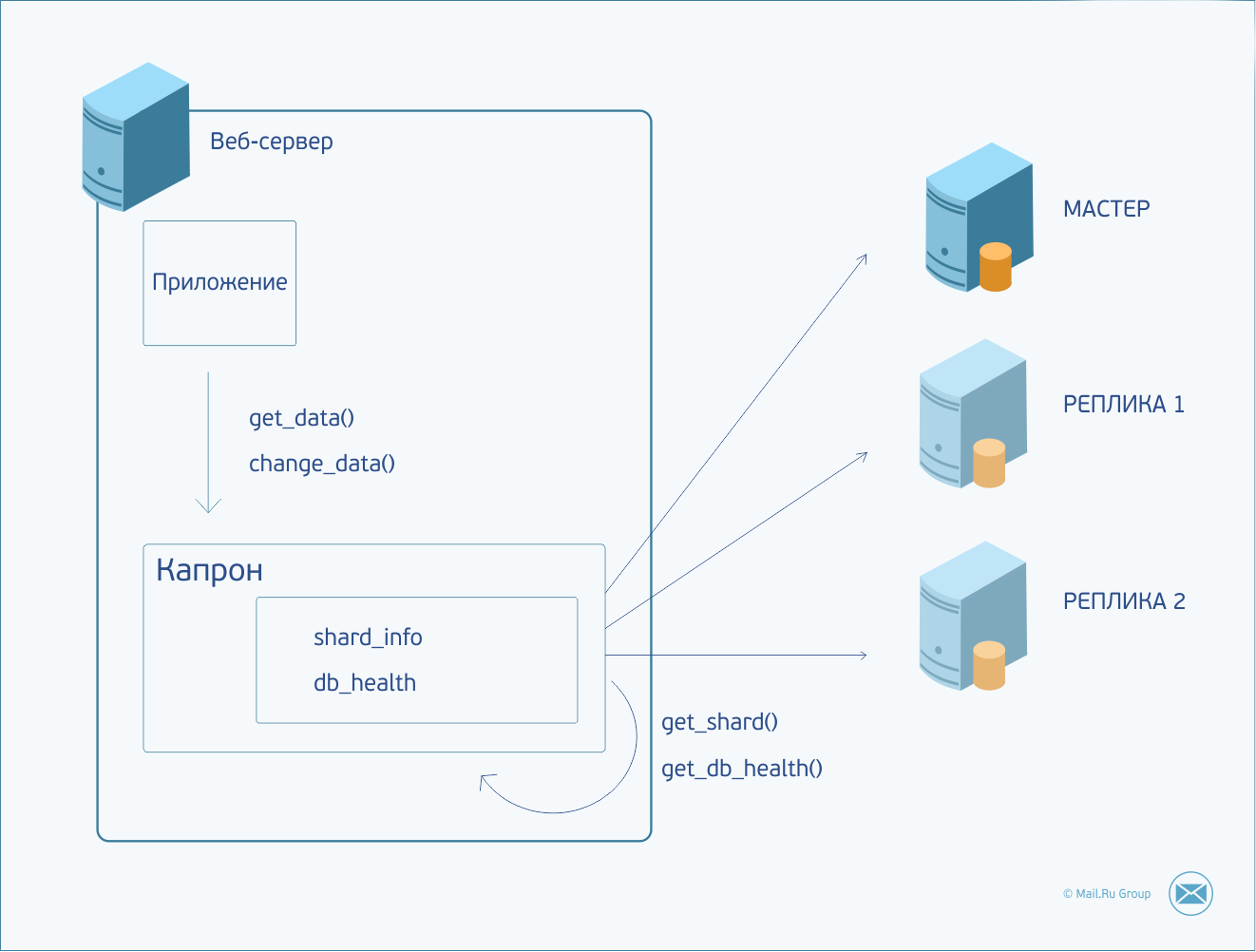

I cannot help mentioning one component that is widely used at our company and runs on every server. We call it Kapron. Kapron acts as a multiplexer for database queries (MySQL and Tarantool): it maintains a pool of persistent connections to the databases and encapsulates all information about database configuration, sharding, and load balancing. Kapron hides the peculiarities of the database protocols and gives its clients a simpler and clearer interface. It is a very handy thing, and an ideal place for the logic described above.

So, the application needs to perform some operation on the data. It builds a request and passes it to Kapron. Kapron determines which shard the request belongs to, establishes a connection to the right server (or reuses an existing one), and sends it the command. If the server is unavailable or the response timeout is exceeded, the request is resent to one of the replicas. If that fails, the request goes to the next replica, and so on, until the replicas run out or the request is processed. Server availability status is kept between requests, so if the master was unavailable, the next request goes straight to a replica. In the background Kapron keeps knocking on the master and, as soon as it comes back to life, immediately starts sending requests to it again.
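
The routing described above can be sketched roughly as follows. This is not Kapron's code: the names `Shard`, `Unavailable`, `send`, and `retry_after` are hypothetical, the transport is passed in as a plain function, and the background probing of the master is simplified to "try the master again on a later request once `retry_after` seconds have passed".

```python
import time
from typing import Callable, List, Optional


class Unavailable(Exception):
    """Raised by the transport when a host is down or did not answer in time."""


class Shard:
    """One master plus its replicas; remembers master health between requests."""

    def __init__(self, master: str, replicas: List[str],
                 send: Callable[[str, str], str], retry_after: float = 1.0,
                 clock: Callable[[], float] = time.monotonic):
        self.master = master
        self.replicas = replicas
        self.send = send                # hypothetical transport: send(host, request) -> reply
        self.retry_after = retry_after  # seconds to wait before trying the master again
        self.clock = clock
        self.master_down_since: Optional[float] = None  # None: master believed healthy

    def _master_worth_trying(self) -> bool:
        if self.master_down_since is None:
            return True
        return self.clock() - self.master_down_since >= self.retry_after

    def execute(self, request: str) -> str:
        if self._master_worth_trying():
            try:
                reply = self.send(self.master, request)
                self.master_down_since = None   # master is back, use it again
                return reply
            except Unavailable:
                self.master_down_since = self.clock()
        # Master is down or was skipped: walk the replicas in order.
        for replica in self.replicas:
            try:
                return self.send(replica, request)
            except Unavailable:
                continue
        raise Unavailable("master and all replicas failed")
```

Keeping `master_down_since` on the shard object is what lets the next request skip a master that is known to be unreachable instead of waiting for another timeout.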

Because the flow of requests is large, knowledge about which database servers are unreachable is updated quickly, which lets the system respond to a changing situation as fast as possible.

The result is a fairly simple and versatile scheme. It handles not only crashes of the master copy but also ordinary network delays. If the master times out, the request is redirected to a replica (and, if it does not modify data, is processed successfully). In general this makes it possible to lower the network timeout thresholds for read requests and, when the master is slow, to return the result to the client faster.

The second bonus: software updates and other scheduled work on database servers are now less painful. Since read requests usually make up the vast majority, restarting the master does not cause a denial of service for most requests.

We do not plan to stop there and will try to eliminate some obvious flaws:

1) On a network timeout we try to send the request to a replica. For a modifying request this is wasted effort, because the replica cannot process it. It makes sense never to send modifying commands to a replica in the first place. This is solved by manually configuring command types.

2) At present a network timeout does not mean that the data on the master will not be changed, even though we tell the client that we could not process the request. In fairness, this flaw existed before. The situation can be fixed by limiting command processing time on the Tarantool side (if the changes are not applied within the specified time, they are automatically rolled back and the request is reported as failed). We set the processing timeout to 1 second and the network timeout in Kapron to 2 seconds.

3) And, of course, we are waiting for synchronous master-master replication from the Tarantool developers. It will make it possible to distribute requests evenly among several master copies and to process requests successfully even if one of the servers is unavailable!
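
The first two points can be sketched together. The example assumes a hand-maintained set of read-only command types and treats everything else as modifying; the command names and the helpers `failover_targets` and `timeouts_are_consistent` are hypothetical and only illustrate the idea, they are not Kapron's or the database's actual configuration. The timeout values are the ones given above.

```python
from typing import List

# Hypothetical, hand-maintained classification of command types.
# Anything not listed here is treated as modifying, which is the safe default.
READ_ONLY_COMMANDS = frozenset({"select"})

SERVER_PROCESSING_TIMEOUT = 1.0  # seconds, enforced on the database side
NETWORK_TIMEOUT = 2.0            # seconds, enforced in the proxy


def failover_targets(command: str, master: str, replicas: List[str]) -> List[str]:
    """Hosts to try, in order. Modifying commands never reach a replica."""
    if command in READ_ONLY_COMMANDS:
        return [master, *replicas]
    return [master]


def timeouts_are_consistent(processing: float, network: float) -> bool:
    """The server must give up and roll back before the client stops waiting."""
    return processing < network
```

Keeping the processing timeout below the network timeout means that by the time the proxy reports a failure to the client, the server has either applied the change or already rolled it back.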

Dmitry Isaykin, Leading Mail.Ru Mail Developer
