Creating AI by the "glokaya kuzdra" method. An intellectual odyssey

I wanted to write a talking program. I really wanted to, desperately.

Of course, I am far from a professional programmer, and I am no linguist either, but I do have systems thinking and a feel for language. Besides, I have long been interested in AI and even wrote a couple of posts about it at one time. Why not turn that knowledge into program code? So I tried, as best I could.

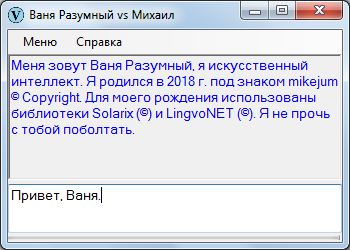

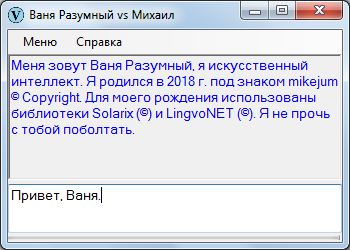

Meet Vanya Reasonable.

Below I describe the problems I ran into along the way and how I got around them.

As for the result, let me make a reservation right away: the goal was not the program code but a formulation of the principles of artificial thinking that works NOT on the basis of physical reality, as biological organisms do, but on the basis of SYNTAX. Fans of the Turing test, neural networks and machine learning need not bother.

Be warned: this is a long read.

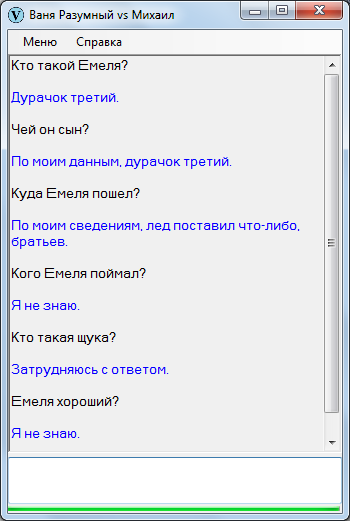

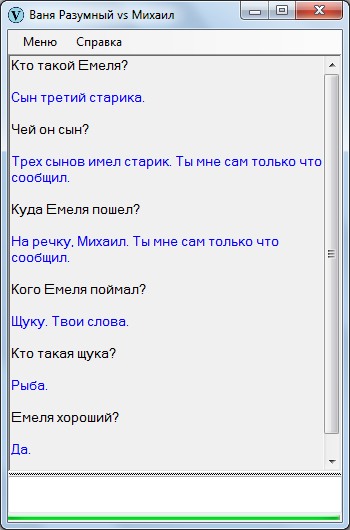

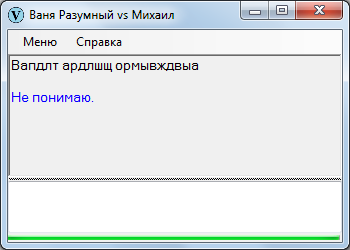

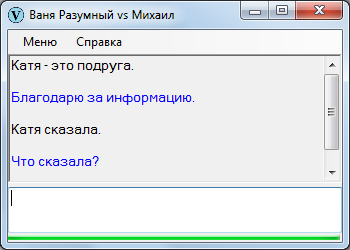

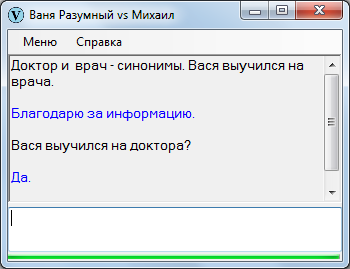

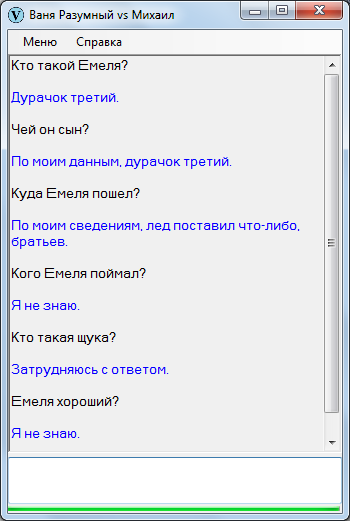

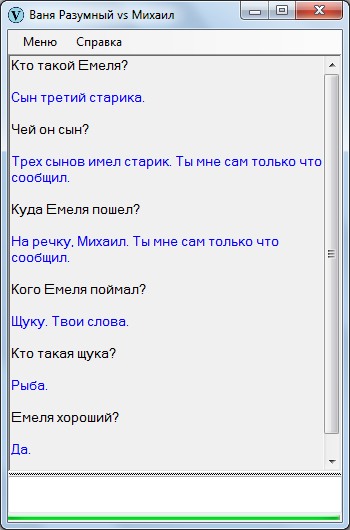

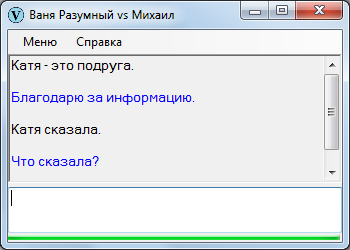

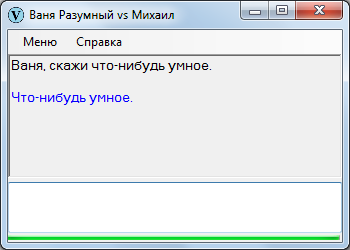

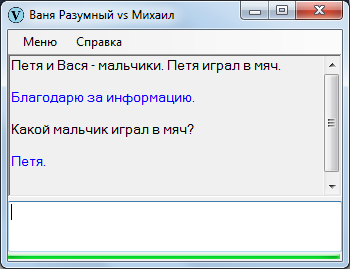

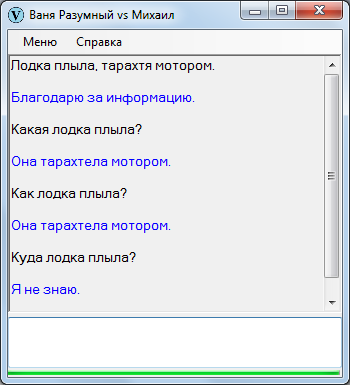

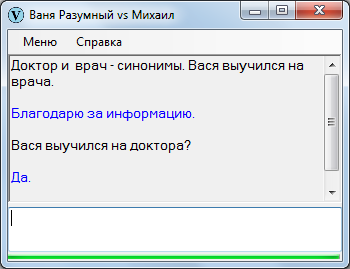

To avoid baseless expectations followed by malicious screenshots in the comments, I will show Vanya Reasonable at his least presentable right away. Here I loaded the beginning of the fairy tale about Emelya and tried quizzing him on it, just like in a literature class in elementary school.

Original text:

Once upon a time there was an old man. He had three sons: two clever ones, and the third, Emelya the fool. The brothers work, while Emelya lies on the stove all day and does not want to know anything.

One day the brothers went to the market, and the women, his sisters-in-law, started sending him off:

- Go fetch some water, Emelya.

And he answered them from the stove:

- I don't feel like it ...

- Go, Emelya, or else when your brothers return from the market they will not bring you any gifts.

- All right.

Emelya climbed down from the stove, put on his shoes, got dressed, took the buckets and an axe and went to the river. He cut through the ice, filled the buckets and set them down, while he himself looked into the ice hole. And Emelya saw a pike in the ice hole.

Dialogue:

However, the text can be adapted, for example like this:

The old man had three sons. The first son was smart. The second son was smart. The third son was a fool. Emelya is the third son of the old man. Emelya is good.

The older sons work all day, and Emelya lies on the stove.

Once the brothers went to the bazaar. The sisters-in-law say to Emelya:

- Go get some water.

Emelya replies from the stove:

- I will not go for water.

The sisters-in-law say:

- The brothers will not bring you gifts.

- Okay.

Emelya got down from the stove, put on his shoes, got dressed, took the buckets and the axe. Then Emelya went to the river.

Emelya cut the ice and filled the buckets. Emelya saw a pike in the ice hole. A pike is a fish. Emelya caught the pike.

After adaptation it comes out decently:

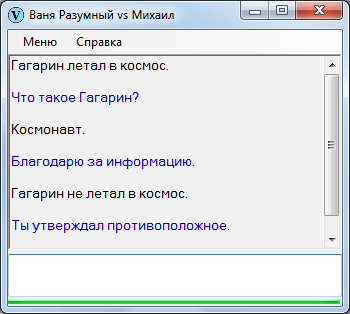

All the same, he gets unacceptably confused in literary texts. Even so, Vanya really does think, unlike many of his peers, above all those built on neural networks, which only pretend to be reasonable but are in fact nothing more than plastic dolls. If you want to know why, read on.

Now to the intellectual odyssey itself.

I reasoned as follows.

What is the main problem of AI? That a computer cannot understand the meanings of words and therefore cannot be "reasonable". People, by contrast, are considered reasonable because they understand the words spoken by the other party.

In fact, people are not reasonable in the slightest. A word in itself means no more than the letters that make it up (in essence, curved lines) or the sounds (air vibrations). Meaning arises only as a relationship between letters and sounds as elements of articulate speech, through stable associations, including links to the world of visual perception. For example, someone points at an object and says: "This is a tree." And you understand that this object is called a tree.

A chatbot (by which we agree to mean an AI that deals exclusively in conversation) cannot be shown an object and told "This is a tree", because the chatbot has no eye, that is, no video camera. One can explain only in words, but how do you explain anything to it if it does not understand human speech?!

Fortunately, the relationships between the elements of speech are defined, and quite rigidly: syntax is responsible for them. Consequently, any text built according to the laws of syntax contains a certain meaning fixed in that syntax. This is the very "glokaya kuzdra" from the title, famous and unsurpassed as an illustration, which, as it turned out, was applied to computing research long ago.

From a colleague's recollections of the distant 70s:

On the basis of the "kuzdra" one can build a full-fledged dialogue.

- Who budlanula the bokr?

- The kuzdra.

- Who kurdyachit the bokrenok?

- Also the kuzdra.

- What is she like?

- Glokaya.

- How did she budlanula the bokr?

- Shteko.

Such an AI, communicating quite fluently, will not be able to understand the meanings of the words it uses: it will have no idea what a "kuzdra" is, who a "bokr" is, or how one manages to "budlanut". But can we conclude on these grounds that the AI is unreasonable, while a human, a perfect biological creature who "understands" the semantics of his own expressions, thinks fully, unlike the AI? Come now ...

People do not see objects, but observe a panorama of colored dots - in fact, chaotic. Objects are formed not in the external world, but in the human brain, due to the linking of color dots together, in separate aggregates. This means that we exist in a kind of illusory world, which in a certain sense we have constructed.

I have read that a certain primitive tribe does not see airplanes in the sky: they simply do not see them, and that is all. I have also read that the Indians did not notice Columbus's ships approaching the shore because ... they simply did not notice them. They noticed only after they were told how to look, that is, how to link the colored pixels into specific objects. I believe these pseudo-geographic tales: such visual paradoxes seem plausible to me.

A person has no reason to believe that his thinking is arranged in some special way; therefore the "glokaya kuzdra" principle is the only way to make anyone reasonable, be it a chatbot or a person. The tools may differ, but the concept is unconditional. I thought so before starting the work and did not change my mind on finishing it.

Having set the task, I started googling for suitable C# libraries.

The first disappointment awaited me here: nothing useful, at least nothing free, turned up. I did not need ready-made chatbot modules; I was interested in working with word forms. What could seem simpler than to:

a) determine the morphological features of a word form,

b) return a word form with the required morphological features?

The vocabulary is limited, and so is the number of features. And it is such a useful, everyday thing ... No such luck!

Finding nothing, I rashly tried to code a morphological analyzer myself. It did not go well from the start. My intellectual odyssey would have ended ingloriously right there, in the port of departure, had a second, more thorough round of googling not turned up a couple of free libraries, which I ended up using. As always, thanks to the enthusiasts.

The first library is Solarix. It can do a lot, but because of my low competence and lack of funds (the library is only partially free), I used only the unconditionally free parser, which parses the text and writes the result to a file.

The second library is LingvoNET, designed to decline and conjugate Russian words. The first library can also decline and conjugate, but this one won me over by being easy to learn.

Having got what I wanted, I rolled up my sleeves.

I present the final result in abridged form. I will not describe all my intellectual wanderings and difficulties: they are too tangled. I often wandered off in the wrong direction and had to rethink and redo what had seemed finished.

In the concept I implemented, the primary element is a word with known morphological features.

The morphological features of words are returned by the Solarix parser, so it would seem there are no problems. There are, however, because some phrases carry a common, inseparable meaning and must therefore be perceived by the AI as a single element.

I counted five kinds of such phrases:

1. Idioms.

Unfortunately, the parser does not return them.

The locksmith was twiddling his thumbs.

For the parser, "twiddling his thumbs" is several separate words, although they share a single meaning, and the phrase has to be perceived the way our brain perceives it: as a whole.

2. Proper Names

Alexander Sergeevich Pushkin.

Clearly this is a single pointer to a single object, although the individual words that make it up can be used in other pointers.

3. Sayings, proverbs, aphorisms.

As a rule, they also form a single semantic field.

An old horse does not spoil the furrow.

4. Compound function words (as a result, due to the fact that, for the reason that, etc.).

The parser returns some of them as a unit, others not.

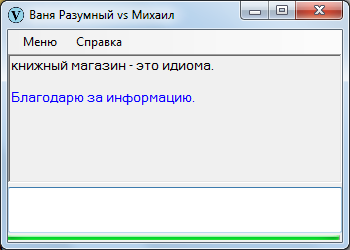

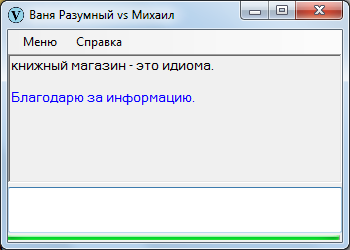

5. Simply set phrases of the "bookshop" type. You cannot attach just any adjective to the noun "shop", even if the corresponding goods are sold in it. No one will say "pasta shop", even if the store sells nothing but pasta.

Compound words, whatever their type, must be treated as a single, indivisible construct ... But how do you know which word combinations belong to this group?

The most obvious way is through direct instructions:

A more complex option, applicable only to names, is to detect them by capital letters:

Clearly, compound words can also be identified by their repeated occurrence, but that is difficult. Dictionaries could be a simpler and more efficient way; however, I had no luck with dictionaries: they could not be used within the concept I implemented (I will return to them at the end of the post).
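The first two options can be sketched roughly as follows. This is only an illustration in Python (the program itself was written in C#); the function name `merge_compounds` and the format of `known_phrases` are assumptions, not the author's code. Known phrases are matched first, then runs of two or more capitalized words are glued together; the first word of a sentence is skipped, so a name that opens a sentence is deliberately missed.

```python
def merge_compounds(tokens, known_phrases):
    """Merge multi-word units into single tokens.

    tokens: list of words of one sentence.
    known_phrases: set of tuples of lowercase words ("direct instructions").
    """
    merged = []
    i = 0
    while i < len(tokens):
        # 1. Direct instructions: try the longest known phrase starting here.
        match = 0
        for phrase in sorted(known_phrases, key=len, reverse=True):
            window = tuple(t.lower() for t in tokens[i:i + len(phrase)])
            if phrase and window == phrase:
                match = len(phrase)
                break
        if match:
            merged.append(" ".join(tokens[i:i + match]))
            i += match
            continue
        # 2. Names: a run of capitalized words (not at the sentence start).
        j = i
        while i > 0 and j < len(tokens) and tokens[j][:1].isupper():
            j += 1
        if j - i >= 2:
            merged.append(" ".join(tokens[i:j]))
            i = j
            continue
        merged.append(tokens[i])
        i += 1
    return merged


sentence = ["We", "read", "Alexander", "Sergeevich", "Pushkin", "as", "a", "result"]
print(merge_compounds(sentence, {("as", "a", "result")}))
```

Detecting compounds by repeated occurrence would need corpus statistics and is not attempted here.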

The words are identified and their properties recorded; what next? Next we need to determine the backbone of the phrase: any AI developer has probably come to the same conclusion.

Here is a phrase:

A carpenter planes a board.

What can you say about it, focusing solely on the syntax?

We can say that:

1. The subject (a noun in the nominative case) is what performs the action.

2. The object (a noun in a non-nominative case) is what the action is directed at.

3. The action itself is a verb.

In the passive voice, subject and object are interchanged. The passive voice can be converted back into the active: the object (in the passive it is always in the instrumental case) becomes the subject in the nominative case, and the subject becomes the object in the accusative case.

It was:

The board was planed by the carpenter.

It became:

The carpenter planed the board.

In other words, voice gives the phrase invariance: the same content can be expressed in different forms.
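The conversion rule can be written down in a few lines. The sketch below is in Python and uses hypothetical `Noun` and `Sector` records; it illustrates the swap of roles and cases described above and is not a reconstruction of the original C# code.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Noun:
    lemma: str
    case: str  # "nominative", "accusative", "instrumental", ...


@dataclass(frozen=True)
class Sector:
    subject: Noun
    verb: str
    obj: Noun
    voice: str = "active"


def to_active(sector):
    """Turn a passive sector into an active one; leave anything else untouched."""
    if sector.voice != "passive" or sector.obj.case != "instrumental":
        return sector
    # The instrumental agent becomes the nominative subject,
    # the former subject becomes the accusative object.
    agent = replace(sector.obj, case="nominative")
    patient = replace(sector.subject, case="accusative")
    return Sector(agent, sector.verb, patient, "active")


passive = Sector(Noun("board", "nominative"), "plane",
                 Noun("carpenter", "instrumental"), "passive")
print(to_active(passive))
```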

In some cases, the invariance of the phrase is practically indefinable, unfortunately.

We have a statement:

The matches lie in the box.

It can be reformulated:

The box contains matches.

The content of both phrases is identical, but the actions expressed by the verbs are formally opposite in direction (in the first case the matches act on the box, in the second the other way round), while both verbs are in the active voice.

"To lie" and "to contain" are not even antonyms but something for which, as far as I understand, linguistics has no name. And if there is no name, there can be no dictionaries of such pairs: a difficulty the AI will inevitably run into when trying to "comprehend" the information it receives.

The joint use of the triad is not necessary: any of these elements may be absent in the phrase.

Hippo moved.

Evening.

It is light.

Conversely, elements may occur more than once, for example:

Vasya and Petya fought.

As for multiplicity, after long reflection and rework I settled on the following processing rules:

1. The subject is always present in a sentence. If there is no subject, a stub standing for a noun is inserted into the source text.

It is light.

Convert to:

[Noun] is light. In other words: Something is light.

2. The action may be absent if there is no object. If there is an object, a stub standing for a verb is inserted.

A step into the abyss.

Convert to:

A step [verb] into the abyss. It can be read as: A step [is directed] into the abyss.

3. The object may be absent from a sentence. No stub is used.

He went.

Expanding the phrase to "He went [noun]" makes no sense, because one does not necessarily go somewhere: the word may simply mean the start of movement.

4. The subject and the object may be multiple. The phrase is not divided.

Multiple subjects:

A boy and a girl were watching TV.

Multiple objects:

The hunter bagged a moose and a boar.

5. Only a single action is allowed: when there are several actions, the phrase is split according to the number of verbs.

The janitor smiled and shook his finger.

Convert to:

The janitor smiled. The janitor shook his finger.

In this case the phrase has to be split.
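The five rules can be combined into one small routine. The following Python sketch assumes the parser output has already been reduced to `(word, part_of_speech, case)` triples; that format, the stub strings and the function name `to_sectors` are illustrative assumptions. Attaching each object to the nearest preceding verb is also a simplification that relies on word order.

```python
NOUN_STUB = "[noun]"
VERB_STUB = "[verb]"


def to_sectors(tokens):
    """Split one sentence into sectors with at most one action each."""
    subjects = [w for w, pos, case in tokens
                if pos == "noun" and case == "nominative"]
    if not subjects:
        subjects = [NOUN_STUB]              # rule 1: the subject is always present
    sectors = []
    pending = []                            # objects seen before the first verb
    for word, pos, case in tokens:
        if pos == "verb":                   # rule 5: one sector per verb
            sectors.append({"subjects": list(subjects),
                            "action": word, "objects": pending})
            pending = []
        elif pos == "noun" and case != "nominative":
            if sectors:
                sectors[-1]["objects"].append(word)
            else:
                pending.append(word)
    if not sectors:
        # rule 2: a verb stub is needed only when there is an object;
        # rule 3: a missing object is never stubbed.
        action = VERB_STUB if pending else None
        sectors.append({"subjects": list(subjects),
                        "action": action, "objects": pending})
    return sectors


janitor = [("janitor", "noun", "nominative"), ("smiled", "verb", None),
           ("and", "conjunction", None), ("shook", "verb", None),
           ("finger", "noun", "instrumental")]
for sector in to_sectors(janitor):
    print(sector)
```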

The properties of the main elements (that is, nouns and verbs) are mainly their morphological features: gender, case, person, number, transitivity, reflexivity, modality, and so on: everything the Solarix parser returns. Plus others that linguistics does not treat as such. I used some not entirely canonical characteristics: negation, quotation, punctuation (which I treated as a property of the preceding word), and so on.

Some of these properties are single-valued (for example, gender: no word is masculine and feminine at once), others are multiple. Multiple properties include, for example, number and date. You can say "5 horses", but you can also say "no fewer than 3 and no more than 7 horses": in the second case both numerals refer to the same word and are thus its multiple properties.

It is important that the remaining parts of speech are properties of the main elements or auxiliary entities such as references, in particular:

• adjective - a property of a noun,

• adverb - a property of a verb (as a rule),

• preposition - a property of a noun,

• conjunction - a marker of multiple elements (as a rule),

• pronoun - a reference to a preceding noun,

• numeral - an indicator of the number of subjects or objects.
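As an illustration of this idea, the Python sketch below folds the dependent parts of speech of one sector into properties of its nouns and verb. The input format `(word, part_of_speech)` and the attachment rules (modifiers go to the next noun, adverbs to the verb) are simplifying assumptions that suit the examples here, not a description of the actual implementation.

```python
def attach_properties(tokens):
    """Return the main elements of ONE sector with their properties attached."""
    elements = []
    waiting = []   # adjectives, prepositions, numerals waiting for the next noun
    adverbs = []   # adverbs go to the verb of the sector
    for word, pos in tokens:
        if pos in ("adjective", "preposition", "numeral"):
            waiting.append((pos, word))
        elif pos == "adverb":
            adverbs.append(("adverb", word))
        elif pos == "noun":
            elements.append({"word": word, "pos": "noun", "props": waiting})
            waiting = []
        elif pos == "verb":
            elements.append({"word": word, "pos": "verb", "props": []})
    for element in elements:
        if element["pos"] == "verb":
            element["props"] = list(adverbs)
    return elements


phrase = [("old", "adjective"), ("carpenter", "noun"), ("quickly", "adverb"),
          ("planes", "verb"), ("two", "numeral"), ("boards", "noun")]
for element in attach_properties(phrase):
    print(element)
```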

A case that requires splitting a sentence into parts was mentioned above (the janitor example). It is not the only one: there are others.

The most obvious are compound and complex sentences.

The young man smiled, and the girl smiled in response.

The bather flopped into the water, which was cold.

We divide them into:

The young man smiled. The girl smiled in response.

The bather flopped into the water. The water was cold.

A little less obvious are participial and adverbial-participle phrases. They too express actions, which makes splitting necessary.

The car, having turned around on the spot, crashed into the crash barrier.

The car that had turned around on the spot crashed into the crash barrier.

We split:

The car turned around on the spot. The car crashed into the crash barrier.

The same result would be obtained from several verbs, with which participles and adverbial participles are interchangeable.

The car turned around on the spot and crashed into the crash barrier.

The final structure of all three source variants of the example is identical.

Sentences have to be split not only in the cases above but in many others, including those where splitting would seem to be contraindicated.

An example:

It is good to walk in the autumn forest.

What is there to split when everything is so short and compact? The absence of a subject is a little puzzling, because, as we agreed, the subject should be a noun, and here it is ... an adverb, or what? And yet splitting is necessary!

[Noun] walks in the autumn forest. Good. In the sense of: Someone walks in the autumn forest. That is good.

It was said above that cases serve to distinguish subjects from objects: subjects are in the nominative case, objects are not. Professional linguists will hardly agree with me: they know plenty of cases where objects are in the nominative case, just like subjects.

A cottage is a home.

Not only the subject but also the object is in the nominative case. An exception, you say? One could call it an exception, but it is more logical to treat this construction as two phrases united by a special property. I call such phrases, obtained by dividing a sentence into parts, sectors.

The point is that one sector is the definition of another (or even of several preceding sectors; because of the complexity of that functionality, I did not even try to implement it).

Here the second sector is the definition of the first. And no objects in the nominative case for you!

The main thing, of course, is that not only individual elements, but also sectors can have properties.

In addition to the goal, I used such properties of the sectors as:

• type of message (statement, order, question),

• type of question,

• voice (whether a passive voice was converted into an active one),

• address (addressing the interlocutor by name: yes or no),

• gluing,

• validity,

• time,

• probability.

What linguists call this entity, I have no idea. Maybe a connective?

So, gluings are used to link sectors. Simply put, these are function words like "therefore", "when", "because of which", "in view of which", and so on.

Suppose we have a complex sentence with the gluing "so".

The wind blew, so the foliage rustled.

Clearly the two parts are related as cause and effect: the gluing "so" testifies to this.
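A gluing that is explicitly present is easy to pick up with a lookup table. The Python sketch below is only an illustration: the table `GLUINGS`, the relation labels and the function `link_sectors` are hypothetical, and the input is assumed to be a list of words with punctuation already removed.

```python
# Hypothetical table: gluing word -> relation between the two sectors.
GLUINGS = {
    "so": "cause-effect",
    "therefore": "cause-effect",
    "because": "effect-cause",
    "when": "time",
}


def link_sectors(words):
    """Split a sentence at the first gluing and name the relation it expresses."""
    for i, word in enumerate(words):
        key = word.lower()
        if key in GLUINGS and 0 < i < len(words) - 1:
            return {"first": words[:i], "gluing": key,
                    "relation": GLUINGS[key], "second": words[i + 1:]}
    # No gluing found: syntax alone says nothing about the relation.
    return {"first": words, "gluing": None, "relation": None, "second": []}


print(link_sectors(["the", "wind", "blew", "so", "the", "foliage", "rustled"]))
print(link_sectors(["the", "wind", "blew", "and", "the", "hare", "ran"]))
```

The second call returns no relation at all, because a plain "and" carries no information about cause and effect.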

Gluing may be absent, then it is problematic to detect it - it is almost impossible.

The wind blew and the foliage rustled.

In physical reality we rely on sight, and when communication is verbal, on intonation and visual experience, and we usually guess right; but not in the realm of words alone. How do we distinguish the phrase above, which indicates cause and effect, from an ordinary sequence of events?

The wind blew and the hare ran.

The hare did not run because the wind blew, as we know, but that does not follow from the syntax, although the opposite does not follow either. Who knows how it really happened? Maybe the wind blew, a branch broke off as a result, the hare got frightened and ran: in that case the gust of wind is the reason the hare ran.

For a chatbot this problem cannot be solved in principle: physical reality is one thing, the verbal sphere is another. The element base is different (by element base I mean the way reality is perceived: for a chatbot, this is written speech).

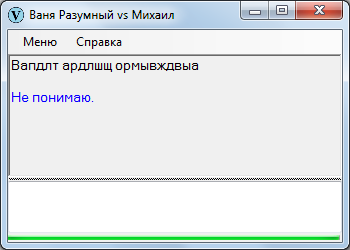

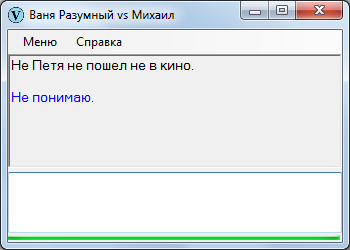

Some phrases turn out to be incorrect. Clearly, if you feed the AI outright rubbish, it will not understand.

However, even a phrase that at first glance follows the rules of syntax can be defective because of various nuances, for example:

Are you able to take in such a phrase? If so, congratulations, because when I try to think it through I fall into a stupor: my brain refuses. At the same time, simplified versions are perceived without any trouble:

Petya did not go to the cinema.

Petya went not to the cinema.

Not Petya went to the cinema.

People perceive the world according to the algorithms embedded in their heads, but when they receive non-standard information, the vaunted mind evaporates somewhere.

It is obvious that defective phrases must be ruthlessly rejected during processing.

The main signs of invalid phrases, in my interpretation:

• the absence of significant words (nouns and verbs),

• too many words not recognized by the parser as parts of speech,

• a noun in a non-nominative case with no verb (an error in the algorithm, since in such cases a verb is forcibly inserted),

• too many negative particles (see above),

• too many dates when there is no enumeration (a sentence usually has one date or, when a period is indicated, two),

• two verbs (the result of an error in the algorithm),

• a lone conjunction (if a single "or" is found, for example, then there is an error either in the phrase or in the algorithm),

• heterogeneous conjunctions (simultaneous use of the alternative conjunctions "and" and "or").
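Several of these signs translate directly into checks. The Python sketch below covers a subset of them for a single sector; the token format `(word, part_of_speech, case)`, the tag names and the thresholds `max_unknown` and `max_negations` are assumptions chosen for illustration, since the text does not state the limits actually used.

```python
def invalid_reasons(tokens, max_unknown=2, max_negations=2):
    """Return the list of reasons why a sector should be rejected (empty if valid)."""
    pos_list = [pos for _, pos, _ in tokens]
    words = [word.lower() for word, _, _ in tokens]
    verbs = pos_list.count("verb")
    reasons = []
    if "noun" not in pos_list and verbs == 0:
        reasons.append("no significant words")
    if pos_list.count("unknown") > max_unknown:
        reasons.append("too many unrecognized words")
    if verbs == 0 and any(pos == "noun" and case != "nominative"
                          for _, pos, case in tokens):
        reasons.append("object without a verb")
    if words.count("not") > max_negations:
        reasons.append("too many negative particles")
    if verbs > 1:
        reasons.append("two verbs in one sector")
    if "and" in words and "or" in words:
        reasons.append("heterogeneous conjunctions")
    return reasons


ok = [("Petya", "noun", "nominative"), ("not", "particle", None),
      ("went", "verb", None), ("cinema", "noun", "accusative")]
print(invalid_reasons(ok))
```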

The sentence is formalized; is it time to write it to the database? Too early. First the missing words must be inserted and the references replaced.

Take this dialogue, for example:

- Petrov, how is your health?

- Fine.

- And your wife's?

- The same.

Formalization requires filling in what is missing, something like this:

- How is Petrov's health?

- Petrov's health is fine.

- And how is the health of Petrov's wife?

- The health of Petrov's wife is also fine.

After that - not earlier - you can proceed to the processing of the text, followed by recording in the database.

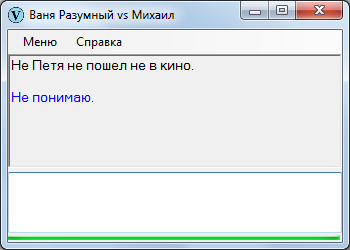

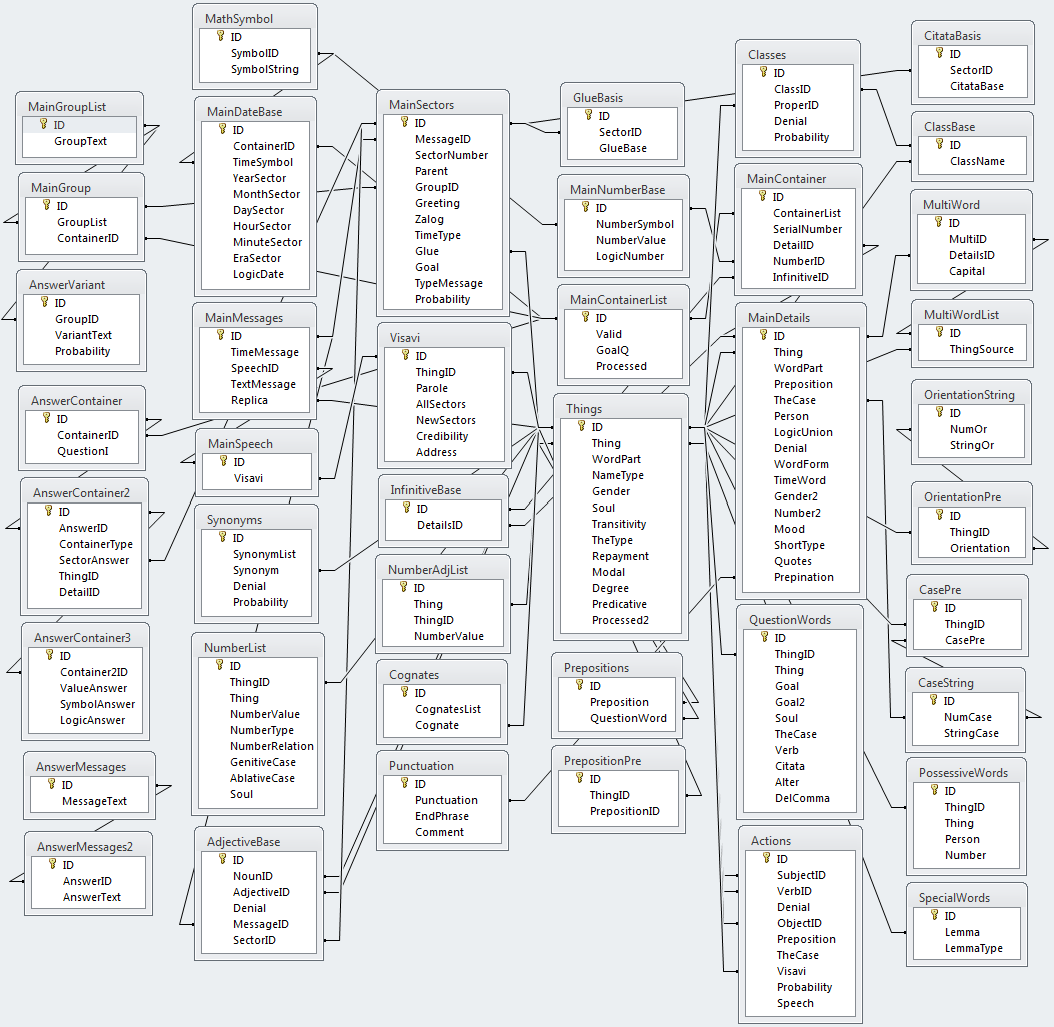

Here it is - the base, with all the properties, as well as auxiliary and reference tables.

Hierarchical structure:

1. Conversation (a communication session with the interlocutor).

2. Message (the text the interlocutor produces at one go).

3. Sentence (an information unit of a message).

4. Sector (a part of a sentence).

5. Word.
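The same hierarchy can be mirrored in code. The sketch below uses in-memory Python dataclasses instead of database tables; the class and field names are illustrative assumptions and do not reproduce the actual schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Word:
    text: str
    features: dict = field(default_factory=dict)    # morphological features


@dataclass
class Sector:
    words: List[Word] = field(default_factory=list)
    properties: dict = field(default_factory=dict)  # message type, gluing, ...


@dataclass
class Sentence:
    sectors: List[Sector] = field(default_factory=list)


@dataclass
class Message:
    sentences: List[Sentence] = field(default_factory=list)


@dataclass
class Conversation:
    interlocutor: str
    messages: List[Message] = field(default_factory=list)


def count_words(conversation):
    """Walk the whole hierarchy down to individual words."""
    return sum(len(sector.words)
               for message in conversation.messages
               for sentence in message.sentences
               for sector in sentence.sectors)


first = Sector([Word("janitor"), Word("smiled")])
second = Sector([Word("janitor"), Word("shook"), Word("finger")])
talk = Conversation("guest", [Message([Sentence([first, second])])])
print(count_words(talk))
```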

Nothing special, God knows, especially for readers of this site ... But I am an amateur, and I agonized over it for a long time.

Once the interlocutor's remark has been recorded in the database, we can proceed to communication proper. The problem is how to communicate. Make some statement? Ask a counter-question? Ask for something?

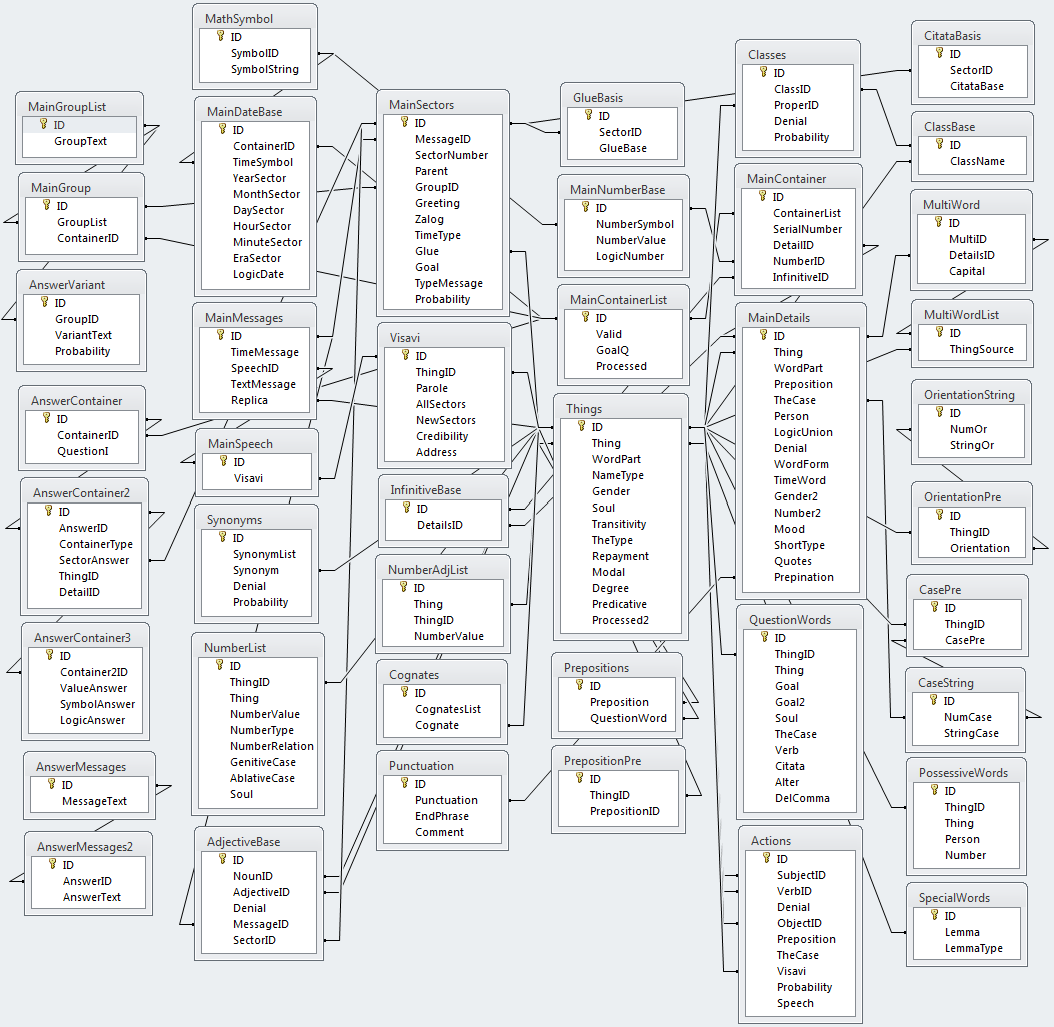

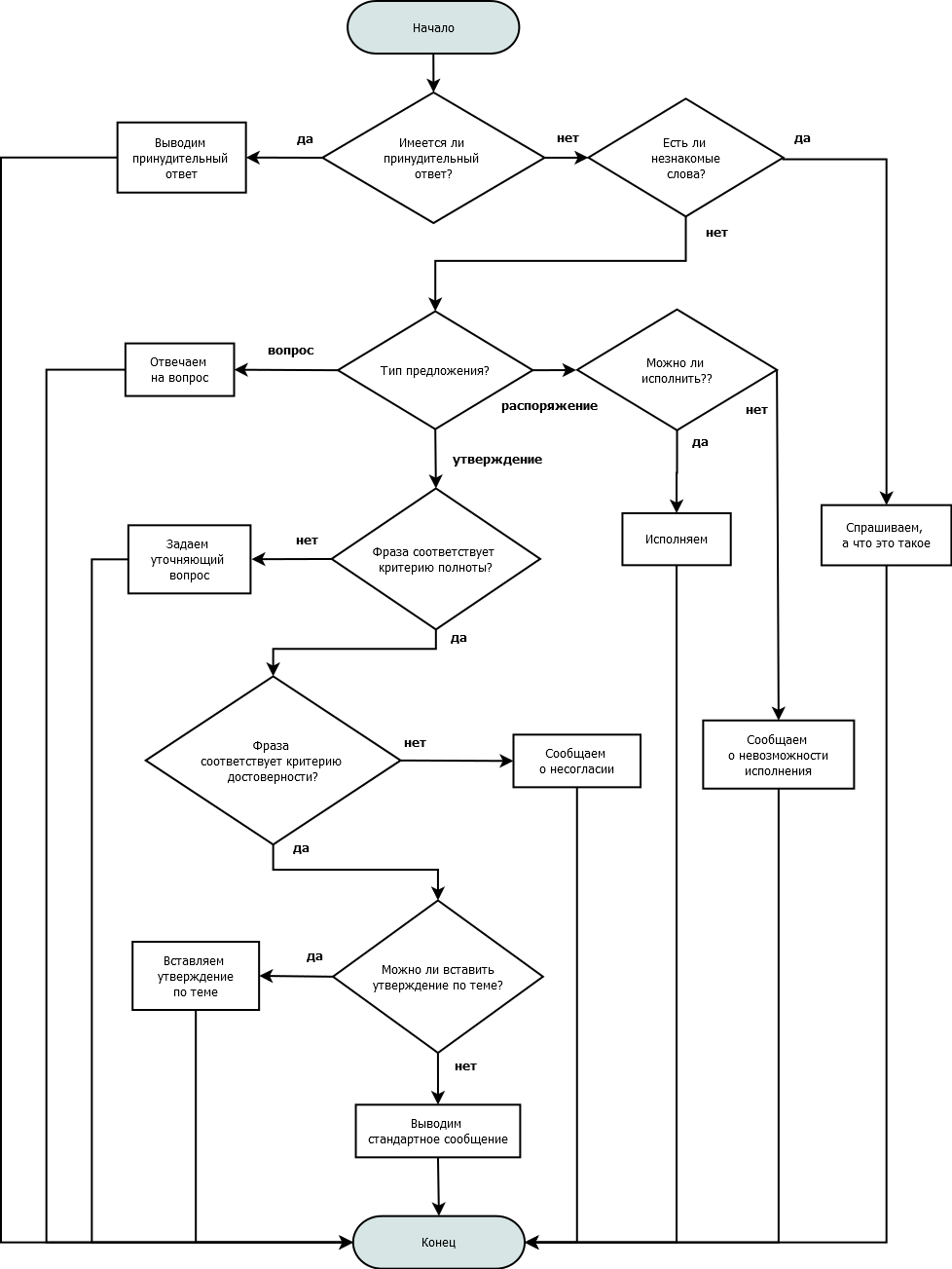

By trial and error a scheme emerged.

A forced response is used, for example, when a sentence is invalid or when the interlocutor has specified that a certain phrase must be answered in a certain way.

Information incompleteness is when it can be established that a significant element, usually an object, is missing from the sentence.

What did you say? Anyone who hears something like that will first of all ask what is meant.

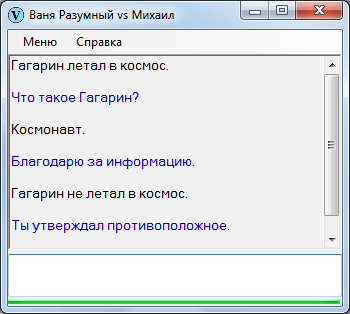

Reliability is agreement with what is recorded in memory. If someone hears "Gagarin did not fly into space" while the opposite is recorded in his memory, he will ask (should ask) for clarification.

A natural reaction.

When a standard answer is needed, Vanya Reasonable picks one at random from a typical set of answers.

I must say that I did not bother much with typical messages, because complete similarity to a human reaction is unattainable by definition. Suppose the chatbot produces a standard "I agree." One can collect synonymous messages and return them at random:

Exactly.

You're right.

You say it right.

Your truth.

etc.

However, each of these synonyms differs in meaning from the others, which is why they are not at all interchangeable in use. These are nuances that people easily pick up from the facial expression and intonation of the interlocutor but that cannot be identified at the level of syntax. This is not syntax: it is physical reality.

Yes, at the syntax level, one cannot even distinguish a negative answer from a positive one!

Let us take two familiar alternative answers to the same question:

How else!

Here's another!

The first answer means “Yes”, and the second “No”. There is no way to determine the values by syntax.

The main role in the dialogue is played by the types of sentences:

1. Order.

2. Statement.

3. Question.

The most elementary of these is the order. Vanya Reasonable tries to work out from the action what the interlocutor wants: if he can carry it out, he does; otherwise he reports a refusal.

Well, what can a chatbot do? Say something, execute a command.

Nothing worth attention.

When the interlocutor makes a statement, the reaction generally comes down to finding a counter-statement on the topic, where the topic is the subject or, if desired, the object.

The astronaut flew to Mars.

In response, having found a statement in the database with the word "astronaut", one can reply:

Astronauts are brave.

And you can search by object, returning something like:

Mars is a red planet.

But there are no guarantees that an inappropriate phrase will not be found in the database, such as:

Planet flying to Mars trash containers.

Choose by some criteria? But which ones? After much deliberation I decided that, as a response statement on the topic, the AI should produce a phrase expressing its personal relation to the subject:

I did not fly to Mars.

Again, provided such a phrase is found in the database.

Instead of a response statement, a question may be generated, in case Vanya has not understood something about the topic.

Questions are divided into two subtypes, which I have labeled for myself as general and special.

General questions have no question word and allow the answers "Yes" or "No".

Special questions require an individual answer in each case; a simple "Yes" or "No" will not do here.

How many people are on earth?

Why are elephants big?

Who is Napoleon?

I had to systematize the interrogative words. Each question word - which, fortunately, is still a bit - was assigned a type. It turned out a list of the form:

Thing: what?

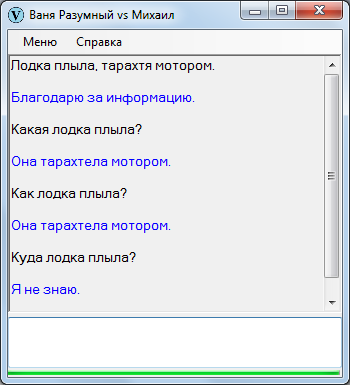

Name: who?

Place: where? Where?

Adjective: which one?

Adverb: how?

Number: how much?

Date: when?

The reason: why?

And so on.

In the absence of an interrogative word, the question is general.
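The classification can be sketched as a lookup over the list above. In this Python illustration the table is the English rendering of the list ("how many" is added as an English variant of "how much"), and the assumption that the question word opens the sentence is a simplification that suits English examples; `classify_question` is a hypothetical name.

```python
QUESTION_TYPES = {
    "what": "thing", "who": "name", "where": "place", "which": "adjective",
    "how": "adverb", "how much": "number", "how many": "number",
    "when": "date", "why": "reason",
}


def classify_question(text):
    """Return ("special", expected_type) or ("general", None)."""
    normalized = text.lower().strip(" ?")
    # Longer question words first, so "how many" wins over "how".
    for qword in sorted(QUESTION_TYPES, key=len, reverse=True):
        if normalized == qword or normalized.startswith(qword + " "):
            return ("special", QUESTION_TYPES[qword])
    return ("general", None)


for question in ("How many people are on earth?", "Why are elephants big?",
                 "Who is Napoleon?", "Did Petya go to the cinema?"):
    print(question, classify_question(question))
```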

For a general question, Vanya Reasonable looks for an answer not only to the question itself but also, if that answer is negative, to all the special questions derived from it. The goal is to offer an alternative sector as the response.

The search for the answer is, of course, carried out by templates:

Having found the answer sector, we can give the interlocutor a "Yes", a "No", or a "The devil only knows", but that is for a general question.

If the question is special, we do the same thing with one difference: for the question word we search only by its morphological features, without the word itself.

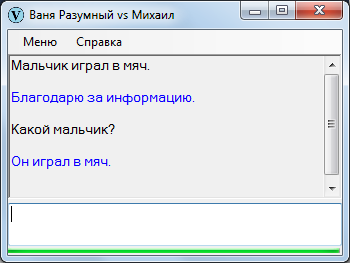

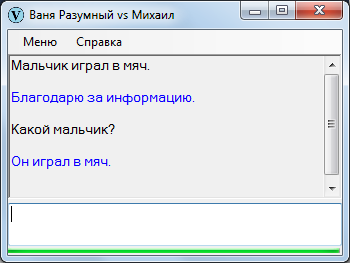

Suppose they asked:

Who shot?

For "shot" we look for the verb "shoot" with the given morphological features, and for "who" we look for any word with the given morphological features. The sector in which both words are present will be the answer.
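A template search of this kind can be illustrated as follows. The Python sketch assumes that each stored sector is a list of dictionaries with a lemma and morphological features; this storage format, the feature names and the functions `matches` and `find_answer` are assumptions made for the example, not the actual database layout.

```python
def matches(word, pattern):
    """A word fits a pattern if every feature named in the pattern agrees."""
    return all(word.get(key) == value for key, value in pattern.items())


def find_answer(database, known, wildcard):
    """Find a sector containing the known word and return the word that
    fills the place of the question word."""
    for sector in database:
        if any(matches(word, known) for word in sector):
            for word in sector:
                if matches(word, wildcard) and not matches(word, known):
                    return word["lemma"]
    return None


database = [
    [{"lemma": "boy", "pos": "noun", "case": "nominative"},
     {"lemma": "run", "pos": "verb"}],
    [{"lemma": "hunter", "pos": "noun", "case": "nominative"},
     {"lemma": "shoot", "pos": "verb"}],
]

# "Who shot?": the verb is searched by lemma, "who" only by its features.
print(find_answer(database,
                  known={"lemma": "shoot", "pos": "verb"},
                  wildcard={"pos": "noun", "case": "nominative"}))
```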

In some cases (in fact, in many) a single search on a question word with given morphological signs does not work, so you have to search repeatedly.

Ask:

Which boy?

"Which" is a question word for an adjective, so in the general case Vanya should look for an adjective. If the database has an adjective for the given noun, the corresponding answer is given.

In another situation the answer may be not only an adjective but something else, such as a participle.

A participle expresses an action, so from a certain point of view any action characterizes a subject no less than an adjective or a participle does.

A more complex template substitution, involving a search by subject:

A couple of dozen such variants: that is how many I managed to find.

Note: one result may answer several question subtypes at once.

There is no answer to the last question, so Vanya accordingly does not reply.

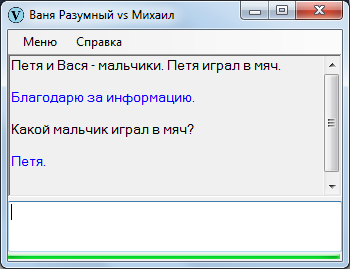

Suppose a chat bot is asked:

Where did the stupid Petya go?

In fact, the question splits into two related sectors:

Where did Petya go? Is Petya stupid?

It is by no means certain that both answers will be positive or both negative: it is quite possible that Petya went to the store and yet is not stupid at all.

We first have to check the accuracy of the definitions, and only then answer the main question.

In this case there is no information about the definition of Petya, so there are no grounds for answering the main question.

Similarly, searching across several sectors becomes necessary for general questions expressed as compound or complex sentences.

I caught the asp that bent the scales?

After division the sentence is converted into sectors:

I caught the asp? The asp bent the scales?

The same applies in all other cases that require division into sectors. Difficult, though surmountable.

Imagine that AI is asked how many astronauts flew into space. It’s good if the database contains one phrase:

40 astronauts flew into space.

And if there is another phrase there?

45 astronauts flew into space.

40 or 45, whom to believe? Probably one of the sources is lying, but which? One can believe either, but what is the criterion of trust? Obviously, the rating of the source. But how can an AI determine a source's rating if, strictly speaking, all interlocutors are the same to it?

It seemed logical to me that the AI should strive to obtain the maximum amount of knowledge. That is: an interlocutor who offers new information (missing from the database) is good; one who does not is bad.

As a result, the following mechanism was implemented:

As a result, the AI is able to determine which of the two statements to believe.
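The details of the mechanism are not given in the text, so the following Python sketch is only a guess at its simplest possible form, built on the stated rule that a source of new information is "good". The class `Memory`, the one-point-per-new-fact scoring and the tie-breaking in favor of the earlier statement are all assumptions.

```python
from collections import defaultdict


class Memory:
    def __init__(self):
        self.facts = {}                  # topic -> (value, source)
        self.rating = defaultdict(int)   # source -> number of new facts supplied

    def tell(self, source, topic, value):
        """Record a statement; on conflict keep the one from the better-rated source."""
        if topic not in self.facts:
            self.rating[source] += 1     # new information raises the rating
            self.facts[topic] = (value, source)
            return
        old_value, old_source = self.facts[topic]
        if value != old_value and self.rating[source] > self.rating[old_source]:
            self.facts[topic] = (value, source)

    def ask(self, topic):
        return self.facts[topic][0] if topic in self.facts else None


memory = Memory()
memory.tell("Anna", "astronauts in space", 40)
memory.tell("Anna", "Mars", "red planet")
memory.tell("Boris", "astronauts in space", 45)   # Boris has supplied nothing new yet
print(memory.ask("astronauts in space"))
```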

Do you think the problem with the number of astronauts is solved? Nothing of the kind, because both sources may be telling the truth. As of January 1 there were 40 astronauts; during the year 5 more people flew into space; as of December 31 there were 45. But how is the AI supposed to know that?!

What caused serious difficulties is the invariance, and so serious that I would like to meet a person who managed to resolve them within the framework of syntax. I'm not at all sure that such a person has already been born.

So, the same message can be formulated differently:

I am sick.

I can not.

Breaks me down

I feel bad.

I am unwell.

Nausea.

Health to hell.

Etc.

Some small obstacles on this path are surmountable.

Synonyms are the most obvious and universally implemented solution.

You can also use cognate words, for example:

Illness.

Pain.

Get sick

Disease.

Painfully.

Here are phrases with them, expressing, in fact, a single thought.

He caught the disease.

He is in pain.

He is ill.

He has a disease.

It hurts him.

Unfortunately, the same thing can be formulated without any cognate words. For example:

Health is shaken.

Therefore, within the framework of syntax there is no complete and unconditional solution to the problem of invariance. To solve it you need not a chatbot but an AI with a full set of human senses.

We have now reached intellect itself, so I will focus on it.

Personally (though everyone has their own opinion, I understand), I see three things that make a thinking being what it is:

1. Feedback.

2. Thinking.

3. Reflection.

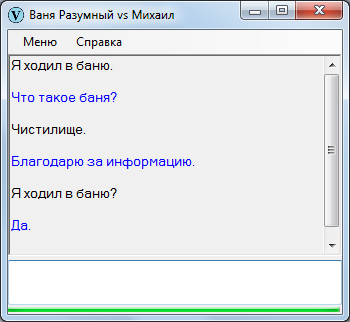

Feedback - the dependence of the reaction from previous experience. It is implemented in a primitive way, due to the recording of information in the database. At first, the AI did not know something, then he heard, remembered, and now he knows that this is the feedback.

Thinking is the process of generating new information that is not directly received from the interlocutor. The interlocutor issues a message, the AI analyzes and comes to its own conclusions.

Vanya Reasonable draws conclusions, then writes them into the database with the note “idea” (property of the sector). All thinking, actually.

So we think:

So AI should think:

The question is not what is thinking, but how artificial thinking should be organized ...

As the basis of thinking I used classes (like numerous predecessors, I have no doubt). In mathematics these are called sets, in linguistics hypernyms, but I prefer the Darwinian-programmer term “classes”. A bulldog belongs to the class of dogs, dogs belong to the class of animals, and so on up and down the hierarchy.

The rules for using them are trivial:

The interesting part is not the classes or their members, but where to get the information needed for the taxonomy.

I came up with the following.

First, a concept's membership in classes can be determined from definitions - directly, so to speak.

If Bug is a dog and all dogs bark, it is pretty obvious that Bug is also able to bark.

Now replace "dog" in the original statement with "Tuzik". Is it logical to assume that if the dog Tuzik can bark, then the dog Bug can too?

It is not. What if, instead of "bark", we insert "give birth to puppies"? Tuzik cannot, but Bug easily can. For this reason, the search returned a negative result. At the same time, an alternative sector was discovered (the ability of Tuzik to bark), which became the answer - in my opinion, a quite informative one.
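The class-based inference above can be sketched roughly as follows. This is only a minimal illustration in Python, not Vanya's actual code: the dictionaries `PARENT` and `ABILITIES`, the function names, and the ability "fetch" are all hypothetical.

```python
# Hypothetical toy knowledge base: each concept points to its direct class.
PARENT = {
    "Bug": "dog",
    "Tuzik": "dog",
    "dog": "animal",
}

# Abilities recorded directly for a concept or for a class.
ABILITIES = {
    "dog": {"bark"},     # stated about the whole class
    "Tuzik": {"fetch"},  # stated about one member only
}


def ancestors(concept):
    """Yield the concept itself and then every class above it."""
    seen = set()
    while concept is not None and concept not in seen:
        seen.add(concept)
        yield concept
        concept = PARENT.get(concept)


def can(concept, action):
    """True if the action is recorded for the concept or for any of its classes."""
    return any(action in ABILITIES.get(c, set()) for c in ancestors(concept))


def siblings_that_can(concept, action):
    """Alternative answer: other members of the same direct class with this ability."""
    parent = PARENT.get(concept)
    return sorted(
        other for other, p in PARENT.items()
        if p == parent and other != concept and action in ABILITIES.get(other, set())
    )


print(can("Bug", "bark"))                 # inherited from the class "dog"
print(can("Bug", "fetch"))                # known only for Tuzik, so not inherited
print(siblings_that_can("Bug", "fetch"))  # the "alternative sector"
```

Abilities flow down from a class to its members but never sideways between members, which is why the second query fails and only the alternative answer mentions Tuzik.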

Another way to discover classes is enumeration. The initial premise: words joined by certain conjunctions (and, or, but, except) or by commas in an enumeration belong to at least one common class.

I never said that Bug is a dog: Vanya Reasonable drew that conclusion himself from the information received. Of course, he could have been wrong: for example, had I written “Tuzik is a male dog”, since Bug cannot be counted among male dogs in any way. I would then have had to make a correction.

Which is what I did, and Vanya reacted accordingly, though not flawlessly.
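A rough sketch of the enumeration heuristic, under loud assumptions: the English connector list, the tokenizer, and the function names are hypothetical, and a real implementation would work on parser output rather than raw strings.

```python
import re

# Assumed connector list, adapted from the conjunctions named in the text.
CONJUNCTIONS = {"and", "or", "but", "except"}


def is_connector(token):
    return token == "," or token.lower() in CONJUNCTIONS


def enumerated_groups(sentence):
    """Group words that stand directly on either side of a connector."""
    tokens = re.findall(r"\w+|,", sentence)
    groups, last_end = [], -1
    for i in range(1, len(tokens) - 1):
        if not is_connector(tokens[i]):
            continue
        if is_connector(tokens[i - 1]) or is_connector(tokens[i + 1]):
            continue  # skip sequences such as ", and"
        if last_end == i - 1:
            groups[-1].append(tokens[i + 1])  # the enumeration continues
        else:
            groups.append([tokens[i - 1], tokens[i + 1]])
        last_end = i + 1
    return groups


def propose_classes(groups, known):
    """Suggest a class for unknown words enumerated together with known ones."""
    proposals = {}
    for group in groups:
        classes = {known[w] for w in group if w in known}
        if len(classes) == 1:  # act only on an unambiguous hint
            (cls,) = classes
            for word in group:
                if word not in known:
                    proposals[word] = cls
    return proposals


groups = enumerated_groups("Tuzik, Bug and Sharik bark")
print(groups)
print(propose_classes(groups, {"Tuzik": "dog"}))
```

The proposals are only guesses, exactly as in the text: a later, more specific statement may force a correction.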

The next way uses certain interrogative adverbs (where, where to, where from, when). If such an adverb refers to a word, it can be concluded that the word belongs to a certain class (place or time).

Finally, action. If subjects perform the same actions, they very probably belong to a common class. As the Americans say, if something swims like a duck, flies like a duck and quacks like a duck, then it is a duck.

The method is inaccurate, because the same actions can be performed by objects belonging to different classes. However, if all the performed actions coincide, this cannot help but suggest certain conclusions.
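One way to put a number on "the performed actions coincide" is sketched below. The Jaccard measure is my own substitute for whatever comparison Vanya actually uses, and the `OBSERVED` data and the threshold are hypothetical.

```python
def action_overlap(actions_a, actions_b):
    """Jaccard similarity of two action sets: 1.0 means all actions coincide."""
    a, b = set(actions_a), set(actions_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical observations: which actions each subject was seen performing.
OBSERVED = {
    "duck": {"swim", "fly", "quack"},
    "stranger": {"swim", "fly", "quack"},
    "plane": {"fly"},
}


def likely_classmates(subject, observed, threshold=0.8):
    """Subjects whose actions overlap with the given one at least `threshold`."""
    return sorted(
        other for other in observed
        if other != subject
        and action_overlap(observed[subject], observed[other]) >= threshold
    )


print(likely_classmates("stranger", OBSERVED))
```

A partial overlap (the plane also flies) stays below the threshold, which reflects the caveat that shared actions alone do not prove a shared class.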

As a result, the thinking process can be likened to solving a jigsaw puzzle, where the complete picture is restored from the available pieces of information.

If you believe that you think differently, that is unlikely ... Imagine that your friend Petrov brags: "My daughter is a first-grader." What can you say about his daughter, of whom you are hearing for the first time? Belonging to the “first grader” class tells you the subject's age. Age (also a class, in a sense) indicates approximate height, weight, intellectual abilities, and so on. The female gender (again, a class) suggests primary and secondary sex characteristics, and belonging to the surname Petrov (a class, everything is a class) proves that the first-grader in question is a fool like all the Petrovs you know.

But seriously, in our conclusions we operate on the subject's membership in a certain set of classes, and on nothing more. So how does our vaunted human thinking differ from ordinary machine algorithms?! In no way.

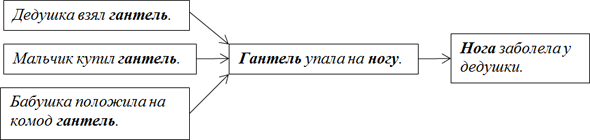

The process of thinking should be based not only on classes, but also on causality (cause-effect relationships).

Pressure changed over the Atlantic, so the wind blew. The wind blew, so the hat fell off my head. The hat fell off my head, so the hat fell into a puddle. The hat fell into a puddle, so the hat got wet.

If we go along the causal chain from the end to the beginning, we will inevitably come to the initial conclusion:

The hat is soaked because pressure has changed over the Atlantic.

And try to say that the conclusion is wrong!
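Walking a causal chain from the end to the beginning can be sketched in a few lines. The `CAUSES` mapping and the function name are hypothetical, and statements are kept as plain strings for simplicity.

```python
# Hypothetical store of "effect -> cause" links taken from the phrases above.
CAUSES = {
    "the hat got wet": "the hat fell into a puddle",
    "the hat fell into a puddle": "the hat fell off my head",
    "the hat fell off my head": "the wind blew",
    "the wind blew": "pressure changed over the Atlantic",
}


def root_cause(effect, causes):
    """Follow the links backward until a statement with no recorded cause is reached."""
    seen = {effect}
    while effect in causes:
        effect = causes[effect]
        if effect in seen:  # guard against circular chains
            break
        seen.add(effect)
    return effect


print(f"The hat got wet because {root_cause('the hat got wet', CAUSES)}.")
```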

But such causal chains can be assembled not only from consecutive phrases but also from separate ones, and then the conclusions reached by the AI will turn out to be unexpected!

There is a second way to establish causality. It is connected with the use of the triad known to us: subject - action - object.

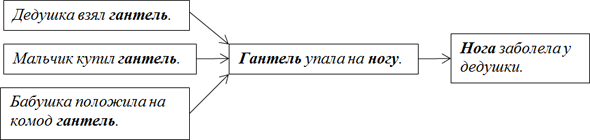

What is the underlying meaning of the triad? Causality between the subject and the object is expressed in a chain of events. Events take place one after another, and the former object becomes a subject that in turn affects another object, and so on.

This sequence is a causal chain in which:

a) the reason the dumbbell fell is that the grandfather took the dumbbell,

b) the reason the leg hurts is that the dumbbell fell on the leg.

This is pure syntax, in which the AI has no idea what a grandfather is, what a dumbbell is, or what a leg is.

According to the existing knowledge base, the dumbbell can fall on the leg only in the case above and in no other.

Imagine that new records are added to the database:

A boy bought a dumbbell.

Grandma put a dumbbell on the chest.

As a result, new potential ways arise for the dumbbell to fall on the leg, after which the grandfather's leg will hurt.

We have a fan of converging possibilities, very suitable for analysis.

A creature that bases its thinking on the rules of syntax can conclude that if a boy bought a dumbbell or grandmother put a dumbbell on a chest of drawers, then the dumbbell can fall on the leg, with all the ensuing consequences. It is legitimate to ask:

Won't the dumbbell fall on the leg?

Or, on the next round of causality:

Won't grandfather's leg hurt?

In fact, this is a prediction of the future, but not only that. It is also a restoration of the past, since it is possible to move along the causal chain not only forward but also backward.

This is the mechanism of prediction:

The central triad in the diagram is the current one. If the subject of the current triad and any object in the subsequent causal chain coincide or at least belong to the same class, we have a causal connection within the same subject-object. The same is with the previous causal chain.
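The chaining of triads can be sketched as follows. This is an assumption-laden illustration, not the author's code: the `Triad` type, the `BASE` records, and both function names are hypothetical, and matching "by class" is left out so that only exact subject/object matches are followed.

```python
from typing import NamedTuple, Optional


class Triad(NamedTuple):
    subject: str
    action: str
    obj: Optional[str] = None


# Hypothetical knowledge base built from the dumbbell example.
BASE = [
    Triad("grandfather", "took", "dumbbell"),
    Triad("dumbbell", "fell on", "leg"),
    Triad("leg", "hurt"),
]


def consequences(current, base):
    """Move forward: the object of one triad becomes the subject of the next."""
    result, frontier, seen = [], [current], {current}
    while frontier:
        triad = frontier.pop(0)
        for nxt in base:
            if triad.obj is not None and nxt.subject == triad.obj and nxt not in seen:
                seen.add(nxt)
                result.append(nxt)
                frontier.append(nxt)
    return result


def possible_causes(current, base):
    """Move backward: triads whose object is the subject of the current triad."""
    return [t for t in base if t.obj == current.subject and t != current]


new_fact = Triad("boy", "bought", "dumbbell")
for t in consequences(new_fact, BASE):
    print(f"Possible consequence: {t.subject} {t.action} {t.obj or ''}".rstrip())
```

Each predicted consequence can then be turned into a question for the interlocutor, and `possible_causes` gives the backward direction used to restore the past.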

Sample implementation:

Pinch me if it's not thinking!

Of course, the presence of causality is far from obvious if the observer is in the physical world.

I saw a birch. Birch rustled foliage.

We, existing outside the rules of syntax, understand that the birch rustles its foliage not at all because someone saw it, but from the point of view of syntax this is exactly the situation. Who would be there to rustle the leaves if I had not looked?!

The more I use the Russian language, the more convinced I am of what a marvel it is!

In addition to feedback and thinking, AI requires reflection.

I remember, in a film about robots, the billionaire inventor ran down the corridor with joyful cries: “She (the artificial woman) realizes herself! She is aware of herself! ”

Naive! There is no mystery in reflection, not even in that of living beings, let alone in that of an AI.

We are aware of ourselves as a subject solely because our body is separated from our thinking. A chat bot is also able to reflect solely through thinking, and we have established what that is: generating messages of its own, different from the interlocutor's replies. In other words, a thinking chat bot should generate internal messages about itself (sent directly to the database rather than to the screen). This poses no noticeable problem.

You can, for example, generate thoughts from the interlocutor's remarks: the interlocutor provides information about the AI, and the AI analyzes it and comes to some conclusions. In the process of analysis it undoubtedly reflects: it becomes aware of itself.

You can also build not on the interlocutor's messages but on your own.

Suppose a question is generated, in accordance with the scheme announced above:

What is motion?

What does this mean? Exactly what it says: a question was generated. The idea is recorded as such, on behalf of the AI:

I asked a question.

We write it to the database but do not output it as an answer: this is reflection.

Clearly, many ideas can be generated - as many as the computing power and the imagination of the Creator allow. If the question is asked by the interlocutor, and the AI's own thought is recorded not about itself but about the interlocutor, this is thinking. In short, thinking about yourself is reflection, and thinking about the outside world is not reflection.
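The split between what is said aloud and what is only recorded can be sketched like this. The `Mind` class, its method names, and the record fields are hypothetical; only the "idea" note and the two example thoughts come from the text.

```python
from dataclasses import dataclass, field


@dataclass
class Mind:
    # Everything the AI remembers, both heard messages and its own ideas.
    database: list = field(default_factory=list)

    def record_idea(self, text, about):
        """Store an internal thought; it is never shown to the interlocutor."""
        kind = "reflection" if about == "self" else "thought"
        self.database.append({"text": text, "note": "idea", "kind": kind})

    def ask(self, question):
        """Output a generated question and reflect on having asked it."""
        self.record_idea("I asked a question.", about="self")
        return question  # only the question itself reaches the interlocutor

    def hear(self, message):
        """Remember an incoming message and think about the interlocutor."""
        self.database.append({"text": message, "note": "heard", "kind": "message"})
        if message.rstrip().endswith("?"):
            self.record_idea("The interlocutor asked a question.", about="world")


vanya = Mind()
print(vanya.ask("What is motion?"))
vanya.hear("How are you?")
for record in vanya.database:
    print(record["kind"], "|", record["text"])
```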

As a result of thinking, parallel worlds appear. In the lower, primary world, corresponding to the physical world of people, messages are exchanged:

- How are you?

- Perfectly.

In the higher world, corresponding to our world of ideas, thoughts are born. Some thoughts relate to the outside world (not reflection), others - to oneself (reflection).

The interlocutor asked a question.

I answered the question.

Or:

The interlocutor for some reason worried.

I am absolutely calm.

Worlds are mixed together, sometimes until completely indistinguishable.

Remark:

Are you really in love?

Its main semantic part, “are you in love?”, belongs directly to the dialogue, whereas the adverb “really” belongs to the world of ideas, which is external to it. A complete reconstructed phrase should sound like this:

Are you sure that you fell in love?

The first part of the phrase refers to the world of ideas, while the second - to the world of dialogue.

As a result, when working with parallel worlds, it becomes necessary to process not only the semantics of messages, but also some external characteristics in relation to them. But how?

Suppose the other person asks:

- What time is it?

Focusing on the lower, semantic world of messages, the AI would have to comb through the whole database in search of an answer.

Suppose it finds a phrase from six months ago:

Now it's 15 hours 30 minutes.

It is unlikely that an outdated message will serve as a correct answer to the current question. And for the data on the current time to stay fresh, it would be necessary, every single instant (good Lord!), to generate a message like:

Now 15 hours 30 minutes 28 seconds 34 milliseconds.

The database will not cope.

Why on earth dig through the database if you can return the system time and thereby refer directly to the world outside the dialogue?! You can, of course, but ...

As one thoughtful character remarked, one cannot embrace the immense. Therefore the number of upper parallel worlds of any system is limited by computing power and, ultimately, by the purpose of the system and the common sense of the Creator.

At one fine moment, when the work on the AI had come to its logical conclusion, I loaded a bit more text into Vanya than usual - some five hundred lines or so - and decided to enjoy the conversation. No such luck! The young man turned out to be slow-witted: he thought for an indecently long time. The cause turned out to be the sheer number of SQL queries.

Suppose there are only 3 words in the phrase (two nouns and a verb): that means 3 queries to the database. The intersection of the results of these queries gives a sector-answer, from which, if desired, you can choose a word-answer, or otherwise use a standard phrase as the answer.

If any word has synonyms, the database has to be checked for each combination.

Take the question:

Did the physician see the hippopotamus?

A synonym of “physician” is “doctor”, and a synonym of “hippopotamus” is “hippo”, so we get 3 additional options:

The doctor saw a hippopotamus.

The physician saw a hippo.

The doctor saw a hippo.

In addition to synonyms, there are classes, which also have to be checked. Since the physician belongs to the class of people, the following phrase will also answer the question:

A person saw a hippopotamus.

Okay, no problem: put the enumerations into a single SQL query. The length of the query string is also finite and has to be controlled, but that is beside the point ...
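Folding synonyms and classes into one query might look like the sketch below, which uses Python's standard `sqlite3` module with an in-memory database. The table layout `statements(subject, action, object)`, the `SYNONYMS` and `CLASSES` dictionaries, and the sample words are assumptions made for illustration; the article does not show Vanya's real schema.

```python
import sqlite3

# Hypothetical lookup tables; in Vanya these would come from the database itself.
SYNONYMS = {"physician": ["doctor"], "hippopotamus": ["hippo"]}
CLASSES = {"physician": ["person"]}


def variants(word):
    """The word itself plus its synonyms and the classes it belongs to."""
    return [word] + SYNONYMS.get(word, []) + CLASSES.get(word, [])


def find_statements(conn, subject, action, obj):
    """One query covering every subject/object combination via IN (...) lists."""
    subjects, objects = variants(subject), variants(obj)
    sql = (
        "SELECT subject, action, object FROM statements "
        f"WHERE subject IN ({','.join('?' * len(subjects))}) "
        "AND action = ? "
        f"AND object IN ({','.join('?' * len(objects))})"
    )
    return conn.execute(sql, [*subjects, action, *objects]).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE statements (subject TEXT, action TEXT, object TEXT)")
conn.executemany(
    "INSERT INTO statements VALUES (?, ?, ?)",
    [
        ("doctor", "saw", "hippo"),
        ("person", "saw", "hippopotamus"),
        ("doctor", "fed", "hippo"),
    ],
)
print(find_statements(conn, "physician", "saw", "hippopotamus"))
```

Only the placeholders are generated dynamically, and the values are still passed as bound parameters, so the number of variants (not their content) is what has to be kept within the database's limits.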

In other situations, where the structure of the phrase changes and therefore the parameters of the SQL query change too, additional calls to the database cannot be avoided.

First, each word has to be checked for negation:

Did not the doctor see the hippo?

The doctor did not see the hippo?

The doctor saw no hippo?

Secondly, an alternative search of another kind has to be carried out - I mentioned this earlier. This is when, for the question "what kind?", constructions based on an adjective, a participle, and a verb are tried one after another.

What to do? If you reduce the number of SQL queries, the AI will lose many analysis capabilities, and the variety of responses will suffer accordingly.

Here enlightenment descended on me. Why search the database if each statement is in itself the answer to a number of questions?

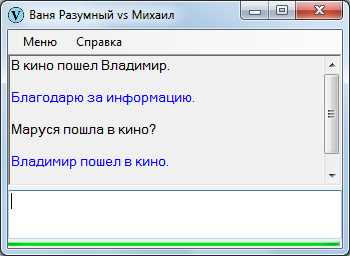

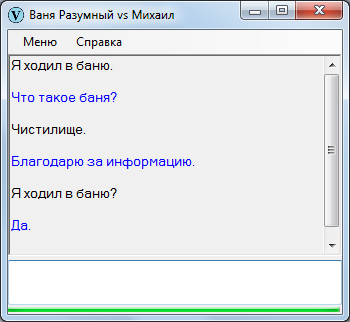

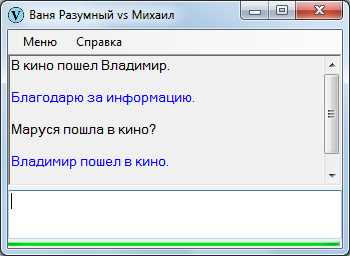

Someone claims:

Marusya went to the movies.

This statement is the answer to a general question:

Did Marusya go to the movies?

It is also the answer to the general question with negation:

Didn't Marusya go to the movies?

By adding interrogative words, you can make up special questions, for example:

Who went to the movies?

Where did Marusya go?

The answer to all these questions is the current sector.

So, having constructed questions of different types, you can enter them into the database and link the source statement's sector to them as the answer! The system is then not overloaded with SQL queries, and the dialogue flows much more briskly than before.
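The idea of storing ready-made questions next to each statement can be sketched as below. Everything here is a simplified assumption: the question templates are English and hand-written, the word forms are passed in by hand (in Vanya they would come from the morphology libraries), and `QuestionIndex` is a hypothetical in-memory stand-in for the database.

```python
def questions_for(subject, verb_past, verb_base, place):
    """Question patterns answered by the statement 'subject verb_past to place'."""
    return [
        f"did {subject} {verb_base} to {place}?",     # general question
        f"didn't {subject} {verb_base} to {place}?",  # general question with negation
        f"who {verb_past} to {place}?",               # special question about the subject
        f"where did {subject} {verb_base}?",          # special question about the place
    ]


class QuestionIndex:
    """Maps pre-generated questions to the statements that answer them."""

    def __init__(self):
        self._answers = {}

    @staticmethod
    def _key(text):
        return " ".join(text.lower().split())  # ignore case and extra spaces

    def add_statement(self, statement, questions):
        for question in questions:
            self._answers.setdefault(self._key(question), []).append(statement)

    def answer(self, question):
        return self._answers.get(self._key(question), [])


index = QuestionIndex()
index.add_statement(
    "Marusya went to the movies.",
    questions_for("Marusya", "went", "go", "the movies"),
)
print(index.answer("Where did Marusya go?"))
```

The cost moves from answer time to recording time: each statement is expanded once, and every later question becomes a single lookup.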

There is no doubt that people think in the same way. If you ask too complicated a question, the interlocutor will not answer at once but will think for as long as it takes to find an answer. But once the answer is found, answers to subsequent similar questions will come instantly. In other words, people react according to the templates they have: if a template exists, the answer follows immediately; if the template is missing, there is no answer (a “quick” stub is inserted instead). So this method of optimization is not “made up out of thin air” but is, in a certain sense, natural.

On the other hand, a question may be asked whose answer is not in the database. Here optimization will not help: the AI will again think for a long time. To avoid a long wait, the search has to be interrupted when the set limit of n seconds is reached. Then Vanya answers with a stub like:

Too complicated question.

Which, again, is fully consistent with natural algorithms. You too will not answer the too complicated question (difficult for you, naturally, and not for Vanya, who is in his infancy).
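A time-limited search with a stub answer can be sketched as follows. The list of search steps and the function name are hypothetical, and in this simple version the limit is checked only between steps, so a step that is already running is not interrupted.

```python
import time

STUB = "Too complicated question."


def answer_with_deadline(search_steps, limit_seconds):
    """Run search steps in order until one answers or the time limit is reached."""
    deadline = time.monotonic() + limit_seconds
    for step in search_steps:
        if time.monotonic() >= deadline:
            return STUB  # out of time: give the stub instead of searching further
        result = step()
        if result is not None:
            return result
    return STUB  # every step was tried and nothing was found


# Hypothetical search steps, from the cheapest to the most expensive.
steps = [
    lambda: None,                           # lookup among pre-generated questions fails
    lambda: "Marusya went to the movies.",  # a slower database search succeeds
]
print(answer_with_deadline(steps, limit_seconds=2.0))
```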

The above method does not exhaust the optimization possibilities. But ... as they say, we make do with what we have.

The conceptual diagram of Vanya Reasonable is as follows:

1. We parse the text using the Solarix library.

2. We process it, transforming it into lists of elements and properties.

3. We write to the database.

4. At the time of recording, we link potential future questions with the current statement.

5. We look for what to say in response (a sector).

6. At the same time we generate our own thoughts (in text form).

7. We transform the sector back into text (restoring the word forms using the LingvoNET library).

8. We give the answer to the interlocutor.

9. In parallel, we process and record the thoughts.

Much of what was planned was not implemented, and it is unlikely to be implemented in the future. There are two reasons:

1. First, limited computing resources. As I already mentioned, to avoid long delays I had to artificially limit the time spent thinking over answers, even though the thinking functionality itself is primitive. There is plenty to elaborate, the possibilities are endless, the ideas are clear and worked out at an initial level, but more powerful hardware is desirable.

2. Secondly, the obvious (to me personally) commercial futility of the undertaking. Kill another year to please an extra dozen readers of this resource? And why should Tsiolkovsky build a rocket in the garage if he has already formulated the principle of jet thrust? If serious people take an interest, it will be possible to build further, properly and with much greater success, but for now I have other things to do. So Vanya Reasonable is nothing more than a prototype of a future AI.

By the way, the purpose of a future full-fledged AI is not at all the execution of voice commands, as practiced by modern voice assistants, but ...

1) Computer psychotherapy.

You return from work upset and say to the AI:

"The boss is a fool."

“Shitty Mutt”, the AI responds with interest.

And so on in the same vein. You will not conduct this kind of dialogue with every interlocutor, not even with a close relative, but with a computer device - by all means. For that, the device must be suitably configured and trained, taking your individual characteristics into account.

2) Testing for accuracy.

As follows from this whole post, syntax has its own semantics, which can be correlated with someone's conclusions. Consequently, the AI is able to check statements for truth by relating them to previous statements known to it. And if the AI is not a chat bot but a device with a video camera and other sensors, then it can relate them not only to the statements of interlocutors but also to the real world, which, as we remember, is only a set of visual points and other elementary objects. We perceive (see and otherwise sense) buildings, trees, clouds, but the AI may perceive something else, something unexpected that the jaded human eye no longer notices. That, you must agree, is much more interesting ...

You can download the release here .

The sources are here, if anyone is willing to delve into amateurish code. But why would you need the code, if Vanya Reasonable is just an illustration for this post, which sets out the principles of creating AI by the “glokaya kuzdra” method?!

And a few last words.

I am not a programmer, so not much can be expected of me. What I mean is that there are a lot of mistakes - perhaps indecently many, even in dialogues, not only when reading literary texts. I note that errors are found not only in my code but also in the libraries used, although in smaller numbers. However, any error in a library is automatically carried over into the dialogue, this has to be understood.

I filled the database as best I could. A couple of hundred phrases: initial ideas about oneself and the human race, the rules of politeness; I did not have enough patience for more. Raising a baby, even a computer one, is a laborious task.

I looked into loading dictionaries, but in the end I gave up the idea. Spelling and synonym dictionaries can still be loaded (although it takes a very long time), but an explanatory dictionary - no way. Dictionaries list entries like this, for example:

Sidorov is a composer. Cantatas, suites.

Alps - mountain range. Ore mining. Tourism.

And I need:

Sidorov - composer. Sidorov - author of cantatas, suites.

Alps - mountain range. Ore is mined in the Alps. Tourism is developed in the Alps.

That is, explanatory dictionaries are completely incompatible: at the level of syntax it is impossible to determine where to insert "author" and where "is mined". In fact, there are not that many dictionaries on the net. What I needed: explanatory, synonyms, words with the same root, phraseological units, hypernyms ... But what is the use now!

If, inspired by this post, you decide to see for yourself how clumsy the author is, you can replenish the database in two ways: either through dialogue or by loading text. When loading, it is better to start each phrase on a new line.

I warn you, phrases are indexed painfully slowly, so loading several pages of text is, honestly, not worth it - one page is enough.

Synonyms are set following the pattern:

Physician, doctor - these are synonyms.

The same goes for antonyms (which are really just members of one class, something I did not realize at once. White and black are antonyms. However, blue, green, etc. are members of the same class, so antonyms do not exist as a separate entity: if you specify antonyms, class members are recorded).

White and black are antonyms.

Standard responses are set in the dialog. I gave an example, but I repeat:

When downloading, the standard answer is placed after the original phrase:

You are a good fellow.

Answer: You too.

It is necessary to type texts competently, with observance of punctuation marks, otherwise you will not be understood.

All tips, actually.

Still, if you do not demand too much, Vanya Reasonable is able to function, at the very least, as a standard chatbot.

From this point of view I am satisfied with my intellectual odyssey. There is crystal clarity in my head about what can be implemented within the framework of syntax and what cannot. And full confidence that what the creation of an artificial being lacks is mechanics and computing power, while as for the principles of thinking, whether based on syntax, on visual or other perception, or on a combined method, there are no theoretical obstacles.


Cold water tub

So that there are no baseless expectations followed by malicious screenshots in the comments, I will immediately demonstrate Vanya Reasonable in an unpresentable form. Here, I downloaded the beginning of "Emeli" and tried to ask around - just like in literature classes in elementary school.

Original text:

Once upon a time there was an old man. He had three sons: two smart, the third - a fool Emelya. Those brothers are working, but Emelya is on the stove all day, he doesn't want to know anything.

Once the brothers went to the market, and the women, daughters-in-law, let's send it:

- Go, Emelya, for water.

And he says to them from the stove:

- I don't feel like it ...

- Go, Emelya, or else when the brothers return from the bazaar they will not bring you gifts.

- Okay.

Emelya climbed down from the stove, put on his shoes, got dressed, took buckets and an ax and went to the river. He cut the ice, scooped up water with the buckets and set them down, while he himself looked into the ice hole. And Emelya saw a pike in the ice hole.

Dialogue:

However, the text can be adapted, for example like this:

The old man had three sons. The first son was smart. The second son was smart. The third son was a fool. Emelya is the third son of the old man. Emelya is good.

The older sons work all day, and Emelya lies on the stove.

Once the brothers went to the bazaar. The daughters-in-law say to Emelya:

- Go get some water.

Emelya replies from the stove:

- I will not go for water.

The daughters-in-law say:

- The brothers will not bring you gifts.

- Okay.

Emelya got down from the stove, put on his shoes, got dressed, took the buckets and the ax. Then Emelya went to the river.

Emelya cut the ice and scooped up water with the buckets. And Emelya saw a pike in the ice hole. A pike is a fish. Emelya caught the pike.

After adaptation it comes out decently:

All the same, with literary texts Vanya gets impermissibly confused. Despite everything, Vanya really thinks, unlike many of his fellows, primarily those built on neural networks, which only pretend to be reasonable but in fact are nothing more than plastic dolls. If you are interested in knowing why, read on.

I turn directly to the description of intellectual odyssey.

Theoretical justification

I argued as follows.

What is the main problem of AI? That the computer cannot understand the meanings of words and, accordingly, cannot be "reasonable". It is assumed, meanwhile, that people are reasonable because they understand the words spoken by the interlocutor.

In fact, people are not reasonable at all. A word in itself means no more than the letters that make it up (in fact, curved lines) or the sounds (air vibrations). Meaning arises only as a relationship between letters and sounds as elements of articulate speech, through stable associations, including connections with the world of visual perception. For example, someone points at an object and says: "This is a tree." And you understand that this object is called a tree.

With a chat bot (by which we agree to mean an AI that deals exclusively with conversation) you cannot point at an object and say “This is a tree”, because the chat bot has no eye, that is, no video camera. You can explain only in words, but how can you explain anything if it does not understand human speech?!

Fortunately, the relationships between the elements of speech are defined, and quite rigidly: syntax is responsible for them. Consequently, any text built according to the laws of syntax contains a certain meaning fixed in the syntax. This is the very “glokaya kuzdra” of the title, famous and unsurpassed as an illustration, which, as it turned out, was applied to computer research long ago.

From the recollections of a contemporary of the distant 70s:

Glokaya kuzdra

In July 2012, an expert came to me at the Concept and said “Glokaya kuzdra”. I understood him. It was a sign of belonging to a once-common semantic field. ...

"Glokaya kuzdra shteko budlanula bokra i kudlachit bokryonka".

In the 1970s, artificial intelligence was studied at the Computing Center of the USSR Academy of Sciences, at the IPU of the USSR Academy of Sciences, and at several other institutes. Among other things, there was a line of research devoted to machine translation. The problem went unsolved for years: semantic ambiguity made it impossible to translate meaning without context. It was unclear how to formalize context in a computer program. And so D. A. Pospelov (probably) circulated an example illustrating the difficulty of such translation. He took a phrase that consists of meaningless words but that, through the structure of the sentence and the sound of the words, evokes perfectly clear and identical associations in completely different people. A machine could not achieve this effect of understanding. Indeed, the glokaya kuzdra, a sort of mean she-goat, has obviously butted the billy goat hard and is doing something outrageous to the kid ... Seminars on machine translation were also held at the IPU, and I would come across notices like this on the board: “Glokaya kuzdra. The seminar will take place at 14:00 in room 425.”

Note. The author of this phrase is the well-known linguist, Academician Lev Vladimirovich Shcherba. The roots of its words are invented, while the suffixes and endings make it possible to determine which parts of speech the words belong to and to derive the meaning of the phrase. L. V. Uspensky's book “A Word about Words” gives its general meaning: “Something of the feminine gender did something in one go to some creature of the masculine gender, and then began doing something prolonged and gradual to its offspring.”

Source: kuchkarov.livejournal.com/13035.html

On the basis of the "glokaya kuzdra", it is possible to build a full-fledged dialogue.

- Who budlanula the bokr?

- The kuzdra.

- Who kudlachit the bokryonok?

- Also the kuzdra.

- What is she like?

- Glokaya.

- How did she budlanula the bokr?

- Shteko.

Such an AI, communicating quite fluently, will not be able to understand the meanings of the words it uses: it will have no idea what a “kuzdra” is, who this “bokr” is, or how one can “kudlachit” anyone. But is that a reason to consider the AI unreasonable, while a person, a perfect biological creature who “understands” the semantics of his own expressions, fully thinks, unlike the AI? Come now ...

People do not see objects, but observe a panorama of colored dots - in fact, chaotic. Objects are formed not in the external world, but in the human brain, due to the linking of color dots together, in separate aggregates. This means that we exist in a kind of illusory world, which in a certain sense we have constructed.

I read that some primitive tribe does not see airplanes in the sky - they simply do not see them, and that is all. I also read that the Indians did not notice the ships of Columbus approaching their shores, because ... they simply did not notice. And they noticed only after they were told how to look - that is, how to link the colored pixels into specific objects. I believe these pseudo-geographic tall tales: such visual paradoxes seem plausible to me.

A person has no reason to believe that his thinking is arranged in some special way; therefore, the principle of the “glokaya kuzdra” is the only way to make anyone reasonable, be it a chat bot or a person. You can use different tools, but the concept is unconditional. I thought so before starting the work and did not change my opinion on completing it.

Libraries

Having set the task, I began to google about the appropriate libraries for C #.

The first disappointment awaited me here: nothing useful, at least free, was not found. All the ready-made modules for the implementation of chat bots were not required, interested in working with word forms. It seemed that it was simpler to:

a) define the morphological features of the word form,

b) return the word form with the required morphological features?

Lexicon is limited, the number of signs too. And the thing is so useful, everyday ... Not there it was!

Finding nothing, rashly tried to code the determinant of morphological features on their own. Somehow it did not immediately set. On this my intellectual odyssey would have ended ingloriously, in the port of departure, if repeated, more thorough, googling did not reveal a couple of free libraries, which I eventually took advantage of. As always, thanks to the enthusiasts.

The first library is Solarix. It can do a great deal, but owing to my limited competence and limited finances (the library is only partially free) I used just the unconditionally free parser, which parses a text and writes the result to a file.

An example of the Solarix parser's output (for the sentence «мама мыла грязную раму», “mom was washing the dirty frame”):

    <?xml version='1.0' encoding='utf-8' ?>
    <parsing>
      <sentence paragraph_id='-1'>
        <text>мама мыла грязную раму.</text>
        <tokens>
          <token><word>мама</word><position>0</position><lemma>мама</lemma><part_of_speech>СУЩЕСТВИТЕЛЬНОЕ</part_of_speech><tags>ПАДЕЖ:ИМ|ЧИСЛО:ЕД|РОД:ЖЕН|ОДУШ:ОДУШ|ПЕРЕЧИСЛИМОСТЬ:ДА|ПАДЕЖВАЛ:РОД</tags></token>
          <token><word>мыла</word><position>1</position><lemma>мыть</lemma><part_of_speech>ГЛАГОЛ</part_of_speech><tags>НАКЛОНЕНИЕ:ИЗЪЯВ|ВРЕМЯ:ПРОШЕДШЕЕ|ЧИСЛО:ЕД|РОД:ЖЕН|МОДАЛЬНЫЙ:0|ПЕРЕХОДНОСТЬ:ПЕРЕХОДНЫЙ|ПАДЕЖ:ВИН|ПАДЕЖ:ТВОР|ПАДЕЖ:ДАТ|ВИД:НЕСОВЕРШ|ВОЗВРАТНОСТЬ:0</tags></token>
          <token><word>грязную</word><position>2</position><lemma>грязный</lemma><part_of_speech>ПРИЛАГАТЕЛЬНОЕ</part_of_speech><tags>СТЕПЕНЬ:АТРИБ|КРАТКИЙ:0|ПАДЕЖ:ВИН|ЧИСЛО:ЕД|РОД:ЖЕН</tags></token>
          <token><word>раму</word><position>3</position><lemma>рама</lemma><part_of_speech>СУЩЕСТВИТЕЛЬНОЕ</part_of_speech><tags>ПАДЕЖ:ВИН|ЧИСЛО:ЕД|РОД:ЖЕН|ОДУШ:НЕОДУШ|ПЕРЕЧИСЛИМОСТЬ:ДА|ПАДЕЖВАЛ:РОД</tags></token>
          <token><word>.</word><position>4</position><lemma>.</lemma><part_of_speech>ПУНКТУАТОР</part_of_speech><tags></tags></token>
        </tokens>
      </sentence>
    </parsing>

The second library is LingvoNET, designed to decline and conjugate Russian words. Other tools for declension and conjugation exist as well, but this one won me over by being easy to master.
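To make the structure of the Solarix parser output shown above more tangible, here is a minimal sketch of reading it into a list of tokens. It is written in Python for brevity (my program was in C#), and the function name `parse_solarix` and the dictionary layout are illustrative assumptions, not part of Solarix. Note that one feature can occur several times in `<tags>` (the verb carries three `ПАДЕЖ` values), so each feature maps to a list.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Shortened sample in the same format as the parser output above.
SAMPLE = """<?xml version='1.0' encoding='utf-8' ?>
<parsing><sentence paragraph_id='-1'><text>мама мыла.</text><tokens>
<token><word>мама</word><position>0</position><lemma>мама</lemma><part_of_speech>СУЩЕСТВИТЕЛЬНОЕ</part_of_speech><tags>ПАДЕЖ:ИМ|ЧИСЛО:ЕД|РОД:ЖЕН</tags></token>
<token><word>мыла</word><position>1</position><lemma>мыть</lemma><part_of_speech>ГЛАГОЛ</part_of_speech><tags>ВРЕМЯ:ПРОШЕДШЕЕ|ПАДЕЖ:ВИН|ПАДЕЖ:ТВОР</tags></token>
<token><word>.</word><position>2</position><lemma>.</lemma><part_of_speech>ПУНКТУАТОР</part_of_speech><tags></tags></token>
</tokens></sentence></parsing>"""


def parse_solarix(xml_text):
    """Hypothetical helper: turn parser XML into a list of token dicts."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    tokens = []
    for tok in root.iter("token"):
        tags = defaultdict(list)
        # Tags look like "NAME:VALUE|NAME:VALUE"; a name may repeat.
        for pair in (tok.findtext("tags") or "").split("|"):
            if ":" in pair:
                name, value = pair.split(":", 1)
                tags[name].append(value)
        tokens.append({
            "word": tok.findtext("word"),
            "lemma": tok.findtext("lemma"),
            "pos": tok.findtext("part_of_speech"),
            "tags": dict(tags),
        })
    return tokens


tokens = parse_solarix(SAMPLE)
```

Everything that follows in the post works on this kind of data: a word, its lemma, its part of speech and a set of features.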

Having got what I wanted, I rolled up my sleeves.

I present the final result in abridged form. I do not describe all my intellectual wanderings and difficulties: they are too tangled. I often wandered in the wrong direction and had to rethink and redo what had seemed finished.

In the beginning was the word

In accordance with the concept I implemented, the primary elements are words whose morphological features are known.

The morphological features of words are returned by the Solarix parser, so it would seem there are no problems. There are, however: some phrases have a common, indivisible meaning and must therefore be perceived by the AI as a single element.

I counted five kinds of such phrases:

1. Phraseological units (idioms).

Unfortunately, the parser does not return them as a unit.

The locksmith was twiddling his thumbs.

For the parser, “twiddling his thumbs” is a set of separate words, although they share one meaning, and the phrase must be perceived the way our brain perceives it: as a whole.

2. Proper Names

Alexander Sergeevich Pushkin.

Clearly, this is a single pointer to a single object, although its individual words can appear in other pointers.

3. Sayings, proverbs, aphorisms.

They, as a rule, also represent a single semantic field.

An old horse does not spoil the furrow.

4. Compound function words (as a result, due to the fact that, for the reason that, etc.).

The parser returns some of them as a unit, others not.

5. Simply stable phrases of the “bookshop” type. Not every adjective can be attached to the noun “shop”, even if the shop sells the corresponding goods. No one will say “pasta shop”, even if the shop sells nothing but pasta.

Compound words, regardless of their type, must be treated as a single, indivisible construct ... But how do you know which word combinations belong to this group?

The most obvious way is through direct instructions:

A more complex option, applicable only to names, is to detect them by capital letters:

- in some proper names, full personal names in particular, every word is capitalized;

- in other proper names only the first word is capitalized (in Russian, for example, «Аральское море», the Aral Sea).

Clearly, compound words can also be detected by how often they recur, but that is difficult. Dictionaries would be a simpler and more efficient way; with dictionaries, however, I was out of luck: they could not be used within the concept I was implementing (I will return to them at the end of the post).
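The two approaches that I did consider usable, direct instructions and capital letters, can be sketched roughly as follows. This is a simplified illustration in Python, not my actual code: `merge_compounds` assumes the compound phrases were given explicitly as a set of lowercase word tuples, and `capitalized_runs` covers only names in which every word is capitalized.

```python
def merge_compounds(words, compounds):
    """Greedy longest-match merge of known multi-word units into one token.

    `compounds` is a set of lowercase word tuples supplied by direct
    instruction (an assumption of this sketch).
    """
    lowered = [w.lower() for w in words]
    max_len = max((len(c) for c in compounds), default=1)
    result, i = [], 0
    while i < len(words):
        # Try the longest candidate first, down to two words.
        for n in range(min(max_len, len(words) - i), 1, -1):
            if tuple(lowered[i:i + n]) in compounds:
                result.append(" ".join(words[i:i + n]))
                i += n
                break
        else:
            result.append(words[i])
            i += 1
    return result


def capitalized_runs(words):
    """Candidate proper names: runs of two or more capitalized words.

    Limitation: the first word of a sentence is always capitalized, so a
    run that starts there may be a false positive.
    """
    runs, current = [], []
    for w in words + [""]:  # the empty sentinel flushes the last run
        if w[:1].isupper():
            current.append(w)
        else:
            if len(current) > 1:
                runs.append(" ".join(current))
            current = []
    return runs


compounds = {("twiddling", "his", "thumbs"), ("due", "to", "the", "fact", "that")}
merged = merge_compounds("The locksmith was twiddling his thumbs".split(), compounds)
names = capitalized_runs("the poet Alexander Sergeevich Pushkin wrote".split())
```

Names of the second kind, where only the first word is capitalized, cannot be found this way, which is one more reason why the approach is more complex than it looks.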

Subject - Action - Object

The words are identified and their properties recorded; what next? Next, the backbone of the phrase has to be determined: any AI developer has probably come to the same conclusion.

Here is the phrase:

A carpenter is making a board.

What can you say about it, focusing solely on the syntax?

We can say that:

1. The subject (a noun in the nominative case) is what performs the action.

2. The object (a noun in any other case) is what the action is aimed at.

3. The action itself is a verb.

In the passive voice, subject and object swap places. The passive can, however, be converted back into the active: the object (in the passive it is always in the instrumental case) becomes the subject in the nominative case, and the subject becomes the object in the accusative case.

It was:

The board was planed by the carpenter.

It became:

The carpenter planed the board.

In other words, the phrase is invariant with respect to voice.
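The conversion of the passive voice into the active one can be sketched as a swap of the two nouns with a change of case. The classes `Noun` and `Phrase` and the case labels below are assumptions made for this Python illustration; in reality the word forms would also have to be regenerated (for example with LingvoNET).

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Noun:
    lemma: str
    case: str  # "nom", "acc", "ins", ...


@dataclass(frozen=True)
class Phrase:
    subject: Noun
    action: str  # verb lemma
    obj: Noun
    voice: str   # "active" or "passive"


def to_active(p):
    """Swap subject and object of a passive phrase; leave active ones alone."""
    if p.voice != "passive":
        return p
    return Phrase(
        subject=replace(p.obj, case="nom"),   # instrumental noun becomes the subject
        action=p.action,
        obj=replace(p.subject, case="acc"),   # former subject becomes the object
        voice="active",
    )


passive = Phrase(Noun("board", "nom"), "plane", Noun("carpenter", "ins"), "passive")
active = to_active(passive)
```

After the conversion both variants of the phrase about the carpenter and the board are stored identically, so later stages never have to deal with voice.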

In some cases, unfortunately, this invariance is practically impossible to detect.

Take the statement:

Matches are in the box.

It can be reformulated:

The box contains matches.

The content of both phrases is identical, but the actions expressed by the verbs formally point in opposite directions (in the first case the matches act on the box, in the second the other way round), while both verbs are in the active voice.

“To lie” and “to contain” are not even antonyms but something for which, as far as I understand, linguistics has no name. And if there is no name, there can be no dictionaries of such pairs of terms: a difficulty the AI will inevitably run into when trying to "comprehend" the information it receives.

The three elements need not all be present: any of them may be missing from a phrase.

The hippo moved.

Evening.

It is light.

Conversely, an element may occur more than once, for example:

Vasya and Petya fought.

As for the multiplicity, after long reflections and alterations, I established the following processing rules:

1. The subject is always present in the sentence. If there is no subject, a stub standing for the noun is inserted into the source text.

It is light.

Convert to:

[Noun] is light. In other words: Something is light.

2. The action may be absent only if the object is absent as well. If there is an object, a stub standing for the verb is inserted.

A step into the abyss.

Convert to:

A step [verb] into the abyss. It can be interpreted as: A step [is directed] into the abyss.

3. The object may be missing from the sentence. No stub is used.

He drove off.

Extending the phrase to “He drove off [noun]” makes no sense, because people do not necessarily drive somewhere: the word may simply denote the beginning of movement.

4. The subject and the object may be multiple. The phrase is not divided.

Multiple subjects:

A boy and a girl were watching TV.

Multiple objects:

The hunter bagged a moose and a boar.

5. Only a single action is allowed: if there are several actions, the phrase is split according to the number of verbs.

The janitor smiled and shook his finger.

Convert to:

The janitor smiled. The janitor shook his finger.

In this case, the phrase has to be split.
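The five rules above can be condensed into a short sketch. It is written in Python and is a simplification, not my actual code: it assumes that every word has already been labelled with a role ("S" for subject, "V" for action, "O" for object, anything else is ignored), and that an object belongs to the nearest preceding verb, which is an assumption of the sketch.

```python
NOUN_STUB, VERB_STUB = "[noun]", "[verb]"


def normalize(tokens):
    """tokens: list of (word, role) in sentence order.

    Returns one (subjects, action, objects) triple per verb.
    """
    # Rule 1: a subject is always present, a stub is used if it is missing.
    # Rule 4: several subjects stay together in one list.
    subjects = [w for w, r in tokens if r == "S"] or [NOUN_STUB]
    verbs = [w for w, r in tokens if r == "V"]
    if not verbs:
        objects = [w for w, r in tokens if r == "O"]
        # Rule 2: a verb stub is needed only when there is an object.
        # Rule 3: a missing object gets no stub.
        return [(subjects, VERB_STUB if objects else None, objects)]
    # Rule 5: one phrase per verb.
    phrases, pending = [], []
    for word, role in tokens:
        if role == "V":
            phrases.append((subjects, word, pending))
            pending = []
        elif role == "O":
            (phrases[-1][2] if phrases else pending).append(word)
    return phrases


janitor = normalize([("janitor", "S"), ("smiled", "V"), ("shook", "V"), ("finger", "O")])
step = normalize([("step", "S"), ("abyss", "O")])
light = normalize([("light", "other")])
```

Here `janitor` holds two phrases with the same subject, `step` receives a verb stub, and `light` receives a noun stub and no action at all.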

Element properties

The properties of the main elements (that is, nouns and verbs) are mostly their morphological features: gender, case, person, number, transitivity, reflexivity, modality and so on, everything the Solarix parser returns. Plus some others that linguistics does not deal with. I used characteristics that are not entirely canonical: negation, quotation, punctuation (which I treated as a property of the preceding word), etc.

Some of these properties are single-valued (for example, gender: no word is masculine and feminine at once), others are multi-valued. Multi-valued properties include, for example, number and date. You can say “5 horses”, but you can also say “not less than 3 and not more than 7 horses”: in the second case both numerals refer to the same word and are therefore its multiple properties.

Importantly, the remaining parts of speech are properties of the main elements or auxiliary entities of some kind, such as references. In particular:

• adjective - a property of a noun,

• adverb - a property of a verb (as a rule),

• preposition - a property of a noun,

• conjunction - a marker that there are several elements (as a rule),

• pronoun - a reference to a preceding noun,

• numeral - an indicator of the number of subjects or objects.
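The idea that the secondary parts of speech are merely properties of nouns and verbs can be shown with a small data structure. This Python sketch is an illustration only: the class `Element`, the property names and the rule "a modifier attaches to the next noun or verb" are my simplifying assumptions here, and pronouns and conjunctions are left out.

```python
from dataclasses import dataclass, field

# Which property of a noun each secondary part of speech feeds.
NOUN_MODIFIERS = {
    "adjective": "adjectives",
    "preposition": "prepositions",
    "numeral": "numerals",
}


@dataclass
class Element:
    word: str
    kind: str  # "noun" or "verb"
    props: dict = field(default_factory=dict)


def attach_properties(tagged):
    """tagged: list of (word, part_of_speech) in sentence order."""
    elements = []
    pending = {"noun": {}, "verb": {}}
    for word, pos in tagged:
        if pos in NOUN_MODIFIERS:
            # Lists allow multi-valued properties such as two numerals.
            pending["noun"].setdefault(NOUN_MODIFIERS[pos], []).append(word)
        elif pos == "adverb":
            pending["verb"].setdefault("adverbs", []).append(word)
        elif pos in ("noun", "verb"):
            elements.append(Element(word, pos, pending[pos]))
            pending[pos] = {}
    return elements


elements = attach_properties([
    ("3", "numeral"), ("7", "numeral"), ("horses", "noun"),
    ("quickly", "adverb"), ("ran", "verb"),
    ("into", "preposition"), ("dark", "adjective"), ("forest", "noun"),
])
```

Only three elements survive, two nouns and a verb; everything else has become their properties, including the two numerals attached to "horses".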

Division of sentences into sectors

Above I mentioned a case that requires splitting a sentence into parts (the example with the janitor). It is not the only one; there are others.

The most obvious: compound and complex sentences.

The young man smiled, and the girl smiled in response.

The bather flopped into the water, which was cold.

We divide them into:

The young man smiled. The girl smiled back.

The bather flopped into the water. The water was cold.

Slightly less obvious are participial and adverbial-participle constructions. They too express actions, however, which makes splitting necessary.

The car, having turned on the spot, crashed into a bump stop.

The car that had turned on the spot crashed into a bump stop.

We split:

The car turned on the spot. The car crashed into a bump stop.

The same result would be obtained from several verbs, with which participles and adverbial participles are interchangeable.

The car turned on the spot and crashed into a bump stop.

The final structure is identical for all three source variants of the example.

Sentences have to be split not only in the cases above but in many others too, including those where splitting would seem to be contraindicated.

An example:

It is good to walk in the autumn forest.

What is there to split when everything is neat and compact? The lack of a subject is a little puzzling, because, as we agreed, the subject should be expressed by a noun, whereas here it is ... an adverb, or what? But no, splitting is necessary!

[Noun] walks in the autumn forest. Good. In the sense of: Someone walks in the autumn forest. That is good.

I said above that case serves to distinguish subjects from objects: the case of the subject is nominative, the case of the object is not. Professional linguists will hardly agree with me: they know plenty of instances where the object is in the nominative case, just like the subject.

A cottage is a house.

Not only the subject but also the object is in the nominative case. An exception, you say? One could call it an exception, but it is more logical to treat this construction as two phrases joined by a special property. Such phrases, obtained by splitting a sentence into parts, I call sectors.

The point is that one sector is the definition of another (or even of several preceding sectors; because of the complexity of that functionality, I did not even try to implement it).

Here the second sector is the definition of the first. And no objects in the nominative case for you!

The main thing, of course, is that not only individual elements, but also sectors can have properties.

In addition to the goal, I used such properties of the sectors as:

• type of message (statement, order, question),

• type of question,

• voice (whether the passive voice was converted into the active),

• address (whether the interlocutor is addressed by name: yes or no),

• gluing,

• validity,

• time,

• probability.

Gluing

What linguists call this entity, I have no idea. Maybe a connective?

So, gluings are used to connect sectors. Simply put, they are function words such as “therefore,” “when,” “because of which,” “in view of which,” and so on.

Suppose we have a complex sentence with the gluing “therefore.”

The wind blew, therefore the foliage rustled.

It is clear that the two parts are related as cause and effect: the gluing “therefore” testifies to this.
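Splitting a sentence at an explicit gluing can be sketched in a few lines. This is a Python illustration under loud assumptions: the table `GLUINGS` and its relation labels are invented for the example, only single-word gluings are handled, and the real sectors would of course hold parsed elements rather than raw strings.

```python
# Hypothetical table: gluing word -> relation between the two sectors.
GLUINGS = {
    "therefore": "cause-effect",
    "so": "cause-effect",
    "because": "effect-cause",
    "when": "time",
}


def split_by_gluing(sentence):
    """Return (first_sector, gluing, relation, second_sector).

    If no known gluing is found between two non-empty parts, the whole
    sentence is returned as the first sector and the rest is None.
    """
    words = sentence.rstrip(".").replace(",", "").split()
    for i, word in enumerate(words):
        key = word.lower()
        if key in GLUINGS and 0 < i < len(words) - 1:
            return (" ".join(words[:i]), key, GLUINGS[key], " ".join(words[i + 1:]))
    return (" ".join(words), None, None, None)


with_gluing = split_by_gluing("The wind blew, therefore the foliage rustled.")
without_gluing = split_by_gluing("The wind blew and the foliage rustled.")
```

With an explicit gluing the relation between the sectors is read straight from the table; without one the function has nothing to go on and returns no relation.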

The gluing may be absent, and then the relation is hard to detect, in fact almost impossible.

The wind blew and the foliage rustled.

In physical reality we rely on sight, and when communication is verbal, on intonation and visual experience, and we usually guess right; in the realm of words alone that does not work. How can the phrase above, which indicates cause and effect, be distinguished from an ordinary sequence of events?

The wind blew and the hare ran.

The hare did not run because the wind blew, we know that, but it does not follow from the syntax, and neither does the opposite. Who knows how it really happened? Maybe the wind blew, a branch broke off as a result, the hare took fright and ran: in that case the gust of wind is the reason the hare ran.