Acoustic Surveillance Systems

We associate surveillance systems primarily with video cameras, usually ones without a microphone. Acoustic surveillance seems exotic, something from the world of submarines and spy thrillers. This is not surprising: vision is a person's main channel for perceiving information, and as long as an operator sitting in front of a monitor was the key element of any surveillance system, audio could add little to the picture.

However, the spread of computers and the development of artificial intelligence mean that more and more information is now analyzed automatically. Video analytics systems can already recognize license plates, faces and human figures. Given the rather modest abilities of the human ear, computer hearing may surpass human hearing much sooner than computer vision surpasses human sight. Sound has many advantages: a microphone does not need a line of sight to the source, and it has no blind spots. A good microphone is cheaper than a good camera, and it produces far less data, which makes that data easier to store and to process in real time. There are many situations that can be recognized from video only with very non-trivial computer vision algorithms,

In recent years, acoustic surveillance technology has begun to enter everyday life. So far the most widespread are gunshot detection systems, which are installed in neighborhoods with high crime rates. In the United States, several dozen police departments have already installed such systems.

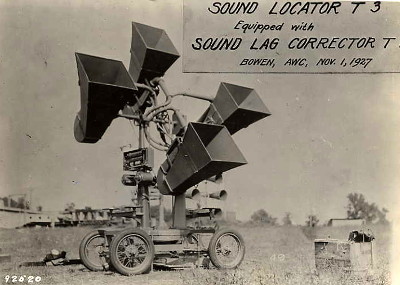

Gunshot detection systems have a long history. They were first used during the First World War to locate enemy artillery. During World War II they were used to warn of air raids, and by the end of the war they had been replaced by radar. The first such systems did not even have microphones and resembled huge stethoscopes. Modern detectors are often used to counter snipers.

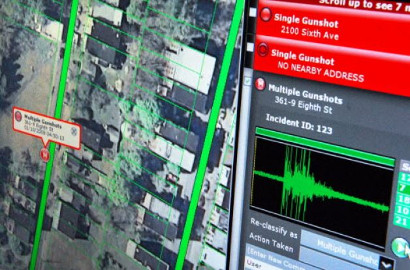

One of the leading manufacturers of civilian gunshot detection systems is ShotSpotter. A network of directional microphones is installed on rooftops, poles and other elevated points. Using triangulation, the system localizes the sound source to within a few meters. In disadvantaged neighborhoods, someone calls the police after hearing shots in only 25% of cases, and the call usually comes a few minutes later. ShotSpotter sends an alert within a few seconds, with the exact location of the shot marked on a map.
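To make the localization step more concrete, here is a minimal sketch of how a sound source can be located from the moments at which the same bang reaches several microphones. It uses time differences of arrival and a simple coarse-to-fine grid search, which is one common approach and not necessarily what ShotSpotter does. The microphone layout, the search area, the constant speed of sound and all function names are assumptions made for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed constant)


def arrival_times(source, mics, t0=0.0):
    """Simulate when a sound emitted at `source` at time t0 reaches each microphone."""
    return [t0 + math.dist(source, m) / SPEED_OF_SOUND for m in mics]


def tdoa_error(point, mics, times):
    """Sum of squared mismatches between measured and predicted
    arrival-time differences, all taken relative to microphone 0."""
    d0 = math.dist(point, mics[0])
    err = 0.0
    for mic, t in zip(mics[1:], times[1:]):
        predicted = (math.dist(point, mic) - d0) / SPEED_OF_SOUND
        measured = t - times[0]
        err += (predicted - measured) ** 2
    return err


def locate(mics, times, lo=-100.0, hi=500.0, steps=60, rounds=5):
    """Coarse-to-fine grid search over the square [lo, hi] x [lo, hi]
    for the point that best explains the measured time differences."""
    cx = cy = (lo + hi) / 2
    half = (hi - lo) / 2
    best = (cx, cy)
    for _ in range(rounds):
        step = 2 * half / steps
        candidates = [
            (cx - half + i * step, cy - half + j * step)
            for i in range(steps + 1)
            for j in range(steps + 1)
        ]
        best = min(candidates, key=lambda p: tdoa_error(p, mics, times))
        cx, cy = best
        half = 2 * step  # zoom in around the current best point
    return best


# Hypothetical layout: four microphones at the corners of a 400 m square.
mics = [(0.0, 0.0), (400.0, 0.0), (0.0, 400.0), (400.0, 400.0)]
times = arrival_times((123.4, 256.7), mics, t0=12.5)
print(locate(mics, times))
```

Only the differences between arrival times are used, so the moment of the shot itself does not need to be known. A real system would also have to cope with echoes, wind, clock drift between sensors and measurement noise, none of which this sketch models.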

All acoustic data is sent not only to the police but also to the company's servers for analysis. This is needed to reduce the number of false positives caused by firecrackers, fireworks and car backfires. Every activation of the system gives new data to the machine learning system, which learns to recognize the acoustic signatures characteristic of a gunshot. For example, unlike other similar sounds, a gunshot produces not only the bang of propellant gases escaping from the barrel, but also the shock wave of a bullet travelling at supersonic speed.

Naturally, a shot from a weapon fitted with a silencer will go unnoticed by the system but, according to the FBI, criminals use such weapons in only one case out of a hundred. Overall, the system shows good results and is already in use in several major cities in the United States, the UK and Brazil.

Additional benefits come from combining acoustic detectors with video cameras and infrared sensors. Steerable cameras can automatically turn toward the direction of the shot. Footage from such cameras, together with the exact time and place of the shot, can greatly help the investigation and serve as evidence in court.

The EU-funded EAR-IT project has much broader goals. Its network of acoustic sensors is intended not only for security, but also for analyzing vehicle and pedestrian traffic in cities and buildings and for environmental monitoring. Microphones placed at an intersection can provide fairly accurate information about the number, speed and type of passing vehicles. On hearing an approaching siren, the system can adjust the traffic lights to let the emergency vehicle through without delay.

Inside buildings, the noise level can be used to estimate the number of people in different parts of a space and, based on this data, to adjust the operation of ventilation and air conditioning systems. It can also be used to draw up acoustic maps of rooms, which help plan renovations more rationally and optimize the operation of the building. The sensor network will consist of many small, inexpensive microphones and a small number of nodes equipped with high-quality microphones and a signal processor.
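As a rough illustration of the noise-level idea, the sketch below computes the RMS level of a block of audio samples in decibels relative to full scale and maps it to a ventilation setting. The thresholds and the function names are hypothetical and are not taken from the EAR-IT project; a real deployment would calibrate them per room.

```python
import math


def rms_dbfs(samples):
    """RMS level of normalized samples (floats in -1.0..1.0),
    in decibels relative to full scale."""
    if not samples:
        raise ValueError("need at least one sample")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")  # digital silence
    return 10 * math.log10(mean_square)


def ventilation_level(level_db, thresholds=(-50.0, -35.0, -20.0)):
    """Map a noise level to a ventilation setting from 0 (idle) to 3 (maximum).
    The thresholds are made-up values used only for illustration."""
    return sum(level_db >= t for t in thresholds)


# One second of a 440 Hz tone at amplitude 0.1, sampled at 8 kHz,
# standing in for the background noise of a moderately busy room.
block = [0.1 * math.sin(2 * math.pi * 440 * n / 8000) for n in range(8000)]
level = rms_dbfs(block)
print(round(level, 1), ventilation_level(level))
```

Averaging the level over longer windows would make such a controller less sensitive to a single slammed door or a dropped object.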

The project involves several European research institutes and organizations. The long-term goal of the project is the creation of “smart” cities and buildings that not only see, but also hear everything that happens inside them.