We want to replace DevOps with a script (not really): developers, we need your opinion

We are building a cloud product for software development: a platform that makes life as simple as possible for DevOps engineers, developers, testers, team leads, and everyone else involved in the development process. This is not a product for today or even tomorrow; the need for it is only starting to take shape.

The main idea is that you get a pipeline of pre-configured tools that you can still adjust, so that all you have to do is deploy your code.

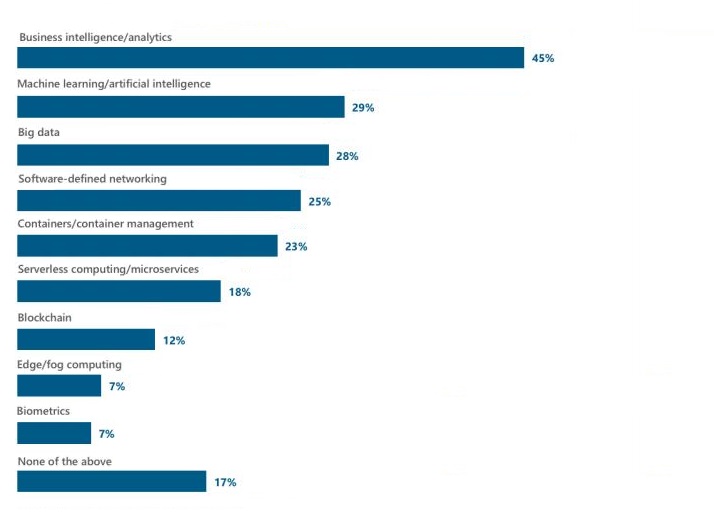

Where does such a strange idea come from? We see a clear trend: the speed at which new projects are deployed now affects the market. Business depends on how quickly releases are delivered, how quickly bugs are fixed, and how quickly new niches are occupied. At the beginning of 2018, the international research company 451 Research ran a survey on which technologies would be a priority in development. The top ten included technologies for creating and managing containers, as well as serverless application architecture and microservices, overtaking even a topic as hyped as blockchain.

And now we have a couple of questions for you.

Chart from the survey:

Is it necessary?

Using containers when developing a new product has both advantages and disadvantages. Whether to use the technology should be decided based on the task at hand. Some tasks cannot be solved without containers, and for others they are simply superfluous. For example, a low-traffic site will do perfectly well with a simple two-server architecture. But if you plan to scale up development significantly and expect a sharp increase in visitors over a short time, infrastructure built on containers is worth considering.

There are several advantages to using containers:

- Each application runs in its own container in isolation, which reduces configuration problems to a minimum.

- Application security is also achieved by isolating each container.

- Because containers share the kernel of the host operating system, no guest OS is needed, which frees up a large amount of resources.

- Also because they use the host kernel and do not rely on a hypervisor, containers require far fewer resources than other stacks.

- Again because containers do not need a guest operating system, they can easily be migrated from one server to another.

- Because each application runs in an isolated container, it is easy to move from a local machine to the cloud.

- Starting and stopping a container is very "cheap", since it uses the host operating system's kernel.

Given all of the above, we believe that containerization is currently the fastest, most resource-efficient, and most secure stack. Thanks to containers, you can have the same environment on your local machine and in production, which makes continuous integration and continuous delivery easier.

The advantages of containers are so convincing that they will definitely be used for a long time.

What does the cloud have to do with development?

In a developer's ideal world, any committed code should roll out to production at the touch of a button, as if by magic.

Here is what we had: a GitLab instance holding the tasks and the source code, and GitLab Runner for whenever something needs to be built. We work by Git Flow, with every feature on its own branch. When a branch is pushed to the repository, GitLab runs the tests on its code. If the tests pass, the developer of the branch can open a merge request, which is effectively a request for code review. After the review, the branch is accepted and merged into the dev branch, and the tests are run on it again.

When deploying, GitLab Runner builds the Docker container and rolls it out to the staging server, where you can click around and enjoy the result. This is where the first bottleneck appears: we check the code by hand for compliance with the expected functionality, and this is the first thing we are fixing. After that we merge the code into the release branch and roll out from it a separate pre-production version of our solution, which our business customers look at. Once the pre-prod version is deployed, we roll it into production, and it goes out to the production nodes.

Release notes and bug reports are generated automatically. A build used to take more than 30 minutes; now it takes an order of magnitude less. We have picked a set of tools for ourselves, and now we are thinking about how to turn it into a similar ready-made SaaS.

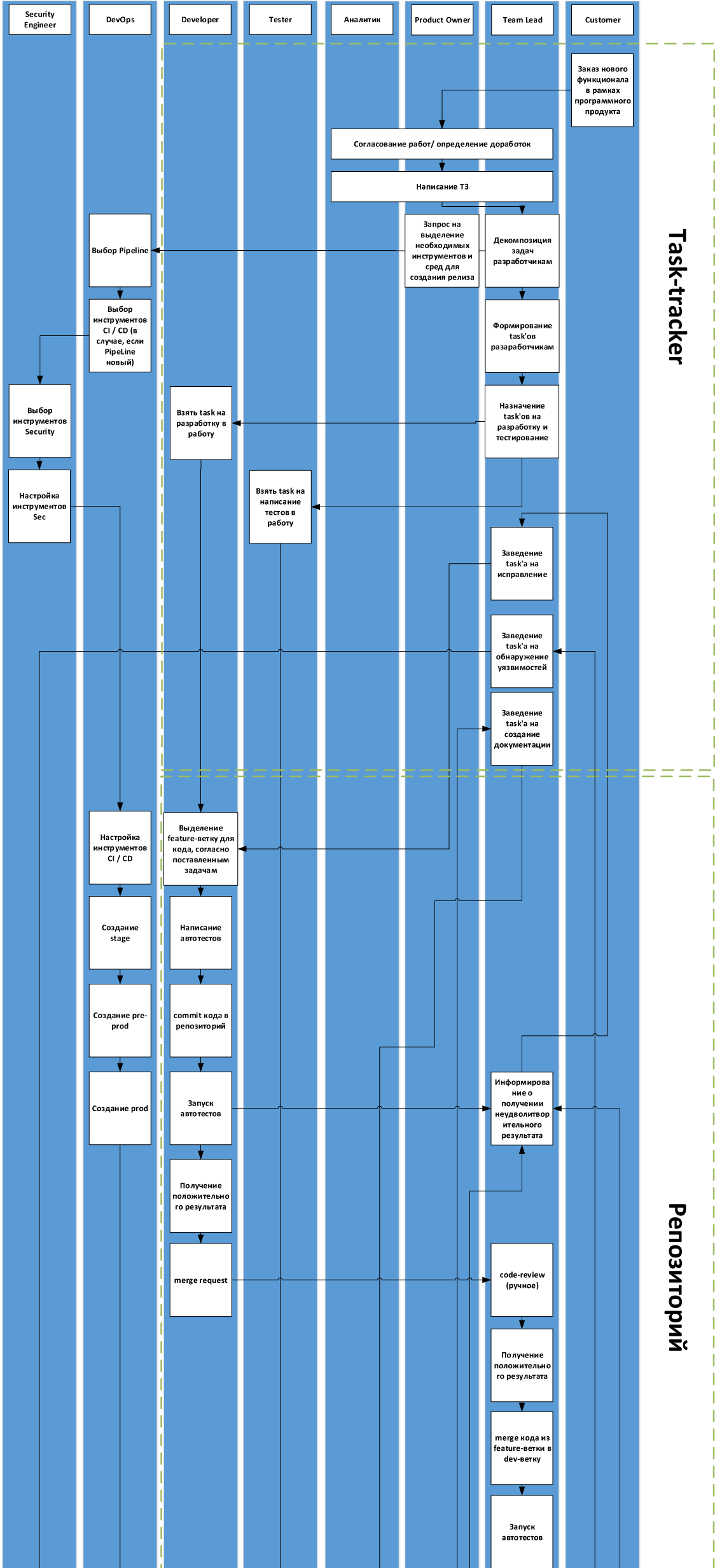

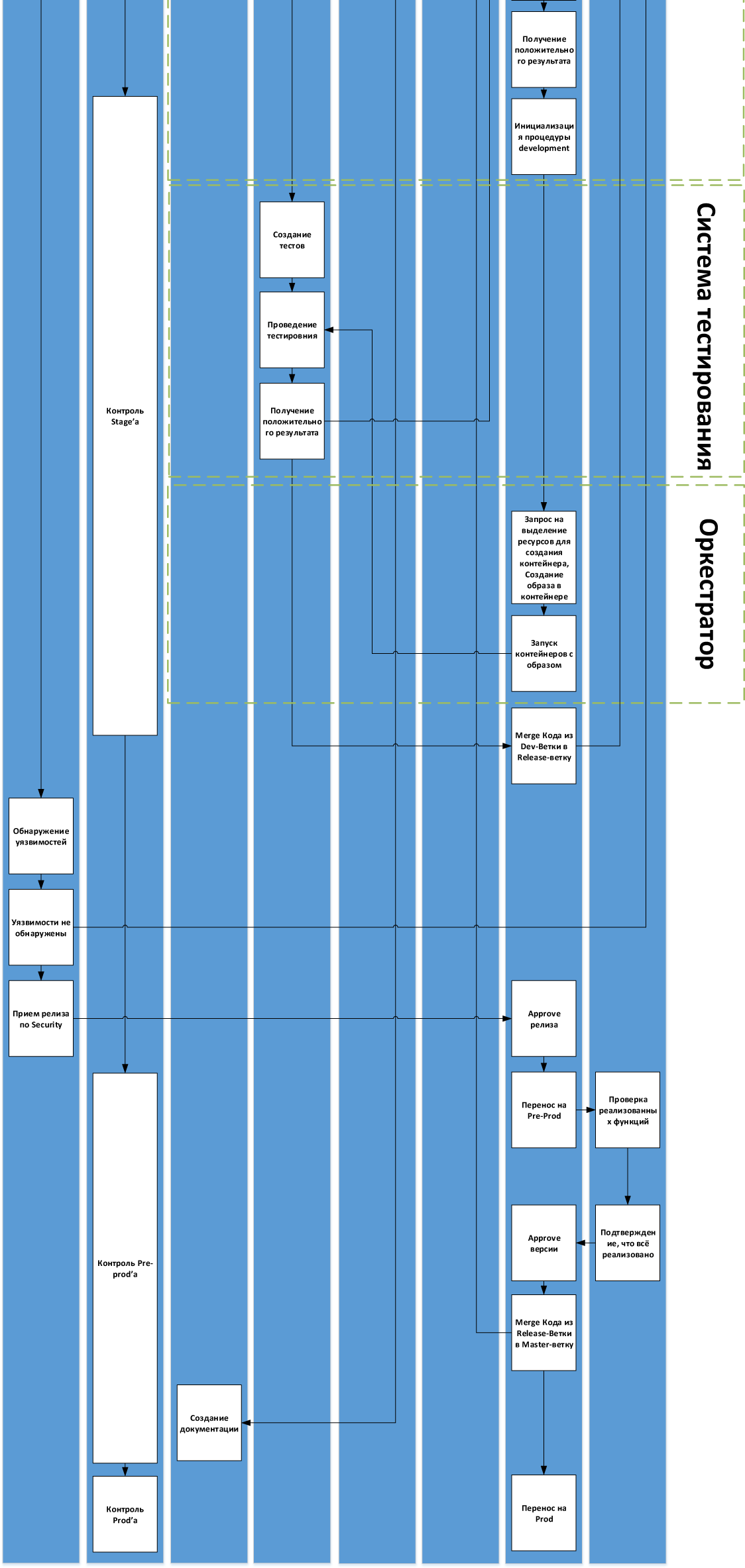

For reference, our typical release process is as follows:

- Setting tasks for the implementation of new features

- Locating the code to change

- Making changes according to the tasks and writing automated tests before the build

- Checking the code with both manual and automated tests

- Merging the code into the dev branch

- Building the dev branch

- Deploying the test infrastructure

- Deploying the release to the test infrastructure

- Running tests: functional, integration, etc.

- If bugs are found, fixing them right away in the dev branch

- Merging the dev branch into the master branch
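The steps above can be read as an ordered chain in which a failure stops everything that follows. Below is a minimal sketch of that idea, not our actual tooling: the stage names mirror the list, while `run_pipeline`, `example_stages`, and the lambda jobs are hypothetical stand-ins for real build, deploy, and test jobs.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a job that reports success or failure.
# The jobs used here are hypothetical placeholders, not real CI jobs.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first one that fails.

    Returns (success, names of the stages that were executed).
    """
    executed: List[str] = []
    for name, job in stages:
        executed.append(name)
        if not job():
            # A failed stage means the fix goes back into the dev branch.
            return False, executed
    return True, executed


def example_stages(tests_pass: bool) -> List[Stage]:
    """Build the second half of the list above with stubbed jobs."""
    return [
        ("merge into dev", lambda: True),
        ("build dev", lambda: True),
        ("deploy test infrastructure", lambda: True),
        ("deploy release to test infrastructure", lambda: True),
        ("run tests", lambda: tests_pass),
        ("merge dev into master", lambda: True),
    ]


if __name__ == "__main__":
    ok, executed = run_pipeline(example_stages(tests_pass=False))
    print(ok, executed[-1])
```

With failing tests the run stops at the "run tests" stage and the merge into master never happens, which is the behavior the process is meant to guarantee.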

Here is a diagram with details for our process:

So here is the first question: please tell us where you have run into pitfalls, and how universal (or not) this scheme is. If your workflow is very different from this one, please add a few words about why.

What kind of product are we planning?

We decided not to copy Amazon here, but to build our product around the specifics of our market. We should say right away that all of these conclusions are our subjective opinion, based on our own analysis. We are open to constructive dialogue and ready to change the product roadmap.

Having analyzed Amazon's existing pipeline, we concluded that it offers tremendous capabilities but is aimed at very large corporate teams. It seemed to us that rolling out a microservice in Docker there takes an unreasonably long time, longer than, for example, rolling it out to Kubernetes, because you have to set up internal configs, define internal conventions, and so on, and all of this takes a long time to figure out.

On the other hand there is, for example, Heroku, where you can deploy in one click. But projects tend to be fairly large and to depend on third-party microservices, so at some point you need to roll out custom Docker images together with DBaaS services, and none of this fits Heroku well: it is either expensive or inconvenient.

We want to find another option, a golden mean: depending on the type of project and its tasks, to give you a set of pre-configured tools already integrated into a single pipeline, while leaving room both for deep changes to the settings and for replacing the tools themselves.

So what will it be?

An ecosystem that includes a portal and a set of tools and services that minimize the interaction of developers with the infrastructure level. You define environment parameters that are not tied to the physical environment:

- Development environment (configuration management system, task assignment system, repository for code and artifacts, task tracker)

- CI - Continuous Integration (build, infrastructure, and orchestration)

- QA - Quality Assurance (testing, monitoring, and logging)

- Staging - integration environment / pre-release environment

- Production - production environment

When choosing tools, we focused on the best practices in the market.

We will build the Stage and Prod infrastructure on Docker and Kubernetes, with parallel features.

This will be done iteratively. For the first stage we plan a service that takes a Dockerfile from the project, builds the required container, and deploys it to Kubernetes.
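To make the first stage more concrete, here is a minimal sketch of what "Dockerfile in, Kubernetes out" boils down to on the command line. It only assembles the commands and does not run them. The function name, the image tag, and the deployment and container names are hypothetical, and the sketch assumes an existing Kubernetes Deployment whose image is updated in place; the real service may well work differently.

```python
from typing import List


def build_and_deploy_commands(
    image: str,
    deployment: str,
    container: str,
    context_dir: str = ".",
) -> List[List[str]]:
    """Return the CLI calls for build, push, and rollout, in order.

    Assumes the Dockerfile sits in context_dir and that a Deployment
    named `deployment` with a container named `container` already exists.
    """
    return [
        # Build the image from the project's Dockerfile.
        ["docker", "build", "-t", image, context_dir],
        # Push it to the registry encoded in the image name.
        ["docker", "push", image],
        # Point the existing Deployment at the new image.
        ["kubectl", "set", "image", f"deployment/{deployment}", f"{container}={image}"],
    ]


if __name__ == "__main__":
    # All names below are made up for illustration.
    for command in build_and_deploy_commands(
        image="registry.example.com/shop/api:1.0.0",
        deployment="api",
        container="api",
    ):
        print(" ".join(command))
```

Returning the commands as lists rather than running them keeps the sketch free of side effects; each list could be handed to `subprocess.run` as is.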

We also plan to pay special attention to a service for monitoring the development process and continuous delivery. What do we mean by that? The ability to build a hierarchical KPI model with indicators such as unit-test coverage (%), average time to resolve an incident or defect, average time from commit to delivery, and so on.

The service collects source data from different systems: test management systems, task management, CI/CD components, infrastructure monitoring tools, and so on.

Most importantly, it presents the data in a form suitable for quick analysis: dashboards with drill-down and comparative analysis of indicators.
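As an illustration of the indicators mentioned above, here is a minimal sketch that computes three of them and groups them into a two-level report. The `Interval` type, the function names, and the grouping into "quality" and "delivery" are our assumptions for the example; the real KPI hierarchy and data sources are still to be designed.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Interval:
    """A start/end pair: incident opened/resolved, or commit/delivery."""
    start: datetime
    end: datetime

    @property
    def hours(self) -> float:
        return (self.end - self.start).total_seconds() / 3600.0


def mean_hours(intervals: List[Interval]) -> float:
    """Average duration in hours; 0.0 when there is no data yet."""
    if not intervals:
        return 0.0
    return sum(i.hours for i in intervals) / len(intervals)


def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Unit-test coverage as a percentage; 0.0 for an empty code base."""
    return 100.0 * covered_lines / total_lines if total_lines else 0.0


def kpi_report(
    covered_lines: int,
    total_lines: int,
    incidents: List[Interval],
    deliveries: List[Interval],
) -> Dict[str, Dict[str, float]]:
    """Group the indicators into a small hierarchy (hypothetical grouping)."""
    return {
        "quality": {
            "unit_test_coverage_pct": coverage_percent(covered_lines, total_lines),
            "mean_hours_to_fix": mean_hours(incidents),
        },
        "delivery": {
            "mean_hours_commit_to_delivery": mean_hours(deliveries),
        },
    }


if __name__ == "__main__":
    t0 = datetime(2018, 1, 1)
    incidents = [Interval(t0, t0 + timedelta(hours=2)), Interval(t0, t0 + timedelta(hours=4))]
    deliveries = [Interval(t0, t0 + timedelta(hours=6))]
    print(kpi_report(80, 100, incidents, deliveries))
```

A nested dictionary like this maps directly onto a dashboard with drill-down: the top-level keys are the summary tiles, and the inner keys are what you see after clicking through.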

What we want to do

We would really like to hear what you think about all of this and about our planned steps. For now they are:

- Infrastructure and Orchestration - Docker & Kubernetes

- Task assignment, storage of code and artifacts, task tracker - GitLab, Redmine, S3

- Production and Development - Chef / Ansible

- Build - Jenkins

- Testing - Selenium, LoadRunner

- Monitoring and Logging - Prometheus & ELK

- By the way, how do you feel about having a choice within the platform: pick Jenkins if you want it, or GitLab Runner if you don't?

- Or does it not matter what is inside, as long as everything gets built, tested, and deployed?
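To show what such a choice might look like from the user's side, here is a minimal sketch of defaults with optional overrides. The tool names come from the list above; the `OPTIONS` table, the role names, the `select_tools` function, and the choice of which tool is the default for each role are assumptions made for the example.

```python
from typing import Dict, List, Optional

# For each role, the first entry is treated as the default (an assumption).
OPTIONS: Dict[str, List[str]] = {
    "build": ["Jenkins", "GitLab Runner"],
    "configuration": ["Ansible", "Chef"],
    "monitoring": ["Prometheus"],
    "logging": ["ELK"],
}


def select_tools(overrides: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Start from the defaults and apply the user's overrides.

    Raises KeyError for an unknown role and ValueError for a tool
    that is not offered for that role.
    """
    chosen = {role: tools[0] for role, tools in OPTIONS.items()}
    for role, tool in (overrides or {}).items():
        if role not in OPTIONS:
            raise KeyError(f"unknown role: {role}")
        if tool not in OPTIONS[role]:
            raise ValueError(f"{tool} is not available for {role}")
        chosen[role] = tool
    return chosen


if __name__ == "__main__":
    print(select_tools())
    print(select_tools({"build": "GitLab Runner"}))
```

Someone who does not care what is inside calls `select_tools()` and gets a working set; someone who does care overrides only the roles that matter to them.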

How can you help?

The product will be built for domestic developers. If you tell us now how to do it best, your suggestion will most likely make it into the release.

Tell us right now which stacks you use. You can do it in the comments or by email at team@ts-cloud.ru.

UPD: for convenience, we have made a short questionnaire as a Google Form - here.

We will keep you up to date as development goes on, and at some point we will give the participants who helped us access to the beta (in practice, free access to good cloud computing resources in exchange for feedback).