The LinguaLeo Chronicles: How We Built Dialogs in English with Node.js and DynamoDB

LinguaLeo users start learning English in the Jungle, a library of thousands of materials of varying difficulty, format and topic, where they learn step by step to hear and understand native speakers and build their vocabulary. Those who need grammar go to Courses. Vocabulary grows not only through the Jungle, by adding unfamiliar words to the Personal Dictionary, but also through ready-made Word Sets available for self-study. In the Communication section, users can hold real-time Dialogs in English with other LinguaLeo users to practice the language, choosing the topics they want to talk about. English only!

To build Dialogs in English, we used Node.js and DynamoDB, all running on AWS. Here is what we learned along the way.

█ Why Node.js?

To give our users every opportunity to communicate, we chose Node.js. Why? Node.js is well suited to working with streams, and a huge number of modules exist for it. In addition, a competent JavaScript programmer working with Node can implement the entire task, not just its server side (unlike, say, an Erlang specialist).

For transport between the server and the client we used WebSockets, the fastest way to deliver data.

To solve the cross-platform problem, we chose the SockJS library: first, because its client follows the W3C WebSocket API, and second, because it suits our needs well.

Now to the setup. We run the Node.js daemon on Amazon EC2. The application is deployed via git, and the forever module handles logging and restarts. Amazon also offers a very useful service, Amazon CloudWatch: it tracks the system's key metrics, and its main advantage is configurable alerts, so you are notified only about what really matters. For detailed insight into the application's state we use nodetime.

We use the dynode module as our DynamoDB driver. It has performed well, although its incomplete documentation was a fly in the ointment. Amazon's official Node.js SDK came out later and is still in Developer Preview. In such a

█ Subtleties of Node.js

We paid special attention to user authorization on the dialog server, which authorizes users by their cookies. In terms of speed this approach is quite "expensive", but it is stable and secure, and it keeps our service-oriented architecture independent.

How does it work? The user's cookies are sent over the WebSocket connection to the dialog server. The dialog server verifies the cookie by forwarding it in a request to our API, which responds with the user's data. If the data comes back without errors, the dialog server authorizes the user and sends the data to the client.
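The flow above can be sketched in Node.js roughly as follows. This is a minimal illustration under assumptions, not our production code: `authorizeByCookie`, `lookupUser` and the in-memory session map are hypothetical names, and `lookupUser` stands in for the HTTP request the dialog server sends to our API.

```javascript
// Sketch of cookie-based authorization on the dialog server.
// ASSUMPTION: `lookupUser` is a hypothetical stand-in for the request to our API;
// in production it would send the cookie over HTTP and parse the user data.

async function authorizeByCookie(cookie, lookupUser) {
  // Reject obviously empty input without a network round trip.
  if (typeof cookie !== "string" || cookie.trim() === "") {
    return null;
  }
  try {
    // One round trip to the API: this is the "expensive" part.
    const user = await lookupUser(cookie);
    // Only a response carrying a user id counts as a successful check.
    return user && user.id !== undefined ? user : null;
  } catch (err) {
    // Treat API errors as "not authorized" instead of crashing the daemon.
    return null;
  }
}

// Hypothetical in-memory API used for demonstration only.
const sessions = new Map([["session=abc123", { id: 42, name: "leo" }]]);
async function fakeLookupUser(cookie) {
  return sessions.get(cookie) || null;
}

// Usage: the first message on a new connection carries the cookie.
authorizeByCookie("session=abc123", fakeLookupUser).then((user) => {
  console.log(user ? `authorized user ${user.id}` : "rejected");
});
```

Keeping the check behind a single function makes it easy to swap the lookup for a cached one later, at the cost of weakening the "always ask the API" guarantee.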

We know that many people use LinguaLeo not only at home but also at work. Corporate firewalls are often configured so strictly that every port except the standard ones is blocked. With that in mind, we serve the WebSocket connection on port 443 (without tying it to HTTPS), which sidesteps many potential network problems.

█ Features of DynamoDB

A little over a year ago, Amazon released Amazon DynamoDB, a distributed NoSQL database. While designing the system architecture, we had to choose between MongoDB and DynamoDB. We ruled out MongoDB because of the administration overhead and chose Amazon's product, since it requires no administration on our side. And, of course, we were curious to try the technology ourselves for future projects.

Working with DynamoDB turned out to be very different from anything we were used to. Because it is a hosted service, it taught us to account for the time of every HTTP request: within an Amazon data center, the average query takes about 20 milliseconds. Hence our conclusion: all reads should go through indexes (Query), which is faster and cheaper, while Scan should be reserved for analytics and migrations.
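To illustrate why reads should go through the key, here is a toy in-memory model in plain JavaScript; it is not DynamoDB or its API, and `ToyTable` with its methods is hypothetical. The point is only that a Query reads the items under one hash key, while a Scan reads the whole table and filters afterwards, so its cost grows with the size of the table.

```javascript
// Toy in-memory model (NOT DynamoDB itself): items are grouped by hash key,
// and each read reports how many items it had to touch.

class ToyTable {
  constructor(hashKeyName) {
    this.hashKeyName = hashKeyName;
    this.partitions = new Map(); // hash key value -> array of items
  }

  put(item) {
    const key = item[this.hashKeyName];
    if (!this.partitions.has(key)) this.partitions.set(key, []);
    this.partitions.get(key).push(item);
  }

  // Query: jump straight to one partition by its hash key.
  query(hashValue) {
    const items = this.partitions.get(hashValue) || [];
    return { items, itemsRead: items.length };
  }

  // Scan: walk every item in the table, then filter.
  scan(predicate) {
    let itemsRead = 0;
    const items = [];
    for (const partition of this.partitions.values()) {
      for (const item of partition) {
        itemsRead += 1;
        if (predicate(item)) items.push(item);
      }
    }
    return { items, itemsRead };
  }
}

// Usage: 1,000 users with 5 dialogs each.
const userDialogs = new ToyTable("userId");
for (let u = 0; u < 1000; u++) {
  for (let d = 0; d < 5; d++) userDialogs.put({ userId: u, dialogId: `${u}-${d}` });
}
console.log(userDialogs.query(7).itemsRead); // → 5
console.log(userDialogs.scan((it) => it.userId === 7).itemsRead); // → 5000
```

Both calls return the same five dialogs, but the scan touches a thousand times more data to find them.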

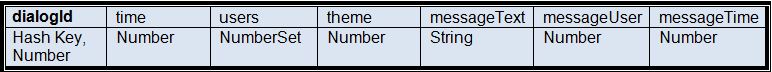

█ Data structure

Dialogs - dialog metadata plus a cached copy of the last message.

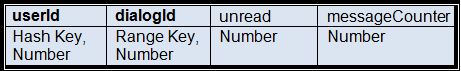

UserDialogs - each user's list of dialogs, with cached data and an unread-message counter.

Messages - all user messages.

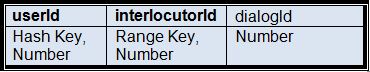

User2User - the participants of each dialog.
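A rough sketch of how these tables might be keyed and what gets written when two users start a dialog. The article only names the tables, their purpose and the messageCounter attribute; every key and other attribute name below (`dialogId`, `userId`, `partnerId`, `createdAt`, `lastMessage`, `participants`) is an assumption for illustration.

```javascript
// Hypothetical key schemas (hash key, optional range key) for each table.
const tables = {
  Dialogs: { hashKey: "dialogId" },
  UserDialogs: { hashKey: "userId", rangeKey: "dialogId" },
  Messages: { hashKey: "dialogId", rangeKey: "createdAt" },
  User2User: { hashKey: "userId", rangeKey: "partnerId" },
};

// Build the items written when two users start a new dialog (hypothetical shapes).
function newDialogItems(dialogId, userA, userB, now) {
  return {
    // Dialog metadata plus the cached last message (none yet).
    Dialogs: [{ dialogId, participants: [userA, userB], createdAt: now, lastMessage: null }],
    // One row per user, each with its own unread counter.
    UserDialogs: [
      { userId: userA, dialogId, messageCounter: 0 },
      { userId: userB, dialogId, messageCounter: 0 },
    ],
    // Both directions, so either user can find the dialog with the other.
    User2User: [
      { userId: userA, partnerId: userB, dialogId },
      { userId: userB, partnerId: userA, dialogId },
    ],
    Messages: [], // filled as users write
  };
}

// Check that an item carries the key attributes its table requires.
function hasKeys(tableName, item) {
  const { hashKey, rangeKey } = tables[tableName];
  return item[hashKey] !== undefined && (rangeKey === undefined || item[rangeKey] !== undefined);
}

// Usage
const items = newDialogItems("d1", 1, 2, 1700000000);
for (const [table, list] of Object.entries(items)) {
  console.log(table, list.every((it) => hasKeys(table, it)));
}
```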

Example 1

Fetching all of a user's dialogs for the "My Dialogs in English" page is implemented in an unusual way. On this page, users see all their dialogs, the total number of unread messages, and the last message in each dialog.

The data is fetched in two steps. First, a Query on the UserDialogs table returns all of the user's dialogIds. Second, the dialogs themselves are fetched with BatchGetItem. There is one nuance: BatchGetItem returns at most 100 items per call, so a user with 242 dialogs requires 3 requests, which takes about 70-100 milliseconds. To speed this up further, we cache the dialog data in ElastiCache and update it with every new message.
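The two-step read can be sketched like this, assuming hypothetical wrappers: `db.queryDialogIds` for the Query on UserDialogs, `db.batchGetDialogs` for a single BatchGetItem call on Dialogs, and a Map-like `cache` in place of ElastiCache. The only figure taken from DynamoDB itself is the 100-item limit per BatchGetItem call.

```javascript
const BATCH_GET_LIMIT = 100; // BatchGetItem returns at most 100 items per call

// Split an array into consecutive chunks of at most `size` elements.
function chunk(items, size) {
  const chunks = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

function loadUserDialogs(userId, db, cache) {
  const cached = cache.get(userId);
  if (cached !== undefined) return cached; // served from cache, no DynamoDB calls

  const dialogIds = db.queryDialogIds(userId); // step 1: Query on UserDialogs
  const dialogs = [];
  for (const batch of chunk(dialogIds, BATCH_GET_LIMIT)) {
    dialogs.push(...db.batchGetDialogs(batch)); // step 2: one BatchGetItem per batch
  }
  cache.set(userId, dialogs); // must be refreshed whenever a new message arrives
  return dialogs;
}

// Usage with a hypothetical in-memory client that counts requests.
const calls = { query: 0, batchGet: 0 };
const ids = Array.from({ length: 242 }, (_, i) => `d${i}`);
const db = {
  queryDialogIds: () => { calls.query++; return ids; },
  batchGetDialogs: (batch) => { calls.batchGet++; return batch.map((id) => ({ dialogId: id })); },
};
const cache = new Map();
loadUserDialogs(1, db, cache);
console.log(calls); // → { query: 1, batchGet: 3 }
```

A production version would also need to retry any `UnprocessedKeys` returned by BatchGetItem, which DynamoDB uses when it cannot serve the whole batch in one call.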

Example 2

Adding a new message to a dialog is also non-trivial. Database latency matters less to us here, because we do not wait for the DynamoDB writes to complete. What matters is that we have to perform a large number of writes, and that is what makes subsequent reads fast. First, we write the new message to the Messages table (PutItem), then we update the cached last message in the Dialogs table (UpdateItem). Next, we increment messageCounter in the UserDialogs table for each user (UpdateItem). After all, anything can happen: one of the users may suddenly delete the dialog and reset the message counter. In total that is 4 requests, taking about 70-100 milliseconds. Unfortunately, DynamoDB does not support transactions across such writes, which is a serious limitation for processes where data integrity is critical.
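Here is a sketch of that write path, assuming hypothetical `db.putItem` and `db.updateItem` wrappers that each stand for one DynamoDB request and return a Promise; the `set`/`add` change format is also made up for the sketch. It issues the four requests for a two-person dialog without waiting for them, and only reports failures afterwards, since there is no transaction to roll back.

```javascript
function addMessage(db, dialog, message) {
  const writes = [
    // 1. PutItem: store the message itself in Messages.
    db.putItem("Messages", {
      dialogId: dialog.id,
      createdAt: message.createdAt,
      authorId: message.authorId,
      text: message.text,
    }),
    // 2. UpdateItem: cache the last message on the Dialogs item.
    db.updateItem("Dialogs", { dialogId: dialog.id }, { set: { lastMessage: message.text } }),
    // 3-4. UpdateItem: increment messageCounter in UserDialogs for each participant.
    ...dialog.participants.map((userId) =>
      db.updateItem("UserDialogs", { userId, dialogId: dialog.id }, { add: { messageCounter: 1 } })
    ),
  ];
  // The sender is not kept waiting: this resolves later with the number of failed
  // writes. With no transaction, a failure leaves the tables partly updated.
  return Promise.allSettled(writes).then(
    (results) => results.filter((r) => r.status === "rejected").length
  );
}

// Hypothetical in-memory client that logs each request and applies increments.
const requestLog = [];
const counters = new Map();
const db = {
  putItem(table, item) {
    requestLog.push(`PutItem ${table}`);
    return Promise.resolve();
  },
  updateItem(table, key, changes) {
    requestLog.push(`UpdateItem ${table}`);
    for (const [attr, delta] of Object.entries(changes.add || {})) {
      const id = JSON.stringify([table, key, attr]);
      counters.set(id, (counters.get(id) || 0) + delta);
    }
    return Promise.resolve();
  },
};

addMessage(db, { id: "d1", participants: [1, 2] }, { authorId: 1, text: "Hello!", createdAt: 1 });
console.log(requestLog.length); // → 4
```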

Product requirements change often, and sometimes the data structure has to change with them. In relational databases this is handled with ALTER TABLE, but DynamoDB has nothing of the kind, so changing the schema is very expensive: you have to either recreate tables or use Elastic MapReduce, and you pay for both. A lot of data means a lot of money. To cope with this, we had to read all the data with Scan, 1 megabyte at a time, and write it to a new table. It takes a long time, but that is the price of not needing a DBA.
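The copy-by-Scan migration can be sketched as a pagination loop. `migrateTable`, `scanPage`, `writeItem` and the in-memory fake are hypothetical; the `Items`, `LastEvaluatedKey` and `ExclusiveStartKey` names follow DynamoDB's Scan pagination, where a single page returns at most 1 MB of data. The fake uses a two-item page instead of 1 MB.

```javascript
// Copy every item from the old table into the new one, page by page.
async function migrateTable(scanPage, writeItem, transform) {
  let startKey; // undefined on the first page
  let copied = 0;
  do {
    const page = await scanPage({ ExclusiveStartKey: startKey });
    for (const item of page.Items) {
      await writeItem(transform(item)); // one write per item into the new table
      copied += 1;
    }
    startKey = page.LastEvaluatedKey; // absent once the last page has been read
  } while (startKey !== undefined);
  return copied;
}

// Hypothetical in-memory fake of Scan: pages of 2 items stand in for 1 MB pages.
const oldTable = [{ id: 1, name: "a" }, { id: 2, name: "b" }, { id: 3, name: "c" }];
const PAGE_SIZE = 2;
async function fakeScanPage({ ExclusiveStartKey }) {
  const start = ExclusiveStartKey === undefined ? 0 : ExclusiveStartKey.lastIndex + 1;
  const Items = oldTable.slice(start, start + PAGE_SIZE);
  const end = start + PAGE_SIZE;
  return end < oldTable.length ? { Items, LastEvaluatedKey: { lastIndex: end - 1 } } : { Items };
}

// Usage: add a derived attribute while copying.
const newTable = [];
migrateTable(fakeScanPage, async (item) => { newTable.push(item); }, (item) => ({ ...item, displayName: item.name.toUpperCase() }))
  .then((n) => console.log(`copied ${n} items`)); // → copied 3 items
```

Writing items one at a time keeps the sketch simple; BatchWriteItem would cut the number of requests on a real migration.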

█ Impressions of DynamoDB

Our experiment with DynamoDB was a success. It is an amazing database that scales easily: you work with it and forget about administration. But remember that in return it demands careful thought at the architecture design stage; otherwise you can run into all sorts of unexpected pitfalls. We recommend DynamoDB when you have a lot of non-critical data that you need to access often. We use it, and we like it :)

***

Chat easily and naturally in Dialogs in English, practice your English in good company, and join our team!

Follow our news on Facebook, Vkontakte and Twitter, share your impressions and have fun. Freedom of communication is great!

LinguaLeo Team
