BYOD in container: virtualizing Android. Part two

I continue the story of the Android virtualization technology developed at Parallels Labs by a group of students of the Department of MIIT of the Academic University of St. Petersburg as part of their master's work. Yesterday's article covered the general concept of virtualizing Android-based devices. Today's post deals with virtualizing the telephony, sound, and user input systems.

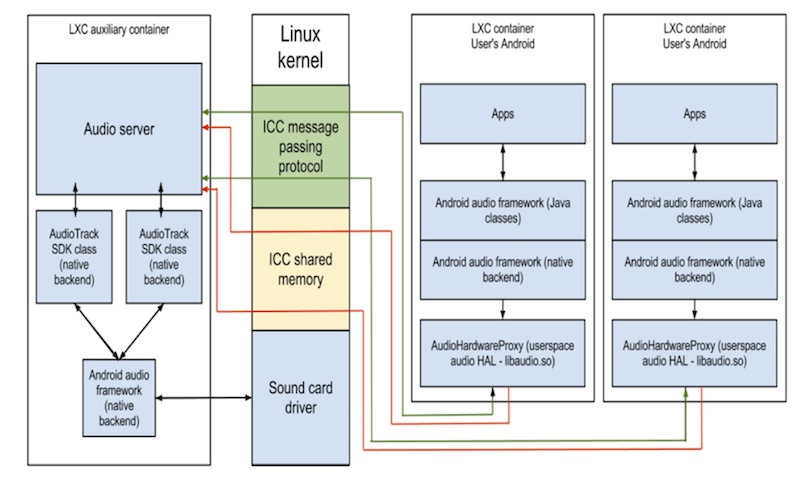

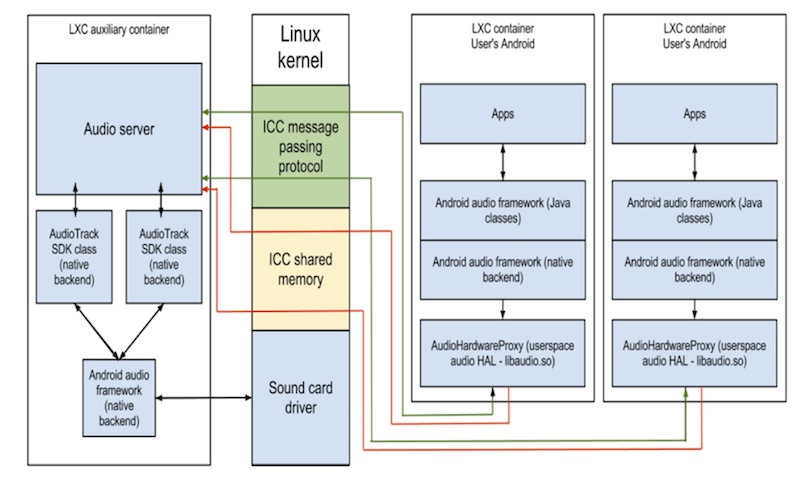

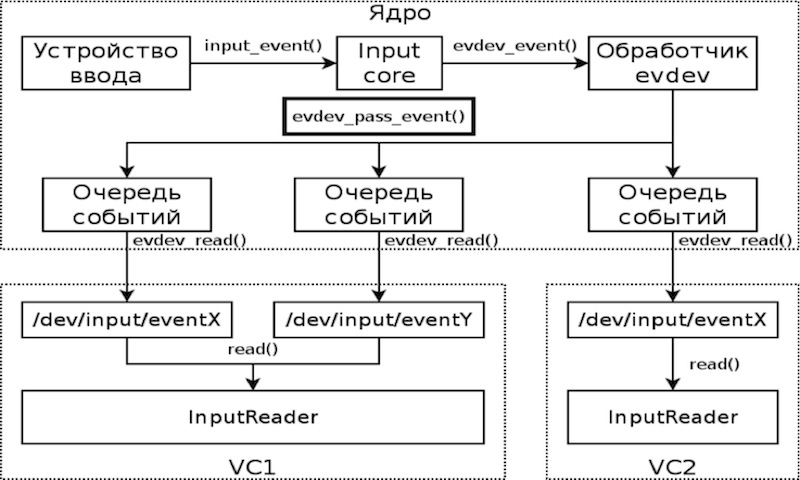

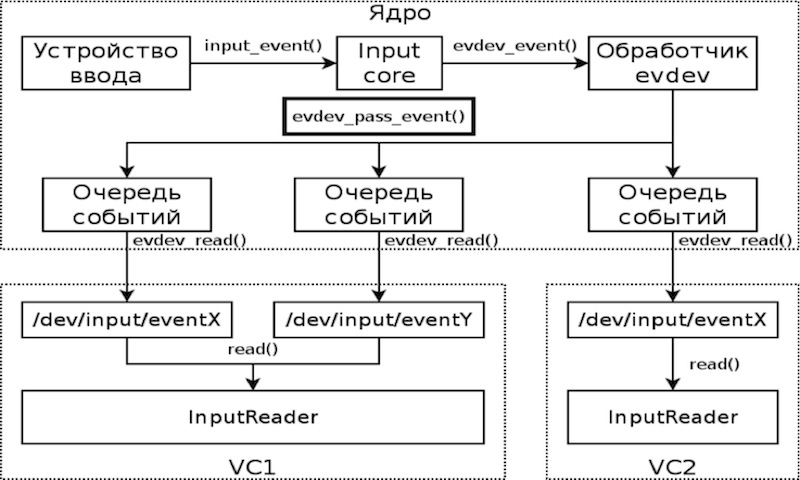

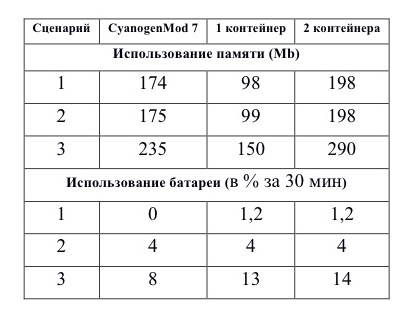

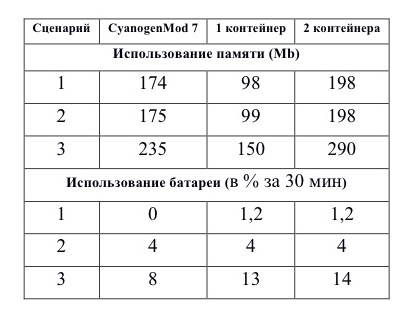

Audio and telephony were virtualized in user space. The general idea is to implement a proxy pair (server and client) at the level of a device-independent interface in the Android stack. The proxy server runs in a separate container that holds the original operating system software stack and executes client requests on the physical devices. A proxy client runs in each container that hosts a user's Android.

Audio device

A typical scenario for using an audio device with several Androids running is listening to music in one OS while a game running in another plays its own sound. The user would probably like to hear the sound of every running Android, but the physical sound device is not designed for that. When a second OS tries to use it, the OS reports an error and sometimes cannot play sound until the next reboot. Virtualizing the audio device should make it possible to hear the sound of all Androids at once.

The audio device must also interact with the telephony service, so that during a phone call it records the user's voice and passes it to the cellular network, and plays the other party's voice received from the cellular network.

Proxy client

The hardware-independent interface we replace is AudioHardwareInterface, described in the Android Platform Developer's Guide. It is the lowest-level and simplest hardware-independent audio interface. In essence, it is a stream into which sound is written for playback. Besides the sound stream that Android plays, its implementation also has access to other useful information.

The substitution could be done at a higher level, for example by replacing part of the Android audio framework. But analysis showed that the audio framework is much more complex than AudioHardwareInterface. Therefore, the proxy client for the audio device is an implementation of AudioHardwareInterface.

The main job of the proxy client is to send the Android audio stream and other useful information to the proxy server, which plays the audio streams of all Androids. The streams are uncompressed, so copying them to the proxy server could add noticeable overhead. We avoid that overhead by passing audio streams through inter-container shared memory. Informational and control messages go through the inter-container messaging mechanism.
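
As a rough illustration of the bulk-data path, here is a minimal sketch of a single-producer, single-consumer ring buffer. The article does not describe the actual inter-container shared memory mechanism, so a `bytearray` stands in for the shared region, and the class and method names are hypothetical.

```python
class PcmRing:
    """Single-producer, single-consumer byte ring over a shared buffer."""

    def __init__(self, buf):
        self.buf = memoryview(buf)   # stand-in for a shared memory mapping
        self.size = len(self.buf)
        self.head = 0                # total bytes written by the proxy client
        self.tail = 0                # total bytes read by the proxy server

    def write(self, data):
        """Copy as much of data as fits; return the number of bytes accepted."""
        free = self.size - (self.head - self.tail)
        n = min(len(data), free)
        for i in range(n):
            self.buf[(self.head + i) % self.size] = data[i]
        self.head += n
        return n

    def read(self, max_bytes):
        """Return up to max_bytes of pending audio data."""
        n = min(max_bytes, self.head - self.tail)
        out = bytes(self.buf[(self.tail + i) % self.size] for i in range(n))
        self.tail += n
        return out
```

Only the read and write positions would need to travel over the messaging channel; the samples themselves stay in the shared region.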

Proxy server

The main task of the proxy server is to mix the sound streams received from the proxy clients and play the result on the physical audio device. To keep the proxy server as portable as possible, mixing and playback should not depend on the device. The Android audio framework interfaces are hardware independent, and the framework already knows how to mix audio streams. However, it does not expose its mixer to the outside, so to use it you either have to integrate into the audio framework or use the mixer implicitly. The architecture of our sound virtualization solution is shown in the figure.

The simplest way to mix and play sound in Android is to use the audio classes from the Android SDK. In our solution, each user's sound stream is played by a separate object of the AudioTrack class from the Android SDK. This lets the Android audio framework mix all the streams being played and send the result to the device's speaker or headphone output.

The classes from the Android SDK are written in Java, and using them requires running the Dalvik virtual machine. Starting Dalvik on Android effectively means starting the entire OS, because of the way the system bootstraps. This is a problem: a running Android takes 200–250 MB of RAM and consumes computing resources, an unacceptable overhead for mixing and playing sound streams. We avoided it: instead of the Java classes from the Android SDK, we use their native backend written in C++. So there is no need to start Dalvik, and therefore no need to start a full Android container.

Completely native applications, such as the proxy server, cannot be registered in the Android permission system. As a result, the services the proxy server called silently ignored its requests for lack of permissions. We solved this by removing the permission checks in these services. We did so with a clear conscience, since the removal does not affect security: no third-party applications ever run in the proxy server's container, which is entirely under the control of the virtualization technology.
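
For intuition, mixing uncompressed streams amounts to summing samples and clamping the result. The sketch below assumes signed 16-bit PCM at a common sample rate. It is not the Android mixer's code: in the solution described here the framework does this work itself, one AudioTrack per user.

```python
import array

def mix_pcm16(streams):
    """Mix equal-rate signed 16-bit PCM streams by summing with saturation."""
    length = max((len(s) for s in streams), default=0)
    mixed = array.array("h", [0] * length)
    for i in range(length):
        # Streams that have already ended contribute silence.
        total = sum(s[i] for s in streams if i < len(s))
        # Clamp to the 16-bit range instead of letting the sum wrap around.
        mixed[i] = max(-32768, min(32767, total))
    return mixed
```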

Sound level control

The user probably wants to adjust the volume of each Android separately. It turned out that Android 2.x has no abstraction of a hardware mixer, that is, mixing is done on the CPU. Since each Android mixes its sound streams independently (there is no shared hardware mixer), the overall volume of each Android can be adjusted in that Android itself. The proxy server does not change the sound level of any OS, and the user sets the volume of each Android in its own user interface, as if there were no virtualization on the phone.

Sound output devices

Mobile devices typically have several audio output devices, for example a loudspeaker, an earpiece, headphones, and a Bluetooth headset. The running operating systems may simultaneously ask to play their audio streams on different output devices, and in some cases these requests are incompatible. For example, it makes no sense to play one sound on the loudspeaker and another in the headphones or earpiece. It would also be strange if, during a call, the earpiece carried not only the other party's voice but also the music that was playing before the call.

Therefore, we developed a policy for routing audio streams to the output devices that follows the principle of least surprise for the user. As part of this policy, it is sometimes necessary to only simulate playback for an Android. To do this, the proxy client is switched to a dummy playback mode, in which the synchronous write functions block for the duration of the submitted portion of the audio stream. Android believes it played the sound, although the data was never sent to the proxy server.
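
A minimal sketch of such a dummy write, assuming 16-bit stereo PCM at 44.1 kHz as default parameters. The function name and the injectable `sleep` argument are illustrative, not part of the original code.

```python
import time

def fake_write(num_bytes, sample_rate=44100, channels=2, bytes_per_sample=2,
               sleep=time.sleep):
    """Pretend to play a buffer: block for its duration, then drop it."""
    frames = num_bytes // (channels * bytes_per_sample)
    duration = frames / sample_rate
    sleep(duration)          # the caller sees normal playback timing
    return num_bytes         # report the whole buffer as written
```

Blocking for the real duration matters because the caller paces itself on the write call; returning immediately would make it produce audio data as fast as it can.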

Interaction with a telephony service

The proxy client, being an implementation of AudioHardwareInterface, can tell whether a phone call is in progress. If it is, the audio subsystem has to be configured to record and play voice and to interact with the telephony service. In practice, it is enough to put the AudioHardwareInterface implementation shipped with the device into call mode by calling setMode(MODE_IN_CALL) and to route audio to the earpiece; otherwise the other party's voice is played on the current audio output device. The device vendor's implementation thus remains responsible for the interaction with the telephony service.

Telephony

The most interesting and complicated part was dispatching phone calls between containers. The Android software stack that manages the mobile network hardware contains the following components:

1. The API of the com.android.internal.telephony package.

2. The rild daemon, which multiplexes user application requests to the mobile network hardware.

3. The proprietary hardware access library.

4. The GSM modem driver.

The interface between components (2) and (3) is documented and is called the Radio Interface Layer, or RIL. It is described in the file development/pdk/docs/porting/telephony.jd.

To virtualize RIL, we had to solve the following problems:

- The proprietary RIL implementations in different Androids each try to reinitialize the hardware.

- It is unclear which of the Androids should receive incoming calls and SMS.

- It is unclear how to manage calls and SMS originating from different Androids.

Note also that GSM modem drivers differ significantly between devices, so a universal in-kernel virtualization solution for this device is impossible. In addition, the proprietary RIL library talks to the GSM modem over an undocumented protocol, which hinders virtualization at that level even for a specific smartphone model.

Client part

The specific implementation of the RIL library on a given phone is unknown, but its interface is known. We built the abstraction layer as a virtual RIL proxy and a RIL client. On the client side, the rild daemon loads our RIL client instead of the original RIL library. The RIL client intercepts calls to the interface and forwards them through the inter-container mechanism to the RIL proxy, which does the main work of virtualizing the interface and uses the original RIL library to access telephony.
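
The client's role can be sketched as a thin shim that exposes the request entry point and turns every call into a message. This is only an illustration: a `queue.Queue` stands in for the inter-container channel, and the message layout and all names are assumptions, not the real wire format.

```python
import queue

class RilClientShim:
    """Stands in for the vendor RIL library inside a user container."""

    def __init__(self, container_id, channel):
        self.container_id = container_id
        self.channel = channel       # stand-in for the inter-container channel

    def on_request(self, request, data, token):
        # Nothing is executed locally: the call is forwarded as a message,
        # tagged with the container it came from.
        self.channel.put({
            "container": self.container_id,
            "request": request,
            "data": data,
            "token": token,
        })
```

Tagging each message with the container identifier is what later lets the proxy apply per-container policies and route the response back.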

Server side

The server side is a single-threaded application that loads the proprietary RIL implementation and, through the inter-container communication mechanism, receives messages from the proxy clients running in the user containers. The OnRequestComplete handler does not know the request type, yet the way the response of the proprietary RIL implementation must be packed depends on it. So when a request arrives, the server records which request number corresponds to its token, in order to call the right response marshaller in this handler. The RIL proxy server must also handle requests from different clients that carry the same token. When a request arrives, the proxy server scans the list of tokens of requests currently being processed by the proprietary RIL library, and if that token is already in use, it generates a new token for the incoming request. The proxy server therefore stores both the real and the dummy token for every incoming request.
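
The token bookkeeping described above might look roughly like this. It is a sketch under assumptions: tokens are modelled as plain integers, the table is a dictionary rather than a scanned list, and the starting value for dummy tokens is arbitrary.

```python
import itertools

class TokenTable:
    """Tracks in-flight requests so responses can be routed and marshalled."""

    def __init__(self):
        self.in_flight = {}                        # server token -> record
        self._fresh = itertools.count(1_000_000)   # source of dummy tokens

    def admit(self, container, token, request):
        """Register a request; return the token passed to the vendor library."""
        server_token = token
        while server_token in self.in_flight:      # token already in use
            server_token = next(self._fresh)
        self.in_flight[server_token] = (container, token, request)
        return server_token

    def complete(self, server_token):
        """Look up who asked, with which token, and which request it was."""
        return self.in_flight.pop(server_token)
```

In the completion handler, the stored request number selects the marshaller, and the stored container and real token say where the packed response should go.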

Request Routing Policy

For each type of request received by OnRequest, a processing policy is defined. Currently, three policies are defined:

- Unconditional blocking of the request. This policy is set for all requests that were never encountered during development of the proxy server.

- Unconditional forwarding of the request to the proprietary library. This policy applies to all requests that do not change the state of the proprietary library or the GSM modem.

- Forwarding to the proprietary library only for requests from the active container. This policy is used for requests related to sending SMS and making calls.
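
The three request policies can be sketched as a lookup table with blocking as the default. The request names below are hypothetical placeholders; the real table would be keyed by RIL request numbers.

```python
from enum import Enum

class RequestPolicy(Enum):
    BLOCK = "block"
    FORWARD = "forward"
    FORWARD_IF_ACTIVE = "forward_if_active"

# Hypothetical request names, chosen only for illustration.
REQUEST_POLICIES = {
    "GET_SIGNAL_STRENGTH": RequestPolicy.FORWARD,
    "DIAL": RequestPolicy.FORWARD_IF_ACTIVE,
    "SEND_SMS": RequestPolicy.FORWARD_IF_ACTIVE,
}

def should_forward(request, container, active_container):
    """Decide whether a request reaches the proprietary library."""
    # Requests missing from the table were never seen in development: block.
    policy = REQUEST_POLICIES.get(request, RequestPolicy.BLOCK)
    if policy is RequestPolicy.FORWARD:
        return True
    if policy is RequestPolicy.FORWARD_IF_ACTIVE:
        return container == active_container
    return False
```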

Routing policies are likewise defined for each type of asynchronous notification:

- Unconditional blocking of the notification.

- Routing to the active container. This policy is set for notifications related to incoming SMS and calls.

- Routing to all containers. This policy is used for hardware service notifications, for example a change in the signal strength of the cellular network.
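
Notification routing differs from request handling in that the result is a set of recipients rather than a yes/no decision. In this sketch the notification names are hypothetical, and blocking anything unlisted is an assumption, since the article does not say which notifications are blocked.

```python
def notification_targets(kind, containers, active_container):
    """Return the containers that should receive an unsolicited notification."""
    to_active = {"NEW_SMS", "CALL_STATE_CHANGED"}   # hypothetical names
    to_all = {"SIGNAL_STRENGTH"}                    # hypothetical name
    if kind in to_active:
        return [active_container]
    if kind in to_all:
        return list(containers)
    return []                                       # blocked
```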

Input subsystem

Android uses the evdev subsystem to collect kernel input events. For each process that opens a /dev/input/eventX file, this driver creates a queue of events coming from the core of the kernel input subsystem.

As a result, input events could be delivered to every container. The evdev_event() function is the input event dispatcher, so to keep input from reaching inactive containers we modified it to add incoming events only to the queues of processes in the active container.
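
The change can be modelled in a few lines. This is a user-space illustration of the idea rather than kernel code, and it assumes that each opener of the device file can be tagged with the identifier of its container.

```python
from collections import deque

class EvdevSketch:
    """Models per-client event queues, as evdev keeps one per open file."""

    def __init__(self):
        self.clients = []            # (container id, queue) per opener
        self.active = None           # id of the foreground container

    def open(self, container):
        q = deque()
        self.clients.append((container, q))
        return q

    def event(self, ev):
        # Unmodified behaviour would append ev to every client queue.
        for container, q in self.clients:
            if container == self.active:
                q.append(ev)
```

Switching the foreground container then only means changing `active`; the queues of background containers simply stop receiving events.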

A little bit about testing

After we got our solution running on smartphones, we ran a series of experiments to quantify its characteristics. I must say right away that we have not worked on optimization; that work is yet to be done.

The main objectives of the testing were as follows:

- demonstration of the readiness of the developed technology;

- measurement of memory consumption on virtualized phones;

- determination of energy consumption of the developed technology.

Testing was carried out on a Samsung Galaxy S II smartphone in the following usage scenarios:

1. Idle (the phone is switched on and left alone);

2. Music playback with the screen backlight on;

3. Angry Birds (where would we be without them?) with simultaneous music playback.

Test scenarios were executed on three configurations:

1. The original CyanogenMod 7 environment for Samsung Galaxy S II (1);

2. One container with CyanogenMod 7 (2);

3. Two containers with CyanogenMod 7: music was played in one container, a game was launched in the other (3).

The following parameters were measured in each test run:

1. The amount of free memory and the size of the file system cache (from /proc/meminfo);

2. Battery charge (from /sys/class/power_supply/battery/capacity).
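
Collecting these two parameters could be scripted along the following lines. This is a sketch, not the tooling used in the tests: it assumes the standard `MemFree` and `Cached` fields of /proc/meminfo, and the function names are illustrative.

```python
def parse_meminfo(text):
    """Extract MemFree and Cached (in kB) from /proc/meminfo contents."""
    wanted = {"MemFree", "Cached"}
    values = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        if name in wanted:
            values[name] = int(rest.split()[0])
    return values

def read_sample(meminfo_path="/proc/meminfo",
                battery_path="/sys/class/power_supply/battery/capacity"):
    """Take one sample; meant to be called periodically during a test run."""
    with open(meminfo_path) as f:
        sample = parse_meminfo(f.read())
    with open(battery_path) as f:
        sample["battery_percent"] = int(f.read().strip())
    return sample
```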

Each test run lasted 30 minutes, with measurements taken every 2 seconds. Here's what we got:

It may seem strange that memory consumption with containers is lower than in the native environment. The exact reason has not been established (we have not tried to figure it out yet), but the same effect is observed in the Cells project, as its authors describe in their article.

Testing showed that the solution is workable and that the technology adds only insignificant memory and battery consumption, with one exception (the last row of the table), where the energy consumption of two containers is almost double that of unmodified Android. We are currently investigating the reasons for this behavior.

As the memory consumption figures show, starting the second container increases consumption more than twofold, which is an obstacle to running several containers on one smartphone: experiments show that the Google Nexus S smartphone, which has 380 MB of memory, cannot start more than one container without enabling swap.

Conclusion

Much of today's technology news revolves around mobile devices. A master's (or diploma, if you prefer) project by a group of students showed that virtualization technology is viable on mobile devices and gave an idea of how it can be used to create products.

Some interesting facts and figures about creating our solution:

- There are three main developers of the project, two of whom were students of the Academic University in St. Petersburg at the time of the work, and one led the graduation project;

- The project lasted 11 months;

- The project was assisted by advice from engineers at the Parallels Moscow office;

- The project involved three Android devices.

If something important was left out, ask in the comments and I will try to answer.