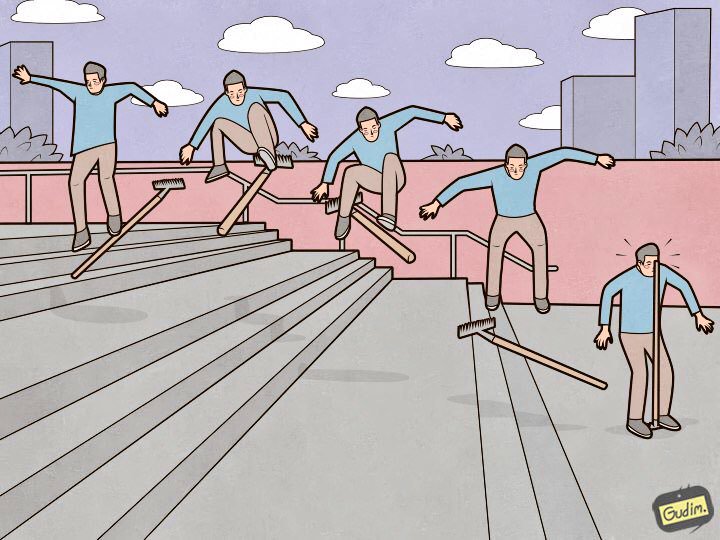

Nine Elasticsearch rakes that I stepped on

“A trained person steps on rakes too.

But from the other side, the one with the handle.”

Elasticsearch is a great tool, but every tool needs not only tuning and care, but also attention to detail. Some details are minor and lie on the surface, while others are buried so deep that finding them takes more than a day, more than a dozen cups of coffee and more than one kilometer of nerves. In this article I will describe nine wonderful rakes in Elasticsearch configuration that I have stepped on.

I will list the rakes in descending order of obviousness: from those that can be foreseen and avoided while setting up a cluster and bringing it into production, to very strange ones that nevertheless bring the most experience (and stars in your eyes).

Data nodes must be identical

“A cluster works at the speed of its slowest data node” is a hard-won axiom. But there is another obvious point that has nothing to do with performance: Elasticsearch thinks in shards, not in disk space, and tries to distribute them evenly among data nodes. If one data node has more disk space than the others, the extra space will simply sit idle.
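As a rough illustration of the point about idle space, here is a minimal sketch. It assumes equally sized shards and a perfectly even spread by shard count, which is a simplification; the function name and the disk sizes are made up for the example.

```python
def idle_disk_space(disk_sizes_gb):
    """Estimate space that stays unused when shards are spread evenly by count.

    Assumes equally sized shards and a perfectly even distribution, so every
    node ends up holding about as much data as the smallest node can fit.
    """
    smallest = min(disk_sizes_gb)
    return [size - smallest for size in disk_sizes_gb]


# Three hypothetical data nodes, one with twice the disk space of the others.
print(idle_disk_space([4000, 4000, 8000]))  # [0, 0, 4000]
```

Under these assumptions the larger node carries 4000 GB that the cluster never uses.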

Deprecation.log

It may happen that someone sends data to Elasticsearch with a not-so-modern tool that cannot set Content-Type on its requests (heka, for example, or devices that ship logs with their built-in tools). In that case deprecation.log starts growing at an alarming rate, and every request adds lines like these:

[2018-07-07T14:10:26,659][WARN ][o.e.d.r.RestController] Content type detection for rest requests is deprecated. Specify the content type using the [Content-Type] header.

[2018-07-07T14:10:26,670][WARN ][o.e.d.r.RestController] Content type detection for rest requests is deprecated. Specify the content type using the [Content-Type] header.

[2018-07-07T14:10:26,671][WARN ][o.e.d.r.RestController] Content type detection for rest requests is deprecated. Specify the content type using the [Content-Type] header.

[2018-07-07T14:10:26,673][WARN ][o.e.d.r.RestController] Content type detection for rest requests is deprecated. Specify the content type using the [Content-Type] header.

[2018-07-07T14:10:26,677][WARN ][o.e.d.r.RestController] Content type detection for rest requests is deprecated. Specify the content type using the [Content-Type] header.

Requests arrive every 5-10 ms on average, and each one adds a new line to the log. This hurts the performance of the disk subsystem and increases iowait. deprecation.log can be turned off entirely, but that is not very sensible. To keep collecting Elasticsearch's deprecation messages while stopping the flood, I silence only the o.e.d.r.RestController class.

To do this, add the following to log4j2.properties:

logger.restcontroller.name = org.elasticsearch.deprecation.rest.RestController

logger.restcontroller.level = error

This raises the log level for that class to error, so its messages stop landing in deprecation.log.
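Where you do control the sender, the warning itself names the cleaner fix: set the Content-Type header. A minimal sketch using only the Python standard library; the base URL, index name and the `_doc` endpoint are assumptions for illustration, and the request is built but not sent.

```python
import json
import urllib.request


def build_index_request(base_url, index, doc):
    """Build (but do not send) a request that indexes one JSON document."""
    body = json.dumps(doc).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/{index}/_doc",  # hypothetical endpoint for the example
        data=body,
        headers={"Content-Type": "application/json"},  # the header ES asks for
        method="POST",
    )


req = build_index_request("http://localhost:9200", "logs-example", {"message": "hello"})
# urllib normalizes header names to "Content-type" internally.
print(req.get_method(), req.full_url, req.get_header("Content-type"))
```

Passing the result to `urllib.request.urlopen` would actually send it.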

.kibana

What does a normal cluster installation look like? We install the nodes, join them into a cluster, install X-Pack (if needed) and, of course, Kibana. We start everything, check that it works and that Kibana sees the cluster, and carry on with the tuning. The problem is that on a freshly installed cluster the default template looks like this:

{

"default": {

"order": 0,

"template": "*",

"settings": {

"number_of_shards": "1",

"number_of_replicas": "0"

}

},

"mappings": {},

"aliases": {}

}

And the .kibana index, where all Kibana settings are stored, is created in a single copy.
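Indices in this state can be found ahead of time. The sketch below works on a dictionary assumed to have the shape of a `GET /_all/_settings` response; the function name and the sample data are invented for the example.

```python
def indices_without_replicas(settings_response):
    """Return the names of indices whose number_of_replicas is 0.

    `settings_response` is assumed to look like the GET /_all/_settings
    response: {index_name: {"settings": {"index": {...}}}}.
    """
    result = []
    for name, body in settings_response.items():
        index_settings = body.get("settings", {}).get("index", {})
        # Elasticsearch returns setting values as strings, hence int().
        if int(index_settings.get("number_of_replicas", 1)) == 0:
            result.append(name)
    return sorted(result)


sample = {
    ".kibana": {"settings": {"index": {"number_of_shards": "1", "number_of_replicas": "0"}}},
    "logs-example": {"settings": {"index": {"number_of_shards": "5", "number_of_replicas": "1"}}},
}
print(indices_without_replicas(sample))  # ['.kibana']
```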

Once, a hardware failure killed one of the data nodes in the cluster. The cluster quickly returned to a consistent state by promoting shard replicas on neighboring data nodes, but, as luck would have it, that very node held the only shard of the .kibana index. Stalemate: the cluster is alive and working, Kibana is in red status, and my phone is ringing off the hook with calls from colleagues who urgently need their logs.

The fix is simple, and best applied before anything has failed:

PUT .kibana/_settings

{

"index": {

"number_of_replicas": "<number_of_data_nodes>"

}

}

XMX / XMS

The documentation says "no more than 32 GB", and rightly so. But it is equally true that you should not put this in the service settings:

-Xms32g

-Xmx32g

That already crosses the limit, and here we run into an interesting nuance of how Java handles memory. Above a certain heap size the JVM stops using compressed pointers and starts consuming disproportionately more memory. It is easy to check whether the JVM running Elasticsearch uses compressed pointers. Look at the service log:

[2018-07-29T15:04:22,041][INFO][o.e.e.NodeEnvironment][log-elastic-hot3] heap size [31.6gb], compressed ordinary object pointers [true]

The exact heap size that must not be exceeded depends, among other things, on the Java version in use. To work out the value for your case, see the documentation.
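The log check is easy to automate. This is a minimal sketch that parses a startup line in the format shown above; the regular expression and the function name are my own, and other Elasticsearch versions may format the line differently.

```python
import re

# Matches the heap/compressed-oops part of the NodeEnvironment startup line.
HEAP_LINE = re.compile(
    r"heap size \[(?P<heap>[^\]]+)\], "
    r"compressed ordinary object pointers \[(?P<oops>true|false)\]"
)


def compressed_oops_status(log_line):
    """Return (heap_size, uses_compressed_oops), or None if the line does not match."""
    match = HEAP_LINE.search(log_line)
    if match is None:
        return None
    return match.group("heap"), match.group("oops") == "true"


line = (
    "[2018-07-29T15:04:22,041][INFO][o.e.e.NodeEnvironment][log-elastic-hot3] "
    "heap size [31.6gb], compressed ordinary object pointers [true]"
)
print(compressed_oops_status(line))  # ('31.6gb', True)
```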

All my Elasticsearch data nodes are now configured with:

-Xms32766m

-Xmx32766m

It seems a banal fact, and it is well documented, but I regularly come across Elasticsearch installations where this was overlooked and Xms/Xmx are set to 32g.

/var/lib/elasticsearch

This is the default path for storing data in elasticsearch.yml:

path.data: /var/lib/elasticsearch

I usually mount one large RAID array there, and here is why. Suppose we give ES several data paths, for example:

path.data: /var/lib/elasticsearch/data1, /var/lib/elasticsearch/data2

Different disks or RAID arrays are mounted at data1 and data2. But Elasticsearch does not balance or distribute load between these paths: it first fills one, then starts writing to the other, so the load on the storage is uneven. Knowing this, I made a clear-cut decision: I combined all the disks into RAID0/1 and mounted the array at the path specified in path.data.

available_processors

And no, I don't mean the processors on ingest nodes. If you look at the properties of a running node (via the _nodes API), you will see something like this:

"os": {

"refresh_interval_in_millis": 1000,

"name": "Linux",

"arch": "amd64",

"version": "4.4.0-87-generic",

"available_processors": 28,

"allocated_processors": 28

}

You can see that the node runs on a host with 28 cores, and Elasticsearch detected their number correctly and started on all of them. But if there are more than 32 cores, you sometimes get this:

"os": {

"refresh_interval_in_millis": 1000,

"name": "Linux",

"arch": "amd64",

"version": "4.4.0-116-generic",

"available_processors": 72,

"allocated_processors": 32

}

In that case the number of processors available to the service has to be set explicitly, which noticeably improves node performance.
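A mismatch like the one above can be spotted across the whole cluster. The sketch below assumes the input follows the layout of a `GET /_nodes/os` response; the function name, node IDs and node names are hypothetical.

```python
def nodes_with_capped_processors(nodes_os_response):
    """Return {node_name: (allocated, available)} where allocated < available.

    The input is assumed to follow the GET /_nodes/os layout:
    {"nodes": {node_id: {"name": ..., "os": {...}}}}.
    """
    capped = {}
    for node in nodes_os_response.get("nodes", {}).values():
        os_info = node.get("os", {})
        available = os_info.get("available_processors")
        allocated = os_info.get("allocated_processors")
        if available is not None and allocated is not None and allocated < available:
            capped[node.get("name", "<unnamed>")] = (allocated, available)
    return capped


sample_nodes = {
    "nodes": {
        "id-1": {"name": "node-a", "os": {"available_processors": 28, "allocated_processors": 28}},
        "id-2": {"name": "node-b", "os": {"available_processors": 72, "allocated_processors": 32}},
    }
}
print(nodes_with_capped_processors(sample_nodes))  # {'node-b': (32, 72)}
```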

The corresponding line in elasticsearch.yml:

processors: 72

thread_pool.bulk.queue_size

The previous article, in its section on thread_pool.bulk.rejected, described this metric: a counter of rejected requests to add data.

I wrote that growth of this counter is a very bad sign, and that the developers recommend adding nodes to the cluster rather than tuning thread pools, since that supposedly solves performance problems. But rules exist to be broken now and then. Besides, throwing hardware at the problem is not always an option, so one way to fight rejected bulk requests is to increase the size of this queue.

By default, the queue settings look like this:

"thread_pool":

{

"bulk": {

"type": "fixed",

"min": 28,

"max": 28,

"queue_size": 200

}

}

The algorithm is as follows:

- We collect statistics on the average queue size during the day (the instantaneous value is stored in thread_pool.bulk.queue);

- Carefully increase the queue_size to a size slightly larger than the average size of the active queue - because a failure occurs when it is exceeded;

- Increasing the size of the pool is not necessary, but acceptable.

To do this, add something like the following to the node's settings (your values will, of course, be different):

thread_pool.bulk.size: 32

thread_pool.bulk.queue_size: 500

After restarting the node, be sure to monitor load, I/O, memory consumption and everything else you can, so that you can roll the settings back if necessary.

Important: these settings only make sense on nodes that receive new data.
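The sizing steps above can be sketched roughly as follows. The function name, the `headroom` factor of 1.25 and the sample values are illustrative assumptions, not recommendations; the samples stand for readings of thread_pool.bulk.queue taken during the day.

```python
import math


def suggest_queue_size(queue_samples, current_queue_size, headroom=1.25):
    """Suggest a queue_size slightly above the average observed queue length.

    `headroom` is an arbitrary illustrative safety factor; the result never
    goes below the current setting.
    """
    if not queue_samples:
        return current_queue_size
    average = sum(queue_samples) / len(queue_samples)
    return max(current_queue_size, math.ceil(average * headroom))


samples = [120, 340, 410, 380, 250]  # hypothetical readings, average is 300
print(suggest_queue_size(samples, current_queue_size=200))  # 375
```

The result is only a starting point: the value still has to be raised carefully and watched under real load.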

Pre-index creation

As I said in the first article of the series, we use Elasticsearch to store the logs of all our microservices. The idea is simple: one index stores the logs of one component for one day.

It follows that every day as many new indices are created as there are microservices. So every night Elasticsearch used to lock up for about 8 minutes while a hundred new indices and several hundred new shards were created: the disk load graph went off the scale, the queues of logs waiting to be sent to Elasticsearch grew on the hosts, and Zabbix lit up with alerts like a Christmas tree.

To avoid this, a simple Python script was written to create indices in advance. It works like this: it finds today's indices, extracts their mappings and creates new indices with the same mappings, but dated one day ahead. It runs from cron during the hours when Elasticsearch is least loaded. The script uses the elasticsearch library and is available on GitHub.
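This is not the script from GitHub, only a minimal sketch of the planning step it describes. It assumes index names end with a date such as `billing-2018.07.31` (a hypothetical naming scheme) and that the input has the shape of a get-mapping response; all names and sample data are illustrative.

```python
from datetime import date, timedelta


def plan_next_day_indices(mappings_response, today, date_format="%Y.%m.%d"):
    """Map tomorrow's index names to the mappings of today's indices.

    `mappings_response` is assumed to look like a get-mapping response:
    {index_name: {"mappings": {...}}}. Only indices whose names end with
    today's date (in `date_format`) are considered.
    """
    today_suffix = today.strftime(date_format)
    tomorrow_suffix = (today + timedelta(days=1)).strftime(date_format)
    plan = {}
    for name, body in mappings_response.items():
        if name.endswith(today_suffix):
            new_name = name[: -len(today_suffix)] + tomorrow_suffix
            plan[new_name] = {"mappings": body.get("mappings", {})}
    return plan


current = {
    "billing-2018.07.31": {"mappings": {"properties": {"message": {"type": "text"}}}},
    "billing-2018.07.30": {"mappings": {}},
}
print(plan_next_day_indices(current, date(2018, 7, 31)))
```

With the elasticsearch library, the input would presumably come from `indices.get_mapping` and each planned entry would be passed to `indices.create`; that wiring is left out so the sketch runs without a cluster.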

Transparent Huge Pages

Once we discovered that the Elasticsearch nodes that receive data had started to hang under load during peak hours. The symptoms were very strange: utilization of all CPU cores drops to zero, yet the service stays in memory, dutifully listens on its port, does nothing, does not respond to requests, and after a while drops out of the cluster. The service does not react to systemctl restart. Only good old kill -9 helps.

Standard monitoring tools do not catch this: the charts look normal right up to the failure, and the service logs are empty. We could not take a memory dump of the JVM at that moment either.

But, as they say, "we are professionals, so after a while we googled the solution." A similar problem was discussed in a thread on discuss.elastic.co and turned out to be a kernel bug related to transparent huge pages. We solved it by disabling THP in the kernel using the sysfsutils package.

Check whether transparent huge pages are enabled on your system:

cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]

If you see [always] there, you are potentially at risk.
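The same check can be scripted, for example for a monitoring agent. A minimal sketch: the function names are my own, and the input is the text of `/sys/kernel/mm/transparent_hugepage/enabled`, where the active mode is the one in square brackets.

```python
import re


def active_thp_mode(sysfs_text):
    """Return the mode shown in square brackets, e.g. "never", or None."""
    match = re.search(r"\[(\w+)\]", sysfs_text)
    return match.group(1) if match else None


def thp_is_risky(sysfs_text):
    """True when transparent huge pages are set to "always"."""
    return active_thp_mode(sysfs_text) == "always"


print(active_thp_mode("always madvise [never]"))  # never
print(thp_is_risky("[always] madvise never"))     # True
```

On a real host the text would be read from the sysfs file, for example with `open(path).read()`.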

Conclusion

These are the main rakes (there were, of course, more) that I stepped on during a year and a half as an administrator of an Elasticsearch cluster. I hope this information will be useful to you on the difficult and mysterious path to the ideal Elasticsearch cluster.

And thanks to Anton Gudim for the illustration; there is plenty more good stuff on his Instagram.