The true power of regular expressions

- Transfer

As a frequent visitor to the PHP tag on StackOverflow , I very often come up with questions about how to parse some specific aspects of HTML using regular expressions. The most common answer to this is:

This statement - in the context of the question - lies somewhere between the highly misleading and the completely wrong. What I want to try to demonstrate in this article is how powerful modern regular expressions really are .

In the context of the theory of formal languages , something is called “regular” when it has a grammar in which any inference rule has one of the following forms:

Here, the symbol

But what is

All this may sound somewhat abstract, so let's look at an example: the definition of natural numbers as a grammar.

This grammar suggests that:

In this example, numbers from 0 to 9 will be terminals (since they cannot be broken down into components), and N will be the only non-terminal (since it can be further separated).

If you look at the rules again and compare them with the definition of regular grammatical forms above, you will see that they meet the specified criteria: the first ten rules correspond to the form

Another point that you could pay attention to is that despite the grammar's description of such simple things, it is already quite bloated. Wouldn't it be better if we could express the same concept, but in a more concise form?

And then regular expressions come onto the scene: the grammar above is equivalent to regexp

So, a logical question arises: can regular expressions describe only regular grammars, or are they capable of more? The answer to it is , and yes, and no.

Regular expressions in the sense of formal grammars can (by definition) describe only regular grammars and nothing more.

But when programmers talk about “regular expressions,” they don’t mean formal grammars. They talk about derived regular expressions implemented in their programming languages. And these regexp implementations are very superficially related to the original notion of regularity.

Any modern variety of regexps can describe much more.than just regular languages. Clarifying how much more is the topic of further narration.

To keep things simple, I will focus only on the PCRE implementation of regexp. Just because I know it best (since it is used in PHP). Many other implementations are very similar to it, so most of the following can be applied to them too.

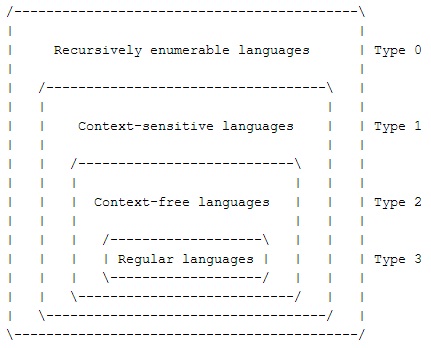

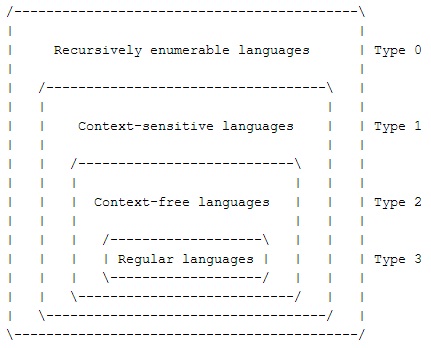

To analyze what is possible and what cannot be described with the help of regular expressions, we first consider what other types of languages exist. A good starting point for this would be the Chomsky hierarchy :

As you can see, it divides formal languages into four types: regular languages (type 3) - the least powerful, followed by context-free languages (type 2), context-sensitive languages (type 1) and finally, omnipotent unlimited languages (type 0).

Chomsky’s hierarchy is containerized, so the small boxes in the picture are completely enclosed in large boxes. Those. any regular language is also context-free (but not vice versa!).

So, let's go up a notch in the hierarchy. We already know that a regular expression can describe any regular language. Is this possible for context-free languages?

(Reminder: when I say “regular expressions”, I mean them in a programmatic sense, and not in the sense of the theory of formal languages).

The answer to the question above: yes, it is possible!

Consider a classic example of context-free grammar

This regular expression is very simple:

From the above considerations, it should be clear that this expression can describe any string with the same number

Consequently, regular expressions are able to describe at least some non-regular, context-free grammars. But can they describe them all? To answer this question, let us first look at the definition of context-free grammars.

In a context-free grammar, all inference rules have the form:

Here

As an example, consider the following grammar:

This is an excerpt from a PHP grammar (just a few simple rules). The syntax is slightly different from what we used before, but it can be easily understood. One aspect worth noting is that the names T_SOMETHING here are also terminal characters. Such symbols are usually called tokens ; they encode more abstract concepts. For example, T_FUNCTION is the keyword function, and T_STRING is the token label (like getUserById or some_other_name).

I use this example to demonstrate one thing: context-free grammars are already powerful enough to encode complex languages. This is why almost all programming languages have context-free grammars. This list also includes syntactically correct HTML.

Returning to the actual question: can regular expressions bind all context-free grammars this way? Again the answer is yes!

This is extremely easy to prove, since regular expressions (at least PCRE and the like) provide syntax for constructing grammars, very similar to the one above:

What you see above is a regular expression for describing an email address using RFC 5322 . It is built using a simple conversion of BNF rules from RFC to a notation that PCRE understands.

The syntax is extremely simple: all rule definitions are wrapped in a DEFINE statement, which basically means that all these rules do not have to correspond directly to something, they simply need to be declared. Only the part

Definitions of rules, in fact, are not real "rules", but rather subpatterns. In the first example, it

Pay attention to the following fact: the regular expression above uses the

Thus, the above syntax allows you to simply map the grammar to a regular expression:

The only catch is that regular expressions do not support left-side recursion. That is, if we take the above definition of a list of parameters:

You wo n’t be able to accurately convert it to a grammar-based regexp. The following will not work:

The reason for this is because it

But do not be alarmed, this does not in any way affect the power of regular expressions. Each left recursive grammar can be converted to a right recursive. In the example above, just swap two parts:

Now it should be absolutely clear that regular expressions can describe any context-free language (and, therefore, almost all languages that programmers encounter). The only problem: although regular expressions can describe context-free grammars, they usually cannot parse them. Parsing involves converting a string into an abstract syntax tree. For this it is impossible to use regular expressions, at least PCRE (although, of course, in Perl, where it is possible to insert arbitrary code into a regular expression, you can do almost anything ...).

However, the above-

Now let me focus again on what I mentioned in passing earlier: syntactically correct HTML is context-free. Therefore, you can describe it using regular expressions, as opposed to popular belief. Just remember two things: first, used for most of the HTML, you face, do notsyntactically correct (usually, it’s not even closed). And secondly, just what you can does not mean that you should . You can write your software on Brainfuck, but there are reasons why you do not, right?

My opinion on the topic is this: wherever you need general HTML processing, use the DOM library to your taste. It will correctly process the incorrect HTML and take on the whole burden of parsing. On the other hand, if you are dealing with specific cases, then fast regular expressions are often the best way out. And I have to admit: although I usually tell people not to parse HTML using regular expressions, I myself know how to do this often. Just because I often encounter specific situations when using regexps is simpler.

Now that we’ve taken a closer look at context-free languages, let's go up one more step in the Chomsky hierarchy: context-sensitive languages.

For them, the structural rules will take the following form:

This mixture of symbols at first looks quite complicated, but in fact everything is simple. The core is still the template

To make the foregoing more understandable, try to interpret the following rules:

In Russian, it will sound like this:

Context-sensitive languages are something that you rarely see in “normal” programming. They are more important in natural language processing (because natural languages are definitely not context-free. Words have different meanings depending on context). But even people who process natural languages work with the so-called “soft context-sensitive languages”, because they are enough for modeling, and parsing is much faster.

To understand how powerful context-sensitive grammars are, let's look at another class of grammars that has exactly the same expressive power as context-sensitive grammars: non-shortening grammars.

For non-shortening grammars, each inference rule has the form

So non-shorthand grammars allow any rules until they start shortening the input line. Those.

These relationships (equivalent for context-sensitive and non-lengthening grammars) make it absolutely clear that with context-sensitive grammars you can do almost anything. Just don’t shorten :)

To get an idea of why both grammars have the same expressive power, look at the following example of transformations:

Okay, back to regular expressions. Can they also describe context-sensitive languages?

This time I can’t give you a definite answer. Of course, they can describe some context-sensitive languages, but I have no idea if they can describe them all .

As an example of a context-sensitive language that can be easily described using regexps, we take a modification of the context-free language

The PCRE regexp for this language is as follows:

If you ignore the statement

A new entity for us is this

In the regexp above, you can also see how the concept of context is implemented in regular expressions using statements. If we now return to the definition of context-sensitive grammar, then you can say that a view inference rule

can be converted to the following DEFINE regexp rule:

This is the same as saying that

Looking at the above, you might think that now you can easily convert a context-sensitive grammar to a regular expression, but this is actually not the case. The reason is that the retrospective statement

This is a major blow to the ease of converting context-sensitive grammars into regexps. Almost all such grammars require variable widths for retrospective statements.

But the fact that it is impossible to directly convert context-sensitive grammar to regexp alone does not mean that regular expressions cannot describe them all. For example, the above language

So what can we say in the end? Regexps can describe at least some context-sensitive languages, but it is not known whether they can describe all of them .

The next class of grammars in the Chomsky hierarchy is unlimited grammars. The set of languages that can be formed with their help includes all recursively enumerated languages.

Little can be said about unlimited grammars, since they, uh, are unlimited. Their inference rules take the form

Therefore, unlimited grammars simply remove the “non-shortening” part from the non-shortening grammars. Therefore, for them it

How accurate are unlimited grammars? So much so that they cannot be stronger: they are Turing-full. There is even a “programming language” based on unlimited grammars: Thue. Since it is Turing-complete, it can do everything that other programming languages are capable of.

One of the consequences of Turing completeness is that the problem of checking whether a certain line belongs to a certain grammar is unsolvable in the general case.

Unfortunately, I cannot tell you anything else about how regular expressions and unlimited grammars are related. Hell, I couldn't even find an example of adequate unlimited grammar (which would not be non-shortening).

But now, since we are talking about Turing completeness, let's move on to the next point:

Here is another very powerful regular expression feature that I haven't mentioned before: backlinks.

Those. containing such a very simple regexp:

What this regexp describes is called the “copy language” and is another typical example of context-sensitive languages.

Similarly, you can use the other links to describe the other grammar examples above:

An explanation of how this works is beyond the scope of this article, but you can read about this great topic on StackOverflow .

As you can see, simply adding a backlink (without the support of a recursive subpattern) already increases the power of regular expressions. In principle, it is so powerful that it makes the description of regular expressions an NP-complete problem.

What does NP-complete mean? NP-completeness is one of the classes in the theory of computational complexity for solving problems, into which many "difficult" problems fall. Examples of NP-complete problems are the Traveling Salesman Problem (TSP), the Boolean Formula Satisfaction Problem (SAT), and the Knapsack Problem (BKP).

Another important condition for calling a problem NP-complete is the ability to reduce any other NP-problem to it. Thus, all NP-complete problems are basically interchangeable. If you find a quick solution to one of them, then you will have a quick solution to all of them.

So if someone finds a quick solution for an NP-complete problem, then almost all of the complex computational problems of humanity will be solved in one fell swoop. What, as we know, will mean the end of civilization.

To prove that regular expressions with backlinks are exactly NP-complete, you can simply take one of the well-known NP-complete problems and prove that it can be solved by using regular expressions. As an example, I chose the 3-CNF SAT task.

The 3-CNF SAT stands for “The feasibility problem for Boolean formulas in 3-conjunctive normal form” and is simple enough to understand. There is a Boolean formula of the following form:

It consists of a series of conditions separated by AND operators. Each of these conditions consists of three variables (or their negations) separated by OR operators. The 3-CNF SAT asks: is there a solution to this Boolean formula (e.g., true)?

The above Boolean formula can be converted to the following regular expression:

If you run this code, you'll get the following result

This means that the formula given above is satisfied if

The regular expression uses a very simple trick: for any three conditions, the string contains

Now it's up to the engine. It checks the various paths for string binding until either a solution is found or it surrenders.

Since the article was a bit long, here is a summary of the main points:

But do not forget: from what you can do not follow what you should . Processing HTML with regular expressions is a really bad idea in some cases. And in others, this is perhaps the best thing to do.

Just figure out what is the simplest solution for your specific task, and use it. If you choose to solve the problem using regular expressions, do not forget about the x-modifier, which allows you to make the format of your regexp more beautiful. For complex regular expressions, also remember to use DEFINE statements and named subpatterns to keep your code clean and readable.

That's all.

From the translator: possible comments regarding the translation, please write in a personal. I will be very grateful for them.

“You cannot parse HTML with regular expressions because HTML is not regular. Use an XML parser and you will be happy ”

This statement - in the context of the question - lies somewhere between the highly misleading and the completely wrong. What I want to try to demonstrate in this article is how powerful modern regular expressions really are .

What does “regular” really mean?

In the context of the theory of formal languages , something is called “regular” when it has a grammar in which any inference rule has one of the following forms:

B -> aB -> aCB -> εHere, the symbol

->can be read as “the left side can be replaced by the right side”. Those. the first rule will sound like this: “ Bcan be replaced a”, the second - “ Bcan be replaced aC”, and the third - “ Bcan be replaced with an empty string” ( ε- an empty string character). But what is

B, Cand a? It is agreed that the letters in upper case denote the so-called " non-terminals " ( non-terminals ) - characters that can bedisassemble into components - and the letters in lowercase denote "terminals" ( terminals ) - characters that cannot be further broken down. All this may sound somewhat abstract, so let's look at an example: the definition of natural numbers as a grammar.

N -> 0N -> 1N -> 2N -> 3N -> 4N -> 5N -> 6N -> 7N -> 8N -> 9N -> 0NN -> 1NN -> 2NN -> 3NN -> 4NN -> 5NN -> 6NN -> 7NN -> 8NN -> 9NThis grammar suggests that:

Натуральное число (N) - это... одна из цифр от 0 до 9или... одна из цифр от 0 до 9, за которой следует другое натуральное число (N)In this example, numbers from 0 to 9 will be terminals (since they cannot be broken down into components), and N will be the only non-terminal (since it can be further separated).

If you look at the rules again and compare them with the definition of regular grammatical forms above, you will see that they meet the specified criteria: the first ten rules correspond to the form

B -> a, and the second ten correspond to the formB -> aC. Therefore, the grammar for natural numbers is regular . Another point that you could pay attention to is that despite the grammar's description of such simple things, it is already quite bloated. Wouldn't it be better if we could express the same concept, but in a more concise form?

And then regular expressions come onto the scene: the grammar above is equivalent to regexp

[0-9]+(which is pretty darn simpler). And a transformation of this type can be applied to any regular grammar: each of them has a corresponding regular expression that describes all the lines allowed for it.What regular expressions can describe

So, a logical question arises: can regular expressions describe only regular grammars, or are they capable of more? The answer to it is , and yes, and no.

Regular expressions in the sense of formal grammars can (by definition) describe only regular grammars and nothing more.

But when programmers talk about “regular expressions,” they don’t mean formal grammars. They talk about derived regular expressions implemented in their programming languages. And these regexp implementations are very superficially related to the original notion of regularity.

Any modern variety of regexps can describe much more.than just regular languages. Clarifying how much more is the topic of further narration.

To keep things simple, I will focus only on the PCRE implementation of regexp. Just because I know it best (since it is used in PHP). Many other implementations are very similar to it, so most of the following can be applied to them too.

Language hierarchy

To analyze what is possible and what cannot be described with the help of regular expressions, we first consider what other types of languages exist. A good starting point for this would be the Chomsky hierarchy :

As you can see, it divides formal languages into four types: regular languages (type 3) - the least powerful, followed by context-free languages (type 2), context-sensitive languages (type 1) and finally, omnipotent unlimited languages (type 0).

Chomsky’s hierarchy is containerized, so the small boxes in the picture are completely enclosed in large boxes. Those. any regular language is also context-free (but not vice versa!).

So, let's go up a notch in the hierarchy. We already know that a regular expression can describe any regular language. Is this possible for context-free languages?

(Reminder: when I say “regular expressions”, I mean them in a programmatic sense, and not in the sense of the theory of formal languages).

Description of context-free languages

The answer to the question above: yes, it is possible!

Consider a classic example of context-free grammar

{a^n b^n, n>0}, which means "The number of characters afollowed by the same number of characters b." The (PCRE) regexp for this language will be: /^(a(?1)?b)$/This regular expression is very simple:

(?1)refers to the first subpattern - (a(?1)?b). Therefore, in principle, you can replace the (?1)subpatterns, thus forming a recursive dependence: /^(a(?1)?b)$//^(a(a(?1)?b)?b)$//^(a(a(a(?1)?b)?b)?b)$//^(a(a(a(a(?1)?b)?b)?b)?b)$/# and so onFrom the above considerations, it should be clear that this expression can describe any string with the same number

aand b.Consequently, regular expressions are able to describe at least some non-regular, context-free grammars. But can they describe them all? To answer this question, let us first look at the definition of context-free grammars.

In a context-free grammar, all inference rules have the form:

A -> βHere

A, again, a nonterminal symbol, and βa arbitrary string of terminals and nonterminals. Thus, each context-free grammar rule has a nonterminal on the left and a string of arbitrary characters on the right. As an example, consider the following grammar:

function_declaration -> T_FUNCTION is_ref T_STRING '(' parameter_list ')''{' inner_statement_list '}'

is_ref -> '&'

is_ref -> ε

parameter_list -> non_empty_parameter_list

parameter_list -> ε

non_empty_parameter_list -> parameter

non_empty_parameter_list -> non_empty_parameter_list ',' parameter

// ... ... ...This is an excerpt from a PHP grammar (just a few simple rules). The syntax is slightly different from what we used before, but it can be easily understood. One aspect worth noting is that the names T_SOMETHING here are also terminal characters. Such symbols are usually called tokens ; they encode more abstract concepts. For example, T_FUNCTION is the keyword function, and T_STRING is the token label (like getUserById or some_other_name).

I use this example to demonstrate one thing: context-free grammars are already powerful enough to encode complex languages. This is why almost all programming languages have context-free grammars. This list also includes syntactically correct HTML.

Returning to the actual question: can regular expressions bind all context-free grammars this way? Again the answer is yes!

This is extremely easy to prove, since regular expressions (at least PCRE and the like) provide syntax for constructing grammars, very similar to the one above:

/

(?(DEFINE)

(?<addr_spec> (?&local_part) @ (?&domain) )

(?<local_part> (?&dot_atom) | (?"ed_string) | (?&obs_local_part) )

(?<domain> (?&dot_atom) | (?&domain_literal) | (?&obs_domain) )

(?<domain_literal> (?&CFWS)? \[ (?: (?&FWS)? (?&dtext) )* (?&FWS)? \] (?&CFWS)? )

(?<dtext> [\x21-\x5a] | [\x5e-\x7e] | (?&obs_dtext) )

(?<quoted_pair> \\ (?: (?&VCHAR) | (?&WSP) ) | (?&obs_qp) )

(?<dot_atom> (?&CFWS)? (?&dot_atom_text) (?&CFWS)? )

(?<dot_atom_text> (?&atext) (?: \. (?&atext) )* )

(?<atext> [a-zA-Z0-9!#$%&'*+/=?^_`{|}~-]+ )

(?<atom> (?&CFWS)? (?&atext) (?&CFWS)? )

(?<word> (?&atom) | (?"ed_string) )

(?<quoted_string> (?&CFWS)? " (?: (?&FWS)? (?&qcontent) )* (?&FWS)? " (?&CFWS)? )

(?<qcontent> (?&qtext) | (?"ed_pair) )

(?<qtext> \x21 | [\x23-\x5b] | [\x5d-\x7e] | (?&obs_qtext) )

# comments and whitespace

(?<FWS> (?: (?&WSP)* \r\n )? (?&WSP)+ | (?&obs_FWS) )

(?<CFWS> (?: (?&FWS)? (?&comment) )+ (?&FWS)? | (?&FWS) )

(?<comment> \( (?: (?&FWS)? (?&ccontent) )* (?&FWS)? \) )

(?<ccontent> (?&ctext) | (?"ed_pair) | (?&comment) )

(?<ctext> [\x21-\x27] | [\x2a-\x5b] | [\x5d-\x7e] | (?&obs_ctext) )

# obsolete tokens

(?<obs_domain> (?&atom) (?: \. (?&atom) )* )

(?<obs_local_part> (?&word) (?: \. (?&word) )* )

(?<obs_dtext> (?&obs_NO_WS_CTL) | (?"ed_pair) )

(?<obs_qp> \\ (?: \x00 | (?&obs_NO_WS_CTL) | \n | \r ) )

(?<obs_FWS> (?&WSP)+ (?: \r\n (?&WSP)+ )* )

(?<obs_ctext> (?&obs_NO_WS_CTL) )

(?<obs_qtext> (?&obs_NO_WS_CTL) )

(?<obs_NO_WS_CTL> [\x01-\x08] | \x0b | \x0c | [\x0e-\x1f] | \x7f )

# character class definitions

(?<VCHAR> [\x21-\x7E] )

(?<WSP> [ \t] )

)

^(?&addr_spec)$

/x

What you see above is a regular expression for describing an email address using RFC 5322 . It is built using a simple conversion of BNF rules from RFC to a notation that PCRE understands.

The syntax is extremely simple: all rule definitions are wrapped in a DEFINE statement, which basically means that all these rules do not have to correspond directly to something, they simply need to be declared. Only the part

^(?&addr_spec)$at the very end determines what is finally described here. Definitions of rules, in fact, are not real "rules", but rather subpatterns. In the first example, it

(a(?1)?b)1referred to the first subpattern. With many submasks of this kind, naming is impractical, so they are given meaningful names. So what (?<xyz> ...)defines a template namedxyz. (?&xyz)- link to it. Pay attention to the following fact: the regular expression above uses the

x-modifier. He instructs the engine to ignore spaces and adhere to the #-style of comments. This way you can better format your regexp so that other people can understand it normally. (Unlike this regexp RFC 822 for e-mail address ...) Thus, the above syntax allows you to simply map the grammar to a regular expression:

A -> B CA -> C D// becomes(?<A> (?&B) (?&C) | (?&C) (?&D))The only catch is that regular expressions do not support left-side recursion. That is, if we take the above definition of a list of parameters:

non_empty_parameter_list -> parameter

non_empty_parameter_list -> non_empty_parameter_list ',' parameter

You wo n’t be able to accurately convert it to a grammar-based regexp. The following will not work:

(?<non_empty_parameter_list> (?¶meter) | (?&non_empty_parameter_list) , (?¶meter))The reason for this is because it

non_empty_parameter_listappears on the far left of its own definition. This is called left-handed recursion and is very common in grammar definitions. The reason is that LALR (1) parsers, which are most often used for parsing, usually handle left-side recursion better than right-sided. But do not be alarmed, this does not in any way affect the power of regular expressions. Each left recursive grammar can be converted to a right recursive. In the example above, just swap two parts:

non_empty_parameter_list -> parameter

non_empty_parameter_list -> parameter ',' non_empty_parameter_list

Now it should be absolutely clear that regular expressions can describe any context-free language (and, therefore, almost all languages that programmers encounter). The only problem: although regular expressions can describe context-free grammars, they usually cannot parse them. Parsing involves converting a string into an abstract syntax tree. For this it is impossible to use regular expressions, at least PCRE (although, of course, in Perl, where it is possible to insert arbitrary code into a regular expression, you can do almost anything ...).

However, the above-

DEFINEregexp turned out to be veryuseful to me. In the end, usually you do not need full support for parsing, you just want to describe something (for example, an e-mail address) or extract small pieces of data (and not build a whole parse tree). Most of the complex processing tasks can be made much simpler using regexp-based grammar :) Now let me focus again on what I mentioned in passing earlier: syntactically correct HTML is context-free. Therefore, you can describe it using regular expressions, as opposed to popular belief. Just remember two things: first, used for most of the HTML, you face, do notsyntactically correct (usually, it’s not even closed). And secondly, just what you can does not mean that you should . You can write your software on Brainfuck, but there are reasons why you do not, right?

My opinion on the topic is this: wherever you need general HTML processing, use the DOM library to your taste. It will correctly process the incorrect HTML and take on the whole burden of parsing. On the other hand, if you are dealing with specific cases, then fast regular expressions are often the best way out. And I have to admit: although I usually tell people not to parse HTML using regular expressions, I myself know how to do this often. Just because I often encounter specific situations when using regexps is simpler.

Context Grammar

Now that we’ve taken a closer look at context-free languages, let's go up one more step in the Chomsky hierarchy: context-sensitive languages.

For them, the structural rules will take the following form:

αAβ → αγβThis mixture of symbols at first looks quite complicated, but in fact everything is simple. The core is still the template

A → γthat we defined for context-free grammars. They simply added to αit βin both directions. These two form a context (which also gave a name to this class of grammars). So, Anow you can replace it γonly if it has αleft and βright. To make the foregoing more understandable, try to interpret the following rules:

a b A -> a b ca B c -> a Q H cH B -> H CIn Russian, it will sound like this:

Заменить `A` на `c`, но только в том случае, если у него имеется `a b` слева.Заменить `B` на `Q H`, но только в том случае, если у него имеется `a` слева и `c` справа.Заменить `B` на `C`, но только если у него есть `H` слева.Context-sensitive languages are something that you rarely see in “normal” programming. They are more important in natural language processing (because natural languages are definitely not context-free. Words have different meanings depending on context). But even people who process natural languages work with the so-called “soft context-sensitive languages”, because they are enough for modeling, and parsing is much faster.

To understand how powerful context-sensitive grammars are, let's look at another class of grammars that has exactly the same expressive power as context-sensitive grammars: non-shortening grammars.

For non-shortening grammars, each inference rule has the form

α ->β, where αand βare arbitrary character strings with a single restriction: the number of characters on the right should be no less than the number of characters on the left. Formally, this is expressed by the formula |α| <= |β|, where |x|denotes the length of the string of characters. So non-shorthand grammars allow any rules until they start shortening the input line. Those.

A B C -> H Qwill be an invalid rule, because its left part contains three characters, and the right - only two. Therefore, this rule will be shortening. On the other hand, the opposite rule H Q -> A B Cwill do, because it has more characters on the right than on the left, which extends the string.These relationships (equivalent for context-sensitive and non-lengthening grammars) make it absolutely clear that with context-sensitive grammars you can do almost anything. Just don’t shorten :)

To get an idea of why both grammars have the same expressive power, look at the following example of transformations:

// неукорачивающая грамматикаA B -> C D// может быть преобразована в следующую контекстно-зависимую грамматикуA B -> A XA X -> Y XY X -> Y DY D -> C DOkay, back to regular expressions. Can they also describe context-sensitive languages?

This time I can’t give you a definite answer. Of course, they can describe some context-sensitive languages, but I have no idea if they can describe them all .

As an example of a context-sensitive language that can be easily described using regexps, we take a modification of the context-free language

{a^n b^n, n>0}, which we talked about above. When you change it to {a^n b^n c^n, n>0}, i.e. a certain quantity aprecedes the same quantity band cthen it will become context-sensitive. The PCRE regexp for this language is as follows:

/^

(?=(a(?-1)?b)c)

a+(b(?-1)?c)

$/x

If you ignore the statement

(?=...), then we will only have left a+(b(?-1)?c). It checks that an arbitrary quantity is afollowed by the same quantity band c. (?-1)- relative reference to a subpattern, meaning "last defined subpattern." In this case, this (b(?-1)?c). A new entity for us is this

(?=...). It is called a “conditional expression of zero width” and checks that the text following it is described by a mask, but the check itself is carried out by its subexpression. Thus, there is a check for compliance with both patterns at the same time. Part of the a+(b(?-1)?с)checks that the number band cthe same, and (a(?-1)?b)c- that the number aandbthe same. Both masks together provide confirmation that all three characters are contained in the same amount. In the regexp above, you can also see how the concept of context is implemented in regular expressions using statements. If we now return to the definition of context-sensitive grammar, then you can say that a view inference rule

αAβ → αγβcan be converted to the following DEFINE regexp rule:

(?<A> (?<= α ) γ (?= β ) )This is the same as saying that

Ait is γ, but only if it has a αleft and to your βright.Looking at the above, you might think that now you can easily convert a context-sensitive grammar to a regular expression, but this is actually not the case. The reason is that the retrospective statement

((?<= ... ))has one significant limitation: it must be of a fixed length. This means that the length of the text described by the statement must be known in advance. Those. you can write (?<= a(bc|cd) ), but you cannot write (?<= ab+). In the first case, the statement associates exactly three characters in any case and, therefore, has a fixed length. In the second case, the statement may associate ab, abb, abbbb etc. They all have different lengths, and the engine does not know when it should start matching them. So they are simply prohibited.This is a major blow to the ease of converting context-sensitive grammars into regexps. Almost all such grammars require variable widths for retrospective statements.

But the fact that it is impossible to directly convert context-sensitive grammar to regexp alone does not mean that regular expressions cannot describe them all. For example, the above language

{a^n b^n c^n, n>0}also has a grammar that requires a retrospective statement of variable width. Maybe something similar is possible for other context-sensitive grammars. I honestly don’t know. So what can we say in the end? Regexps can describe at least some context-sensitive languages, but it is not known whether they can describe all of them .

Unlimited grammars

The next class of grammars in the Chomsky hierarchy is unlimited grammars. The set of languages that can be formed with their help includes all recursively enumerated languages.

Little can be said about unlimited grammars, since they, uh, are unlimited. Their inference rules take the form

α -> β, where αand βare strings of characters with no restrictions whatsoever. Therefore, unlimited grammars simply remove the “non-shortening” part from the non-shortening grammars. Therefore, for them it

A B C -> H Qwill be a valid rule, although previously it was not. How accurate are unlimited grammars? So much so that they cannot be stronger: they are Turing-full. There is even a “programming language” based on unlimited grammars: Thue. Since it is Turing-complete, it can do everything that other programming languages are capable of.

One of the consequences of Turing completeness is that the problem of checking whether a certain line belongs to a certain grammar is unsolvable in the general case.

Unfortunately, I cannot tell you anything else about how regular expressions and unlimited grammars are related. Hell, I couldn't even find an example of adequate unlimited grammar (which would not be non-shortening).

But now, since we are talking about Turing completeness, let's move on to the next point:

Regular expressions with backlinks are NP-complete

Here is another very powerful regular expression feature that I haven't mentioned before: backlinks.

Those. containing such a very simple regexp:

/^(.+)\1$/(.+)and \1describe the same arbitrary text. Generally \nmeans "something there, described by the nth subpattern." Those. if it (.+)describes foo, it \1will also describe foo nothing more. Therefore, the expression (.+)\1means "some text, followed by a copy of it." What this regexp describes is called the “copy language” and is another typical example of context-sensitive languages.

Similarly, you can use the other links to describe the other grammar examples above:

# {a^n b^n, n>0} (context-free)

/^ (?: a (?= a* (\1?+ b) ) )+ \1 $/x

# {a^n b^n c^n, n>0} (context-sensitive)

/^ (?: a (?= a* (\1?+ b) b* (\2?+ c) ) )+ \1 \2 $/x

An explanation of how this works is beyond the scope of this article, but you can read about this great topic on StackOverflow .

As you can see, simply adding a backlink (without the support of a recursive subpattern) already increases the power of regular expressions. In principle, it is so powerful that it makes the description of regular expressions an NP-complete problem.

What does NP-complete mean? NP-completeness is one of the classes in the theory of computational complexity for solving problems, into which many "difficult" problems fall. Examples of NP-complete problems are the Traveling Salesman Problem (TSP), the Boolean Formula Satisfaction Problem (SAT), and the Knapsack Problem (BKP).

Another important condition for calling a problem NP-complete is the ability to reduce any other NP-problem to it. Thus, all NP-complete problems are basically interchangeable. If you find a quick solution to one of them, then you will have a quick solution to all of them.

So if someone finds a quick solution for an NP-complete problem, then almost all of the complex computational problems of humanity will be solved in one fell swoop. What, as we know, will mean the end of civilization.

To prove that regular expressions with backlinks are exactly NP-complete, you can simply take one of the well-known NP-complete problems and prove that it can be solved by using regular expressions. As an example, I chose the 3-CNF SAT task.

The 3-CNF SAT stands for “The feasibility problem for Boolean formulas in 3-conjunctive normal form” and is simple enough to understand. There is a Boolean formula of the following form:

(!$a || $b || $d)&& ( $a || !$c || $d)&& ( $a || !$b || !$d)&& ( $b || !$c || !$d)&& (!$a || $c || !$d)&& ( $a || $b || $c)&& (!$a || !$b || !$c)It consists of a series of conditions separated by AND operators. Each of these conditions consists of three variables (or their negations) separated by OR operators. The 3-CNF SAT asks: is there a solution to this Boolean formula (e.g., true)?

The above Boolean formula can be converted to the following regular expression:

<?php

$regex = '/^

(x?)(x?)(x?)(x?) .* ;

(?: x\1 | \2 | \4 ),

(?: \1 | x\3 | \4 ),

(?: \1 | x\2 | x\4 ),

(?: \2 | x\3 | x\4 ),

(?: x\1 | \3 | x\4 ),

(?: \1 | \2 | \3 ),

(?: x\1 | x\2 | x\3 ),

$/x';

$string = 'xxxx;x,x,x,x,x,x,x,';

var_dump(preg_match($regex, $string, $matches));

var_dump($matches);

If you run this code, you'll get the following result

$matches: array(5) { [0]=> string(19) "xxxx;x,x,x,x,x,x,x," [1]=> string(1) "x" [2]=> string(1) "x" [3]=> string(0) "" [4]=> string(0) ""}This means that the formula given above is satisfied if

$a = true, $b = true, $c = falseand $d = false. The regular expression uses a very simple trick: for any three conditions, the string contains

xwhich must be described. So if you have something like this (?: \1 | x\3 | \4 )in regexp, then a line can only be described if \1it is x(true), or \3is an empty line (false), or \4is x(true). Now it's up to the engine. It checks the various paths for string binding until either a solution is found or it surrenders.

Summarizing

Since the article was a bit long, here is a summary of the main points:

- Regular expressions used by programmers have very little in common with the original notion of regularity in the context of formal language theory.

- Regular expressions (at least PCRE) can describe all context-free languages. Including syntactically correct HTML, and generally almost all other programming languages.

- Regular expressions are able to describe at least some context-sensitive languages.

- Regular Expression Description NP is complete. So you can solve any other NP problem using regular expressions.

But do not forget: from what you can do not follow what you should . Processing HTML with regular expressions is a really bad idea in some cases. And in others, this is perhaps the best thing to do.

Just figure out what is the simplest solution for your specific task, and use it. If you choose to solve the problem using regular expressions, do not forget about the x-modifier, which allows you to make the format of your regexp more beautiful. For complex regular expressions, also remember to use DEFINE statements and named subpatterns to keep your code clean and readable.

That's all.

From the translator: possible comments regarding the translation, please write in a personal. I will be very grateful for them.