Nine circles of automated testing

I want to talk about the automated testing system we have built. By "system" I mean not only code, but also hardware, processes and people.

I will answer these questions: What are we testing? Who is involved? Why are we doing all this? What do we already have?

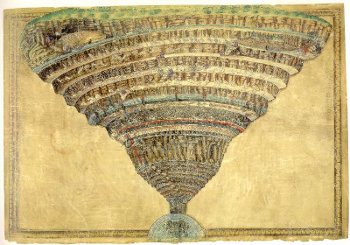

And then I'll explain how it works: I'll describe the circles of testing, from the first to the ninth.

What?

Our product is Service Desk, a corporate web application written in Java.

Who?

Me, the leader of the automated testing group; the programmers whose code we test; the manual testers whose routine we eliminate; and the managers, who believe that if the tests pass, things are not so bad.

Why?

My group's goal is to protect the product from the regression death spiral.

The group's task is to detect defects using as many interesting methods and as little manual labor as possible.

What do we already have?

900 use cases of the application, some short and some not so short, coded as tests.

Jenkins CI on six servers, plus the three DBMSs, two OS families and three browsers that the product is written for.

How does it work?

Circle one - test scenario.

The smallest unit, the test, begins with a scenario.

The scenario is the Javadoc of the test method, which we later collect and show to manual testers and PMs.

- The title of the test is a short and clear description of what we are testing.

- A link to the requirement the test is written against. If there is none, the case is just a figment of the tester's inflamed imagination; we make corporate software, we are not joking around.

- The test scenario in Russian, describing the three phases of the test: preparation, execution of actions and verification.

Note: The scenario is not a translation from Java into Russian. On the contrary, the scenario comes first, and writing a high-quality case takes more time than coding it. In our team, the scenario is usually written by someone other than the person who codes the test.
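A test method documented this way might look roughly like the sketch below. The class name, the requirement reference REQ-0000 and the wording of the steps are hypothetical placeholders, not taken from our code base (and our real texts are in Russian). The method body is left empty on purpose, because here the Javadoc is the point.

```java
/** Hypothetical test class; in a real project the method would carry a JUnit annotation. */
class CatalogItemScenarioSketch {

    /**
     * Adding an item to a catalog from the catalog card.
     *
     * <p>Requirement: REQ-0000 (placeholder; the link to the requirement goes here)
     *
     * <p>Preparation:
     * <ol>
     *   <li>Create a catalog.</li>
     * </ol>
     *
     * <p>Actions:
     * <ol>
     *   <li>Open the catalog card.</li>
     *   <li>Click "Add item", fill in the title and the code, save the form.</li>
     * </ol>
     *
     * <p>Verification:
     * <ol>
     *   <li>The item is shown in the catalog card with the entered title.</li>
     * </ol>
     */
    void addItemFromCatalogCard() {
        // Phase 1: preparation of the system under test.
        // Phase 2: the action under test.
        // Phase 3: verification.
    }
}
```

Because the whole case lives in the Javadoc, the generated documentation can be read by people who never open the code.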

The first phase of the test is the preparation of the test system.

Catalog catalog = DAOCatalog.create(true, false);

catalog.set(Catalog.TITLE, "I want the object in this test to have this title");

DSLCatalog.add(catalog);

CatalogItem item = DAOCatalogItem.create(catalog);

DSLCatalogItem.add(item);

The concept: there is a model of the system, described by methods with the DAO prefix, and there are methods with the DSL prefix that bring the system under test to the state described by the model.

The DSL methods change the application through the system API, which in our case is a RESTful service. We carry out the entire first phase of the test through the API.

Note: The rule is to write a DSL method for each coded test action, so that it can later be performed through the API.

The model is a map of strings; we try not to complicate the testing system unnecessarily.
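To make the split between the two kinds of methods more tangible, here is a minimal sketch. All names in it (ModelSketch, DaoCatalogSketch, DslCatalogSketch, SystemApiSketch) are invented for illustration and are not our real classes; the API is reduced to a single hypothetical method so that the example runs on its own.

```java
import java.util.HashMap;
import java.util.Map;

/** The model: nothing more than a map of strings. */
class ModelSketch {
    static final String TITLE = "title";
    static final String CODE = "code";

    private final Map<String, String> fields = new HashMap<>();

    String get(String key) {
        return fields.get(key);
    }

    void set(String key, String value) {
        fields.put(key, value);
    }
}

/** Hypothetical stand-in for the REST API of the system under test. */
interface SystemApiSketch {
    void post(String resource, ModelSketch model);
}

/** DAO side: builds a model with unique default values and touches nothing else. */
class DaoCatalogSketch {
    private static int counter = 0;

    static ModelSketch create() {
        counter++;
        ModelSketch model = new ModelSketch();
        model.set(ModelSketch.TITLE, "catalog-title-" + counter);
        model.set(ModelSketch.CODE, "catalog-code-" + counter);
        return model;
    }
}

/** DSL side: brings the system under test to the state described by the model. */
class DslCatalogSketch {
    private final SystemApiSketch api;

    DslCatalogSketch(SystemApiSketch api) {
        this.api = api;
    }

    void add(ModelSketch model) {
        api.post("catalog", model);
    }
}
```

A test would first adjust the model (for example, set a title it cares about) and only then hand it to the DSL method, so the description of the state and the way of reaching it stay separate.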

The second phase of the test is the action under test, performed with Selenium through the browser.

DSLCatalog.goToCard(tester, item.get(CatalogItem.PARENT_CODE));

tester.clickElement(ADD_ELEMEMT);

DSLForm.assertFormAppear(tester, DSLForm.DIALOG);

tester.sendKeys(DSLForm.TITLE_INPUT, item.get(CatalogItem.TITLE));

tester.sendKeys(DSLForm.CODE_INPUT, item.get(CatalogItem.CODE));

tester.clickElement(DSLForm.SAVE_ON_FORM);

DSLForm.assertFormDisappear(tester, DSLForm.DIALOG);

Note: To ensure testability, each new interface element in the system also gets an id that is unique within the page. Therefore, our XPath looks like this: "//*[@id='description-value']" or "//*[@id='description-input']"

Not like this: "/html/body/table/tbody/tr/td[6]/table/tbody/tr[4]/td/div"

This solves the whole class of problems caused by layout changes.
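With unique ids, building a locator becomes a one-liner. The helper below is a hypothetical sketch (the name byId is an assumption, not part of our testing system); it only shows that the locator depends on the id and not on the position of the element in the layout.

```java
class LocatorSketch {

    /** Builds an XPath that finds an element by its page-unique id. */
    static String byId(String id) {
        if (id.contains("'")) {
            // Keep the sketch simple: ids with quotes would need escaping.
            throw new IllegalArgumentException("id must not contain a quote: " + id);
        }
        return "//*[@id='" + id + "']";
    }
}
```

For example, LocatorSketch.byId("description-value") produces the first XPath from the note above.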

The third phase is the check, or verification. At least once, we perform it through the interface:

Assert.assertEquals("The title is displayed incorrectly in the report template card", template.get(ReportTemplate.TITLE), tester.getTextElement(DSLForm.TITLE_VALUE));

And then you can check it through the API.

ModelMap map = SdDataUtils.getObject(sc.get(Bo.METACLASS_FQN), Bo.UUID, sc.getUUID());

String message = String.format("The attribute of the BO with uuid '%s' has a different value.", sc.getUUID());

Assert.assertEquals(message, team.get(Bo.TITLE), SdDataUtils.getMapValue(map, "responsibleTeam").get("title"));

Note: Our application has an asynchronous interface. The automation community writes a lot about the problems of waiting for elements to appear, but we simply ordered a counter of asynchronous requests from the programmers. It speeds up testing significantly.
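Waiting on such a counter could be sketched as follows. This is an illustration under assumptions, not our actual code: the counter is abstracted as an IntSupplier, and in a real test it would probably be read from the page, for example through Selenium's JavascriptExecutor, from whatever variable the programmers expose.

```java
import java.util.function.IntSupplier;

class AsyncRequestWaitSketch {

    /**
     * Polls the counter until it reports zero pending requests or the timeout expires.
     *
     * @return true if the application became idle, false on timeout or interruption
     */
    static boolean waitUntilIdle(IntSupplier pendingRequests, long timeoutMillis, long pollMillis) {
        long deadline = System.nanoTime() + timeoutMillis * 1_000_000L;
        while (true) {
            if (pendingRequests.getAsInt() == 0) {
                return true;
            }
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            try {
                Thread.sleep(pollMillis);
            } catch (InterruptedException e) {
                // Restore the interrupt flag and give up waiting.
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }
}
```

The test continues as soon as the counter drops to zero, instead of sleeping for a fixed time or polling for individual elements.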

The second and third phases can also run without a browser, as they do in our almost one hundred API tests.

Note: Someone is bound to ask: isn't too much attention paid to testing through the UI and against a deployed application?

My answer: what is special about our software is the high complexity not of individual functions, but of the business logic. Almost every test requires a database and a large context. In this situation, unit tests gain little in run time and lose significantly in how hard they are to write.

Circle two - the test environment.

Before each launch of the testing system:

- check the availability of the system and the ability to log into it

- check the global settings of the system and, if necessary, set the required values

Before each class with tests:

- restart the browser

- check system availability

After each test:

- delete all the objects it created. This is necessary to keep the system fast and to isolate the tests. Objects are queued as they are created and deleted in reverse order.
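The reverse-order cleanup can be sketched with a stack of deletion actions. The class and method names are hypothetical, and a deletion is modeled as a plain Runnable; in our case it would be a call to the system API.

```java
import java.util.ArrayDeque;
import java.util.Deque;

class CleanupStackSketch {
    private final Deque<Runnable> deletions = new ArrayDeque<>();

    /** Call right after an object has been created in the system under test. */
    void register(Runnable deletion) {
        deletions.push(deletion);
    }

    /** Call after each test: the newest objects are deleted first. */
    void cleanUp() {
        while (!deletions.isEmpty()) {
            Runnable deletion = deletions.pop();
            try {
                deletion.run();
            } catch (RuntimeException e) {
                // One failed deletion must not prevent the remaining ones.
                System.err.println("Cleanup step failed: " + e);
            }
        }
    }
}
```

Deleting in reverse order means that a dependent object (a catalog item) is removed before the object it depends on (the catalog).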

Circle three - the hierarchy of tests.

- One test, one action. Exceptions make up no more than 5%.

- Tests are grouped into classes of 3-10 tests; a class tests one business requirement.

- 5-15 classes are grouped into a package. A package tests an entity or an aspect of the application.

- There are 15 packages.

Note: The Javadoc is built with the command:

mvn -f sdng-inttest/pom.xml javadoc:test-javadoc -e

It helps manual testers and PMs find out which tests exist, without searching the git web interface or installing an IDE. The root looks like this:

Circle four - the design of the testing system.

The 60,000 lines of code of the testing system consist of:

- Test code

- Utility domain methods: DSL code (work with the system under test) and DAO (work with models)

- Utility methods not tied to the application under test - working with strings, files, JSON, etc.

- The core of the testing system: logs, screenshots, cleaning, interface to webdriver, exception mechanism.

Note: This is what our browser settings for tests look like; I hope someone finds them useful. Firefox profile:

    /**
     * Open the Firefox browser.
     * http://code.google.com/p/selenium/wiki/FirefoxDriver
     * @return an instance of FirefoxDriver, which implements the WebDriver interface.
     * @exception WebDriverException if the browser cannot be opened.
     */
    private WebDriver openFirefox()
    {
        FirefoxProfile firefoxProfile = new FirefoxProfile();
        // Memory for tabs
        firefoxProfile.setPreference("browser.sessionhistory.max_total_viewer", "1");
        // The value 3 is there for a reason: auto-refresh does not work otherwise
        firefoxProfile.setPreference("browser.sessionhistory.max_entries", 3);
        firefoxProfile.setPreference("browser.sessionhistory.max_total_viewers", 1);
        firefoxProfile.setPreference("browser.sessionstore.max_tabs_undo", 0);
        // Asynchronous requests to the server
        firefoxProfile.setPreference("network.http.pipelining", true);
        firefoxProfile.setPreference("network.http.pipelining.maxrequests", 8);
        // Rendering delay
        firefoxProfile.setPreference("nglayout.initialpaint.delay", "0");
        // Scanning of downloads by the built-in scanner
        firefoxProfile.setPreference("browser.download.manager.scanWhenDone", false);
        // Tab switching animation
        firefoxProfile.setPreference("browser.tabs.animate", false);
        // Search suggestions
        firefoxProfile.setPreference("browser.search.suggest.enabled", false);
        // GIF animation
        firefoxProfile.setPreference("image.animation_mode", "none");
        // Backup copies of tabs
        firefoxProfile.setPreference("browser.bookmarks.max_backups", 0);
        // Form autocompletion
        firefoxProfile.setPreference("browser.formfill.enable", false);
        // Disabled the disk cache and the in-memory cache
        //firefoxProfile.setPreference("browser.cache.memory.enable", false);
        firefoxProfile.setPreference("browser.cache.disk.enable", false);
        // Save files to tslogs without confirmation
        firefoxProfile.setPreference("browser.download.folderList", 2);
        firefoxProfile.setPreference("browser.download.manager.showWhenStarting", false);
        firefoxProfile.setPreference("browser.download.dir", new File("").getAbsolutePath());
        firefoxProfile.setPreference("browser.helperApps.neverAsk.saveToDisk", "application/xml,application/pdf,application/zip,text/plain,application/vnd.ms-excel");
        return new FirefoxDriver(firefoxProfile);
    }

We also have such a wonderful thing as unit tests for the core of the testing system.

Note: The testing system has been alive for a year and a half and has survived the move from svn to git and 300 commits, so its 100 unit tests are vital.

The two main use cases are launching from Jenkins and launching from Eclipse.

The algorithm for handling the launch configuration:

- Settings of the testing system passed from maven have the highest priority. We write them to the config file. If there is no config file, we create one.

- We take the remaining settings from the config file. Where the config has nothing, we fall back to the defaults.

- Thus, the developers of the application and of the tests set up their personal config files, and in CI we pass all the necessary parameters through maven.
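The priority order described above can be sketched with java.util.Properties. The class and method names are hypothetical, and reading and writing the config file is left out; the keys in the usage example are taken from my configuration file, the values are made up.

```java
import java.util.Properties;

class LaunchSettingsSketch {

    /**
     * Merges the three sources of settings, lowest priority first.
     *
     * @param defaults built-in default values
     * @param config   values from the personal config file (empty if there is no file)
     * @param passed   values passed from maven, for example -Dwebaddress=...
     * @return the effective settings, which would then be saved back to the config file
     */
    static Properties resolve(Properties defaults, Properties config, Properties passed) {
        Properties effective = new Properties();
        effective.putAll(defaults); // lowest priority
        effective.putAll(config);   // overrides the defaults
        effective.putAll(passed);   // highest priority
        return effective;
    }
}
```

With defaults {browsertype=firefox, needdelete=true}, a config containing webaddress and a maven parameter browsertype=chrome, the result keeps needdelete from the defaults, webaddress from the config and takes browsertype from maven.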

My configuration file:

allowscreenshot=true

needinit=true

superlogin=naumen

allowsaveconfig=true

clickertime=3600000

testerpassword=atpassword

testerlogin=atlogin

superpassword=ireplacedthetexthereafterall

needdelete=true

webaddress=http://localhost:9090/sd/

browsertype=firefox

Note: The testing system (TS) is quite grown-up: it has its own settings and its own user stories.

“As an autotester, I want to be able to run the testing application on a local computer and perform certain tests on a local test bench or on a client's one, in order to analyze the collected data or to develop new tests.”

“As a developer, I want the TS to check the project after each change, so that problems and errors are identified in time.”

And so on.

We balance the tests for parallel execution in a rather ugly way.

A launch profile in pom.xml:

    smoke-snap2-branch
    ...
    integration-tests all
    **/selenium/**/advlist/*Test.java
    **/selenium/**/bo/*Test.java
    **/selenium/**/advimport/*Test.java
    **/selenium/**/user/*Test.java
    **/selenium/**/rights/*Test.java

There are several such profiles; the packages are split among them by hand.

Circle five - source code management.

We use this git branching model as the most appropriate for our needs.

Note: The tests live in the same place as the application code. When a developer creates a branch, the test code is branched too, which keeps the tests and the application in sync. We did not arrive at this obvious decision right away.

Circle six - a single build in Jenkins CI.

A build begins with its description. I believe that rules should be kept as close as possible to the place where they apply. Useful links go there as well.

Look

Useful plugins for configuring builds:

- Clone Workspace, which avoids the desync that occurs when someone commits between the compilation of the code in the parent build and the test run in the child build.

- Xvfb, a virtual screen.

Running tests:

export DISPLAY='localhost:'$DISPLAY_NUM'.0';

mvn verify -P war-deploy,$TEST_PROFILE -Dext.prop.dir=$WORKSPACE/$PROP_DIR -Dwebaddress=http://localhost:$DEPLOY_PORT/sd -Dselenium.deploy.port=$DEPLOY_PORT -Dcargo.tomcat.ajp.port=$ARJ_PORT -Dcargo.rmi.port=$RMI_PORT

where the war-deploy profile brings up the application using maven-cargo-plugin.

Post-build actions:

- Archive artifacts, save fingerprints

- Jabber and email notification

- Blame Upstream Committers, which sends emails to the committers of the parent build.

Circle seven - the build hierarchy.

A group of builds that serves the programmers is called a branch.

The parent build compiles the project.

Second-level child builds: unit tests on postgresql, mssql and oracle; static code analysis (CPD, PMD, findbugs); the build of the war file for the UI tests (for one browser, to save time).

Third level: UI tests in three threads and migration tests.

Typical Third Branch

Note: The migration test build is a simple and effective test: we take a backup of an ancient database with data and bring it up on the latest war file. It makes sure that all data migrations run and that the application starts on a non-empty database.

Circle eight - the continuous integration system.

Our CI consists of four branches in which programmers can run tests by starting them manually and specifying their git branch, plus one main branch, triggered by a commit to develop, which differs from the others in that it has test builds for all supported DBMSs. Until just two weeks ago, each branch lived on a separate server (we chose EX4, since we do not need reliability but processor speed is critical; experiments showed that three Jenkins nodes per server is optimal). After reconfiguring the virtual screens, ports and DBMS settings, we now have a common pool of nodes.

The load graph looks like this:

The Jenkins Promoted Builds plugin lets you draw beautiful stars on builds whose child builds all passed without failed tests. It turned out to be more useful than three hundred tests through the interface or a thousand unit tests. Before it, some irresponsible PMs shipped releases despite failed tests; neither persuasion nor threats helped. But as soon as every build got its stars, the first signaling system kicked in, and now once every two weeks the leitmotif of the day is "we are waiting for two stars". This raised the requirements both for how fast programmers fix errors and for the quality of the testing system's code: random failures are now more expensive.

PMs meditate on this picture on release day.

A build with the Performance Plugin tracks the performance of typical operations and lets us quickly find the most serious performance-related errors.

The result looks something like this:

A build that automatically performs full load testing is in development, but that is a topic for a separate article.

Note: A few more useful things:

- Parameterized Trigger Plugin - if you have a complex build hierarchy.

- JobConfigHistory Plugin - works wonders with LDAP.

- Configuration Slicing Plugin - if you have more than 50 almost different builds.

- Rebuild Plugin is just a useful thing.

- The Continuous Integration Game plugin - my group always wins, since all we do is write tests.

Circle nine - people and processes.

The hardest part of automated testing is getting a good case. It takes up to half of the time. The main supplier is the manual testers: we devour their cases and ask for more, but they have plenty of other interesting work. So we also write tests for defects, and in general every closed task in JIRA passes under my gaze: can I write a test for it? We also have the Emma Plugin and three coverage builds: for unit tests, for UI tests and the total. Based on these reports, we write tests for the system API. For the rest of the functionality, however, the coverage report has no value: the specifics of our software are such that we need to focus on covering not the code, but the requirements with tests.

A simple coverage graph

The development of a feature in the context of automated testing looks like this:

Create a branch in git. Write the feature code. Run the tests in the branch. Fix the tests in the branch. Get two stars. Merge into develop.

The principle that slowed development down, but made the code more release-ready and saved my group a lot of time: the moral right to commit belongs to the programmer whose test build in a branch has received two stars.

If a programmer branched off from a commit in which all the tests passed, then the commit they bring back should have the same property.

At first, the principle was enforced by reverting commits and banning commits to develop.

Such a development process imposed a limit on the total time for passing all tests - 2 hours. We have no nightly tests and tests that run on the weekend, absolutely all tests pass in a maximum of 2 hours. Over the past six months, the automated testing group worked in approximately this mode:

Write tests up to 2 hours - buy servers and parallelize - write tests up to 2 hours - optimize test code - write tests up to 2 hours - change servers to faster ones - write tests ...

Afterword

What I have described is not my achievement alone. My share of the test code is one third of the lines; in CI, a quarter of the configs. Two dozen people worked on the testing system and continuous integration, from technical support and testers to the development manager and analysts.

I want to hear from you not only questions, but also wise advice, useful settings and cool ideas. Just remember about my role in the project - I can change anything in the code, much in CI, something in the processes. I can’t change people.

If there is someone who wants not only to give valuable guidance but, for example, to learn how to do all of this themselves, or to teach me how to do it properly, send me a private message: I'm looking for a colleague.