Recommendations for the backup and recovery policy after Doomsday

On this Doomsday, it seems appropriate to recall what a policy for backing up data and recovering it after failures and disasters should look like.

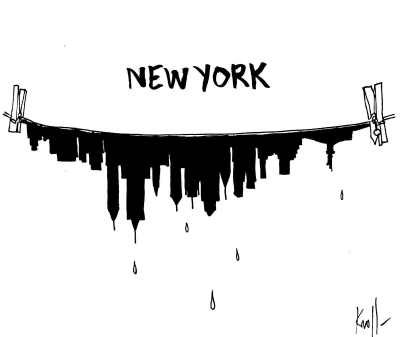

When major disasters such as Hurricane Sandy and the flooding in New York strike, companies remember their “insurance”. Was there a backup at all? Was it lost together with the original data? Can applications and data actually be restored from it? Did the backup process cover the entire production system or only part of it? And how long will the restore take?

The answers to these questions depend on how the company treated its “insurance” from the start: whether the data protection project was well thought out and adequately funded, whether the backup process was integrated into a comprehensive business continuity plan, or whether it was a fragmented, incomplete mosaic.

Some companies pay too little attention to modeling the threats to the data and applications in their infrastructure, do not test their backups, and do not check whether the system can be restored within the recovery time objective (RTO) defined in their SLA.

Small and medium-sized businesses (SMBs) often lack a sufficiently reliable plan for keeping their systems fault tolerant. Some find such a plan too complicated and costly. Others simply have “no time” to think about how reliably their data is stored. Still others believe that nothing bad can happen to their data.

And even when a company has a backup product installed, often no one tests the backups to see whether the system can actually be restored from them after a failure.

Today the market offers a large number of backup solutions with varying functionality and prices, and the challenge for SMB companies is to develop a rational data protection strategy and find a product that is simple, efficient, and reasonably priced. The backup repository also has to be stored somewhere, which adds the problem of choosing suitable storage hardware.

So what can you advise when designing a backup strategy?

Disk-to-disk backup, block-level change tracking

Disk-to-disk (D2D) backup is typically faster than disk-to-tape (D2T) backup, and restoring from disk is much faster than restoring from tape. Block-level backups are much faster than file-level backups because changes are tracked in much smaller chunks: if one block of a large file changes, only that block is copied rather than the whole file. As a result, the backup window shrinks. If a company must meet legal requirements for long-term retention, it is wise to combine the D2D and D2T approaches in a disk-to-disk-to-tape (D2D2T) configuration: keep backups on disk storage for, say, 30 days and move long-term copies to tape (the unit cost of tape storage is still unmatched, even though the unit cost of disk storage keeps falling). You can read more about the benefits of this approach in this post and this one.
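To make the block-level idea concrete, here is a minimal sketch, assuming a disk image held in memory and a hypothetical 4 KiB block size. It finds changed blocks by comparing per-block SHA-256 hashes of two versions of the image; production products usually obtain this information from the hypervisor's change tracking (for example, VMware Changed Block Tracking) instead of rehashing the whole disk.

```python
import hashlib

# Hypothetical block size for this sketch; real products choose their own.
BLOCK_SIZE = 4096


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Split data into fixed-size blocks and return the SHA-256 digest of each."""
    return [
        hashlib.sha256(data[i:i + block_size]).digest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return {block_index: block_bytes} for each block of `new` that differs from `old`.

    Only these blocks go into the incremental backup; unchanged blocks are
    already held by the previous restore point. (This sketch assumes the image
    does not shrink; a real product would also record the new size.)
    """
    old_hashes = block_hashes(old, block_size)
    delta = {}
    for index, digest in enumerate(block_hashes(new, block_size)):
        if index >= len(old_hashes) or old_hashes[index] != digest:
            start = index * block_size
            delta[index] = new[start:start + block_size]
    return delta


# Usage: a 1 MiB "disk image" in which a single byte changes.
old_image = bytes(1024 * 1024)
new_image = bytearray(old_image)
new_image[10_000] = 0xFF
delta = changed_blocks(old_image, bytes(new_image))
print(sorted(delta), sum(len(b) for b in delta.values()))  # [2] 4096
```

A file-level backup of the same change would copy the whole 1 MiB file; the block-level incremental copies only the one 4 KiB block that contains the modified byte.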

Deduplication of backups

Deduplication is the process of eliminating duplicate blocks from backup data. It is particularly effective in virtualized environments, because many virtual machines are created from the same template and contain the same set of software. Read more about backup deduplication here.
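The effect of deduplication on VMs built from a common template can be sketched with a toy repository, assuming fixed-size blocks identified by their SHA-256 digest. `DedupStore` and its methods are hypothetical names for illustration, not any product's API; real deduplicating repositories also deal with variable-size chunking, compression, and reference counting.

```python
import hashlib

BLOCK_SIZE = 4096  # hypothetical fixed block size for this sketch


class DedupStore:
    """Toy deduplicating backup repository: each unique block is stored once."""

    def __init__(self) -> None:
        self.blocks: dict[str, bytes] = {}        # SHA-256 digest -> block contents
        self.backups: dict[str, list[str]] = {}   # backup name -> ordered list of digests

    def add_backup(self, name: str, data: bytes) -> None:
        recipe = []
        for i in range(0, len(data), BLOCK_SIZE):
            block = data[i:i + BLOCK_SIZE]
            digest = hashlib.sha256(block).hexdigest()
            self.blocks.setdefault(digest, block)  # keep the block only if it is new
            recipe.append(digest)
        self.backups[name] = recipe

    def restore(self, name: str) -> bytes:
        # Reassemble the backup from its ordered list of block digests.
        return b"".join(self.blocks[d] for d in self.backups[name])

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self.blocks.values())


# Usage: two VMs cloned from the same 32 KiB "template", each with 4 KiB of unique data.
template = b"".join(hashlib.sha256(str(i).encode()).digest() * 128 for i in range(8))
vm1 = template + b"A" * BLOCK_SIZE
vm2 = template + b"B" * BLOCK_SIZE

store = DedupStore()
store.add_backup("vm1", vm1)
store.add_backup("vm2", vm2)
print(len(vm1) + len(vm2), store.stored_bytes())  # 73728 40960
```

The template blocks are stored only once, so the repository holds 40 KiB instead of 72 KiB; with dozens of VMs from one template the savings grow accordingly.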

If you use virtualization, use specialized backup products

There are “old” backup products designed for backing up physical machines. They install agent programs inside the machines and copy data from the inside, usually at the file level of the guest operating system. There are also “new” products built specifically for virtualization platforms that take advantage of the technical capabilities of virtual environments. They do not require agents, place less load on the virtualization host, and work much faster thanks to technologies such as the vStorage API. Read more about the benefits of specialized backup products here.

Replication, or scheduled backups at short intervals

How long can new or modified data on a production system go without a backup copy? This period is called the recovery point objective (RPO). Modern backup products have made good progress in minimizing RPO, bringing it down to as little as 15 minutes. Replication generally lets you get close to continuous data protection (near-CDP).
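Whether a schedule really meets its RPO can be checked from the restore-point timestamps. The sketch below assumes that the worst-case data loss equals the largest gap between consecutive restore points (including the gap from the latest one to the present); the schedule and the failed 10:00 run are hypothetical.

```python
from datetime import datetime, timedelta


def worst_case_data_loss(restore_points: list[datetime], now: datetime) -> timedelta:
    """Longest interval during which new data had no backup copy.

    A failure just before the next restore point loses everything written since
    the previous one, so the exposure is the largest gap between consecutive
    restore points, including the gap from the latest point to `now`.
    """
    if not restore_points:
        raise ValueError("no restore points: all data is unprotected")
    points = sorted(restore_points) + [now]
    return max(later - earlier for earlier, later in zip(points, points[1:]))


def meets_rpo(restore_points: list[datetime], now: datetime, rpo: timedelta) -> bool:
    return worst_case_data_loss(restore_points, now) <= rpo


# Usage: a hypothetical 15-minute schedule in which the 10:00 run failed.
start = datetime(2012, 12, 21, 9, 0)
points = [start + timedelta(minutes=15 * i) for i in range(9) if i != 4]
now = datetime(2012, 12, 21, 11, 5)
print(worst_case_data_loss(points, now))              # 0:30:00
print(meets_rpo(points, now, timedelta(minutes=15)))  # False
```

A single failed job doubles the real exposure, which is why the nominal backup interval alone does not guarantee the RPO.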

Backup on the principle of "copy everything except ..."

A strategy of copying only selected user data (“copy nothing except explicitly included objects”) can leave important user data without a backup. For example, a user may ask for his working directory to be included in the backup plan and later create other working directories outside that directory's subtree. The same thing can happen when a user installs a new program that saves work files to a directory not configured for backup, or when a new version of a program moves its working files to a location that, again, is not backed up. Copying everything except explicitly excluded objects avoids these gaps.
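An exclusion-based selection rule can be sketched in a few lines, assuming a hypothetical exclusion list of shell-style patterns matched against "/"-separated paths. Anything not explicitly excluded is selected, so new directories are covered without changing the backup plan.

```python
import fnmatch
import os
import tempfile

# Hypothetical exclusion list: everything NOT matching these patterns is backed up.
EXCLUDE_PATTERNS = ("*.tmp", "*/Cache/*")


def select_files(root: str, exclude: tuple[str, ...] = EXCLUDE_PATTERNS) -> list[str]:
    """Return every file under `root` (as "/"-separated relative paths)
    except those matching an exclusion pattern."""
    selected = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            rel = os.path.relpath(os.path.join(dirpath, name), root).replace(os.sep, "/")
            if not any(fnmatch.fnmatchcase("/" + rel, pattern) for pattern in exclude):
                selected.append(rel)
    return sorted(selected)


# Usage: a new project folder and a new application's data folder are picked up
# automatically, without anyone adding them to the backup plan.
with tempfile.TemporaryDirectory() as root:
    for rel in ["Documents/report.docx", "Projects/new/plan.txt",
                "AppData/NewApp/work.db", "Cache/blob.bin", "Documents/draft.tmp"]:
        path = os.path.join(root, *rel.split("/"))
        os.makedirs(os.path.dirname(path), exist_ok=True)
        open(path, "w").close()
    result = select_files(root)
print(result)
# ['AppData/NewApp/work.db', 'Documents/report.docx', 'Projects/new/plan.txt']
```

With an inclusion list containing only `Documents`, the files under `Projects/new` and `AppData/NewApp` would have been silently skipped.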

Fast data recovery

Failures are caused not only by disasters or by power and hardware outages. Up to a third of incidents are human errors (for example, an accidentally deleted file). For this reason, you often need to restore not an entire server or network segment, but a single file or application object. Use backup products that support granular recovery: this significantly reduces recovery time and simplifies the whole process for the administrator. More information about granular recovery can be found here.
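Granular recovery means pulling one object out of a backup instead of restoring everything. As a minimal sketch, assuming the backup is a plain tar archive (real products use their own formats and indexes to jump straight to the object), Python's standard `tarfile` module can return a single member without extracting the rest; the archive layout and the `_add_member` helper below are hypothetical.

```python
import io
import os
import tarfile
import tempfile


def restore_single_file(archive_path: str, member_name: str) -> bytes:
    """Read one file out of a backup archive without extracting the others."""
    with tarfile.open(archive_path, "r:*") as archive:
        member = archive.getmember(member_name)   # raises KeyError if absent
        extracted = archive.extractfile(member)   # None for directories, links, etc.
        if extracted is None:
            raise ValueError(f"{member_name} is not a regular file")
        return extracted.read()


def _add_member(archive: tarfile.TarFile, name: str, data: bytes) -> None:
    # Hypothetical helper that builds a test archive from in-memory data.
    info = tarfile.TarInfo(name)
    info.size = len(data)
    archive.addfile(info, io.BytesIO(data))


# Usage: recover one accidentally deleted spreadsheet from a file-server backup.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "fileserver-backup.tar.gz")
    with tarfile.open(path, "w:gz") as archive:
        _add_member(archive, "shares/finance/budget.xlsx", b"budget v3")
        _add_member(archive, "shares/hr/policies.pdf", b"policies")
    recovered = restore_single_file(path, "shares/finance/budget.xlsx")
print(recovered)  # b'budget v3'
```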

Off-site backup storage

It is reasonable to store backup copies separately from the original data, and as far away from it as possible, because the event that caused the failure could destroy both the original data and its copies. The two most common ways to make an additional copy of a disk-to-disk backup are:

- storing additional copies on tape (which can be taken to another office or to a bank safe-deposit box); this scheme is called D2D2T and is currently the most common,

- duplicating copies to the cloud, for example, to Amazon.
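The D2D2T option above can be expressed as a simple tiering rule. This sketch assumes the "(say) 30 days on disk" figure mentioned earlier and uses a hypothetical `RestorePoint` record; it only relabels restore points, whereas a real job would first write each point to tape and then free the disk space.

```python
from dataclasses import dataclass
from datetime import date, timedelta

DISK_RETENTION = timedelta(days=30)  # assumed: keep restore points on disk for 30 days


@dataclass
class RestorePoint:
    name: str
    created: date
    tier: str = "disk"  # "disk" (fast restores) or "tape" (long-term, off-site)


def apply_d2d2t_policy(points: list[RestorePoint], today: date) -> list[RestorePoint]:
    """Move restore points older than DISK_RETENTION from the disk tier to tape.

    A real D2D2T job would copy the point to tape (which can then be taken
    off-site) and only afterwards free the disk space; here we only change
    the tier label.
    """
    for point in points:
        if point.tier == "disk" and today - point.created > DISK_RETENTION:
            point.tier = "tape"
    return points


# Usage: hypothetical restore points of various ages.
today = date(2012, 12, 21)
points = [RestorePoint(f"job-{age}d", today - timedelta(days=age)) for age in (0, 10, 29, 30, 31, 60)]
apply_d2d2t_policy(points, today)
print([(p.name, p.tier) for p in points if p.tier == "tape"])
# [('job-31d', 'tape'), ('job-60d', 'tape')]
```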

Virtualization of critical applications (or their backup cluster nodes)

Here I would just like to note that virtualization in itself makes recovery from failures easier, because it decouples virtual machines from specific hardware. A virtual machine can run on a virtualization host with any hardware, not necessarily the same as the hardware it ran on before the failure. Many virtualization platforms can also move a virtual machine to another host in the event of a failure, and offer failover and clustering functionality.

Backup Testing

Test data recovery regularly, rather than only checking backup integrity. That is, verify that the restored systems actually work and contain the correct data, instead of just checking the checksums of the data blocks in the backup files. When the production network runs in a virtual environment, backup products built specifically for virtualization can make this verification automated and transparent for the administrator, because they can create virtual sandbox test labs isolated from the production network. You can see more about this in this post. You can read about SureBackup technology here.
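The difference between an integrity check and a recovery test can be illustrated on a small scale. This sketch assumes the backed-up application is a SQLite database (a stand-in chosen because `sqlite3` ships with Python; it is not how SureBackup works). It restores the backup into an isolated scratch directory, asks SQLite to check its own structure, and runs a probe query that must return real data.

```python
import os
import shutil
import sqlite3
import tempfile


def verify_restored_database(backup_file: str, probe_sql: str, expected_min_rows: int) -> bool:
    """Restore a SQLite backup into an isolated scratch directory and test it."""
    with tempfile.TemporaryDirectory() as sandbox:  # isolated "test lab"
        restored = shutil.copy(backup_file, os.path.join(sandbox, "restored.db"))
        conn = sqlite3.connect(restored)
        try:
            status = conn.execute("PRAGMA integrity_check").fetchone()[0]
            rows = conn.execute(probe_sql).fetchone()[0]
        except sqlite3.DatabaseError:
            return False  # the restored file cannot even be opened as a database
        finally:
            conn.close()
    return status == "ok" and rows >= expected_min_rows


# Usage: a hypothetical CRM database backup, plus a corrupted backup for contrast.
with tempfile.TemporaryDirectory() as tmp:
    backup = os.path.join(tmp, "crm-backup.db")
    conn = sqlite3.connect(backup)
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers (name) VALUES (?)", [("Alice",), ("Bob",)])
    conn.commit()
    conn.close()
    ok = verify_restored_database(backup, "SELECT COUNT(*) FROM customers", 1)

    corrupt = os.path.join(tmp, "corrupt.db")
    with open(corrupt, "wb") as f:
        f.write(b"not a database" * 100)
    broken = verify_restored_database(corrupt, "SELECT COUNT(*) FROM customers", 1)
print(ok, broken)  # True False
```

A checksum stored alongside the corrupted file would still match if the file had been corrupt when it was written; only opening and querying the restored copy reveals the problem.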

Information security of backups

The source data is usually protected by access rights. Once this data is copied into backups, you must ensure that only authorized users can access the copies as well. There are several options: for example, you can encrypt backups, make them inaccessible to unauthorized network users, or even block access to them from the network segment where ordinary users work (administrators can connect from a different segment).
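One of these options, making backup files inaccessible to other users, can be sketched at the file-system level. The example assumes a POSIX system (on Windows, `os.chmod` only toggles the read-only flag and access must be controlled with ACLs) and a hypothetical file name. It does not cover encryption, which should rely on a vetted cryptographic library rather than hand-written code.

```python
import os
import stat
import tempfile


def write_backup_restricted(path: str, data: bytes) -> None:
    """Write a backup file that only its owner can read or write (mode 0o600)."""
    # O_BINARY exists only on Windows; it prevents newline translation there.
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | getattr(os, "O_BINARY", 0)
    # Request owner-only permissions at creation time, so there is no window
    # in which other users could open the file.
    fd = os.open(path, flags, 0o600)
    with os.fdopen(fd, "wb") as f:
        f.write(data)
    # The mode passed to os.open applies only when the file is newly created;
    # enforce it explicitly in case a more permissive file already existed.
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "payroll.bak")  # hypothetical backup file name
    write_backup_restricted(target, b"...backup bytes...")
    mode = stat.S_IMODE(os.stat(target).st_mode)
print(oct(mode))  # 0o600 on Linux and macOS
```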

This post explains why, in a virtualized environment, backing up at the host level reduces information security risks.

***

Additional Resources for Virtualization Backup Strategies

- Backup Academy site (English)

- Expert article on backing up networks with hundreds of virtual machines

- Article "Backup and Restore for Microsoft Hyper-V"

- An article about backing up virtual machines on networks with NAS (English)