Amazon SQS vs RabbitMQ

Introduction

Any progress and optimization is welcomed by anyone. Today I would like to talk about a wonderful thing that makes life much easier - queues. Implementation of best practices in this matter not only improves application performance, but also successfully prepares your application for the architecture in the style of Cloud Computing. Moreover, not using ready-made solutions from cloud providers is simply stupid.

In this article, we will look at Amazon Web Services in terms of designing the architecture of medium and large web applications.

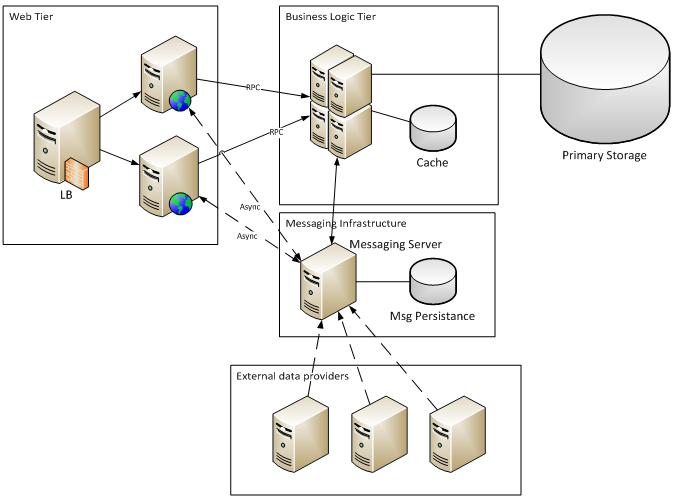

Consider the scheme of such an application:

Examples of such an organization can be various kinds of aggregators: news, exchange rates, stock quotes, etc.

External data providers generate a stream of messages that, after processing, are stored in the database.

Users through web-tier make a selection of information from the database according to certain criteria (filters, grouping, sorting), and then optional processing of samples (various statistical functions).

Amazon tries to identify the most common application components, then automates and provides the service component. Now there are more than two dozen such services and a full list can be found on the AWS website: http://aws.amazon.com/products/ . There was already an article on the hub describing a number of popular services: Popular about Amazon Web Services . This is attractive primarily because there is no need for self-installation and configuration, as well as higher reliability and piecework payment

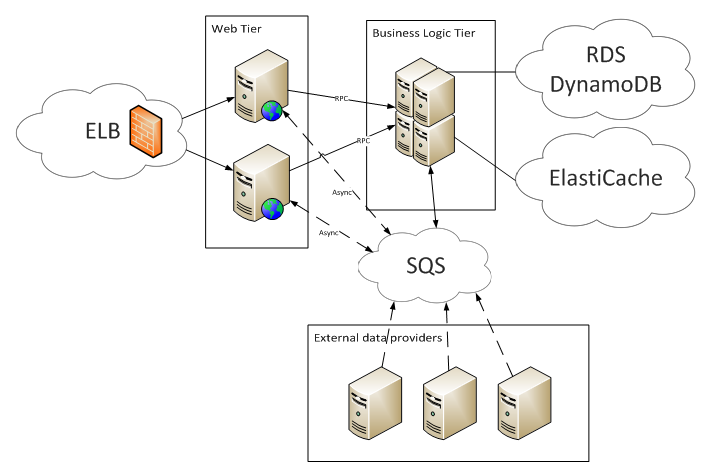

And if you use AWS, then the project scheme will look like this:

Undoubtedly, this approach is in demand and it has its own market. But often questions arise about the financial component:

- How much can you save using AWS?

- Is it possible to independently implement a service with the same properties, but for less money?

- Where is the line that separates AWS from its counterpart?

Next we will try to answer these questions.

1. Overview of analogues

For comparison, we will consider the following components:

- Message-oriented middleware - RabbitMQ

- An analogue of the above service from AWS, which is called SQS

SQS service is paid based on the number of API requests + traffic.

We will consider each service in more detail.

1.1. SQS

Amazon SQS is a service that allows you to create and work with message queues. The standard cycle for working with the finished SQS queue is as follows:

- To send a message to the queue, the producer must know its URL. Then, using the SendMessage command, adds the message.

- Consumer receives the message using the ReceiveMessag command.

- As soon as a message is received, it will be blocked for re-receipt for a while.

- After successfully processing the message, Consumer uses the DeleteMessage command to remove the message from the queue. If an error occurred during processing or the DeleteMessage command was not called, then after a timeout the message will be returned back to the queue.

Thus, on average, 3 API calls are required to send and process one message.

Using SQS, you pay for the number of API calls + traffic between regions. The cost of 10k calls is $ 0.01, i.e. for an average of 10k messages (x3 API calls) you pay $ 0.03. You can look at rates in other regions here .

There are a large number of options for organizing a message sending service:

- Rabbitmq

- Activemq

- Zmq

- Openmq

- ejabbered (xmpp)

Each option has its pros and cons. We will choose RabbitMQ as one of the most popular implementations of the AMQP protocol.

1.2. Rabbitmq

1.2.1. Deployment Scheme

A server with RabbitMQ installed and default settings produces very good performance. But this version of deployment does not suit us, because in the event of a fall of this node, we can immediately get a number of problems:

- Loss of important data in messages;

- "Accumulation" of information on the Producer, which can lead to an overload of Consumers after the restoration of the queue;

- Stopping the entire application while a problem is being resolved.

In testing, we will use 2 nodes in active-active mode with replication of queues between nodes. For RabbitMQ, this is called mirrored queues.

For each such queue, a master and a set of slaves are defined where a copy of the queue is stored. In the event of a master node crash, one of the slaves is selected by the master.

To create such a queue, the “x-ha-policy” parameter is set during declaration, which indicates where copies of the queue should be stored. Possible 2 parameter values

- all: copies of the queue will be stored on all nodes of the cluster. When adding a new node to the cluster, a copy will be created on it;

- nodes: copies will be created only on the nodes specified by the "x-ha-policy-params" parameter.

You can read more about mirrored queues here: http://www.rabbitmq.com/ha.html .

1.2.2. Performance Measurement Technique

Earlier, we examined how the test environment will be organized. Now let's look at what and how we will measure.

For all measurements, m1.small instances (AWS) were used.

We will carry out a number of measurements:

The speed of sending messages to a certain value, then the speed of receiving - thereby we will check the degradation of performance with increasing queue.

1. The speed of sending messages to a certain value, then the speed of receiving - thereby we will check the degradation of performance with increasing queue.

2. Simultaneous sending and receiving messages from one queue.

3. Simultaneous sending and receiving messages from different queues.

4. Asymmetric load on the queue:

- a. Queue up to 10 times more threads than receive;

- b. 10 times more streams are received from the queue than they are sent.

- a. 16 bytes

- b. 1 kilobyte;

- c. 64 kilobytes (max for SQS).

All tests except the first will be carried out in 3 stages:

- Warming up 2 seconds;

- Test run 15 seconds;

- Queue cleaning.

Message Acknowledgment

This property is used to confirm the delivery and processing of a message. There are two modes of operation:

- Auto acknowledge - the message is considered to be delivered successfully immediately after it is sent to the recipient; in this mode, to get one message, just one call to the server is enough.

- Manual acknowledge - the message is considered to be delivered successfully after the recipient calls the appropriate command. This mode allows you to ensure guaranteed processing of the message if you confirm delivery only after processing. In this mode, two calls to the server are required.

In the test, the second mode is selected, because it corresponds to the work of SQS, where message processing is done by two commands: ReceiveMessage and DeleteMessage.

Batch processing

In order not to waste time on each message to establish a connection, authorization and other things, RabbitMQ and SQS allow you to process messages in batches. This is available for both sending and receiving messages. Because batch processing is disabled by default in both RabbitMQ and SQS, we also will not use it for comparison.

1.2.3. Test results

Load-Unload Test

Summary Results:

| Load unload test | msg / s | Request Time | ||||

| avg ms | min, ms | max ms | 90%, ms | |||

| SQS | Consume | 198 | 25 | 17 | 721 | 28 |

| Producer | 317 | 16 | 10 | 769 | 20 | |

| Rabbitmq | Consume | 1293 | 3 | 0 | 3716 | 3 |

| Producer | 1875 | 2 | 0 | 14785 | 0 | |

The table shows that SQS works much more stable than RabbitMQ, in which failures can occur when sending a message for 15 seconds! Unfortunately, we could not immediately find the reason for this behavior, and in the test we try to adhere to the standard settings. At the same time, the average speed of RabbitMQ is about 6 times higher than that of SQS, and the query execution time is several times lower.

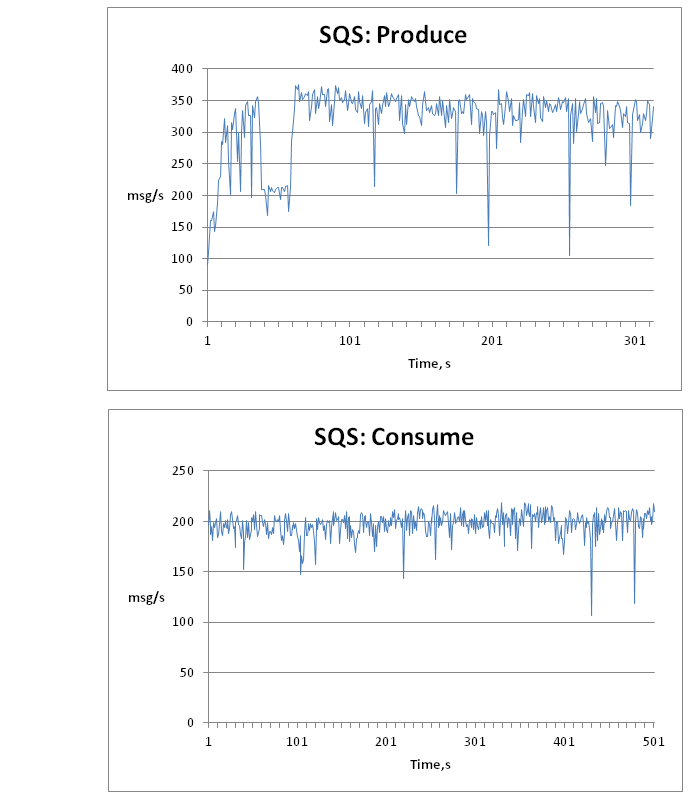

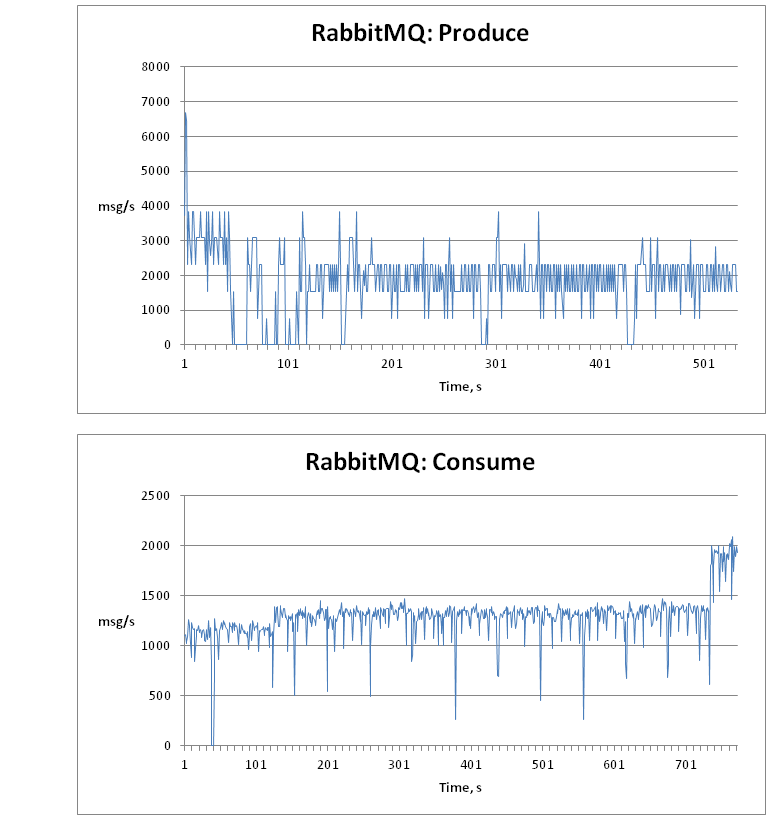

The following are graphs with the distribution of average speed versus time.

In general, no drop in performance with an increase in the number of messages in the queue has been noticed, which means you can not be afraid that when the receiving nodes fall, the queue will become a bottleneck.

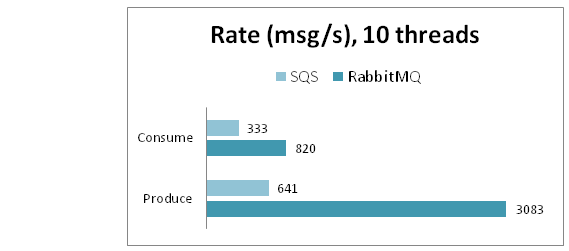

Parallel

No less interesting is the test of the dependence of the speed of work on the number of simultaneously working threads. The results of the SQS test can be easily predicted: since the work is done over the HTTP protocol and most of the time it takes to establish a connection, then, presumably, the results should grow with the number of threads, as the following table illustrates well:

| SQS msg / s | Threads | |||

| 1 | 5 | 10 | 40 | |

| Producer | 65 | 324 | 641 | 969 |

| Consume | 33 | 186 | 333 | 435 |

It can also be seen that for 1, 5, and 10 flows, the dependence is linear, but with an increase of up to 40 flows, the average speed increases by 50% for sending and 30% for receiving, but the average request time significantly increases: 43ms and 98ms, respectively.

For RabbitMQ, speed saturation is much faster, already with 5 threads the maximum is reached:

| RabbitMQ Threads | Threads | ||||

| 1 | 5 | 10 | 40 | ||

| Producer | speed, msg / s | 3086 | 3157 | 3083 | 3200 |

| latency, ms | 0 | 1 | 3 | eleven | |

| Consume | speed, msg / s | 272 | 811 | 820 | 798 |

| latency, ms | 3 | 6 | 12 | 51 | |

During testing, a feature was revealed: if 1 thread for sending and 1 thread for receiving work at the same time, then the speed of receiving messages drops to almost 0, while the sending thread shows the maximum performance. The problem is solved if the context is forced to switch after each iteration of the test, while the sending throughput decreases, but the upper limit of the query execution time is significantly reduced. From local tests with 1 thread (send / read): 11000/25 vs 5000/1000.

Additionally, we conducted a test for RabbitMQ with several queues for 5 threads:

| Rabbitmq | Queues | |

| 1 | 5 | |

| Producer | 3157 | 3489 |

| Consume | 811 | 880 |

It can be seen that the speed for several queues is slightly higher. The summary results for 10 streams are presented in the following diagram:

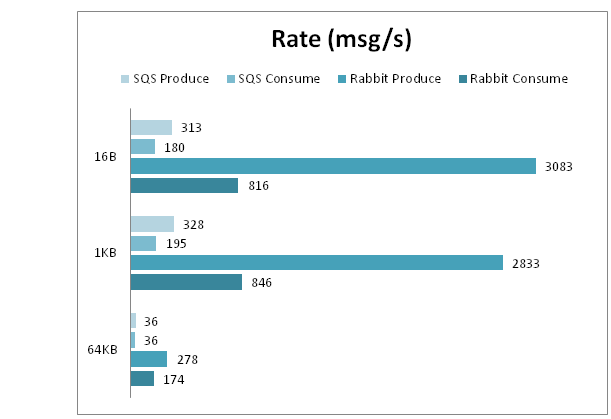

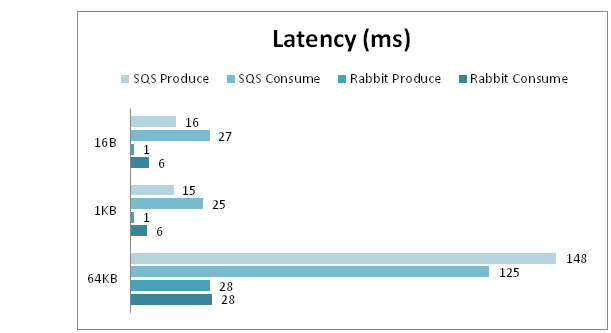

Size

In this test, we consider the dependence of speed on the size of the transmitted data.

Both RabbitMQ and SQS showed the expected deterioration in the speed of sending and receiving with increasing message size. In addition, the queue in RabbitMQ with the size of the message more often "freezes" and does not respond to requests. This indirectly confirms the conjecture that this is related to working with a hard disk.

Comparative speed results:

Comparative query time results:

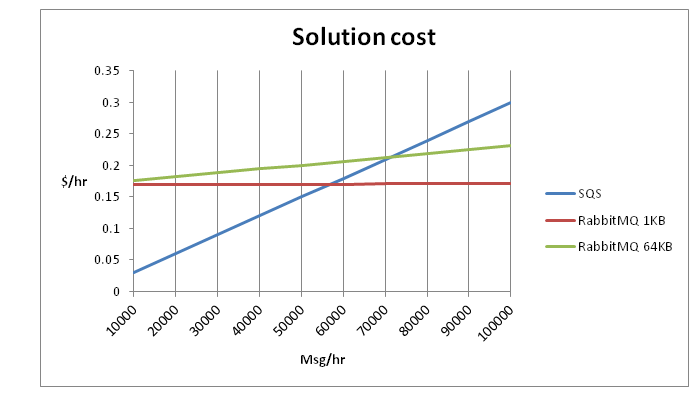

2. Calculation of cost and recommendations

From the estimated cost of $ 0.08 for one small instance in the European region, we get a price of $ 0.16 for RabbitMQ in a two-node configuration + traffic cost. In SQS, the cost of sending and receiving 10,000 messages is $ 0.03. We get the following dependency:

60 thousand messages per hour is about 17 messages per second, which is much less than the speed that SQS and RabbitMQ can provide.

Thus, if your application requires an average speed of less than 17 messages per second, then SQS is preferable. If the application needs are getting higher, then it is worth considering migration paths to dedicated messaging servers.

It is important to understand that these recommendations are valid only for medium speeds, and calculations should be carried out for the entire period of the load fluctuation cycle, but if your application requires a speed much higher than SQS allows at the peak, then this is also an occasion to think about changing the provider.

Another reason to use RabbitMQ may be the requirement for latency of the request, which is an order of magnitude lower than that of SQS.

2.1. Is it possible to reduce the cost of RabbitMQ solutions?

There are two ways to reduce the cost:

- Do not use a cluster.

- Use micro instance.

In the first case, the HA of the cluster is lost in the event of a fall of the node or the entire active zone, but this is not scary if the entire application is hosted in only one zone.

In the second case, micro instance can be cut down resources, if for some time the utilization of resources is close to 100%. This can affect the operation of the queue when persistence queues are used.

3. Conclusion

Thus, we see that there is simply no definite answer to the question “What solution should I use?” It all depends on many factors: the size of your wallet, the number of messages per second and the time it took to send these messages. Nevertheless, based on the metrics in this material, you can calculate the behavior for a particular case.

Thanks!

The article was written and adapted based on the research of Maxim Bruner ( minim ), for the EPAM Cloud Computing Competency Center