Functional programming in the shell, using xargs as an example

- Tutorial

Abstract: a story about how to process lists in the shell quickly and elegantly, a short manual on xargs, and a lot of rambling about the philosophy of programming (or of administration).

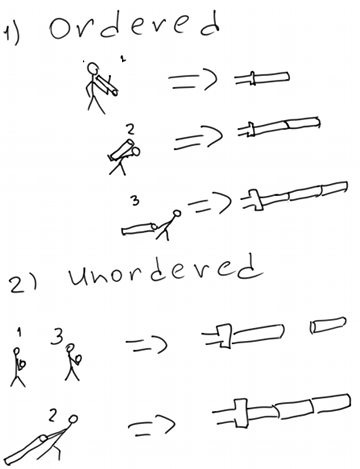

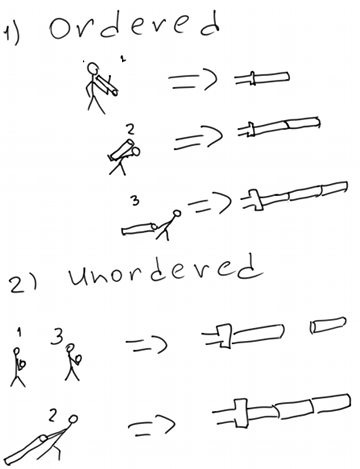

A bit of SEO optimization: currying, lambda functions, function composition, map, list filtering, working with sets in the shell.

Example

System administrators often need to take the output of one program and apply another program to each element of that output. Or more than one program. As a fun (and useless) example, take the following: calculate the total size of all executable files currently running on the system, together with all the dynamic libraries they use.

This is not a real task but a case study. While solving it (the solution will be a one-liner), I will talk about a very unusual and powerful system administration tool: linear functional programming. It is linear because a chain of pipes "|" is linear programming, and xargs lets you turn a complex program with nested loops into a one-line functional form. The purpose of the article is not to show how to find the size of libraries, nor to retell the options of xargs, but to convey the spirit of the solution and the philosophy behind it.

A lyrical digression

There are several programming styles. One of them looks like this: for each element of the list, run a loop in which, for each element of the list, if it is not an empty string, take the file name, and if the file size is not zero, add it to the counter. Ah yes, the counter must first be set to zero.

Another looks like this:

Apply to the list a function that, for each element, adds the size of the file with that name to the sum, provided the element is a non-empty string and the file size is not zero.

Even in words, the second option is shorter.

Given the expressive means of programming languages, the resulting constructs are compact and free of the usual problems of loops: infinite loops, incorrect loop exit, and so on.

In the context of our problem, this means specifying a function to be applied to each element of the list. That function can itself be a list handler with its own processing function.
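To make the contrast concrete, here is a minimal sketch of both styles for the task just described: summing the sizes of the non-empty files named in a list. The names `sum_loop` and `sum_pipe` are invented for this illustration, both read file names from stdin, and the pipeline variant assumes names without spaces or quotes, because plain xargs splits its input on whitespace.

```shell
# Style one: an explicit loop with a counter that must be zeroed first.
sum_loop() {
    total=0
    while IFS= read -r name; do
        if [ -n "$name" ]; then
            size=$(( $(wc -c < "$name") ))
            if [ "$size" -ne 0 ]; then
                total=$((total + size))
            fi
        fi
    done
    echo "$total"
}

# Style two: filter out empty lines, map wc over the list, fold with awk.
# Files of size zero add nothing to the sum, so they need no special case.
sum_pipe() {
    grep -v '^$' | xargs -n 1 wc -c | awk '{sum += $1} END {print sum + 0}'
}
```

Both functions give the same answer for the same list; the second one simply has no counter, no loop variable and no exit condition to get wrong.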

Data for the solution

To solve the problem, we need a list of running processes. This is easier than it sounds: all processes live in /proc/[0-9]+. Next, we need the paths to the binaries. This is also simple: for every process except kernel threads, /proc/PID/exe points to the path of the executable. Next task: we need the list of libraries for a file. This is done by the ldd command, which takes a file path and prints (in a tricky format) the list of libraries. The rest is a matter of technique and of walking symlinks: we need to follow the library symlinks to the very end and then calculate the size of each file.

Thus, a high-level description of the task looks like this: take lists of executable files and libraries, and for each of them find out the size.

Note that the list of executable files will be used twice: once on its own, and once to get the list of libraries of each file.
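The raw data is easy to look at for a single process before building anything bigger. A minimal sketch, assuming Linux with /proc mounted; the helper name `exe_of` is made up for this example.

```shell
# exe_of PID: print the path of the binary behind a process.
# Prints nothing for kernel threads and for processes we are not
# allowed to inspect: readlink fails there and its error is discarded.
exe_of() {
    readlink "/proc/$1/exe" 2>/dev/null
}

# Usage (the output depends on the machine):
#   exe_of $$              path of the current shell
#   ldd "$(exe_of $$)"     its dynamic libraries, in ldd's own format
```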

Imperative solution

(exaggerated, with details omitted)

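    # Print the binary behind every entry under /proc (no PID filtering).
    get_exe_list(){
        for a in `ls -d /proc/*`;
        do
            readlink -f $a/exe;
        done
    }

    # Read file names from stdin, print the libraries each one uses.
    get_lib(){
        for a in `cat`;
        do
            ldd $a
        done | awk '{print $3}'
    }

    # Sum the sizes of the files given as arguments.
    calc_size(){
        sum=0
        for a in "$@"; do
            sum=$(( sum + `du -b $a | awk '{print $1}'` ))
        done
        echo $sum
    }

    exe_list=`get_exe_list | sort -u`
    lib_list=`for a in $exe_list; do echo $a | get_lib; done | sort -u`
    size=$(( `calc_size $exe_list` + `calc_size $lib_list` ))
    echo $size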

Disgusting, right? And this, note, is without a regexp for proper PID filtering (we have no need to try reading the non-existent /proc/mdstat/exe) and without handling the numerous error cases.

Lists

The task is clear. Since our input data are homogeneous (files), we can simply represent them as a list and process them all the same way. I will write the not-yet-written sections of code in capitals.

Part One: Double List Processing

We cheat a little and use stderr to duplicate the list.

    (EXE_LIST | tee /dev/stderr | LIB_LIST) 2>&1 | CALC

What does this code do? The as yet unwritten EXE_LIST generates the list of executables in the system. tee takes this list and writes it both to stderr (stream number 2) and to stdout (stream number 1). Stream 1 is passed to LIB_LIST. Then we merge the stderr of all three commands (grouped by the parentheses) into stdout, so that both copies form a single list, and pass it to CALC.
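The trick can be tried on toy data first. In this sketch the stage names `toy_list` and `toy_derived` are invented stand-ins: the "list" is two lowercase words, the "derived list" is their uppercase form, and the last stage only sorts what it receives so that the result is stable.

```shell
# Toy stand-ins for EXE_LIST and LIB_LIST.
toy_list()    { printf '%s\n' alpha beta; }
toy_derived() { tr 'a-z' 'A-Z'; }

# tee sends one copy of the list to stderr and one to toy_derived;
# 2>&1 then merges both streams into the pipe that feeds sort.
duplicated() {
    (toy_list | tee /dev/stderr | toy_derived) 2>&1 | LC_ALL=C sort
}

duplicated
# ALPHA
# BETA
# alpha
# beta
```

The original words and the derived ones arrive at the last stage as one list, which is exactly what CALC needs.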

Now we need to write EXE_LIST.

Part Two: List Filtering

(Note: to see all processes in the system, you need to be root.)

We will take a slightly unusual route and use find instead of ls in a loop. In principle ls would also work, but handling symlinks would be more trouble.

So:

    find /proc/ -maxdepth 2 -name "exe" -ls

We need -maxdepth to ignore threads. The output is similar to that of ls, and we need to filter it.

So, we improve EXE_LIST:

    find /proc/ -name "exe" -ls 2>/dev/null | awk '{print $13}'

Note: we are going to use stderr to carry data, so we do not want it flooded with error messages about all kinds of kernel threads.
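Why field 13? A small sketch on a made-up line in the shape that `find -ls` prints for a symlink shows it; the sample line and the name `link_target` are invented for the illustration.

```shell
# Fields: inode, blocks, mode, links, owner, group, size, month, day,
# time, name, "->", target. The link target is therefore field 13.
sample='  12345      0 lrwxrwxrwx   1 root     root            0 Jan  1 12:00 /proc/1/exe -> /sbin/init'

link_target() {
    awk '{print $13}'
}

printf '%s\n' "$sample" | link_target    # prints /sbin/init
```

A line with fewer than 13 fields produces an empty line here. That is harmless further down the pipe, because xargs ignores blank input lines.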

Part Three: LIB_LIST

It is simple: for each file passed in, we run ldd and then tidy up the output.

    xargs -n 32 -P 4 ldd 2>/dev/null | grep "/" | awk '{print $3}'

What is interesting here? We limit ldd to at most 32 files per invocation and run 4 ldd queues in parallel (yes, this is our homegrown Hadoop). The -P option runs ldd in parallel, which gives some speed-up on multi-core machines with good disks (in this case it is just showing off, but if we were running something slower than ldd, parallelism could be a lifesaver...).
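Both halves of this stage can be tried without touching real processes: what the filter keeps from ldd output, and how -n and -P group the arguments. The ldd sample below is invented (real names, paths and addresses differ from system to system), and the function names are hypothetical.

```shell
# Invented sample in the shape of ldd output for one binary.
sample_ldd() {
    printf '\tlinux-vdso.so.1 (0x00007ffc1a5f2000)\n'
    printf '\tlibc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f1c2a000000)\n'
    printf '\t/lib64/ld-linux-x86-64.so.2 (0x00007f1c2a400000)\n'
}

# The filter from the text: keep lines with a slash, print field 3.
comb() {
    grep / | awk '{print $3}'
}

# -n 2: at most two arguments per invocation of echo.
batches() {
    printf '%s\n' 1 2 3 4 5 | xargs -n 2 echo
}

# -P 4: up to four invocations run at once, so the order of the
# output lines is no longer guaranteed; sort restores it here.
parallel_batches() {
    printf '%s\n' 1 2 3 4 5 | xargs -n 2 -P 4 echo | sort
}

sample_ldd | comb    # the libc path, then one empty line
batches              # three lines: "1 2", "3 4", "5"
```

In the sample, the loader line carries its path in field 1, so the filter turns it into an empty line. Since xargs skips blank input lines, in this sketch the loader is simply not counted.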

Part Four: CALC

Files come in; what must come out is a single figure, the total size of all the files. We will determine the size with... but wait. Who says symlinks point to files? Of course, symlinks point to symlinks, which point to symlinks or files. And those symlinks... well, you get the idea.

So we add readlink. And it, the pest, wants one argument at a time. But it has the -f option, which saves us a lot of effort: it prints the final file name regardless of whether the argument is a symlink or a plain file.

    | xargs -n 1 -P 16 readlink -f | sort -u
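A small sketch of what -f buys us, on a chain of symlinks built in a scratch directory. All file names here are invented for the example.

```shell
# Build the chain link2 -> link1 -> file.
scratch=$(mktemp -d)
printf 'data' > "$scratch/file"
ln -s "$scratch/file" "$scratch/link1"
ln -s "$scratch/link1" "$scratch/link2"

# Plain readlink peels off one level only; -f follows the chain to
# the end and also accepts a regular file, printing its canonical path.
readlink "$scratch/link2"       # path of link1
readlink -f "$scratch/link2"    # path of file
readlink -f "$scratch/file"     # path of file again

# Remove the scratch directory when done: rm -rf "$scratch"
```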

...and now we determine the size using du. Note that we could simply use the -c option here, which adds up the numbers and prints a total, but for teaching purposes we are not looking for easy ways. So, no cheating.

    | xargs -n 32 -P 4 du -b | awk '{sum += $1} END {printf "%i\n", sum}'
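The awk part is the fold of this pipeline: it walks the list once and carries an accumulator. A minimal sketch on invented data in the shape of `du -b` output (size, tab, path); the names `sample_du` and `total` are hypothetical.

```shell
# Invented sample: three files with known sizes.
sample_du() {
    printf '1024\t/bin/one\n'
    printf '2048\t/lib/two\n'
    printf '512\t/lib/three\n'
}

# Accumulate field 1, print the total once the input ends.
total() {
    awk '{sum += $1} END {printf "%i\n", sum}'
}

sample_du | total    # prints 3584
```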

Why do we need sort -u? Because there will be many repetitions. We need to select the unique values from the list, that is, turn the list into a set. This is done the naive way: we sort the list and ask sort to drop the repeated lines as it goes.
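On a toy list the list-to-set step looks like this. The wrapper name `as_set` is invented, and the C locale is forced only to make the order of the output predictable.

```shell
# Turn a list (one item per line) into a set: sort, drop duplicates.
as_set() {
    LC_ALL=C sort -u
}

printf '%s\n' /lib/libc.so.6 /bin/sh /lib/libc.so.6 /bin/sh /lib/libm.so.6 | as_set
# /bin/sh
# /lib/libc.so.6
# /lib/libm.so.6
```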

One-liner, terrifying

Putting it all together:

    (find /proc/ -name exe -ls 2>/dev/null | awk '{print $13}' | tee /dev/stderr | xargs -n 32 -P 4 ldd 2>/dev/null | grep / | awk '{print $3}') 2>&1 | sort -u | xargs -n 1 -P 16 readlink -f | xargs -n 32 -P 4 du -b | awk '{sum += $1} END {print sum}'

(I did not wrap this horror in a raw tag, so that the line wraps automatically and does not tear up your RSS reader feeds. Love me.)

Of course, THIS is no better than what we had at the beginning. Fear and horror, in a word. Although if you are a level 98 guru grinding toward level 99, such one-liners may well be your usual working style...

However, back to storming level 10.

A decent form

We want to cut it up into readable, debuggable pieces of code. Sane. Commented.

So, back to the initial form: (EXE_LIST | tee /dev/stderr | LIB_LIST) 2>&1 | CALC

Readable? I suppose so.

It remains to work out how to write EXE_LIST properly.

Option one: using functions:

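    # List the binaries of all running processes.
    EXE_LIST() {
        find /proc/ -name "exe" -ls 2>/dev/null | awk '{print $13}'
    }

    # Read binaries from stdin, print the libraries they are linked against.
    LIB_LIST() {
        xargs -n 32 -P 4 ldd 2>/dev/null | grep / | awk '{print $3}'
    }

    # Read file names from stdin, print their total size in bytes.
    CALC() {
        sort -u | xargs -n 1 -P 16 readlink -f | xargs -n 32 -P 4 du -b | awk '{sum+=$1} END {print sum}'
    }

    (EXE_LIST | tee /dev/stderr | LIB_LIST) 2>&1 | CALC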

And with a touch of functional elegance:

    EXE_LIST() ( find /proc/ -name "exe" -ls 2>/dev/null | awk '{print $13}' )
    LIB_LIST() ( xargs -n 32 -P 4 ldd 2>/dev/null | grep / | awk '{print $3}' )
    CALC() ( sort -u | xargs -n 1 -P 16 readlink -f | xargs -n 32 -P 4 du -b | awk '{sum+=$1} END {print sum}' )

    (EXE_LIST | tee /dev/stderr | LIB_LIST) 2>&1 | CALC
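Once the stages are functions, each one can be debugged on its own. A minimal sketch for CALC, fed with a hand-made list instead of real processes. The scratch files and their names are invented for this check, and GNU du is assumed for the -b option.

```shell
# CALC as defined in the text.
CALC() ( sort -u | xargs -n 1 -P 16 readlink -f | xargs -n 32 -P 4 du -b | awk '{sum+=$1} END {print sum}' )

# Three files of known size (3, 5 and 7 bytes) and one symlink.
check_dir=$(mktemp -d)
printf 'abc'     > "$check_dir/three"
printf 'hello'   > "$check_dir/five"
printf 'seven!!' > "$check_dir/seven"
ln -s "$check_dir/seven" "$check_dir/alias"

# "five" is listed twice and "seven" is reached only through the
# symlink, so the expected total is 3 + 5 + 7 = 15.
printf '%s\n' "$check_dir/three" "$check_dir/five" "$check_dir/alias" "$check_dir/five" | CALC

# Remove the scratch directory when done: rm -rf "$check_dir"
```

The same approach works for the other stages: feed LIB_LIST a single known binary, or pipe EXE_LIST into head, and look at what comes out before wiring everything together.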

Of course, functional programming purists will say that there is no type inference, no type checking, no lazy evaluation, and that calling this list processing "functional style" is madness and pornography.

However, this is code, it works, and it is easy to write. It does not have to be production code, but it can easily be a tool that turns three hours of monotonous work into three minutes of interesting programming. Such are the specifics of a system administrator's job.

The main thing I wanted to show: a functional approach to processing sequences in the shell gives more readable and less bulky code than direct iteration. And it runs faster, by the way (thanks to xargs parallelism).

Scalability

A further development of this approach is the GNU parallel utility, which lets you run code on multiple servers in parallel.