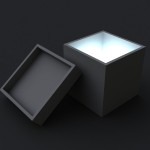

Black Box vs White Box in System Administration

I would like to draw the attention of fellow system administrators, and of those who hire them, to two diametrically opposite approaches to system administration. It seems to me that understanding the difference between them can significantly reduce frustration on both sides.

This is nothing new, but in the almost 15 years I have been involved in this field I have so often witnessed problems, misunderstandings and even conflicts caused by failing, or refusing, to understand the difference between these two approaches that the topic seems worth raising once again. If you are a system administrator who feels out of place at work, or a manager hiring a system administrator, this article may be just for you.

I will deliberately exaggerate a little to make the difference easier to see.

Black Box Administration

By black box administration (the analogy is a black, opaque, tightly closed box with buttons and light bulbs) I mean the situation where there is a certain system, there are instructions for operating it, a set of tricks, and questions and answers on Google. But there is no information about how the system is built: we do not know (or do not want to know) what is inside it, how it works, or which parts interact with which and how. Nor does it matter: as long as the system is operated under normal conditions, we simply know how to use it and what to expect. The set of commands and actions that lead to the results we need is described in advance, and it does not matter how the system achieves them; we just “place an order” and get the result. Figuratively speaking, it is described in advance (or found by trial and error, that is, by experience) which button must be pressed to light up the desired combination of bulbs.

Accordingly, administration in this case comes down, first, to maintaining the standard operating conditions under which the system behaves as agreed, and second, to “pressing the right buttons” at the request of customers or users. This is done strictly according to established knowledge of which button lights which bulb. Variations are not just unwelcome but contraindicated, because a different way of doing things can produce unexpected side effects and take the system out of normal mode into a state that the documentation does not describe, and then nobody knows how to correct the situation.

If something goes wrong and the system stops working as it should, the first step is to look for what is wrong with the operating conditions: what differs from when it worked, how to put it back, how to “turn off all the lights” and return the system to its initial state. If that does not help, we google “secret button combinations” from experts and try them all, in descending order of similarity to the situation at hand, until the coveted light comes on or the system returns to a state we know. If that does not help either, it is a dead end: roll back to backups, contact support (if the system has any), or replace (reinstall) the system.

It is worth noting that for a number of systems this approach is the only possible one. For example, when the internals of the system are a trade secret of its manufacturer and the possibilities for studying them are severely limited by contractual obligations and internal company rules. Or in the case of huge systems with complex internal relationships, whose components are supported by different departments that have no access to information, and no right to do anything, outside their area of responsibility. In addition, there are many cases where this approach is simply more appropriate. For example, Windows is often easier to reinstall than to figure out what broke in its bowels.

White Box Administration

Accordingly, a white box is one where the box is transparent. We have the opportunity to see (and also understand) how the system works. In this situation the instructions are secondary: they help us understand how the system is supposed to be used and how it is arranged, but they do not limit us. We understand how the system works and, as a result, how it will behave under different conditions, including those not described in the documentation.

After some time spent studying how the system is built, we understand why the buttons have to be pressed in this particular sequence and why the system must be operated under these particular conditions. The same action can be performed in different ways as long as it leads to the desired result, because we can predict possible side effects in advance and therefore choose the most effective way for the task at hand. If something goes wrong, we can see what exactly broke and how, which gear jammed. We can deliberately return the system to its original state, or change the one and only factor that prevents the system from working normally. In other words, we proceed from the internal state and needs of the system rather than from the available documentation and experience.

In this situation the options for solving problems multiply, the “dead end” is reached much later and far less often, and the system can be operated more fully, flexibly and efficiently. But this approach requires mastering, digesting and keeping in your head an order of magnitude more information, which takes much longer and is much harder.

There are also cases where this approach is the only one available. For example, when the system is developed in-house and is constantly being developed, changed and extended. As a result, nobody knows what to expect from it, and documentation is often missing. In this case situations will regularly arise that simply cannot be resolved within a reasonable time without understanding how the system works and knowing how to “dig into its gears”.

The essence of the problem

From my personal experience, most system administrators feel more comfortable (and are more effective) within one of these two approaches, and correspondingly ill at ease when they have to work within the other approach most of the time.

Let us consider both options using a real-life example (slightly simplified, so as not to waste time on inconsequential but time-consuming details).

A certain site runs on two 8-core machines with 8 GB of memory: Apache2 + PHP + MySQL + memcache. At peak hours the system periodically began to slow down terribly, and the site itself responded with delays of 10-30 seconds or did not respond at all.

To begin with, the problem was considered according to the Black Box approach.

On both servers, the top command showed almost no free memory, a load average of around 20, swap in active use, and the system permanently stuck in iowait. Restarting Apache returned everything to normal. So an hourly Apache restart was added to cron, and the problem was forgotten for another six months...

What exactly happened and why remained unknown. The actual problem was “the site is slow and does not open”; that problem is solved, and the site no longer slows down. Diagnostics took 3 minutes and the solution another 5. That is, the problem was fixed in less than 10 minutes while knowing almost nothing about why it appeared. There is no certainty that this will help for long or that it is a general solution, but (!) it took 10 minutes, and in fact the site then worked without problems for almost another six months.

Six months later the problem began to reappear despite the hourly Apache restart. The restart interval was shortened, and complaints began to come in that connections to the site were sometimes simply dropped and pages arrived only partially loaded. That is, the solution itself began to create new problems.

Next, the same system was examined in more detail, as a white box.

I will omit the details of the process, in which the system was studied almost under a microscope, and go straight to the conclusions. It turned out that:

- Different requests to the server take up very different amounts of memory: a small number of requests eat up to 200 megabytes, but the bulk consume no more than 5-10. PHP frees the memory, but Apache does not release it within a single child process; it keeps it so that it is already there if needed again. As a result, sooner or later at least one heavy request passes through every child, after which Apache “saves” far more memory for the future than most subsequent requests will need.

- The number of Apache “children” is quite large, 250, which, as they gradually “fatten” up to 200 MB each, slowly but inevitably leads to memory consumption far greater than what the system has. The system starts to swap and everything slows down: requests are processed more slowly but keep arriving at the same rate, so more requests are in flight at once, so all 250 Apache children are busy and have a queue of requests, and together they actively “get fat” and swap.

- In addition, this growing snowball was accelerated somewhat by a number of long-polling requests constantly hanging in the background. They kept additional Apache children busy, which prevented surplus Apache processes from being terminated for “too many unused children”.
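
The arithmetic behind the first two findings can be checked with a rough estimate. The sketch below uses only the figures quoted above and assumes, for illustration, that the 250 children and the 8 GB apply to a single machine and that a fattened child holds the full 200 MB; memory shared between children is ignored.

```python
# Rough worst-case estimate; all figures come from the text above.
# Assumptions (not stated by the author): the numbers apply per machine,
# and memory shared between Apache children is ignored.
RAM_MB = 8 * 1024        # 8 GB of RAM
CHILDREN = 250           # configured Apache children
LIGHT_MB = 10            # upper end of a typical request
HEAVY_MB = 200           # a child after serving one heavy request


def pool_memory_mb(children: int, per_child_mb: int) -> int:
    """Memory held by a pool where every child has grown to per_child_mb."""
    return children * per_child_mb


lean_pool = pool_memory_mb(CHILDREN, LIGHT_MB)   # no child has seen a heavy request
fat_pool = pool_memory_mb(CHILDREN, HEAVY_MB)    # every child has seen one
fat_children_that_fit = RAM_MB // HEAVY_MB       # fattened children that fit in RAM

print(f"lean pool: {lean_pool} MB, fat pool: {fat_pool} MB, RAM: {RAM_MB} MB")
print(f"fattened children that fit in RAM: {fat_children_that_fit}")
```

Under these assumptions a fully fattened pool would want about 50 GB against 8 GB of RAM, and roughly 40 fattened children are already enough to fill memory, which is consistent with the swapping seen in top.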

The solution was as follows (taking into account the specifics of the project, which is not described here):

- nginx was put in front; it does not get fat from the number of requests or from the queue.

- nginx forwarded heavy requests, matched by URL, to a separate Apache instance with mod_fpm, which eliminated the “stuck” memory problem at the root and allowed a maximum of 25 parallel processes and only 5 spare processes (max spare childs).

- “Light” requests went to the regular Apache, which stopped “getting fat”; just in case, a limit of 1000 requests per child was still set so that, should anything happen, memory would be released periodically.

- Long polling was moved, with help from the programmers, to a small node.js server. Once per second, for each client, it first checks memcache for a “fresh data” flag and skips the round if nothing new has appeared; otherwise it proxies a request to Apache. These requests are very lightweight: for Apache they are no longer long-polling and occur only when there really is new data. They fly by in microseconds, are barely noticeable, and hardly occupy any Apache children.

- In addition, thanks to pinba, the scripts themselves were also slightly corrected; some of them then began to consume less and run faster.
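
The split between heavy and light requests can be sketched as a small routing function. This is only an illustration in Python, not the actual nginx configuration; the URL prefixes and the names `HEAVY_PREFIXES`, `BACKENDS` and `choose_backend` are hypothetical, while the limits are the ones quoted in the list above.

```python
# Illustrative sketch of the routing decision nginx made by URL match.
# The prefixes below are hypothetical; the article does not list the real ones.
HEAVY_PREFIXES = ("/report/", "/export/")

# Limits taken from the text: the heavy pool is small and capped,
# the light pool recycles each child after 1000 requests.
BACKENDS = {
    "heavy": {"max_processes": 25, "max_spare": 5},
    "light": {"max_requests_per_child": 1000},
}


def choose_backend(path: str) -> str:
    """Return the name of the backend pool that should serve this URL path."""
    if path.startswith(HEAVY_PREFIXES):  # str.startswith accepts a tuple
        return "heavy"
    return "light"


for path in ("/report/2020", "/index.php", "/export/all.csv"):
    pool = choose_backend(path)
    print(path, "->", pool, BACKENDS[pool])
```

The point of the design is that heavy requests can only fatten a pool of at most 25 processes, so their memory cost stays bounded no matter how many light requests arrive.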

As a result, at rush hour no more than 25-30 light Apache processes work simultaneously, plus 5-10 heavier separate PHP processes via mod_fpm; node.js is barely active, occupying no more than 2-5 Apache processes at a time with its micro requests. If a queue does form, nginx holds it easily, without strain, and consumes almost no CPU, because it does nothing but proxy and, thanks to its architecture, has absolutely no difficulty maintaining several hundred simultaneous sessions. In addition, nginx buffers Apache’s responses and hands them to the client at the client’s own pace, which lets Apache finish with a request faster.
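
The long-polling replacement mentioned above can be sketched as follows. The original proxy was written for node.js; this Python version only illustrates the logic. A plain dict stands in for memcache, and the key format, the function names and the choice to clear the flag when it is read are all assumptions.

```python
# Sketch of the "check the flag first" polling gate.
# Assumptions: a dict stands in for memcache, the key format "fresh:<client>"
# is hypothetical, and the flag is cleared as soon as it is read.
def poll_once(client_id, cache, fetch):
    """One once-per-second round for a client: call the backend only if flagged."""
    flag_key = f"fresh:{client_id}"
    if not cache.pop(flag_key, False):
        return None               # nothing new: skip the round, backend untouched
    return fetch(client_id)       # fresh data: one short request to the backend


backend_calls = []


def fake_apache(client_id):
    """Stand-in for the proxied Apache request; records that it was called."""
    backend_calls.append(client_id)
    return f"data for {client_id}"


cache = {}
results = []
for tick in range(10):            # ten one-second rounds for one client
    if tick == 4:
        cache["fresh:alice"] = True   # something new appears before round 4
    results.append(poll_once("alice", cache, fake_apache))

print(f"rounds: {len(results)}, backend calls: {len(backend_calls)}")
```

In this simulation ten polling rounds produce a single backend request, which is the effect described: Apache is touched only when there is something new to deliver.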

As a result, the load average on the servers at “rush hour” hovers around 0.2-0.5. Memory consumption is about 2-3 GB for all processes; the rest of the memory is cache. Swap is not used. The response time no longer changes at rush hour and is roughly the same as in quiet times (when there are only 2-3 clients on the site).
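
The reported memory figure can be sanity-checked with some rough arithmetic. The sketch assumes that light processes stay at about 10 MB and heavy ones reach the full 200 MB; both values come from the findings above, but applying them this way is an estimate, not a measurement.

```python
# Rough memory budget after the change, using process counts from the text.
# Assumption: light processes stay near 10 MB, heavy ones reach 200 MB.
RAM_MB = 8 * 1024
LIGHT_MB, HEAVY_MB = 10, 200


def budget_mb(light_procs: int, heavy_procs: int) -> int:
    """Estimated memory held by the light and heavy process pools together."""
    return light_procs * LIGHT_MB + heavy_procs * HEAVY_MB


typical = budget_mb(light_procs=30, heavy_procs=10)     # reported rush-hour counts
worst_case = budget_mb(light_procs=30, heavy_procs=25)  # heavy pool at its cap

print(f"typical: {typical} MB, worst case: {worst_case} MB, RAM: {RAM_MB} MB")
```

The typical estimate of about 2.3 GB is in line with the 2-3 GB reported, and even with the heavy pool at its cap of 25 processes the estimate stays below 8 GB.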

The number of clients the site can serve without load problems has increased roughly tenfold (beyond that, difficulties with the database begin).

That is, the problem is solved again, but this time with a huge margin and with a clear understanding of what to expect and how long it will keep working without problems. Everything is justified, thought through and balanced. Time to solution: 2 weeks...

Summary

At the risk of earning the title of “Captain Obvious”, I turn to the consequences of this “division”:

- Before choosing a system administrator, it is worth considering what type of system your company needs serviced. A black-box guru faced with complex, home-grown, constantly changing systems is unlikely to be as useful as a white-box guru, and is unlikely to enjoy the work. A white-box guru faced with stable, well-functioning systems that nobody wants “opened up” will not find a place at all and will most likely work only nominally, spending all his free time on personal projects and experiments. Or he will constantly try to “redo everything here properly, not the way it is now”.

- A system administrator should “know himself”: understand which approach is closer to his heart and choose a job accordingly.

- A black-box guru solves problems very quickly and is just as quick to take over new, well-documented, widely used systems. The results are stable and predictable. He prefers to solve problems in a uniform and predictable way (often a huge plus, especially when working in a team), though not always optimally.

- A white-box guru spends considerable time studying the system but then produces much more effective solutions. He can solve more complex problems, including those where the black-box guru has hit a “dead end”, but neither as quickly nor in such quantity. At the same time, he is practically useless for quickly “putting out fires”, because instead of simply restarting Apache he will start investigating what is happening “in hot pursuit”, studying the system while its unhealthy state is still “visible”.

- A large company cannot do without a team that has administrators of both types: while some quickly “put out the fire”, that is, get the system working at least somehow, others calmly get to the roots of the problems and make sure they never happen again. The latter should not be forced to do what the former do well, and vice versa; nothing good will come of it.

- The most valuable, but also the rarest, staff are those who can work successfully within both approaches, although even they prefer (are more comfortable with) one of them.

When choosing an employee or a job, remember these few points and you may save time, money and nerves. That is probably all on this topic. Thanks to those who read it. :)